Stefaan Verhulst

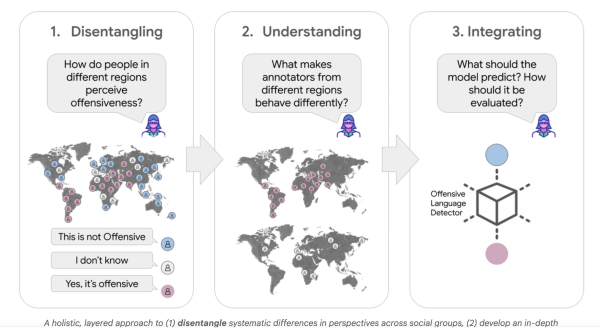

Paper by Aida Davani and Vinodkumar Prabhakaran: “Modern artificial intelligence (AI) systems rely on input from people. Human feedback helps train models to perform useful tasks, guides them toward safe and responsible behavior, and is used to assess their performance. While hailing the recent AI advancements, we should also ask: which humans are we actually talking about? For AI to be most beneficial, it should reflect and respect the diverse tapestry of values, beliefs, and perspectives present in the pluralistic world in which we live, not just a single “average” or majority viewpoint. Diversity in perspectives is especially relevant when AI systems perform subjective tasks, such as deciding whether a response will be perceived as helpful, offensive, or unsafe. For instance, what one value system deems as offensive may be perfectly acceptable within another set of values.

Since divergence in perspectives often aligns with socio-cultural and demographic lines, preferentially capturing certain groups’ perspectives over others in data may result in disparities in how well AI systems serve different social groups. For instance, we previously demonstrated that simply taking a majority vote from human annotations may obfuscate valid divergence in perspectives across social groups, inadvertently marginalizing minority perspectives, and consequently performing less reliably for groups marginalized in the data. How AI systems should deal with such diversity in perspectives depends on the context in which they are used. However, current models lack a systematic way to recognize and handle such contexts.

With this in mind, here we describe our ongoing efforts in pursuit of capturing diverse perspectives and building AI for the pluralistic society in which we live… (More)”.
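The majority-vote point in the excerpt above can be illustrated with a minimal sketch. The group names, labels, and counts below are invented for illustration and are not taken from the paper; the sketch only shows how a single aggregated label can hide a split that follows group lines.

```python
from collections import Counter, defaultdict

# Hypothetical annotations for ONE item: (annotator_group, label).
# The groups and counts are made up for illustration only.
annotations = [
    ("group_a", "not_offensive"),
    ("group_a", "not_offensive"),
    ("group_a", "not_offensive"),
    ("group_a", "not_offensive"),
    ("group_b", "offensive"),
    ("group_b", "offensive"),
]


def majority_label(labels):
    """Return the most frequent label in a list of labels."""
    return Counter(labels).most_common(1)[0][0]


def per_group_majority(pairs):
    """Return the majority label within each annotator group."""
    by_group = defaultdict(list)
    for group, label in pairs:
        by_group[group].append(label)
    return {group: majority_label(labels) for group, labels in by_group.items()}


# Aggregating over everyone keeps only the larger group's view.
overall = majority_label([label for _, label in annotations])

# Aggregating within groups keeps the disagreement visible.
by_group = per_group_majority(annotations)

print(overall)
print(by_group)
```

Here the overall vote matches the larger group's label, while the per-group view shows that the smaller group unanimously disagreed, which is the kind of divergence a plain majority vote discards.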

Article by Shayne Longpre: “We often take the internet for granted. It’s an ocean of information at our fingertips—and it simply works. But this system relies on swarms of “crawlers”—bots that roam the web, visit millions of websites every day, and report what they see. This is how Google powers its search engines, how Amazon sets competitive prices, and how Kayak aggregates travel listings. Beyond the world of commerce, crawlers are essential for monitoring web security, enabling accessibility tools, and preserving historical archives. Academics, journalists, and civil societies also rely on them to conduct crucial investigative research.

Crawlers are endemic. Now representing half of all internet traffic, they will soon outpace human traffic. This unseen subway of the web ferries information from site to site, day and night. And as of late, they serve one more purpose: Companies such as OpenAI use web-crawled data to train their artificial intelligence systems, like ChatGPT.

Understandably, websites are now fighting back for fear that this invasive species—AI crawlers—will help displace them. But there’s a problem: This pushback is also threatening the transparency and open borders of the web, that allow non-AI applications to flourish. Unless we are thoughtful about how we fix this, the web will increasingly be fortified with logins, paywalls, and access tolls that inhibit not just AI but the biodiversity of real users and useful crawlers…(More)”.
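The excerpt does not say how websites push back. One widely used convention is a `robots.txt` file that tells crawlers which paths they may fetch. The sketch below assumes that mechanism and uses Python's standard `urllib.robotparser`; the crawler names `ExampleAIBot` and `ExampleArchiveBot` and the `example.org` URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one (made-up) AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
# parse() accepts the file's lines directly, so no network request is needed.
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.org/article"

# The named AI crawler is told to stay out of the whole site.
ai_allowed = parser.can_fetch("ExampleAIBot", url)

# Any other crawler falls through to the wildcard rule.
archive_allowed = parser.can_fetch("ExampleArchiveBot", url)

print(ai_allowed, archive_allowed)
```

A rule like this is targeted and voluntary: it relies on crawlers choosing to honor it. The logins, paywalls, and access tolls the article warns about are blunter, because they also shut out the useful crawlers and ordinary readers.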

Report by the Scottish Government: “This research builds on that framework and seeks to explore how Scottish Government might better understand the impact of public participation on policy decision-making. As detailed above, there is a plethora of potential, and anticipated, benefits which may arise from increased citizen participation in policy decision-making, as well as lots of participatory activity already taking place across the organisation. Now is an opportune time to consider impact, to support the design and delivery of participatory engagements that are impactful and that are more likely to realise the benefits of public participation. Through a review of academic and grey literature along with stakeholder engagement, this study aims to answer the following questions:

1. How is impact conceptualised in literature related to public participation, and what are some practice examples?

2. How is impact conceptualised by stakeholders and what do they perceive as the current blockers, challenges or facilitators in a Scottish Government setting?

3. What evaluation tools or frameworks are used to evaluate the impact of public participation processes, and which ones might be applicable/usable in a Scottish Government setting?…(More)”.

Article by Sara Herschander: “How do we know you won’t pull an OpenAI?”

It’s the question Stella Biderman has gotten used to answering when she seeks funding from major foundations for EleutherAI, her two-year-old nonprofit A.I. lab that has developed open-source artificial intelligence models.

The irony isn’t lost on her. Not long ago, she declined a deal dangled by one of Silicon Valley’s most prominent venture capitalists who, with the snap of his fingers, promised to raise $100 million for the fledgling nonprofit lab — over 30 times EleutherAI’s current annual budget — if only the lab’s leaders would agree to drop its 501(c)(3) status.

In today’s A.I. gold rush, where tech giants spend billions on increasingly powerful models and top researchers command seven-figure salaries, to be a nonprofit A.I. lab is to be caught in a Catch-22: defend your mission to increasingly wary philanthropic funders or give in to temptation and become a for-profit company.

Philanthropy once played an outsize role in building major A.I. research centers and nurturing influential theorists — by donating hundreds of millions of dollars, largely to university labs — yet today those dollars are dwarfed by the billions flowing from corporations and venture capitalists. For tech nonprofits and their philanthropic backers, this has meant embracing a new role: pioneering the research and safeguards the corporate world won’t touch.

“If making a lot of money was my goal, that would be easy,” said Biderman, whose employees have seen their pay packages triple or quadruple after being poached by companies like OpenAI, Anthropic, and Google.

But EleutherAI doesn’t want to join the race to build ever-larger models. Instead, backed by grants from Open Philanthropy, Omidyar Network, and A.I. companies Hugging Face and StabilityAI, the group has carved out a different niche: researching how A.I. systems make decisions, maintaining widely used training datasets, and shaping global policy around A.I. safety and transparency…(More)”.

Paper by Jenny Lewis: “Around the world in recent times, numerous policy design labs have been established, related to a rising focus on the need for public sector innovation. These labs are a response to the challenging nature of many societal problems and often have a purpose of navigating uncertainty. They do this by “labbing” ill-structured problems through moving them into an experimental environment, outside of traditional government structures, and using a design-for-policy approach. Labs can, therefore, be considered as a particular type of procedural policy tool, used in attempts to change how policy is formulated and implemented to address uncertainty. This paper considers the role of policy design labs in learning and explores the broader governance context they are embedded within. It examines whether labs have the capacity to innovate and also retain and circulate learning to other policy actors. It argues that labs have considerable potential to change the spaces of policymaking at the micro level and innovate, but for learning to be kept rather than lost, innovation needs to be institutionalized in governing structures at higher levels…(More)”.

OECD Report: “Cross-border data flows are the lifeblood of today’s social and economic interactions, but they also raise a range of new challenges, including for privacy and data protection, national security, cybersecurity, digital protectionism and regulatory reach. This has led to a surge in regulation conditioning (or prohibiting) its flow or mandating that data be stored or processed domestically (data localisation). However, the economic implications of these measures are not well understood. This report provides estimates on what is at stake, highlighting that full fragmentation could reduce global GDP by 4.5%. It also underscores the benefits associated with open regimes with safeguards which could see global GDP increase by 1.7%. In a world where digital fragmentation is growing, global discussions on these issues can help harness the benefits of an open and safeguarded internet…(More)”.

Report by Datasphere Initiative: “The Sandboxes for AI report explores the role of regulatory sandboxes in the development and governance of artificial intelligence. Originally presented as a working paper at the Global Sandbox Forum Inaugural Meeting in July 2024, the report was further refined through expert consultations and an online roundtable in December 2024. It examines sandboxes that have been announced, are under development, or have been completed, identifying common patterns in their creation, timing, and implementation. By providing insights into why and how regulators and companies should consider AI sandboxes, the report serves as a strategic guide for fostering responsible innovation.

In a rapidly evolving AI landscape, traditional regulatory processes often struggle to keep pace with technological advancements. Sandboxes offer a flexible and iterative approach, allowing policymakers to test and refine AI governance models in a controlled environment. The report identifies 66 AI, data, or technology-related sandboxes globally, with 31 specifically designed for AI innovation across 44 countries. These initiatives focus on areas such as machine learning, data-driven solutions, and AI governance, helping policymakers address emerging challenges while ensuring ethical and transparent AI development…(More)”.

Article by Natasha Lomas: “Make room for yet another partnership on AI. Current AI, a “public interest” initiative focused on fostering and steering development of artificial intelligence in societally beneficial directions, was announced at the French AI Action summit on Monday. It’s kicking off with an initial $400 million in pledges from backers and a plan to pull in $2.5 billion more over the next five years.

Such figures are small beer when it comes to AI investment, with the French president fresh from trumpeting a private support package worth around $112 billion (which itself pales beside U.S. investments of $500 billion aiming to accelerate the tech). But the partnership is not focused on compute, so its backers believe such relatively modest sums will still be able to produce an impact in key areas where AI could make a critical difference to advancing the public interest in areas like healthcare and supporting climate goals.

The initial details are high level. Under the top-line focus on “the enabling environment for public interest AI,” the initiative has a number of stated aims — including pushing to widen access to “high quality” public and private datasets for AI training; support for open source infrastructure and tooling to boost AI transparency and security; and support for developing systems to measure AI’s social and environmental impact.

Its founder, Martin Tisné, said the goal is to create a financial vehicle “to provide a North Star for public financing of critical efforts,” such as bringing AI to bear on combating cancers or coming up with treatments for long COVID.

“I think what’s happening is you’ve got a data bottleneck coming in artificial intelligence, because we’re running out of road with data on the web, effectively … and here, what we need is to really unlock innovations in how to make data accessible and available,” he told TechCrunch…(More)”.

Article by Dylan Scott: “In the initial days of the Trump administration, officials scoured federal websites for any mention of what they deemed “DEI” keywords — terms as generic as “diverse” and “historically” and even “women.” They soon identified reams of some of the country’s most valuable public health data containing some of the targeted words, including language about LGBTQ+ people, and quickly took down much of it — from surveys on obesity and suicide rates to real-time reports on immediate infectious disease threats like bird flu.

The removal elicited a swift response from public health experts who warned that without this data, the country risked being in the dark about important health trends that shape life-and-death public health decisions made in communities across the country.

Some of this data was restored in a matter of days, but much of it was incomplete. In some cases, the raw data sheets were posted again, but the reference documents that would allow most people to decipher them were not. Meanwhile, health data continues to be taken down: The New York Times reported last week that data from the Centers for Disease Control and Prevention on bird flu transmission between humans and cats had been posted and then promptly removed…

It is difficult to capture the sheer breadth and importance of the public health data that has been affected. Here are a few illustrative examples of reports that have either been tampered with or removed completely, as compiled by KFF.

The Behavioral Risk Factor Surveillance System (BRFSS), which is “one of the most widely used national health surveys and has been ongoing for about 40 years,” per KFF, is an annual survey that contacts 400,000 Americans to ask people about everything from their own perception of their general health to exercise, diet, sexual activity, and alcohol and drug use.

That in turn allows experts to track important health trends, like the fluctuations in teen vaping use. One recent study that relied on BRFSS data warned that a recent ban on flavored e-cigarettes (also known as vapes) may be driving more young people to conventional smoking, five years after an earlier Yale study based on the same survey led to the ban being proposed in the first place. The Supreme Court and the Trump administration are currently revisiting the flavored vape ban, and the Yale study was cited in at least one amicus brief for the case.

This survey has also been of particular use in identifying health disparities among LGBTQ+ people, such as higher rates of uninsurance and reported poor health compared to the general population. Those findings have motivated policymakers at the federal, state and local levels to launch new initiatives aimed specifically at that at-risk population.

As of now, most of the BRFSS data has been restored, but the supplemental materials that make it legible to lay people still have not…(More)”.

Report by Danielle Goldfarb: “From collecting millions of online price data to measure inflation, to assessing the economic impact of the COVID-19 pandemic on low-income workers, digital data sets can be used to benefit the public interest. Using these and other examples, this special report explores how digital data sets and advances in artificial intelligence (AI) can provide timely, transparent and detailed insights into global challenges. These experiments illustrate how governments and civil society analysts can reuse digital data to spot emerging problems, analyze specific group impacts, complement traditional metrics or verify data that may be manipulated. AI and data governance should extend beyond addressing harms. International institutions and governments need to actively steward digital data and AI tools to support a step change in our understanding of society’s biggest challenges…(More)”