Toolkit by the Broadband Commission Working Group on Data Governance: “…the Toolkit serves as a practical, capacity-building resource for policymakers, regulators, and governments. It offers actionable guidance on key data governance priorities, including legal frameworks, institutional roles, cross-border data flows, digital self-determination, and data for AI.

As a key capacity-building resource, the Toolkit aims to empower policymakers, regulators, and data practitioners to navigate the complexities of data governance in the digital era. Plans are underway to translate the Toolkit into French, Spanish, Chinese, and Arabic to broaden its global accessibility and impact. Pilot implementation at the country level is also being explored for Q4 2025 to support national-level uptake.

The Data Governance Toolkit

The Data Governance Toolkit: Navigating Data in the Digital Age offers a practical, rights-based guide to help governments, institutions, and stakeholders make data work for all.

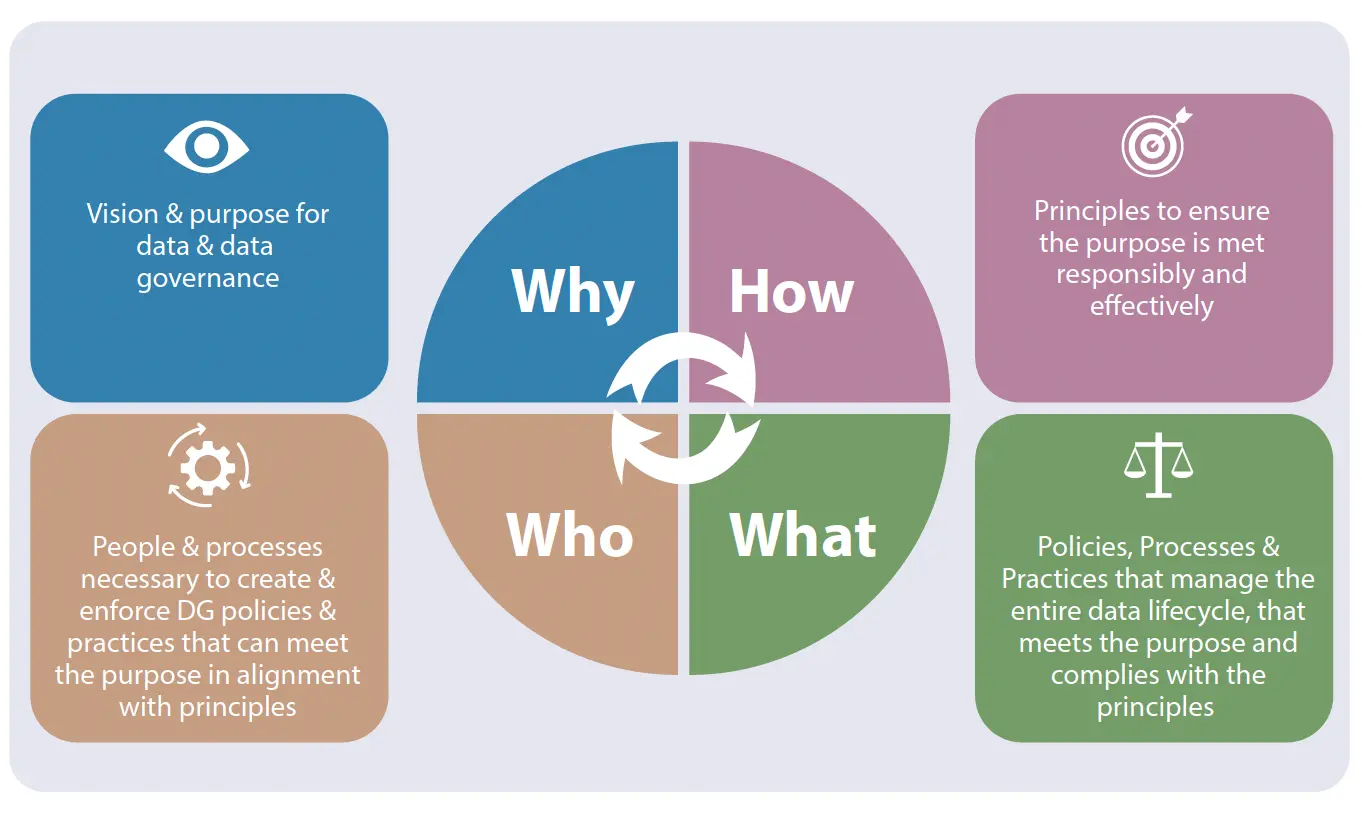

The Toolkit is organized around four foundational data governance components—referred to as the 4Ps of Data Governance:

- Why (Purpose): How to define a vision and purpose for data governance in the context of AI, digital transformation, and sustainable development.

- How (Principles): What principles should guide a governance framework to balance innovation, security, and ethical considerations.

- Who (People and Processes): Identifying the stakeholders, institutions, and processes required to build and enforce responsible governance structures.

- What (Practices and Mechanisms): Policies and best practices to manage data across its entire lifecycle while ensuring privacy, interoperability, and regulatory compliance.

The Toolkit also includes:

- A self-assessment framework to help organizations evaluate their current capabilities;

- A glossary of key terms to foster shared understanding;

- A curated list of other toolkits and frameworks for deeper engagement.

Designed to be adaptable across regions and sectors, the Data Governance Toolkit is not a one-size-fits-all manual but a modular resource to guide smarter, safer, and fairer data use in the digital age…”