Stefaan Verhulst

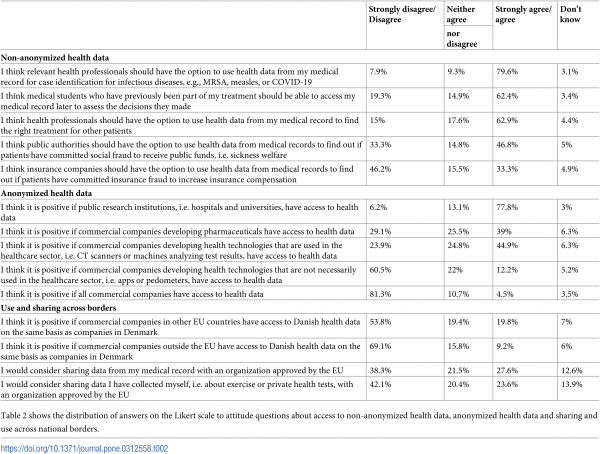

Paper by Lea Skovgaard et al: “Everyday clinical care generates vast amounts of digital data. A broad range of actors are interested in reusing these data for various purposes. Such reuse of health data could support medical research, healthcare planning, technological innovation, and lead to increased financial revenue. Yet, reuse also raises questions about what data subjects think about the use of health data for various different purposes. Based on a survey with 1071 respondents conducted in 2021 in Denmark, this article explores attitudes to health data reuse. Denmark is renowned for its advanced integration of data infrastructures, facilitating data reuse. This is therefore a relevant setting from which to explore public attitudes to reuse, both as authorities around the globe are currently working to facilitate data reuse opportunities, and in the light of the recent agreement on the establishment in 2024 of the European Health Data Space (EHDS) within the European Union (EU). Our study suggests that there are certain forms of health data reuse—namely transnational data sharing, commercial involvement, and use of data as national economic assets—which risk undermining public support for health data reuse. However, some of the purposes that the EHDS is supposed to facilitate are these three controversial purposes. Failure to address these public concerns could well challenge the long-term legitimacy and sustainability of the data infrastructures currently under construction…(More)”

Paper by Isabel James: “Despite the global proliferation of ocean governance frameworks that feature socioeconomic variables, the inclusion of community needs and local ecological knowledge remains underrepresented. Participatory mapping or Participatory GIS (PGIS) has emerged as a vital method to address this gap by engaging communities that are conventionally excluded from ocean planning and marine conservation. Originally developed for forest management and Indigenous land reclamation, the scholarship on PGIS remains predominantly focused on terrestrial landscapes. This review explores recent research that employs the method in the marine realm, detailing common methodologies, data types and applications in governance and conservation. A typology of ocean-centered PGIS studies was identified, comprising three main categories: fisheries, habitat classification and blue economy activities. Marine Protected Area (MPA) design and conflict management are the most prevalent conservation applications of PGIS. Case studies also demonstrate the method’s effectiveness in identifying critical marine habitats such as fish spawning grounds and monitoring endangered megafauna. Participatory mapping shows particular promise in resource- and data-limited contexts due to its ability to generate large quantities of relatively reliable, quick and low-cost data. Validation steps, including satellite imagery and ground-truthing, suggest encouraging accuracy of PGIS data, despite potential limitations related to human error and spatial resolution. This review concludes that participatory mapping not only enriches scientific research but also fosters trust and cooperation among stakeholders, ultimately contributing to more resilient and equitable ocean governance…(More)”.

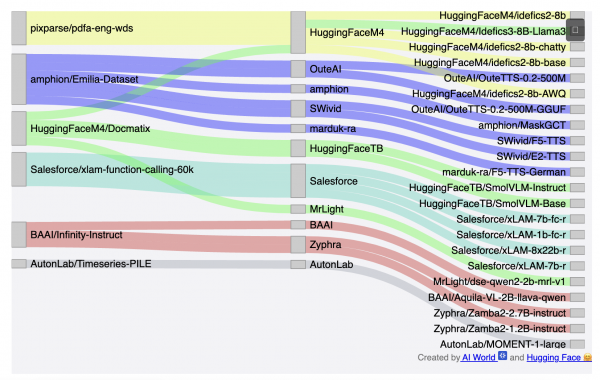

A Sankey diagram developed by AI World and Hugging Face: “…illustrating the flow from top open-source datasets through AI organizations to their derivative models, showcasing the collaborative nature of AI development…(More)”.

Paper by Riccardo Gallotti, Davide Maniscalco, Marc Barthelemy & Manlio De Domenico: “The description of human mobility is at the core of many fundamental applications ranging from urbanism and transportation to epidemics containment. Data about human movements, once scarce, is now widely available thanks to new sources such as phone call detail records, GPS devices, or smartphone apps. Nevertheless, it is still common to rely on a single dataset by implicitly assuming that the statistical properties observed are robust regardless of data gathering and processing techniques. Here, we test this assumption on a broad scale by comparing human mobility datasets obtained from 7 different data-sources, tracing 500+ million individuals in 145 countries. We report wide quantifiable differences in the resulting mobility networks and in the displacement distribution. These variations impact processes taking place on these networks like epidemic spreading. Our results point to the need for disclosing the data processing and, overall, to follow good practices to ensure robust and reproducible results…(More)”

Paper by Alireza Ghafarollahi and Markus J. Buehler: “A key challenge in artificial intelligence (AI) is the creation of systems capable of autonomously advancing scientific understanding by exploring novel domains, identifying complex patterns, and uncovering previously unseen connections in vast scientific data. In this work, SciAgents, an approach that leverages three core concepts is presented: (1) large-scale ontological knowledge graphs to organize and interconnect diverse scientific concepts, (2) a suite of large language models (LLMs) and data retrieval tools, and (3) multi-agent systems with in-situ learning capabilities. Applied to biologically inspired materials, SciAgents reveals hidden interdisciplinary relationships that were previously considered unrelated, achieving a scale, precision, and exploratory power that surpasses human research methods. The framework autonomously generates and refines research hypotheses, elucidating underlying mechanisms, design principles, and unexpected material properties. By integrating these capabilities in a modular fashion, the system yields material discoveries, critiques and improves existing hypotheses, retrieves up-to-date data about existing research, and highlights strengths and limitations. This is achieved by harnessing a “swarm of intelligence” similar to biological systems, providing new avenues for discovery. How this model accelerates the development of advanced materials by unlocking Nature’s design principles, resulting in a new biocomposite with enhanced mechanical properties and improved sustainability through energy-efficient production is shown…(More)”.

The Economist: “Academics have long been accused of jargon-filled writing that is impossible to understand. A recent cautionary tale was that of Ally Louks, a researcher who set off a social media storm with an innocuous post on X celebrating the completion of her PhD. If it was Ms Louks’s research topic (“olfactory ethics”—the politics of smell) that caught the attention of online critics, it was her verbose thesis abstract that further provoked their ire. In two weeks, the post received more than 21,000 retweets and 100m views.

Although the abuse directed at Ms Louks reeked of misogyny and anti-intellectualism—which she admirably shook off—the reaction was also a backlash against an academic use of language that is removed from normal life. Inaccessible writing is part of the problem. Research has become harder to read, especially in the humanities and social sciences. Though authors may argue that their work is written for expert audiences, much of the general public suspects that some academics use gobbledygook to disguise the fact that they have nothing useful to say. The trend towards more opaque prose hardly allays this suspicion…(More)”.

Paper by Ann Fitz-Gerald and Jenn Hennebry: “This article examines the realities of modern-day warfare, including a rising trend in hybrid threats and irregular warfare which employ emerging technologies supported by digital and data-driven processes. The way in which these technologies become applied generates a widened battlefield and leads to a greater number of civilians being caught up in conflict. Humanitarian groups mandated to protect civilians have adapted their approaches to the use of new emerging technologies. However, the lack of international consensus on the use of data, the public and private nature of the actors involved in conflict, the transnational aspects of the widened battlefield, and the heightened security risks in the conflict space pose enormous challenges for the protection of civilians agenda. Based on the dual-usage aspect of emerging technologies, the challenges associated with regulation and the need for those affected by conflict to demonstrate resilience towards, and knowledge of, digital media literacy, this paper proposes the development of guidance for a “minimum basic technology infrastructure” which is supported by technology, regulation, and public awareness and education…(More)”.

Article by Todd Carpenter: “Presently, if one ignores the hype around Generative AI systems, we can recognize that software tools are not sentient. Nor can they (yet) overcome the problem of coming up with creative solutions to novel problems. They are limited in what they can do by the training data that they are supplied. They do hold the prospect for making us more efficient and productive, particularly for rote tasks. But given enough training data, one could consider how much farther this could be taken. In preparation for that future, when it comes to the digital twins, the landscape of the ownership of the intellectual property (IP) behind them is already taking shape.

Several chatbots have been set up to replicate long-dead historical figures so that you can engage with them in their “voice”. Hellohistory is an AI-driven chatbot that provides people the opportunity to, “have in-depth conversations with history’s greatest.” A different tool, Historical Figures Chat, was widely panned not long after its release in 2023, and especially by historians who strongly objected. There are several variations on this theme of varying quality. Of course, with all things GenAI, they will improve over time and many of the obvious and problematic issues will be resolved either by this generation of companies or the next. Whether there is real value and insight to be gained, apart from the novelty, of engaging with “real historical figures” is the multi-billion dollar question. Much like the World Wide Web in the 1990s, very likely there is value, but it will be years before it can be clearly discerned what that value is and how to capitalize upon it. In anticipation of that day, many organizations are positioning themselves to capture that value.

While many universities have taken a very liberal view of ownership of the intellectual property of their students and faculty — far more liberal than many corporations might — others are much more restrictive…(More)”.

Article by Kashmir Hill: “Darcy Bullock, a civil engineering professor at Purdue University, turns to his computer screen to get information about how fast cars are traveling on Interstate 65, which runs 887 miles from Lake Michigan to the Gulf of Mexico. It’s midafternoon on a Monday, and his screen is mostly filled with green dots indicating that traffic is moving along nicely. But near an exit on the outskirts of Indianapolis, an angry red streak shows that cars have stopped moving.

A traffic camera nearby reveals the cause: A car has spun out, causing gridlock.

In recent years, vehicles that have wireless connectivity have become a critical source of information for transportation departments and for academics who study traffic patterns. The data these vehicles emit — including speed, how hard they brake and accelerate, and even if their windshield wipers are on — can offer insights into dangerous road conditions, congestion or poorly timed traffic signals.

“Our cars know more about our roads than agencies do,” said Dr. Bullock, who regularly works with the Indiana Department of Transportation to conduct studies on how to reduce traffic congestion and increase road safety. He credits connected-car data with detecting hazards that would have taken years — and many accidents — to find in the past.

The data comes primarily from commercial trucks and from cars made by General Motors that are enrolled in OnStar, G.M.’s internet-connected service. (Drivers know OnStar as the service that allows them to lock their vehicles from a smartphone app or find them if they have been stolen.) Federal safety guidelines require commercial truck drivers to be routinely monitored, but people driving G.M. vehicles may be surprised to know that their data is being collected, though it is indicated in the fine print of the company’s privacy policy…(More)”.

Essay by Aaron Horvath: “Foundations make grants conditional on demonstrable results. Charities tout the evidentiary basis of their work. And impact consultants play both sides: assisting funders in their pursuit of rational beneficence and helping grantees translate the jumble of reality into orderly, spreadsheet-ready metrics.

Measurable impact has crept into everyday understandings of charity as well. There’s the extensive (often fawning) news coverage of data-crazed billionaire philanthropists, so-called thought leaders exhorting followers to rethink their contributions to charity, and popular books counseling that intuition and sentiment are poor guides for making the world a better place. Putting ideas into action, charity evaluators promote research-backed listings of the most impactful nonprofits. Why give to your local food bank when there’s one in Somerville, Massachusetts, with a better rating?

Over the past thirty years, amid a larger crisis of civic engagement, social isolation, and political alienation, measurable impact has seeped into our civic imagination and become one of the guiding ideals for public-spirited beneficence. And while its proponents do not always agree on how best to achieve or measure the extent of that impact, they have collectively recast civic engagement as objective, pragmatic, and above the fray of politics—a triumph of the head over the heart. But how did we get here? And what happens to our capacity for meaningful collective action when we think of civic life in such depersonalized and quantified terms?…(More)”.