Stefaan Verhulst

Article by Michael P. Goodyear: “Twenty-one-year-old college student Shane hopes to write a song for his boyfriend. In the past, Shane would have had to wait for inspiration to strike, but now he can use generative artificial intelligence to get a head start. Shane decides to use Anthropic’s AI chat system, Claude, to write the lyrics. Claude dutifully complies and creates the words to a love song. Shane, happy with the result, adds notes, rhythm, tempo, and dynamics. He sings the song and his boyfriend loves it. Shane even decides to post a recording to YouTube, where it garners 100,000 views.

But Shane did not realize that this song’s lyrics are similar to those of “Love Story,” Taylor Swift’s hit 2008 song. Shane must now contend with copyright law, which protects original creative expression such as music. Copyright grants the rights owner the exclusive rights to reproduce, perform, and create derivatives of the copyrighted work, among other things. If others take such actions without permission, they can be liable for statutory damages of up to $150,000 per work. So Shane could be on the hook for tens of thousands of dollars for copying Swift’s song.

Copyright law has surged into the news in the past few years as one of the most important legal challenges for generative AI tools like Claude—not for the output of these tools but for how they are trained. Over two dozen pending court cases grapple with the question of whether training generative AI systems on copyrighted works without compensating or getting permission from the creators is lawful or not. Answers to this question will shape a burgeoning AI industry that is predicted to be worth $1.3 trillion by 2032.

Yet there is another important question that few have asked: Who should be liable when a generative AI system creates a copyright-infringing output? Should the user be on the hook?…(More)”

Blog by the UK Policy Lab: “…Different policies can play out in radically different ways depending on circumstance and place. Accordingly, it is important for policy professionals to have access to a diverse suite of people-centred methods, from gentle and compassionate techniques that increase understanding with small groups of people to higher-profile, larger-scale engagements. The image below shows a spectrum of people-centred and participatory methods that can be used in policy, ranging from light-touch involvement (e.g. consultation), to structured deliberation (e.g. citizens’ assemblies) and deeper collaboration and empowerment (e.g. participatory budgeting). This spectrum of participation is speculatively mapped against stages of the policy cycle…(More)”.

Special series by Skoll for the Stanford Social Innovation Review: “…we explore system orchestration, collaborative funding, government partnerships, mission-aligned investing, reimagined storytelling, and evaluation and learning. These seven articles highlight successful approaches to collective action and share compelling examples of social transformation.

The time is now for philanthropy to align the speed and scale of our investments with the scope of the global challenges that social innovators seek to address. We hope this series will spark fresh thinking and new ideas for how we can create durable systemic change quickly and together…(More)”.

Article by Akash Kapur: “In recent years, governments have increasingly pursued variants of digital sovereignty to regulate and control the global digital ecosystem. The pursuit of AI sovereignty represents the latest iteration in this quest.

Digital sovereignty may offer certain benefits, but it also poses undeniable risks, including the possibility of undermining the very goals of autonomy and self-reliance that nations are seeking. These risks are particularly pronounced for smaller nations with less capacity, which might do better in a revamped, more inclusive, multistakeholder system of digital governance.

Organizing digital governance around agency rather than sovereignty offers the possibility of such a system. Rather than reinforce the primacy of nations, digital agency asserts the rights, priorities, and needs not only of sovereign governments but also of the constituent parts—the communities and individuals—they purport to represent.

Three cross-cutting principles underlie the concept of digital agency: recognizing stakeholder multiplicity, enhancing the latent possibilities of technology, and promoting collaboration. These principles lead to three action-areas that offer a guide for digital policymakers: reinventing institutions, enabling edge technologies, and building human capacity to ensure technical capacity…(More)”.

OECD Report: “The most recent phase of digital transformation is marked by rapid technological changes, creating both opportunities and risks for the economy and society. Volume 2 of the OECD Digital Economy Outlook 2024 explores emerging priorities, policies and governance practices across countries. It also examines trends in the foundations that enable digital transformation, drive digital innovation and foster trust in the digital age. The volume concludes with a statistical annex…

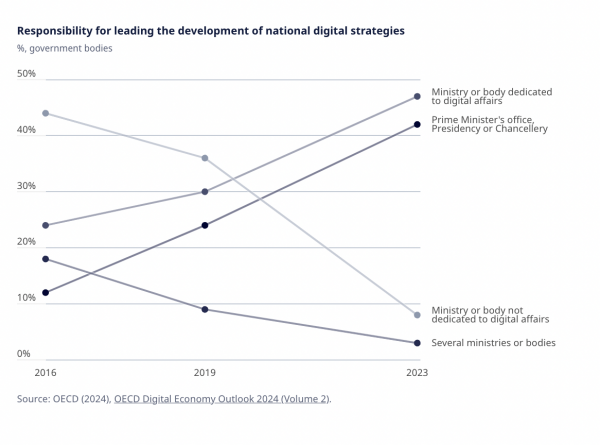

In 2023, digital government, connectivity and skills topped the list of digital policy priorities. Increasingly developed at a high level of government, national digital strategies play a critical role in co-ordinating these efforts. Nearly half of the 38 countries surveyed develop these strategies through dedicated digital ministries, up from just under a quarter in 2016. Among 1 200 policy initiatives tracked across the OECD, one-third aim to boost digital technology adoption, social prosperity, and innovation. AI and 5G are the most often-cited technologies…(More)”

Paper by Geoff Keeling et al.: “Moral imagination” is the capacity to register that one’s perspective on a decision-making situation is limited, and to imagine alternative perspectives that reveal new considerations or approaches. We have developed a Moral Imagination approach that aims to drive a culture of responsible innovation, ethical awareness, deliberation, decision-making, and commitment in organizations developing new technologies. We here present a case study that illustrates one key aspect of our approach – the technomoral scenario – as we have applied it in our work with product and engineering teams. Technomoral scenarios are fictional narratives that raise ethical issues surrounding the interaction between emerging technologies and society. Through facilitated roleplaying and discussion, participants are prompted to examine their own intentions, articulate justifications for actions, and consider the impact of decisions on various stakeholders. This process helps developers to re-envision their choices and responsibilities, ultimately contributing to a culture of responsible innovation…(More)”.

Blog by Robin Nowok: “Data-Powered Positive Deviance (DPPD) is a new method that combines the principles of Positive Deviance with the power of digital data and advanced analytics. Positive Deviance is based on the observation that in every community or organization, some individuals achieve significantly better outcomes than their peers, despite having similar challenges and resources. These individuals or groups are referred to as positive deviants.

The DPPD method follows the same logic as the Positive Deviance approach but leverages existing, non-traditional data sources, either instead of or in conjunction with traditional data sources. This allows for the identification of positive deviants on larger geographic and temporal scales. Once identified, we can then uncover the behaviors that lead to their success, enabling others to adopt these practices.

In a world where top-down solutions often fall short, DPPD offers a fresh perspective. It focuses on finding what’s already working within communities, rather than imposing external solutions. This can lead to more sustainable, culturally appropriate, and effective interventions.

Our online course is designed to get you started on your DPPD journey. Through five modules, you’ll gain both theoretical knowledge and practical skills to apply DPPD in your own work…(More)”.

OECD Report: “The swift evolution of AI technologies calls for policymakers to consider and proactively manage AI-driven change. The OECD’s Expert Group on AI Futures was established to help meet this need and anticipate AI developments and their potential impacts. Informed by insights from the Expert Group, this report distils research and expert insights on prospective AI benefits, risks and policy imperatives. It identifies ten priority benefits, such as accelerated scientific progress, productivity gains and better sense-making and forecasting. It discusses ten priority risks, such as facilitation of increasingly sophisticated cyberattacks; manipulation, disinformation, fraud and resulting harms to democracy; concentration of power; incidents in critical systems and exacerbated inequality and poverty. Finally, it points to ten policy priorities, including establishing clearer liability rules, drawing AI “red lines”, investing in AI safety and ensuring adequate risk management procedures. The report reviews existing public policy and governance efforts and remaining gaps…(More)”.

Paper by Dino Pedreschi et al.: “Human-AI coevolution, defined as a process in which humans and AI algorithms continuously influence each other, increasingly characterises our society, but is understudied in the artificial intelligence and complexity science literature. Recommender systems and assistants play a prominent role in human-AI coevolution, as they permeate many facets of daily life and influence human choices through online platforms. The interaction between users and AI results in a potentially endless feedback loop, wherein users’ choices generate data to train AI models, which, in turn, shape subsequent user preferences. This human-AI feedback loop has peculiar characteristics compared to traditional human-machine interaction and gives rise to complex and often “unintended” systemic outcomes. This paper introduces human-AI coevolution as the cornerstone for a new field of study at the intersection between AI and complexity science focused on the theoretical, empirical, and mathematical investigation of the human-AI feedback loop. In doing so, we: (i) outline the pros and cons of existing methodologies and highlight shortcomings and potential ways for capturing feedback loop mechanisms; (ii) propose a reflection at the intersection between complexity science, AI and society; (iii) provide real-world examples for different human-AI ecosystems; and (iv) illustrate challenges to the creation of such a field of study, conceptualising them at increasing levels of abstraction, i.e., scientific, legal and socio-political…(More)”.
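To make the feedback loop concrete, here is a deliberately tiny toy model, not taken from the paper: each round a "model" is refit on all logged choices (it simply recommends the most-chosen item), a simulated user follows that recommendation with some probability, and the resulting choice becomes training data for the next round. The function `simulate_feedback_loop`, its parameters, and the popularity-based recommender are hypothetical simplifications chosen only to show the loop's structure.

```python
import random


def simulate_feedback_loop(n_items=5, steps=1000, follow_rate=0.8, seed=0):
    """Toy human-AI feedback loop with a popularity recommender (hypothetical).

    Each round: the model recommends the most-chosen item so far, the user
    follows it with probability follow_rate or otherwise picks uniformly at
    random, and the choice is logged as new training data.
    """
    rng = random.Random(seed)
    counts = [0] * n_items  # logged choices per item: the model's training data
    for _ in range(steps):
        # Model step: recommend the currently most-chosen item (ties -> lowest index).
        recommended = max(range(n_items), key=lambda i: counts[i])
        # User step: follow the recommendation or explore at random.
        if rng.random() < follow_rate:
            choice = recommended
        else:
            choice = rng.randrange(n_items)
        # Data step: the choice feeds the next round of "training".
        counts[choice] += 1
    return counts


counts = simulate_feedback_loop()
top_share = max(counts) / sum(counts)
print(counts, f"top item share: {top_share:.2f}")
```

In this toy, whichever item takes an early lead is recommended every round, so its share drifts toward roughly `follow_rate + (1 - follow_rate) / n_items` (about 0.84 with the defaults), while setting `follow_rate=0` removes the loop and leaves shares near uniform. Real recommender systems, and the models the paper calls for, are far richer than this.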

Article by John Letzing: “Denmark unveiled its own artificial intelligence supercomputer last month, funded by the proceeds of wildly popular Danish weight-loss drugs like Ozempic. It’s now one of several sovereign AI initiatives underway, which one CEO believes can “codify” a country’s culture, history, and collective intelligence – and become “the bedrock of modern economies.”

That particular CEO, Jensen Huang, happens to run a company selling the sort of chips needed to pursue sovereign AI – that is, to construct a domestic vintage of the technology, informed by troves of homegrown data and powered by the computing infrastructure necessary to turn that data into a strategic reserve of intellect…

It’s not surprising that countries are forging expansive plans to put their own stamp on AI. But big-ticket supercomputers and other costly resources aren’t feasible everywhere.

Training a large language model has gotten a lot more expensive lately; the funds required for the necessary hardware, energy, and staff may soon top $1 billion. Meanwhile, geopolitical friction over access to the advanced chips necessary for powerful AI systems could further warp the global playing field.

Even for countries with abundant resources and access, there are “sovereignty traps” to consider. Governments pushing ahead on sovereign AI could risk undermining global cooperation meant to ensure the technology is put to use in transparent and equitable ways. That might make it a lot less safe for everyone.

An example: a place using AI systems trained on a local set of values for its security may readily flag behaviour out of sync with those values as a threat…(More)”.