Stefaan Verhulst

Paper by Leonie Rebecca Freise et al: “The rapid evolution of the software development industry challenges developers to manage their diverse tasks effectively. Traditional assistant tools in software development often fall short of supporting developers efficiently. This paper explores how generative artificial intelligence (GAI) tools, such as GitHub Copilot or ChatGPT, facilitate job crafting—a process where employees reshape their jobs to meet evolving demands. By integrating GAI tools into workflows, software developers can focus more on creative problem-solving, enhancing job satisfaction, and fostering a more innovative work environment. This study investigates how GAI tools influence task, cognitive, and relational job crafting behaviors among software developers, examining its implications for professional growth and adaptability within the industry. The paper provides insights into the transformative impacts of GAI tools on software development job crafting practices, emphasizing their role in enabling developers to redefine their job functions…(More)”.

Article by Rohan Sharma: “Artificial intelligence (AI) has the potential to solve some of today’s most pressing societal challenges – from climate change to healthcare disparities – but it could also exacerbate existing inequalities if not developed and deployed responsibly.

The rapid pace of AI development, growing awareness of AI’s societal impact and the urgent need to harness AI for positive change make bridging the ‘AI divide’ essential now. Public-private partnerships (PPPs) can play a crucial role in ensuring AI is developed ethically, sustainably and inclusively by leveraging the strengths of multiple stakeholders across sectors and regions…

To bridge the AI divide effectively, collaboration among governments, private companies, civil society and other stakeholders is crucial. PPPs unite these stakeholders’ strengths to ensure AI is developed ethically, sustainably, and inclusively.

1. Bridging the resource and expertise gap

By combining public oversight and private innovation, PPPs bridge resource and expertise gaps. Governments offer funding, regulations and access to public data; companies contribute technical expertise, creativity and market solutions. This collaboration accelerates AI technologies for social good.

Singapore’s National AI Strategy 2.0, for instance, exemplifies how PPPs drive ethical AI development. By bringing together over one hundred experts from academia, industry and government, Singapore is building a trusted AI ecosystem focused on global challenges like health and climate change. Empowering citizens and businesses to use AI responsibly, Singapore demonstrates how PPPs create inclusive AI systems, serving as a model for others.

2. Fostering cross-border collaboration

AI development is a global endeavour, but countries vary in expertise and resources. PPPs facilitate international knowledge sharing, technology transfer and common ethical standards, ensuring AI benefits are distributed globally, rather than concentrated in a few regions or companies.

3. Ensuring multi-stakeholder engagement

Inclusive AI development requires involving not just public and private sectors, but also civil society organizations and local communities. Engaging these groups in PPPs brings diverse perspectives to AI design and deployment, integrating ethical, social and cultural considerations from the start.

These approaches underscore the value of PPPs in driving AI development through diverse expertise, shared resources and international collaboration…(More)”.

Blog by Sean Trott: “…The goal of this post is to distill what I take to be the most important, immediately applicable, and generalizable insights from these classes. That means that readers should be able to apply those insights without a background in math or knowing how to, say, build a linear model in R. In that way, it’ll be similar to my previous post about “useful cognitive lenses to see through”, but with a greater focus on evaluating claims specifically.

Lesson #1: Consider the whole distribution, not just the central tendency.

If you spend much time reading news articles or social media posts, the odds are good you’ll encounter some descriptive statistics: numbers summarizing or describing a distribution (a set of numbers or values in a dataset). One of the most commonly used descriptive statistics is the arithmetic mean: the sum of every value in a distribution, divided by the number of values overall. The arithmetic mean is a measure of “central tendency”, which just means it’s a way to characterize the typical or expected value in that distribution.

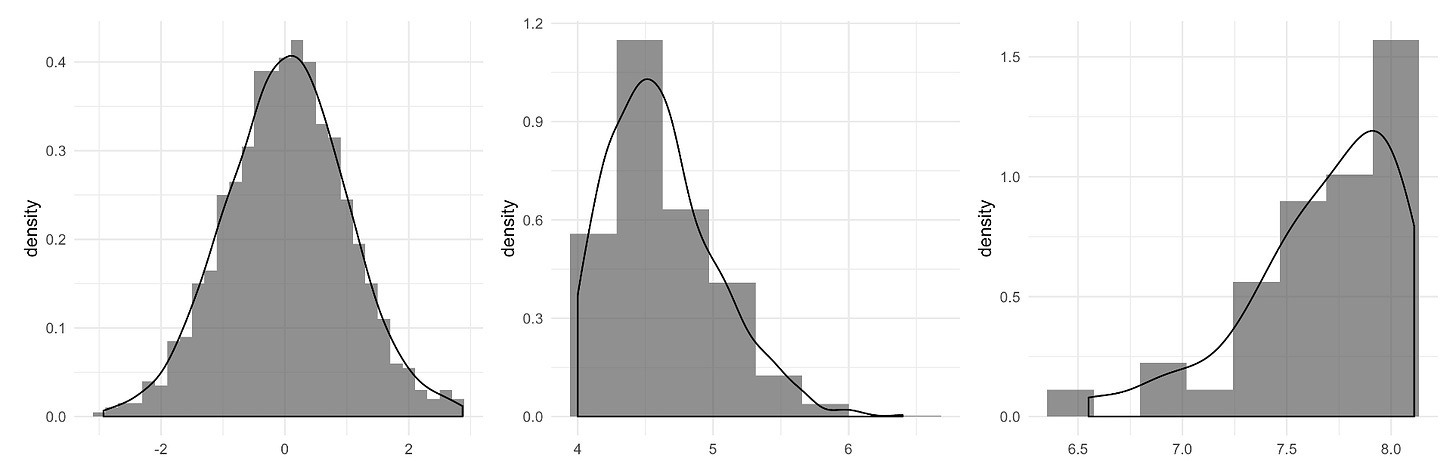

The arithmetic mean is a really useful measure. But as many readers might already know, it’s not perfect. It’s strongly affected by outliers—values that are really different from the rest of the distribution—and things like the skew of a distribution (see the image below for examples of skewed distributions).

In particular, the mean is pulled in the direction of outliers or distribution skew. That’s the logic behind the joke about the average salary of people at a bar jumping up as soon as a billionaire walks in. It’s also why other measures of central tendency, such as the median, are often presented alongside (or instead of) the mean—especially for distributions that happen to be very skewed, such as income or wealth.

It’s not that one of these measures is more “correct”. As Stephen Jay Gould wrote in his article The Median Is Not the Message, they’re just different perspectives on the same distribution:

A politician in power might say with pride, “The mean income of our citizens is $15,000 per year.” The leader of the opposition might retort, “But half our citizens make less than $10,000 per year.” Both are right, but neither cites a statistic with impassive objectivity. The first invokes a mean, the second a median. (Means are higher than medians in such cases because one millionaire may outweigh hundreds of poor people in setting a mean, but can balance only one mendicant in calculating a median.)…(More)”.
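The mean-versus-median point in the excerpt above can be checked with a few lines of Python. This is only a minimal sketch of the bar joke: the income figures and the `summarize` helper are illustrative assumptions, not data or code from the post.

```python
from statistics import mean, median

# Hypothetical annual incomes (USD) of ten bar patrons; illustrative values only.
patrons = [32_000, 38_000, 41_000, 45_000, 47_000,
           52_000, 55_000, 58_000, 61_000, 71_000]


def summarize(incomes):
    """Return the (mean, median) pair for a list of incomes."""
    return mean(incomes), median(incomes)


# Central tendency before and after a hypothetical billionaire walks in.
before = summarize(patrons)
after = summarize(patrons + [1_000_000_000])

print(f"before: mean={before[0]:,.0f}  median={before[1]:,.0f}")
print(f"after:  mean={after[0]:,.0f}  median={after[1]:,.0f}")
```

With these made-up numbers, the single outlier lifts the mean from 50,000 to roughly 91 million, while the median moves only from 49,500 to 52,000. That is why the median is often reported for skewed distributions such as income or wealth.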

Article by Ingrid Wickelgren: “…But unless something tragic happens, body camera footage generally goes unseen. “We spend so much money collecting and storing this data, but it’s almost never used for anything,” says Benjamin Graham, a political scientist at the University of Southern California.

Graham is among a small number of scientists who are reimagining this footage as data rather than just evidence. Their work leverages advances in natural language processing, which relies on artificial intelligence, to automate the analysis of video transcripts of citizen-police interactions. The findings have enabled police departments to spot policing problems, find ways to fix them and determine whether the fixes improve behavior.

Only a small number of police agencies have opened their databases to researchers so far. But if this footage were analyzed routinely, it would be a “real game changer,” says Jennifer Eberhardt, a Stanford University psychologist, who pioneered this line of research. “We can see beat-by-beat, moment-by-moment how an interaction unfolds.”

In papers published over the past seven years, Eberhardt and her colleagues have examined body camera footage to reveal how police speak to white and Black people differently and what type of talk is likely to either gain a person’s trust or portend an undesirable outcome, such as handcuffing or arrest. The findings have refined and enhanced police training. In a study published in PNAS Nexus in September, the researchers showed that the new training changed officers’ behavior…(More)”.

Paper by Heather Douglas et al: “Public engagement with science has become a prominent area of research and effort for democratizing science. In the fall of 2020, we held an online conference, Public Engagement with Science: Defining and Measuring Success, to address questions of how to do public engagement well. The conference was organized around conceptualizations of the publics engaged, with attendant epistemic, ethical, and political valences. We present here the typology of publics we used (volunteer, representative sample, stakeholder, and community publics), discuss the differences among those publics and what those differences mean for practice, and situate this typology within the existing work on public engagement with science. We then provide an overview of the essays published in this journal arising from the conference which provides a window into the rich work presented at the event…(More)”.

Article by Marta Poblet, Stefaan Verhulst, and Anna Colom: “Valencia has a rich history in water management, a legacy shaped by both triumphs and tragedies. This connection to water is embedded in the city’s identity, yet modern floods test its resilience in new ways.

During the recent floods, Valencians experienced a troubling paradox. In today’s connected world, digital information flows through traditional and social media, weather apps, and government alert systems designed to warn us of danger and guide rapid responses. Despite this abundance of data, a tragedy unfolded last month in Valencia. This raises a crucial question: how can we ensure access to the right data, filter it for critical signals, and transform those signals into timely, effective action?

Data stewardship becomes essential in this process.

In particular, the devastating floods in Valencia underscore the importance of:

- having access to data to strengthen the signal (first mile challenges)

- separating signal from noise

- translating signal into action (last mile challenges)…(More)”.

Paper by Alex Fox and Chris Fox: “…argues that traditional approaches to improving public sector productivity, such as adopting private sector practices, technology-driven reforms, and tighter management, have failed to address the complex and evolving needs of public service users. It proposes a shift towards a strengths-based, person-led model, where public services are co-produced with individuals, families, and communities…(More)”.

Paper by Stephen J. Redding: “This paper reviews recent quantitative urban models. These models are sufficiently rich to capture observed features of the data, such as many asymmetric locations and a rich geography of the transport network. Yet these models remain sufficiently tractable as to permit an analytical characterization of their theoretical properties. With only a small number of structural parameters (elasticities) to be estimated, they lend themselves to transparent identification. As they rationalize the observed spatial distribution of economic activity within cities, they can be used to undertake counterfactuals for the impact of empirically-realistic public-policy interventions on this observed distribution. Empirical applications include estimating the strength of agglomeration economies and evaluating the impact of transport infrastructure improvements (e.g., railroads, roads, Rapid Bus Transit Systems), zoning and land use regulations, place-based policies, and new technologies such as remote working…(More)”.

Article by Olivier Zunz: “The age of the average emerged from the engineering of high mass consumption during the second industrial revolution of the late nineteenth century, when tinkerers in industry joined forces with scientists to develop new products and markets. The division of labor between them became irrelevant as industrial innovation rested on advances in organic chemistry, the physics of electricity, and thermodynamics. Working together, these industrial engineers and managers created the modern mass market that penetrated all segments of society from the middle out. Thus, in the heyday of the Gilded Age, at the height of the inequality pitting robber barons against the “common man,” was born, unannounced but increasingly present, the “average American.” It is in searching for the average consumer that American business managers at the time drew a composite portrait of an imagined individual. Here was a person nobody ever met or knew, merely a statistical conceit, who nonetheless felt real.

This new character was not uniquely American. Forces at work in America were also operative in Europe, albeit to a lesser degree. Thus, Austrian novelist Robert Musil, who died in 1942, reflected on the average man in his unfinished modernist masterpiece, The Man Without Qualities. In the middle of his narrative, Musil paused for a moment to give a definition of the word average: “What each one of us as laymen calls, simply, the average [is] a ‘something,’ but nobody knows exactly what…. the ultimate meaning turns out to be something arrived at by taking the average of what is basically meaningless” but “[depending] on [the] law of large numbers.” This, I think, is a powerful definition of the American social norm in the “age of the average”: a meaningless something made real, or seemingly real, by virtue of its repetition. Economists called this average person the “representative individual” in their models of the market. Their complex simplification became an agreed-upon norm, at once a measure of performance and an attainable goal. It was not intended to suggest that all people are alike. As William James once approvingly quoted an acquaintance of his, “There is very little difference between one man and another; but what little there is, is very important.” And that remained true in the age of the average…(More)”

Conversation between Sarah Castell and Stephen Elstub: “…there’s a real problem here: the public are only going to get to know about a mini-public if it gets media coverage, but the media will only cover it if it makes an impact. But it’s more likely to make an impact if the public are aware of it. That’s a tension that mini-publics need to overcome, because it’s important that they reach out to the public. Ultimately it doesn’t matter how inclusive the recruitment is and how well it’s done. It doesn’t matter how well designed the process is. It is still a small number of people involved, so we want mini-publics to be able to influence public opinion and stimulate public debate. And if they can do that, then it’s more likely to affect elite opinion and debate as well, and possibly policy.

One more thing is that people in power aren’t in the habit of sharing power. And that’s why it’s very difficult. I think the politicians are mainly motivated around this because they hope it’s going to look good to the electorate and get them some votes, but they are also worried about low levels of trust in society and what the ramifications of that might be. But in general, people in power don’t give it away very easily…

Part of the problem is that a lot of the research around public views on deliberative processes was done through experiments. It is useful, but it doesn’t quite tell us what will happen when mini-publics are communicated to the public in the messy real public sphere. Previously, there just weren’t that many well-known cases that we could actually do field research on. But that is starting to change.

There’s also more interdisciplinary work needed in this area. We need to improve how communication strategies around citizens’ assemblies are done – there must be work that’s relevant in political communication studies and other fields that have this kind of insight…(More)”.