Stefaan Verhulst

Article: “Communities around the world are increasingly recognizing that breaking down silos and leveraging shared resources and interdependencies across economic, social, and environmental issues can help accelerate progress on multiple issues simultaneously. As a framework for organizing local development priorities, the world’s 17 Sustainable Development Goals (SDGs) uniquely combine a need for broad technical expertise with an opportunity to synergize across domains—all while adhering to the principle of leaving no one behind. For local leaders attempting to tackle intersecting issues using the SDGs, one underpinning question is how to support new forms of collaboration to maximize impact and progress?

In early May, over 100 people across the East Central Florida (ECF) region in the U.S. participated in “Partnership for the Goals: Creating a Resilient and Thriving Community,” a two-day multi-stakeholder convening spearheaded by a team of local leaders from the East Central Florida Regional Resilience Collaborative (ECFR2C), the Central Florida Foundation, the City of Orlando, Florida for Good, Orange County, and the University of Central Florida. The convening grew out of a multi-year resilience planning process that leveraged the SDGs as a framework for tackling local economic, social, and environmental priorities all at once.

To move from community-wide planning to community-wide action, the organizers experimented with a 17 Rooms process—a new approach to accelerating collaborative action for the SDGs pioneered by the Center for Sustainable Development at Brookings and The Rockefeller Foundation. We collaborated with the ECF local organizing team and, in the process, spotted a range of more broadly relevant insights that we describe here…(More)”.

Article by Iavor Bojinov, Karthik Rajkumar, Guillaume Saint-Jacques, Erik Brynjolfsson, and Sinan Aral: “Whom should you connect with the next time you’re looking for a job? To answer this question, we analyzed data from multiple large-scale randomized experiments involving 20 million people to measure how different types of connections impact job mobility. Our results, published recently in Science Magazine, show that your strongest ties — namely your connections to immediate coworkers, close friends, and family — were actually the least helpful for finding new opportunities and securing a job. You’ll have better luck with your weak ties: the more infrequent, arm’s-length relationships with acquaintances.

To be more specific, the ties that are most helpful for finding new jobs tend to be moderately weak: They strike a balance between exposing you to new social circles and information and having enough familiarity and overlapping interests so that the information is useful. Our findings uncovered the relationship between the strength of the connection (as measured by the number of mutual connections prior to connecting) and the likelihood that a job seeker transitions to a new role within the organization of a connection.

The observation that weak ties are more beneficial for finding a job is not new. Sociologist Mark Granovetter first laid out this idea in a seminal 1973 paper that described how a person’s network affects their job prospects. Since then, the theory, known as the “strength of weak ties,” has become one of the most influential in the social sciences — underpinning network theories of information diffusion, industry structure, and human cooperation….(More)”.
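The tie-strength measure mentioned in the excerpt above (the number of mutual connections two people share before they connect) can be illustrated with a minimal sketch. The network, the names, and the `mutual_connections` function are hypothetical and are not taken from the study; the authors' actual data and method may differ.

```python
def mutual_connections(graph, a, b):
    """Count the contacts that a and b have in common.

    graph maps each person to the set of people they are connected to.
    This is an illustrative proxy for tie strength, not the study's code.
    """
    shared = graph.get(a, set()) & graph.get(b, set())
    # Exclude the two people themselves in case they are already linked.
    return len(shared - {a, b})


# Hypothetical toy network (assumption, for illustration only).
network = {
    "ana": {"ben", "cara", "dev"},
    "ben": {"ana", "cara", "dev", "eli"},
    "cara": {"ana", "ben"},
    "dev": {"ana", "ben"},
    "eli": {"ben", "fay"},
    "fay": {"eli"},
}

strong = mutual_connections(network, "ana", "ben")    # many shared contacts
moderate = mutual_connections(network, "ana", "eli")  # one shared contact
absent = mutual_connections(network, "ana", "fay")    # no shared contacts
```

Under this proxy, a pair with a small but nonzero count of shared contacts would correspond to the "moderately weak" ties the authors describe.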

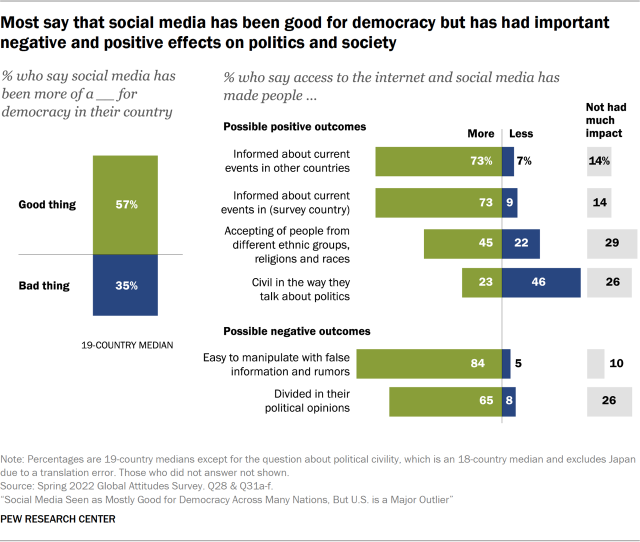

Pew Research: “As people across the globe have increasingly turned to Facebook, Twitter, WhatsApp and other platforms to get their news and express their opinions, the sphere of social media has become a new public space for discussing – and often arguing bitterly – about political and social issues. And in the mind of many analysts, social media is one of the major reasons for the declining health of democracy in nations around the world.

However, as a new Pew Research Center survey of 19 advanced economies shows, ordinary citizens see social media as both a constructive and destructive component of political life, and overall most believe it has actually had a positive impact on democracy. Across the countries polled, a median of 57% say social media has been more of a good thing for their democracy, with 35% saying it has been a bad thing.

There are substantial cross-national differences on this question, however, and the United States is a clear outlier: Just 34% of U.S. adults think social media has been good for democracy, while 64% say it has had a bad impact. In fact, the U.S. is an outlier on a number of measures, with larger shares of Americans seeing social media as divisive…(More)”.

Report by Geoff Mulgan, Oliver Marsh, and Anina Henggeler: “…examines how governments — and the societies around them — mobilised intelligence to handle the COVID-19 pandemic and its effects. It also makes recommendations as to how they could improve their ability to organise intelligence for future challenges of all kinds, from pandemics to climate change.

The study draws on dozens of interviews with senior officials and others in many countries including Estonia, Australia, New Zealand, Germany, Finland, USA, Chile, Canada, Portugal, Taiwan, Singapore, India, Bangladesh, UAE, South Korea and the UK, as well as the European Commission and UN agencies — along with roundtables and literature analysis.

The pandemic was an unprecedented event in its global impacts and in the scale of government responses. It required a myriad of policy decisions: about testing, lockdowns, masks, school closures, visiting rules at care homes and vaccinations.

Our interest is in what contributed to those decisions, and we define intelligence broadly to include data, evidence, models, tacit knowledge, foresight and creativity and innovation — all the means that can help governments make better decisions, particularly under conditions of stress and uncertainty.

Each type of intelligence played an important role. Governments needed health as well as non-health data to help understand how the virus was spreading in real time and its impacts. They needed models — for example, to judge if their hospitals were at risk of being overrun. They needed evidence — for example on whether enforcing mask-wearing would be effective. And they needed to tap into the knowledge of citizens and frontline staff quickly to spot potential problems and frictions.

Most governments had to improvise new methods of organising that intelligence, particularly as they grappled not just with the immediate health challenges, but also with the knock-on challenges to economies, communities, mental health, school systems and sectors such as hospitality.

As we show, there was extraordinary innovation globally around the gathering of data, from mass serological testing to analysis of sewage, from mobilising mobile phone data to citizen-generated data on symptoms. There was an equally impressive explosion of research and evidence; and innovative approaches to problem solving and creativity, from vaccine development to Personal Protective Equipment (PPE).

However, we also point to problems:

- Imbalances in terms of what was attended to — with physical health given much more attention than mental health or educational impacts in models and data, which was understandable in the early phases of the crisis but more problematic later on as trade-offs had to be managed

- Imbalances in different kinds of expertise in scientific advice and influence, for instance in who got to sit on and be heard in expert advisory committees

- Very varied ability of countries to share information and data between tiers of government

- Very varied ability to mobilise key sources, such as commercial data, and varied use of intelligence from outside sources, such as from other countries or from civic groups

- Even when there were strong sources of advice and evidence, weak capacities to synthesise multiple kinds of intelligence at the core of governments…(More)”.

Review by Carol Dumaine: “In 1918, as the Great War was coming to an end after four bloody years of brutal conflict, an influenza pandemic began to ravage societies around the globe. While in Paris negotiating the terms of the peace agreement in the spring of 1919, evidence indicates that US president Woodrow Wilson was stricken with the flu.

Wilson, who had been intransigent in insisting on just peace terms for the defeated nations (what he called “peace without victory”), underwent a profound change of mental state that his personal physician and closest advisors attributed to his illness. While sick, Wilson suddenly agreed to all the terms he had previously adamantly rejected and approved a treaty that made onerous demands of Germany.

Wilson’s reversal left Germans embittered and his own advisors disillusioned. Historian John M. Barry, who recounts this episode in his book about the 1918 pandemic, The Great Influenza, observes that most historians agree “that the harshness toward Germany of the Paris peace treaty helped create the economic hardship, nationalistic reaction, and political chaos that fostered the rise of Hitler.”

This anecdote is a vivid illustration of how a public health disaster can intersect with world affairs, potentially sowing the seeds for a future of war. Converging crises can leave societies with too little time to regroup, breaking down resilience and capacities for governance. Barry concludes from his research into the 1918 pandemic that to forestall this loss of authority—and perhaps to avoid future, unforeseen repercussions—government leaders should share the unvarnished facts and evolving knowledge of a situation.

Society is ultimately based on trust; during the flu pandemic, “as trust broke down, people became alienated not only from those in authority, but from each other.” Barry continues, “Those in authority must retain the public’s trust. The way to do that is to distort nothing, to put the best face on nothing, to try to manipulate no one.”

Charles Weiss makes a similar argument in his new book, The Survival Nexus: Science, Technology, and World Affairs. Weiss contends that the preventable human and economic losses of the COVID-19 pandemic were the result of politicians avoiding harsh truths: “Political leaders suppressed evidence of virus spread, downplayed the importance of the epidemic and the need to observe measures to protect the health of the population, ignored the opinions of local experts, and publicized bogus ‘cures’—all to avoid economic damage and public panic, but equally importantly to consolidate political power and to show themselves as strong leaders who were firmly in control.” …(More)”.

Article by Julia Cohen: “In just over a month after the change in Twitter leadership, there have been significant changes to the social media platform, in its new “Twitter 2.0” version. For researchers who use Twitter as a primary source of data, including many of the computer scientists at USC’s Information Sciences Institute (ISI), the effects could be debilitating…

Over the years, Twitter has been extremely friendly to researchers, providing and maintaining a robust API (application programming interface) specifically for academic research. The Twitter API for Academic Research allows researchers with specific objectives who are affiliated with an academic institution to gather historical and real-time data sets of tweets, and related metadata, at no cost. Currently, the Twitter API for Academic Research continues to be functional and maintained in Twitter 2.0.

The data obtained from the API provides a means to observe public conversations and understand people’s opinions about societal issues. Luca Luceri, a Postdoctoral Research Associate at ISI called Twitter “a primary platform to observe online discussion tied to political and social issues.” And Twitter touts its API for Academic Research as a way for “academic researchers to use data from the public conversation to study topics as diverse as the conversation on Twitter itself.”

However, if people continue deactivating their Twitter accounts, which appears to be the case, the makeup of the user base will change, with data sets and related studies proportionally affected. This is especially true if the user base evolves in a way that makes it more ideologically homogeneous and less diverse.

According to MIT Technology Review, in the first week after its transition, Twitter may have lost one million users, which translates to a 208% increase in lost accounts. And there’s also the concern that the site might not work as effectively, because of the substantial decrease in the size of the engineering teams. This includes concerns about the durability of the service researchers rely on for data, namely the Twitter API. Jason Baumgartner, founder of Pushshift, a social media data collection, analysis, and archiving platform, said that in several recent API requests, his team saw a significant increase in error rates – in the 25-30% range – when they typically see rates near 1%. Though for now this is anecdotal, it leaves researchers wondering if they will be able to rely on Twitter data for future research.

One example of how the makeup of the less-regulated Twitter 2.0 user base could significantly be altered is if marginalized groups leave Twitter at a higher rate than the general user base, e.g. due to increased hate speech. Keith Burghardt, a Computer Scientist at ISI who studies hate speech online said, “It’s not that an underregulated social media changes people’s opinions, but it just makes people much more vocal. So you will probably see a lot more content that is hateful.” In fact, a study by Montclair State University found that hate speech on Twitter skyrocketed in the week after the acquisition of Twitter….(More)”.

Book by Jeffrey M. Binder: “Bringing together the histories of mathematics, computer science, and linguistic thought, Language and the Rise of the Algorithm reveals how recent developments in artificial intelligence are reopening an issue that troubled mathematicians well before the computer age: How do you draw the line between computational rules and the complexities of making systems comprehensible to people? By attending to this question, we come to see that the modern idea of the algorithm is implicated in a long history of attempts to maintain a disciplinary boundary separating technical knowledge from the languages people speak day to day.

Here Jeffrey M. Binder offers a compelling tour of four visions of universal computation that addressed this issue in very different ways: G. W. Leibniz’s calculus ratiocinator; a universal algebra scheme Nicolas de Condorcet designed during the French Revolution; George Boole’s nineteenth-century logic system; and the early programming language ALGOL, short for algorithmic language. These episodes show that symbolic computation has repeatedly become entangled in debates about the nature of communication. Machine learning, in its increasing dependence on words, erodes the line between technical and everyday language, revealing the urgent stakes underlying this boundary.

The idea of the algorithm is a levee holding back the social complexity of language, and it is about to break. This book is about the flood that inspired its construction…(More)”.

Book edited by Selen A. Ercan et al: “… brings together a wide range of methods used in the study of deliberative democracy. It offers thirty-one different methods that scholars use for theorizing, measuring, exploring, or applying deliberative democracy. Each chapter presents one method by explaining its utility in deliberative democracy research and providing guidance on its application by drawing on examples from previous studies. The book hopes to inspire scholars to undertake methodologically robust, intellectually creative, and politically relevant research. It fills a significant gap in a rapidly growing field of research by assembling diverse methods and thereby expanding the range of methodological choices available to students, scholars, and practitioners of deliberative democracy…(More)”.

Press Release: “On Wednesday, a new Industry Data for Society Partnership (IDSP) was launched by GitHub, Hewlett Packard Enterprise (HPE), LinkedIn, Microsoft, Northumbrian Water Group, R2 Factory and UK Power Networks. The IDSP is a first-of-its-kind cross-industry partnership to help advance more open and accessible private-sector data for societal good. The founding members of the IDSP agree to provide greater access to their data, where appropriate, to help tackle some of the world’s most pressing challenges in areas such as sustainability and inclusive economic growth.

In the past few years, open data has played a critical role in enabling faster research and collaboration across industries and with the public sector. As we saw during COVID-19, pandemic data that was made more open enabled researchers to make faster progress and gave citizens more information to inform their day-to-day activities. The IDSP’s goal is to continue this model into new areas and help address other complex societal challenges. The IDSP will serve as a forum for the participating companies to foster collaboration, as well as a resource for other entities working on related issues.

IDSP members commit to the following:

- To open data or provide greater access to data, where appropriate, to help solve pressing societal problems in a usable, responsible and inclusive manner.

- To share knowledge and information for the effective use of open data and data collaboration for social benefit.

- To invest in skilling a broad class of professionals to use data effectively and responsibly for social impact.

- To protect individuals’ privacy in all these activities.

The IDSP will also bring in other organizations with expertise in societal issues. At launch, The GovLab’s Data Program based at New York University and the Open Data Institute will both be partnership Affiliates to provide guidance and expertise for partnership endeavors…(More)”.

Blog by Anna Ibru and Dane Gambrell at The GovLab: “…In recent years, public institutions around the world have been piloting new youth engagement initiatives like Creamos that tap the expertise and experiences of young people to develop projects, programs, and policies and address complex social challenges within communities.

To learn from and scale best practices from international models of youth engagement, The GovLab has developed case studies about three pathbreaking initiatives: Nuortenbudjetti, Helsinki’s participatory budgeting initiative for youth; Forum Jove BCN, Barcelona’s youth-led citizens’ assembly; and Creamos, an open innovation and coaching program for young social innovators in Chile. For government decision makers and institutions who are looking to engage and empower young people to get involved in their communities, develop real-world solutions, and strengthen democracy, these examples describe these initiatives and their outcomes along with guidance on how to design and replicate such projects in your community. Young people are still a widely untapped resource who are too often left out in policy and program design. The United Nations affirms that it is impossible to meet the UN SDGs by 2030 without active participation of the 1.8 billion youth in the world. Government decision makers and institutions must capitalize on the opportunity to engage and empower young people. The successes of Nuortenbudjetti, Forum Jove BCN, and Creamos provide a roadmap for policymakers looking to engage in this space….(More)” See also: Nuortenbudjetti: Helsinki’s Youth Budget; Creamos: Co-creating youth-led social innovation projects in Chile; and Forum Jove BCN: Barcelona’s Youth Forum.