Stefaan Verhulst

Article by Toluwani Aliu: “From plotting missing bridges in Rwanda to community-championed geospatial initiatives in Nigeria, AI is tackling decades-old local issues…AI-supported platform Darli, which supports 20+ African languages, has given over 110,000 farmers access to advice, logistics and finance…Tailoring AI to underserved areas creates scalable public benefits, fosters equity and offers frameworks for sustainable digital transformation…(More)”.

Paper by Enikő Kovács-Szépvölgyi, Dorina Anna Tóth, and Roland Kelemen: “While the digital environment offers new opportunities to realise children’s rights, their right to participation remains insufficiently reflected in digital policy frameworks. This study analyses the right of the child to be heard in the academic literature and in the existing international legal and EU regulatory frameworks. It explores how children’s participation right is incorporated into EU and national digital policies and examines how genuine engagement can strengthen children’s digital resilience and support their well-being. By applying the 7C model of coping skills and analysing its interaction with the right to participation, the study highlights how these elements mutually reinforce the achievement of the Sustainable Development Goals (SDGs). Through a qualitative analysis of key strategic documents and the relevant policy literature, the research identifies the tension between the formal acknowledgment of children’s right to participate and its practical implementation at law- and policy-making levels within the digital context. Although the European Union’s examined strategies emphasise children’s participation, their practical implementation often remains abstract and fragmented at the state level. While the new BIK+ strategy shows a stronger formal emphasis on child participation, this positive development in policy language has not yet translated into a substantive change in children’s influence at the state level. This nuance highlights that despite a positive trend in policy rhetoric, the essential dimension of genuine influence remains underdeveloped…(More)”. See also: Who Decides What and How Data is Re-Used? Lessons Learned from Youth-Led Co-Design for Responsible Data Reuse in Services

Article by Henry Farrell: “Dan Wang’s new book, Breakneck: China’s Quest to Engineer the Future, came out last week…The biggest lesson I took from Breakneck was not about China, or the U.S., but the importance of “process knowledge.” That is not a concept that features much in the existing debates about trans-Pacific geopolitics, nor in discussions about what America ought to do to revitalize its economy. Dan makes a very strong case that it should.

As I’ve said twice, I’m biased. I’m fascinated by process knowledge and manufacturing because I spent a chunk of the late 1990s talking to manufacturers in Bologna and Baden-Württemberg for my Ph.D. dissertation.

I was carrying out research in the twilight of a long period of interest in so-called “industrial districts,” small localized regions with lots of small firms engaged in a particular sector of the economy. Paul Krugman’s Geography and Trade (maybe my favorite of his books) talks about some of the economic theory behind this form of concentrated production: economic sociologists and economic geographers had their own arguments. Economists, sociologists and geographers all emphasized the crucial importance of local diffuse knowledge about how to do things in making these economies successful. Such knowledge was in part the product of market interactions, but it wasn’t itself a commodity that could be bought and sold. It was more often tacit: a sense of how to do things, and who best to talk to, which could not easily be articulated. The sociologists were particularly interested in the informal institutions, norms and social practices that held this together. They identified different patterns of local institutional development, which the Communist party in Emilia-Romagna and Tuscany, and the Christian Democrats in the Veneto and Marche, had built on to foster vibrant local economies.

I was interested in Bologna because it had a heavy concentration of small manufacturers of packaging machinery, which could be compared, if you squinted a little, with the bigger and more famous cluster of engineering firms around Stuttgart in Germany. There were myriads of small companies in the unlovely industrial outskirts of Bologna, each with its own particular line of products. Most of these companies had been founded by people who had apprenticed and worked for someone else, spotted their own opportunity to iterate on their know-how, and gone independent…(More)”.

Paper by Melisa Basol: “Artificial intelligence (AI) is reshaping digital autonomy. This article examines AI-driven manipulation, exploring its mechanisms, ethical challenges, and potential safeguards. AI systems are increasingly integrated into personal, social, and political domains, shaping decision-making processes. While these systems are often framed as neutral tools, AI systems can manipulate on three levels: (1) structural manipulation, where AI systems shape decisions through design choices like ranking algorithms and engagement-driven models; (2) exploitation by external actors, where AI is leveraged to amplify the spread of harmful falsehoods, automated deception, and personalized manipulation attempts; and (3) emergent manipulation, where AI systems may exhibit unexpected or autonomous influence over users, even in the absence of human intent. The article underscores the lack of a cohesive framework for defining and regulating AI manipulation, complicating efforts to mitigate its risks. To counteract these risks, this article draws on psychological theories of manipulation, persuasion, and social influence while examining the power asymmetries, systemic biases, and epistemic instability that complicate efforts to safeguard digital agency in the age of generative AI. Additionally, this article proposes a tiered intervention approach at three levels: (1) capability-level safeguards; (2) human-interaction interventions; and (3) systemic governance frameworks. Ultimately, this article challenges the prevailing narrative of an AI ‘revolution’, arguing that AI does not inherently democratize knowledge or expand autonomy but instead risks consolidating control under the guise of progress. Effective governance must move beyond transparency towards proactive regulatory mechanisms that prevent manipulation, curb power asymmetries, and protect human agency…(More)”.

Paper by Margot E. Kaminski and Gianclaudio Malgieri: “Privacy law has long centered on the individual. But we observe a meaningful shift toward group harm and rights. There is growing recognition that data-driven practices, including the development and use of artificial intelligence (AI) systems, affect not just atomized individuals but also their neighborhoods and communities, including and especially situationally vulnerable and historically marginalized groups.

This Article explores a recent shift in both data privacy law and the newly developing law of AI: a turn towards stakeholder participation in the governance of AI and data systems, specifically by impacted groups that often, though not always, represent historically marginalized communities. In this Article we chart this development across an array of recent laws in both the United States and the European Union. We explain reasons for the turn, both theoretical and practical. We then offer analysis of the legal scaffolding of impacted stakeholder participation, establishing a catalog of both existing and possible interventions. We close with a call for reframing impacted stakeholders as rights-holders, and for recognizing several variations on a group right to contest AI systems, among other collective means of leveraging and invoking rights individuals have already been afforded…(More)”.

Article by Rainer Kattel: “Europe today faces no shortage of crises. From climate breakdown and geopolitical instability to social fragmentation and digital disruption, the continent is being reshaped by forces that defy easy policy responses. In this increasingly turbulent landscape, innovation is no longer a technocratic pursuit confined to boosting productivity or improving competitiveness, as the consensus of the early twenty-first century prescribed. It has become a political, economic and institutional necessity—one that demands not only new ideas but new ways of organising the public institutions that can turn those ideas into systemic change.

At the heart of this challenge lie innovation agencies. Long the workhorses of science, technology and industrial policy, these agencies—often semi-autonomous and operating at arm’s length from ministries—have traditionally focused on supporting firms, facilitating research and distributing grants. Yet over the past decade, their mandates have expanded dramatically. Now tasked with delivering missions such as decarbonising mobility, transforming food systems or building digital sovereignty, innovation agencies are being asked to operate not just as funders or intermediaries but as architects of change across complex socio-technical systems.

This shift is long overdue. In theory, Europe has embraced the logic of mission-oriented innovation. Horizon Europe, the EU’s flagship research programme, commits over €50 billion to grand societal challenges. The idea is straightforward: set ambitious, shared goals and allow national and regional actors to develop locally appropriate solutions.

But the reality on the ground is starkly different. At the EU level, implementation remains locked in rigid frameworks of compliance and administrative oversight. At the local and regional level, innovation flourishes—labs, pilots and experiments abound—but rarely scales beyond the project phase. The result is a peculiar imbalance: too much stability at the top, too much agility at the bottom and too little capacity in the middle…(More)”.

Report by The Data Tank and Impact Licensing Initiative: “…report develops an overview of mechanisms and components of business and data governance models for health data collaboratives. In this report, we use the terms ‘data collaborative’ or ‘ecosystem for data reuse’ interchangeably to refer to collaborations between different stakeholders across multiple sectors to exchange data in a way that overcomes silos to create public value (Susha et al., 2017).

This report synthesises a rapid literature review of academic, policy, and industry documents, including case studies, to examine governance and business models for health data reuse. We examine in the literature the different dimensions involved in sustaining an ecosystem for data reuse. The report sets these out in the current regulatory context. It also considers the role that mechanisms like a social license and impact licensing play in the sustainable governance of the different business models as essential complements to the regulatory context. It analyses case studies that can be mapped onto these models and offers pathways for a process to decide on a business model…(More)”.

Article by Rob Goodman and Jimmy Soni: “Just what is information? For such an intuitive idea, its precise nature proved remarkably hard to pin down. For centuries, it seemed to hover somewhere in a half-world between the visible and the unseen, the physical and the evanescent, the enduring medium and its fleeting message. It haunted the ancients as much as it did Claude Shannon and his Bell Labs colleagues in New York and New Jersey, who were trying to engirdle the world with wires and telecoms cables in the mid-20th century.

Shannon – mathematician, American, jazz fanatic, juggling enthusiast – is the founder of information theory, and the architect of our digital world. It was Shannon’s paper ‘A Mathematical Theory of Communication’ (1948) that introduced the bit, an objective measure of how much information a message contains…

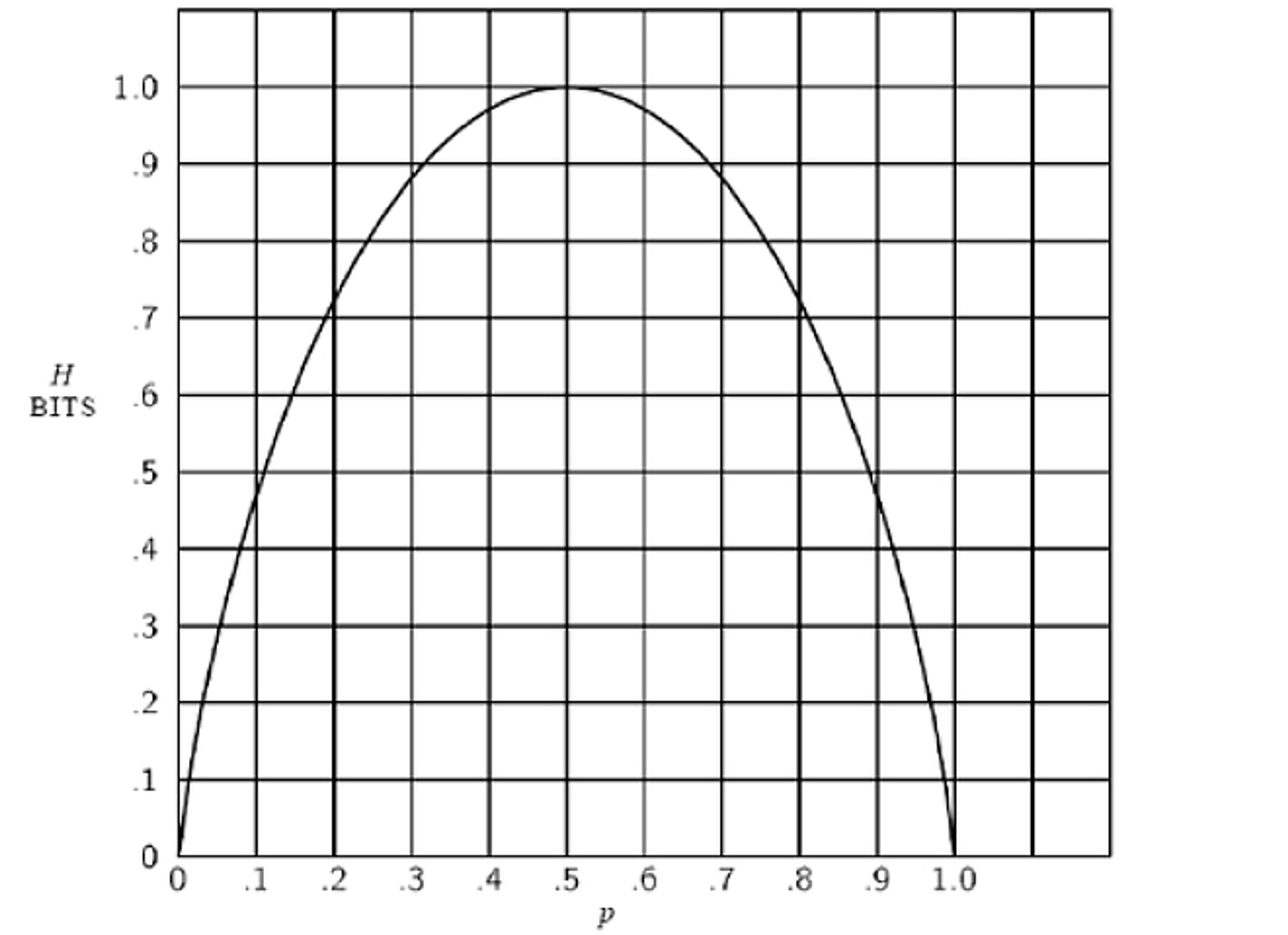

Shannon’s ‘mathematical theory’ sets out two big ideas. The first is that information is probabilistic. We should begin by grasping that information is a measure of the uncertainty we overcome, Shannon said – which we might also call surprise. What determines this uncertainty is not just the size of the symbol vocabulary, as Nyquist and Hartley thought. It’s also about the odds that any given symbol will be chosen. Take the example of a coin toss, the simplest thing Shannon could come up with as a ‘source’ of information. A fair coin carries two choices with equal odds; we could say that such a coin, or any ‘device with two stable positions’, stores one binary digit of information. Or, using an abbreviation suggested by one of Shannon’s co-workers, we could say that it stores one bit.

But the crucial step came next. Shannon pointed out that most of our messages are not like fair coins. They are like weighted coins. A biased coin carries less than one bit of information, because the result of any flip is less surprising. Shannon illustrated the point with this graph. You see that the amount of information conveyed by our coin flip (on the y-axis) reaches its apex when the odds are 50-50, represented as 0.5 on the x-axis; but as the outcome grows more predictable in either direction depending on the size of the bias, the information carried by the coin steadily declines.
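The curve Shannon drew is the binary entropy function, H(p) = -p log2(p) - (1 - p) log2(1 - p), where p is the probability of heads. A minimal Python sketch of it follows; the function name `binary_entropy` is our own illustrative choice rather than anything from the article, and it adopts the usual convention that an outcome with probability 0 or 1 contributes no information.

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy, in bits, of a coin that lands heads with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    if p in (0.0, 1.0):
        # A certain outcome carries no surprise (convention: 0 * log2(0) = 0).
        return 0.0
    q = 1.0 - p
    return -(p * math.log2(p) + q * math.log2(q))


if __name__ == "__main__":
    # Information peaks at a fair coin and falls as the bias grows.
    for p in (0.5, 0.7, 0.9, 0.99):
        print(f"p(heads) = {p:<4} -> {binary_entropy(p):.3f} bits")
```

A fair coin (p = 0.5) yields exactly one bit, and the function is symmetric around 0.5: a coin biased 70/30 toward heads is as informative as one biased 70/30 toward tails, which matches the shape of the graph.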

The messages humans send are more like weighted coins than unweighted coins, because the symbols we use aren’t chosen at random, but depend in probabilistic ways on what preceded them. In images that resemble something other than TV static, dark pixels are more likely to appear next to dark pixels, and light next to light. In written messages that are something other than random strings of text, each letter has a kind of ‘pull’ on the letters that follow it…(More)”.
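One rough way to put a number on that ‘pull’ is to compare the entropy of a letter on its own with its entropy once the preceding letter is known. The Python sketch below does this for a short sample string using the chain rule H(next | prev) = H(prev, next) - H(prev). It is our illustration rather than a method described in the article; the names `entropy` and `letter_entropies` are hypothetical, and a string this short gives only crude estimates.

```python
import math
from collections import Counter


def entropy(counts: Counter) -> float:
    """Entropy, in bits, of the empirical distribution given by `counts`."""
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def letter_entropies(text: str) -> tuple[float, float]:
    """Return (H(next letter), H(next letter | previous letter)) in bits,
    both estimated from the same adjacent-letter pairs of `text`."""
    pairs = list(zip(text, text[1:]))
    h_joint = entropy(Counter(pairs))                     # H(prev, next)
    h_prev = entropy(Counter(prev for prev, _ in pairs))  # H(prev)
    h_next = entropy(Counter(nxt for _, nxt in pairs))    # H(next)
    # Chain rule: H(next | prev) = H(prev, next) - H(prev)
    return h_next, h_joint - h_prev


if __name__ == "__main__":
    sample = "the cat sat on the mat and the rat ate the hat"
    h_letter, h_given_prev = letter_entropies(sample)
    print(f"H(letter)                   = {h_letter:.2f} bits")
    print(f"H(letter | previous letter) = {h_given_prev:.2f} bits")
```

Because both estimates come from the same pairs, the conditional figure can never exceed the unconditional one; the gap between them measures how much knowing the previous letter reduces surprise. For a perfectly regular string such as "abababab", the conditional entropy drops to zero.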

Paper by Scott E Page: “Recent breakthroughs in AI combined with steady advances in information technology change the physics of organizational and institutional design. Discussions and deliberations can now include people in disparate locations speaking simultaneously. This opens up new possible designs for interactions that will enhance our ability to produce collective intelligence.

How do we make collective intelligence happen? Who should be in the room, and how do we design and structure their interactions? Design-minded social scientists approach these questions by embedding them within a variety of formal frameworks. Economists design markets and matching processes to produce efficient, fair allocations with aligned incentives. Political scientists create voting rules to select winners with broad support. Organizational scientists design communication and authority structures capable of generating innovative solutions and thoughtful strategic decisions.

These design-minded social scientists operate within sets of constraints. Some are cognitive. People can only store, attend to, and process so much information. Some are physical. Rooms can only be so large. Some are temporal. Everyone must be available Tuesday at 4pm. These constraints limit possible designs, and that reduces the efficiency, fairness, representativeness, and innovativeness of the outcomes that we might achieve.

Those constraints have now changed. Recent breakthroughs in Artificial Intelligence (AI) combined with steady advancements in information technologies have altered the physics of organizational and institutional design (Farrell et al., 2025). In doing so, they have expanded the set of possible designs. The logic is straightforward: Removing constraints allows us to achieve more…(More)”.

Paper by Tara Cookson and Ruth Carlitz: “In 2013, the United Nations called for a “Data Revolution” to advance sustainable development. “Data for Good” initiatives that have followed bring together development and humanitarian actors with technology companies. Few studies have examined the composition of Data for Good partnerships or assessed the uptake and use of the data they generate. We help fill this gap with a case study of Meta’s (then Facebook) Survey on Gender Equality at Home, which reached over half a million Facebook users in more than 200 countries. The survey was developed in partnership with international development and humanitarian organizations. Our study is uniquely informed by our involvement in this partnership: we contributed subject matter expertise to the development of the survey and advised on dissemination strategies for the resulting data, which we also analyzed in our own academic work. We complement this autoethnographic perspective with insights from scholars of partnerships for development, and a practitioner framework to understand the factors connecting data to action. We find that including multiple partners can widen the scope of a project such that it gains breadth but loses depth. In addition, while it is (somewhat) possible to quantify the impact of a Data for Good partnership in terms of data use, “goodness” can also be assessed in terms of the process of producing data. Specifically, collaborations between organizations with different interests and resources may be of significant social value, particularly when they learn from one another—even if such goodness is harder to quantify…(More)”.