Stefaan Verhulst

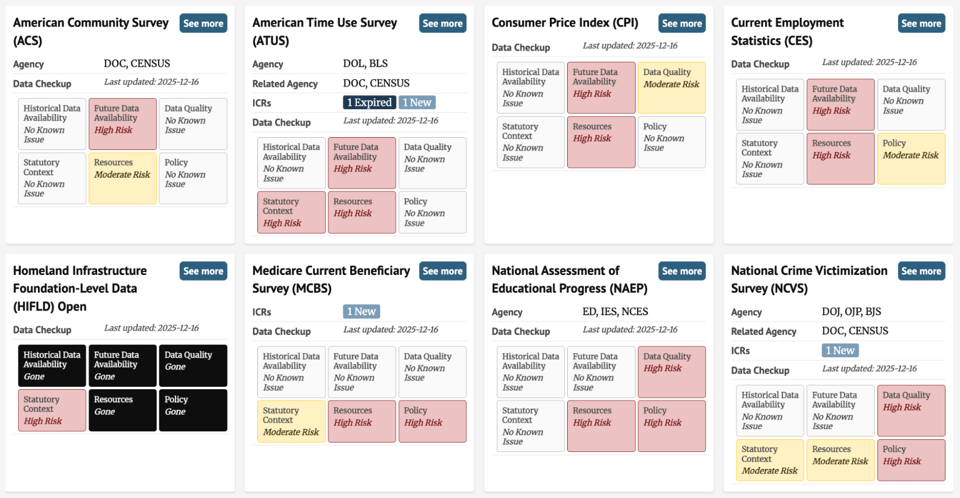

Tool by dataindex.us: “… excited to launch the Data Checkup – a comprehensive framework for assessing the health of federal data collections, highlighting key dimensions of risk and presenting a clear status of data well-being.

When we started dataindex.us, one of our earliest tools was a URL tracker: a simple way to monitor whether a webpage or data download link was up or down. In early 2025, that kind of monitoring became urgent as thousands of federal webpages and datasets went dark.

As many of those pages came back online, often changed from their original form, we realized URL tracking wasn’t sufficient. Threats to federal data are coming from multiple directions, including loss of capacity, reduced funding, targeted removal of variables, and the termination of datasets that don’t align with administration priorities.

The more important question became: how do we assess the risk that a dataset might disappear, change, or degrade in the future? We needed a way to evaluate the health of a federal dataset that was broad enough to apply across many types of data, yet specific enough to capture the different ways datasets can be put at risk. That led us to develop the Data Checkup.

Once we had an initial concept, we brought together experts from across the data ecosystem to get feedback on that concept. The current Data Checkup framework reflects the feedback received from more than 30 colleagues.

The result is a framework built around six dimensions:

- Historical Data Availability

- Future Data Availability

- Data Quality

- Statutory Context

- Staffing and Funding

- Policy

Each dimension is assessed and assigned a status that communicates its level of risk:

- Gone

- High Risk

- Moderate Risk

- No Known Issue

Together, this assessment provides a more complete picture of dataset health than availability checks alone.

The Data Checkup is designed to serve the needs of both data users and data advocates. It supports a wide range of use cases, including academic research, policy decision-making, journalism, advocacy, and litigation…Here you can see the Data Checkup framework applied to a subset of datasets. At a high level, it provides a snapshot of dataset wellbeing, allowing you to quickly identify which datasets are facing risks…(More)”

Article by Oliver Roeder: “…From childhood, maps present the wooden feel of permanence. A globe sits on the sideboard. A teacher yanks down a spooled world from above the chalkboard, year after year. Road atlases are forever wedged in seat-back pockets. But maps, of course, could always be changing. In the short term, buildings and roads are built. In the medium term, territory is conquered and nations fall and are founded. In the long term, rivers change course and glaciers melt and mountains rise. In this era of conflicts in Ukraine and the Middle East, the undoing of national and international orders, and technological upheaval, a change in maps appears to be accelerating.

The world is impossible to map perfectly — too detailed, too spherical, too much fractal coastline. A map is necessarily a model. In one direction, the model asymptotes to the one-to-one scale map from the Jorge Luis Borges story that literally covers the land, a map of the empire the size of the empire, which proves useless and is left in tatters across the desert. There is a limit in the other direction too, not map expanded to world, but world collapsed into map — pure abstraction on a phone screen, obscuring the real world outside, geodata for delivery companies and rideshare apps.

But if maps abstract the world, their makers demand and encourage a close association with it. There must eventually be a search for the literal ground truth. As the filmmaker Carl Theodor Dreyer once said, “You can’t simplify reality without understanding it first.” Cartography, in turn, informs interactions with the world…(More)”.

Essay by Nick Vlahos: “…The consistent challenge of scale often misidentifies the main constraint in modern democracy. The binding limit on mass deliberation is not simply that there are “too many people” spread across a large territory. It is also not that masses are inherently poor deliberators and completely prone to be swayed by demagogues. Surely, it is not hard to appreciate why large amounts of people might not be able to govern large territories. However, as I see it, the problem is that modern societies have organised time as if democracy were an after-hours activity, and organised political economy as if participation were a luxury rather than a public obligation. Put differently, the claim that “a million people cannot deliberate” often means something more prosaic: a million people cannot all stop working, travel, prepare, deliberate, and return to work without destabilising the economy. It is not that people cannot be organised in masses for conversation, because we have enough theoretical and practical resources to design such processes, both in-person and online…(More)”.

Book by Hélène Landemore: “Bought by special interests, detached from real life, obsessed with reelection. Politicians make big promises, deliver little to nothing, and keep the game rigged in their favor. But what can we do?

In Politics Without Politicians, acclaimed political theorist Hélène Landemore asks and answers a radical question: What if we didn’t need politicians at all? What if everyday people—under the right conditions—could govern much better?

With disarming clarity and a deep sense of urgency, Landemore argues that electoral politics is broken but democracy isn’t. We’ve just been doing it wrong. Drawing on ancient Athenian practices and contemporary citizens’ assemblies, Landemore champions an alternative approach that is alive, working, and growing around the world: civic lotteries that select everyday people to govern—not as career politicians but as temporary stewards of the common good.

When regular citizens come together in this way, they make smarter, fairer, more forward-thinking decisions, often bringing out the best in one another. Witnessing this process firsthand, Landemore has learned that democracy should be like a good party where even the shyest guests feel welcome to speak, listen, and be heard.

With sharp analysis and real-world examples, drawing from her experience with deliberative processes in France and elsewhere, Landemore shows us how to move beyond democracy as a spectator sport, embracing it as a shared practice—not just in the voting booth but in shaping the laws and policies that govern our lives.

This is not a book about what’s wrong—it’s a manifesto for what’s possible. If you’ve ever felt powerless, Politics Without Politicians will show you how “We the People” take back democracy…(More)”.

Paper by Iiris Lehto: “The datafication of healthcare and social welfare services has increased the demand for data care work. Data care work denotes the practical, hands-on labour of caring for data. Drawing on ethnographic material from a data team, this article examines its mundane practices within a wellbeing services county in Finland, with a focus on the sticking points that constitute the dark side of data care work. These sticking points stem mainly from organisational factors, regulatory and policy changes, and technical challenges that frequently intersect. The analysis further suggests that the sticking points reflect persistent struggles to maintain data quality, a task that is central to data care work. Inaccurate data can produce biased decisions, particularly in such areas as funding and care workforce allocation. Acknowledging this often-hidden labour is crucial for understanding how data infrastructures function in everyday healthcare and social welfare settings. As a relatively new approach in care research, the concept of data care work enables a broader examination of the implications of datafication for these services…(More)”.

Paper by Xiao Xiang Zhu, Sining Chen, Fahong Zhang, Yilei Shi, and Yuanyuan Wang: “We introduce GlobalBuildingAtlas, a publicly available dataset providing global and complete coverage of building polygons, heights and Level of Detail 1 (LoD1) 3D building models. This is the first open dataset to offer high quality, consistent, and complete building data in 2D and 3D form at the individual building level on a global scale. Towards this dataset, we developed machine learning-based pipelines to derive building polygons and heights (called GBA.Height) from global PlanetScope satellite data, respectively. Also a quality-based fusion strategy was employed to generate higher-quality polygons (called GBA.Polygon) based on existing open building polygons, including our own derived one. With more than 2.75 billion buildings worldwide, GBA.Polygon surpasses the most comprehensive database to date by more than 1 billion buildings…(More)”.

About: “…At the heart of JAIGP lies a commitment to learning through collaborative exploration. We believe that understanding emerges not from perfect knowledge, but from thoughtful inquiry conducted in partnership with both humans and AI systems.

In this space, we embrace productive uncertainty. We recognize that AI-generated research challenges traditional notions of authorship, creativity, and expertise. Rather than pretending to have all the answers, we invite researchers, thinkers, and curious minds to join us in exploring these questions together.

Every paper submitted to JAIGP represents an experiment in human-AI collaboration. Some experiments will succeed brilliantly; others will teach us valuable lessons. All contributions help us understand the evolving landscape of AI-assisted research. Through this collective exploration, we learn not just about our research topics, but about the very nature of knowledge creation in the age of AI…(More)”.

Book by Tom Griffiths: “Everyone has a basic understanding of how the physical world works. We learn about physics and chemistry in school, letting us explain the world around us in terms of concepts like force, acceleration, and gravity—the Laws of Nature. But we don’t have the same fluency with concepts needed to understand the world inside us—the Laws of Thought. While the story of how mathematics has been used to reveal the mysteries of the universe is familiar, the story of how it has been used to study the mind is not.

There is no one better to tell that story than Tom Griffiths, the head of Princeton’s AI Lab and a renowned expert in the field of cognitive science. In this groundbreaking book, he explains the three major approaches to formalizing thought—rules and symbols, neural networks, and probability and statistics—introducing each idea through the stories of the people behind it. As informed conversations about thought, language, and learning become ever more pressing in the age of AI, The Laws of Thought is an essential read for anyone interested in the future of technology…(More)”.

The Case for Innovating and Institutionalizing How We Define Questions by Stefaan Verhulst:

“In an age defined by artificial intelligence, data abundance, and evidence-driven rhetoric, it is tempting to believe that progress depends primarily on better answers. Faster models. Larger datasets. More sophisticated analytics. Yet many of the most visible failures in policy, innovation, and public trust today share a quieter origin: not bad answers, but badly framed questions.

What societies choose to ask, or fail to ask, determines what gets measured, what gets funded, and ultimately what gets built. Agenda-setting is not a preliminary step to governance; it is governance. And yet, despite its importance, the practice of defining questions remains largely informal, opaque, and captured by a narrow set of actors.

This is the gap the 100 Questions initiative seeks to address. Its aim is not philosophical reflection for its own sake, but something decidedly practical: accelerating innovation, research, and evidence-based decision-making by improving the way problems are framed in the first place.

Why questions matter more than we admit

Every major public decision begins long before legislation is drafted or funding allocated. It begins with scoping — deciding what the problem actually is. It moves to prioritization — choosing which issues deserve attention now rather than later. And it culminates in structuring the quest for evidence — determining what kinds of data, research, or experimentation are needed to move forward…(More)”.

Resource by Stefaan Verhulst and Adam Zable: “In today’s AI-driven world, the reuse of data beyond its original purpose is no longer exceptional – it is foundational. Data collected for one context is now routinely combined, shared, or repurposed for others. While these practices can create significant public value, many data reuse initiatives face persistent gaps in legitimacy.

Existing governance tools, often centered on individual, point-in-time consent, do not reflect the collective and evolving nature of secondary data use. They also provide limited ways for communities to influence decisions once data is shared, particularly as new risks, technologies, partners, or use cases emerge. Where consent is transactional and static, governing data reuse requires mechanisms that are relational and adaptive.

A social license for data re-use responds to this challenge by framing legitimacy as an ongoing relationship between data users and affected communities. It emphasizes the importance of clearly articulated expectations around purpose, acceptable uses, safeguards, oversight, and accountability, and of revisiting those expectations as circumstances change.

To support this work in practice, The GovLab has now released Operationalizing a Social License for Data Re-Use: Questions to Signal and Capture Community Preferences and Expectations. This new facilitator’s guide is designed for people who convene and lead engagement around data reuse and need practical tools to support those conversations early.

The guide focuses on the first phase of operationalizing a social license: establishing community preferences and expectations. It provides a structured worksheet and facilitation guidance to help practitioners convene deliberative sessions that uncover priorities, acceptable uses, safeguards, red lines, and conditions for reuse as data is shared, combined, or scaled…(More)”.