Stefaan Verhulst

Article by Emanuel Maiberg: “The report, titled “Are AI Bots Knocking Cultural Heritage Offline?” was written by Weinberg of the GLAM-E Lab, a joint initiative between the Centre for Science, Culture and the Law at the University of Exeter and the Engelberg Center on Innovation Law & Policy at NYU Law, which works with smaller cultural institutions and community organizations to build open access capacity and expertise. GLAM is an acronym for galleries, libraries, archives, and museums. The report is based on a survey of 43 institutions with open online resources and collections in Europe, North America, and Oceania. Respondents also shared data and analytics, and some followed up with individual interviews. The data is anonymized so institutions could share information more freely, and to prevent AI bot operators from undermining their countermeasures.

Of the 43 respondents, 39 said they had experienced a recent increase in traffic. Twenty-seven of those 39 attributed the increase in traffic to AI training data bots, with an additional seven saying the AI bots could be contributing to the increase.

“Multiple respondents compared the behavior of the swarming bots to more traditional online behavior such as Distributed Denial of Service (DDoS) attacks designed to maliciously drive unsustainable levels of traffic to a server, effectively taking it offline,” the report said. “Like a DDoS incident, the swarms quickly overwhelm the collections, knocking servers offline and forcing administrators to scramble to implement countermeasures. As one respondent noted, ‘If they wanted us dead, we’d be dead.’”…(More)”
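
The survey counts quoted above can be read as shares of the sample. The following is a minimal sketch of that arithmetic, using only the four counts given in the excerpt; the variable names and the `share` helper are illustrative and do not come from the report.

```python
# Counts as quoted in the excerpt above; names are illustrative only.
respondents = 43            # institutions surveyed
reported_increase = 39      # said they saw a recent increase in traffic
attributed_to_ai_bots = 27  # of those 39, attributed it to AI training data bots
possibly_ai_bots = 7        # of those 39, said AI bots could be contributing


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Share of all respondents reporting a traffic increase.
increase_share = share(reported_increase, respondents)
# Share of those reporting an increase who attributed it to AI bots.
ai_share_of_increase = share(attributed_to_ai_bots, reported_increase)
# Same, but also counting those who said AI bots could be contributing.
ai_or_possible_share = share(attributed_to_ai_bots + possibly_ai_bots, reported_increase)
# Share of the whole sample attributing an increase to AI bots.
ai_share_of_all = share(attributed_to_ai_bots, respondents)

print(increase_share, ai_share_of_increase, ai_or_possible_share, ai_share_of_all)
```

Which denominator is used matters here: the 27 institutions are measured against the 39 that reported an increase in the excerpt, not against the full sample of 43.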

Paper by Daron Acemoglu, Asuman Ozdaglar & James Siderius: “We consider the political consequences of the use of artificial intelligence (AI) by online platforms engaged in social media content dissemination, entertainment, or electronic commerce. We identify two distinct but complementary mechanisms, the social media channel and the digital ads channel, which together and separately contribute to the polarization of voters and consequently the polarization of parties. First, AI-driven recommendations aimed at maximizing user engagement on platforms create echo chambers (or “filter bubbles”) that increase the likelihood that individuals are not confronted with counter-attitudinal content. Consequently, social media engagement makes voters more polarized, and then parties respond by becoming more polarized themselves. Second, we show that party competition can encourage platforms to rely more on targeted digital ads for monetization (as opposed to a subscription-based business model), and such ads in turn make the electorate more polarized, further contributing to the polarization of parties. These effects do not arise when one party is dominant, in which case the profit-maximizing business model of the platform is subscription-based. We discuss the impact regulations can have on the polarizing effects of AI-powered online platforms…(More)”.

Paper by Jorrit de Jong, Fernando Fernandez-Monge et al: “Over the last decades, scholars and practitioners have focused their attention on the use of data for improving public action, with a renewed interest in the emergence of big data and artificial intelligence. The potential of data is particularly salient in cities, where vast amounts of data are being generated from traditional and novel sources. Despite this growing interest, there is a need for a conceptual and operational understanding of the beneficial uses of data. This article presents a comprehensive and precise account of how cities can use data to address problems more effectively, efficiently, equitably, and in a more accountable manner. It does so by synthesizing and augmenting current research with empirical evidence derived from original research and learnings from a program designed to strengthen city governments’ data capacity. The framework can be used to support longitudinal and comparative analyses as well as explore questions such as how different uses of data employed at various levels of maturity can yield disparate outcomes. Practitioners can use the framework to identify and prioritize areas in which building data capacity might further the goals of their teams and organizations…(More)”

About: “The GYPI Report offers a powerful, data-driven overview of youth political participation in over 141 countries. From voting rights to civic activism, the report explores how young people engage in politics and where gaps persist. Inside, you’ll find:

- Global rankings and country-level scores across four key dimensions of youth participation: Socio-Economic, Civic Space, Political Affairs and Elections,

- Regional insights and thematic trends,

- Actionable recommendations for policymakers, civil society, and international organisations.

Whether you’re a decision-maker, activist, researcher, or advocate, the report gives you the tools to better understand and strengthen youth participation in public life…(More)”.

Report by the UN Sustainable Development Solutions Network (SDSN): “Ten years after the adoption of the Sustainable Development Goals (SDGs), progress remains alarmingly off-track, with less than 20% of targets projected to be achieved by 2030…The SDR includes the SDG Index and Dashboards, which rank all UN Member States on their performance across the 17 Goals, and this year’s report features a new Index (SDGi), which focuses on 17 headline indicators to track overall SDG progress over time…This year’s SDR highlights five key findings:

The Global Financial Architecture (GFA) must be urgently reformed to finance global public goods and achieve sustainable development. Roughly half the world’s population resides in countries that cannot adequately invest in sustainable development due to unsustainable debt burdens and limited access to affordable, long-term capital. Sustainable development is a high-return investment, yet the GFA continues to direct capital toward high-income countries instead of EMDEs, which offer stronger growth prospects and higher returns. Global public goods also remain significantly underfinanced. The upcoming FfD4 offers a critical opportunity for UN Member States to reform this system and ensure that international financing flows at scale to EMDEs to achieve sustainable development…

At the global level, SDG progress has stalled; none of the 17 Global Goals are on track, and only 17% of the SDG targets are on track to be achieved by 2030. Conflicts, structural vulnerabilities, and limited fiscal space continue to hinder progress, especially in emerging and developing economies (EMDEs). The five targets showing significant reversal in progress since 2015 include: obesity rate (SDG 2), press freedom (SDG 16), sustainable nitrogen management (SDG 2), the red list index (SDG 15), and the corruption perception index (SDG 16). Conversely, many countries have made notable progress in expanding access to basic services and infrastructure, including: mobile broadband use (SDG 9), access to electricity (SDG 7), internet use (SDG 9), under-5 mortality rate (SDG 3), and neonatal mortality (SDG 3). However, future progress on many of these indicators, including health-related outcomes, is threatened by global tensions and the decline in international development finance.

Barbados leads again in UN-based multilateralism commitment, while the U.S. ranks last. The SDR 2025’s Index of countries’ support to UN-based multilateralism (UN-Mi) ranks countries based on their support for and engagement with the UN system. The top three countries most committed to UN multilateralism are: Barbados (#1), Jamaica (#2), and Trinidad and Tobago (#3). Among G20 nations, Brazil (#25) ranks highest, while Chile (#7) leads among OECD countries. In contrast, the U.S., which recently withdrew from the Paris Climate Agreement and the World Health Organization (WHO) and formally declared its opposition to the SDGs and the 2030 Agenda, ranks last (#193) for the second year in a row…(More)”

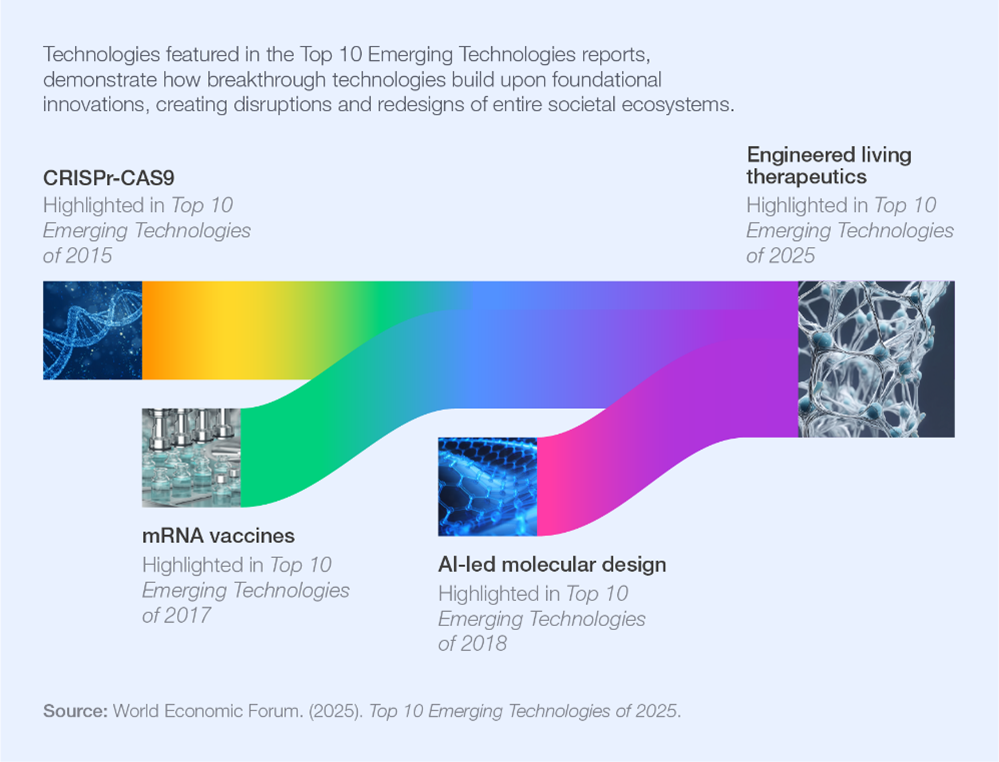

World Economic Forum: “As cities become more connected, collaborative sensing is enabling vehicles, traffic systems and emergency services to coordinate in real time – improving safety and easing congestion. This is just one of the World Economic Forum’s Top 10 Emerging Technologies of 2025 that are expected to deliver real-world impact within three to five years and address urgent global challenges… The report outlines what is needed to bring them to scale: investment, infrastructure, standards and responsible governance, and calls on business, government and the scientific community to collaborate to ensure their development serves the public good.

This year’s edition highlights a trend towards technology convergence. For example, structural battery composites combine energy storage with structural design, while engineered living therapeutics merge synthetic biology and precision medicine. Such integration signals a shift away from standalone innovations to more integrated systems-based solutions, reshaping what is possible.

“The path from breakthrough research to tangible societal progress depends on transparency, collaboration, and open science,” said Frederick Fenter, Chief Executive Editor, Frontiers. “Together with the World Economic Forum, we have once again delivered trusted, evidence-based insights on emerging technologies that will shape a better future for all.”

The Top 10 Emerging Technologies of 2025

Trust and safety in a connected world:

1. Collaborative sensing

Networks of connected sensors can help vehicles, cities and emergency services share information in real time. This can improve safety, reduce traffic and respond faster to crises.

2. Generative watermarking

This technology adds invisible tags to AI-generated content, making it easier to tell what is real and what is not. It could help fight misinformation and protect trust online…(More)”.

Creative Commons: “Creative Commons (CC) today announces the public kickoff of the CC signals project, a new preference signals framework designed to increase reciprocity and sustain a creative commons in the age of AI. The development of CC signals represents a major step forward in building a more equitable, sustainable AI ecosystem rooted in shared benefits. This step is the culmination of years of consultation and analysis. As we enter this new phase of work, we are actively seeking input from the public.

As artificial intelligence (AI) transforms how knowledge is created, shared, and reused, we are at a fork in the road that will define the future of access to knowledge and shared creativity. One path leads to data extraction and the erosion of openness; the other leads to a walled-off internet guarded by paywalls. CC signals offer another way, grounded in the nuanced values of the commons expressed by the collective.

Based on the same principles that gave rise to the CC licenses and tens of billions of works openly licensed online, CC signals will allow dataset holders to signal their preferences for how their content can be reused by machines based on a set of limited but meaningful options shaped in the public interest. They are both a technical and legal tool and a social proposition: a call for a new pact between those who share data and those who use it to train AI models.

“CC signals are designed to sustain the commons in the age of AI,” said Anna Tumadóttir, CEO, Creative Commons. “Just as the CC licenses helped build the open web, we believe CC signals will help shape an open AI ecosystem grounded in reciprocity.”

CC signals recognize that change requires systems-level coordination. They are tools that will be built for machine and human readability, and are flexible across legal, technical, and normative contexts. However, at their core CC signals are anchored in mobilizing the power of the collective. While CC signals may range in enforceability, legally binding in some cases and normative in others, their application will always carry ethical weight that says we give, we take, we give again, and we are all in this together.…

Now Ready for Feedback

More information about CC signals and early design decisions is available on the CC website. We are committed to developing CC signals transparently and alongside our partners and community. We are actively seeking public feedback and input over the next few months as we work toward an alpha launch in November 2025….(More)”

Book edited by Rita Guerreiro Teixeira et al: “…explores the opportunities and challenges of implementing inclusive rule-making processes in international organisations (IOs). Expert authors examine the impact of inclusiveness across a wide range of organisations and policy issues, from climate change and peace and security to energy governance and securities regulation.

Chapters combine novel academic research with insights from IO practitioners to identify ways of making rule-making more inclusive, building on the ongoing work of the Partnership of International Organisations for Effective International Rule-Making. They utilise both qualitative and quantitative research methods to analyse the functions and consequences of inclusive rule-making; mechanisms for citizen participation; and the challenges of engaging with private actors and for-profit stakeholders. Ultimately, the book highlights key strategies for maintaining favourable public perceptions and trust in international institutions, emphasizing the importance of making rule-making more accountable, legitimate and accessible…(More)”.

Article by Don Moynihan: “From 2016 to 2020, the Australian government operated an automated debt assessment and recovery system, known as “Robodebt,” to recover fraudulent or overpaid welfare benefits. The goal was to save $4.77 billion through debt recovery and reduced public service costs. However, the algorithm and policies at the heart of Robodebt caused wildly inaccurate assessments, and administrative burdens that disproportionately impacted those with the least resources. After a federal court ruled the policy unlawful, the government was forced to terminate Robodebt and agree to a $1.8 billion settlement.

Robodebt is important because it is an example of a costly failure with automation. By automation, I mean the use of data to create digital defaults for decisions. This could involve the use of AI, or it could mean the use of algorithms reading administrative data. Cases like Robodebt serve as canaries in the coalmine for policymakers interested in using AI or algorithms as a means to downsize public services on the hazy notion that automation will pick up the slack. But I think they are missing the very real risks involved.

To be clear, the lesson is not “all automation is bad.” Indeed, it offers real benefits in potentially reducing administrative costs and hassles and increasing access to public services (e.g., the use of automated or “ex parte” renewals for Medicaid, which Republicans are considering limiting in their new budget bill). It is this promise that makes automation so attractive to policymakers. But it is also the case that automation can be used to deny access to services, and to put people into digital cages that are burdensome to escape from. This is why we need to learn from cases where it has been deployed.

The experience of Robodebt underlines the dangers of using citizens as lab rats to adopt AI on a broad scale before it has been proven to work. Alongside the parallel collapse of the Dutch government childcare system, Robodebt provides an extraordinarily rich text to understand how automated decision processes can go wrong.

I recently wrote about Robodebt (with co-authors Morten Hybschmann, Kathryn Gimborys, Scott Loudin, Will McClellan), both in the journal Perspectives on Public Management and Governance and as a teaching case study at the Better Government Lab...(More)”.

Paper by Kim M. Pepin: “Every decision a person makes is based on a model. A model is an idea about how a process works based on previous experience, observation, or other data. Models may not be explicit or stated (Johnson-Laird, 2010), but they serve to simplify a complex world. Models vary dramatically from conceptual (idea) to statistical (mathematical expression relating observed data to an assumed process and/or other data) or analytical/computational (quantitative algorithm describing a process). Predictive models of complex systems describe an understanding of how systems work, often in mathematical or statistical terms, using data, knowledge, and/or expert opinion. They provide means for predicting outcomes of interest, studying different management decision impacts, and quantifying decision risk and uncertainty (Berger et al. 2021; Li et al. 2017). They can help decision-makers assimilate how multiple pieces of information determine an outcome of interest about a complex system (Berger et al. 2021; Hemming et al. 2022).

People rely daily on system-level models to reach objectives. Choosing the fastest route to a destination is one example. Such a decision may be based on either a mental model of the road system developed from previous experience or a traffic prediction mapping application based on mathematical algorithms and current data. Either way, a system-level model has been applied and there is some uncertainty. In contrast, predicting outcomes for new and complex phenomena, such as emerging disease spread, a biological invasion risk (Chen et al. 2023; Elderd et al. 2006; Pepin et al. 2022), or climatic impacts on ecosystems is more uncertain. Here public service decision-makers may turn to mathematical models when expert opinion and experience do not resolve enough uncertainty about decision outcomes. But using models to guide decisions also relies on expert opinion and experience. Also, even technical experts need to make modeling choices regarding model structure and data inputs that have uncertainty (Elderd et al. 2006) and these might not be completely objective decisions (Bedson et al. 2021). Thus, using models for guiding decisions has subjectivity from both the developer and end-user, which can lead to apprehension or lack of trust about using models to inform decisions.

Models may be particularly advantageous to decision-making in One Health sectors, including health of humans, agriculture, wildlife, and the environment (hereafter called One Health sectors) and their interconnectedness (Adisasmito et al. 2022)…(More)”.