Stefaan Verhulst

Allison Schrager at Quartz: “Forecasts rely on data from the past, and while we now have better data than ever—and better techniques and technology with which to measure them—when it comes to forecasting, in many ways, data has never been more useless. And as data become more integral to our lives and the technology we rely upon, we must take a harder look at the past before we assume it tells us anything about the future.

To some extent, the weaknesses of data have always existed. Data are, by definition, information about what has happened in the past. Because populations and technology are constantly changing, they alter how we respond to incentives, policy, opportunities available to us, and even social cues. This undermines the accuracy of everything we try to forecast: elections, financial markets, even how long it will take to get to the airport.

But there is reason to believe we are experiencing more change than before. The economy is undergoing a major structural change by becoming more globally integrated, which increases some risks while reducing others, while technology has changed how we transact and communicate. I’ve written before how it’s now impossible for the movie industry to forecast hit films. Review-aggregation site Rotten Tomatoes undermines traditional marketing plans and the rise of the Chinese market means film makers must account for different tastes. Meanwhile streaming has changed how movies are consumed and who watches them. All these changes mean data from 10, or even five, years ago tell producers almost nothing about movie-going today.

We are in the age of big data that offers the promise of more accurate predictions. But it seems in some of the most critical aspects of our lives, data has never been more wrong….(More)”.

Geoff Mulgan et al at Nesta: “It builds on work Nesta has done in many fields – from health and culture to public services – to find more rounded and realistic ways of capturing the many dimensions of value created by public action. It is relevant to our work influencing governments and charities as well as to our own work as a funder, since our status as a charity commits us to creating public benefit.

Our aim in this work is to make value more transparent and more open to interrogation, whether that concerns libraries, bicycle lanes, museums, primary health services or training programmes for the unemployed. We recognise that value may come from government action; it can also be created by others, in civil society and business. And we recognise that value can often be complex, whether in terms of who benefits, or how it relates to values, as well as more technical issues such as what discount rates to apply.

But unless value is attended to explicitly, we risk ending up with unhappy results….(More)”.

Chapter by Oldrich Bubak and Henry Jacek in Trivialization and Public Opinion: “Democracy (Re)Imagined begins with a brief review of opinion surveys, which, over the recent decades, indicate steady increases in the levels of mistrust of the media, skepticism of the government’s effectiveness, and the public’s antipathy toward politics. It thus continues to explore the realities and the logic behind these perspectives. What can be done to institute good governance and renew the faith in the democratic system? It is becoming evident that rather than relying on the idea of more democracy, governance for the new age is smart, bringing in people where they are most capable and engaged. Here, the focus is primarily on the United States providing an extreme case in the evolution of democratic systems and a rationale for revisiting the tenets of governance.

Earlier, we have identified some deep lapses in public discourse and alluded to a number of negative political and policy outcomes across the globe. It may thus not be a revelation that the past several decades have seen a disturbing trend apparent in the views and choices of people throughout the democratic world—a declining political confidence and trust in government. These have been observed in European nations, Canada as well as the United States, countries different in their political and social histories (Dalton 2017). Consider some numbers from a recent US poll, the 2016 Survey of American Political Culture. The survey found, for example, that 64% of the American public had little or no confidence in the federal government’s capacity to solve problems (up from 60% in 1996), while 56% believed “the government in Washington threatens the freedom of ordinary Americans.” About 88% of respondents thought “political events these days seem more like theater or entertainment than like something to be taken seriously” (up from 79% in 1996). As well, 75% of surveyed individuals thought that one cannot “believe much” the mainstream media content (Hunter and Bowman 2016). As in other countries, such numbers, consistent across polls, tell a story much different than responses collected half a century ago.

Some, unsurprised, argue citizens have always had a level of skepticism and mistrust toward their government but appreciated their regime legitimacy, a democratic capacity to exercise their will and choose a new government. However, other scholars are arriving at a more pessimistic conclusion: People have begun questioning the very foundations of their systems of government—the legitimacy of liberal democratic regimes. Foa and Mounk, for example, examined responses from three waves of cross-national surveys (1995–2014) focusing on indicators of regime legitimacy: “citizens’ express support for the system as a whole; the degree to which they support key institutions of liberal democracy, such as civil rights; their willingness to advance their political causes within the existing political system; and their openness to authoritarian alternatives such as military rule” (2016, 6). They find citizens to be not only progressively critical of their government but also “cynical about the value of democracy as a political system, less hopeful that anything they do might influence public policy, and more willing to express support for authoritarian alternatives” (2016, 7). The authors point out that in 2011, 24% of those born in the 1980s thought democracy was a “bad” system for the US, while 26% of the same cohort believed it is unimportant for people to “choose their leaders in free elections.” Also in 2011, 32% of respondents of all ages reported a preference for a “strong leader” who need not “bother with parliament and elections” (up from 24% in 1995). As well, Foa and Mounk (2016) observe a decrease in interest and participation in conventional (including voting and political party membership) and non-conventional political activities (such as participation in protests or social movement).

These responses only beckon more questions, particularly as some scholars believe that “[t]he changing values and skills of Western publics encourage a new type of assertive or engaged citizen who is skeptical about political elites and the institutions of representative democracy” (Dalton 2017, 391). In this and the next chapter, we explore the realities and the logic behind these perspectives. Is the current system working as intended? What can be done to renew the faith in government and citizenship? What can we learn from how the public comes to its opinions? We focus primarily on the developments in the United States, providing an extreme case in an evolution of a democratic system and a rationale for revisiting the tenets of governance. We will begin to discern the roots of many of the above stances and see that regaining effectiveness and legitimacy in modern governance demands more than just “more democracy.” Governance for the new age is smart, bringing in citizens where they are most capable and engaged. But change will demand a proper understanding of the underlying problems and a collective awareness of the solutions. And getting there requires us to cope with trivialization….(More)”

Paper by Mariana Mazzucato and Rainer Kattel: “Public value is value that is created collectively for a public purpose. This requires understanding of how public institutions can engage citizens in defining purpose (participatory structures), nurture organisational capabilities and capacity to shape new opportunities (organisational competencies); dynamically assess the value created (dynamic evaluation); and ensure that societal value is distributed equitably (inclusive growth).

Purpose-driven capitalism requires more than just words and gestures of goodwill. It requires purpose to be put at the centre of how companies and governments are run and how they interact with civil society.

Keynes claimed that practitioners who thought they were just getting the ‘job done’ were slaves of defunct economic theory. Purposeful capitalism, if it is to happen on the ground for real, requires a rethinking of value in economic theory and how it has shaped actions.

Today’s dominant economics framework restricts its understanding of value to a theory of exchange; only that which has a price is valuable. ‘Collective’ effort is missed since it is only individual decisions that matter: even wages are seen as outcomes of an individual’s choice (maximisation of utility) between leisure versus work. ‘Social value’ itself is limited to looking at economic ‘welfare’ principles; that is, aggregate outcomes from individual behaviours…(More)”

Press Release: “While researchers from small and medium-sized companies and academic institutions often have enormous numbers of ideas, they don’t always have enough time or resources to develop them all. As a result, many ideas get left behind because companies and academics typically have to focus on narrow areas of research. This is known as the “Innovation Gap”. ESCulab (European Screening Centre: unique library for attractive biology) aims to turn this problem into an opportunity by creating a comprehensive library of high-quality compounds. This will serve as a basis for testing potential research targets against a wide variety of compounds.

Any researcher from a European academic institution or a small to medium-sized enterprise within the consortium can apply for a screening of their potential drug target. If a submitted target idea is positively assessed by a committee of experts it will be run through a screening process and the submitting party will receive a dossier of up to 50 potentially relevant substances that can serve as starting points for further drug discovery activities.

ESCulab will build Europe’s largest collaborative drug discovery platform and is equipped with a total budget of €36.5 million: Half is provided by the European Union’s Innovative Medicines Initiative (IMI) and half comes from in-kind contributions from companies of the European Federation of Pharmaceutical Industries and Associations (EFPIA) and the Medicines for Malaria Venture. It builds on the existing library of the European Lead Factory, which consists of around 200,000 compounds, as well as around 350,000 compounds from EFPIA companies. The European Lead Factory aims to initiate 185 new drug discovery projects through the ESCulab project by screening drug targets against its library.

… The platform has already provided a major boost for drug discovery in Europe and is a strong example of how crowdsourcing, collective intelligence and the cooperation within the IMI framework can create real value for academia, industry, society and patients….(More)”

Blair Sheppard and Ceri-Ann Droog at Strategy and Business: “For the last 70 years the world has done remarkably well. According to the World Bank, the number of people living in extreme poverty today is less than it was in 1820, even though the world population is seven times as large. This is a truly remarkable achievement, and it goes hand in hand with equally remarkable overall advances in wealth, scientific progress, human longevity, and quality of life.

But the organizations that created these triumphs — the most prominent businesses, governments, and multilateral institutions of the post–World War II era — have failed to keep their implicit promises. As a result, today’s leading organizations face a global crisis of legitimacy. For the first time in decades, their influence, and even their right to exist, are being questioned.

Businesses are also being held accountable in new ways for the welfare, prosperity, and health of the communities around them and of the general public. Our own global firm, PwC, is among these businesses. The accusations facing any individual enterprise may or may not be justified, but the broader attitudes underlying them must be taken seriously.

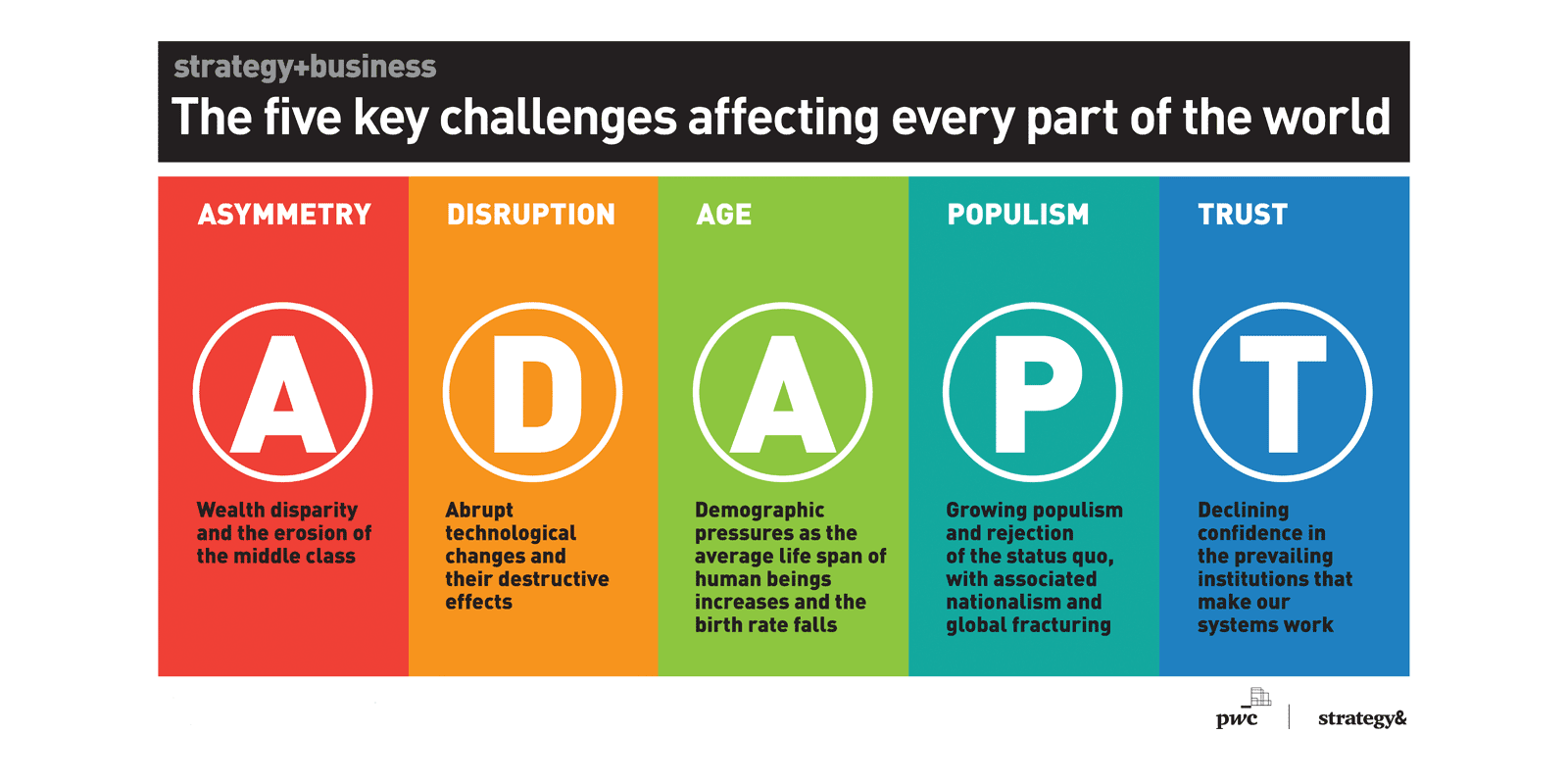

The causes of this crisis of legitimacy have to do with five basic challenges affecting every part of the world:

- Asymmetry: Wealth disparity and the erosion of the middle class

- Disruption: Abrupt technological changes and their destructive effects

- Age: Demographic pressures as the average life span of human beings increases and the birth rate falls

- Populism: Growing populism and rejection of the status quo, with associated nationalism and global fracturing

- Trust: Declining confidence in the prevailing institutions that make our systems work.

(We use the acronym ADAPT to list these challenges because it evokes the inherent change in our time and the need for institutions to respond with new attitudes and behaviors.)

Source: strategy-business.com/ADAPT

A few other challenges, such as climate change and human rights issues, may occur to you as equally important. They are not included in this list because they are not at the forefront of this particular crisis of legitimacy in the same way. But they are affected by it; if leading businesses and global institutions lose their perceived value, it will be harder to address every other issue affecting the world today.

Ignoring the crisis of legitimacy is not an option — not even for business leaders who feel their primary responsibility is to their shareholders. If we postpone solutions too long, we could go past the point of no return: The cost of solving these problems will be too high. Brexit could be a test case. The costs and difficulties of withdrawal could be echoed in other political breakdowns around the world. And if you don’t believe that widespread economic and political disruption is possible right now, then consider the other revolutions and abrupt, dramatic changes in sovereignty that have occurred in the last 250 years, often with technological shifts and widespread dissatisfaction as key factors….(More)”.

Knowledge@Wharton: “Data analytics and artificial intelligence are transforming our lives. Be it in health care, in banking and financial services, or in times of humanitarian crises — data determine the way decisions are made. But often, the way data is collected and measured can result in biased and incomplete information, and this can significantly impact outcomes.

In a conversation with Knowledge@Wharton at the SWIFT Institute Conference on the Impact of Artificial Intelligence and Machine Learning in the Financial Services Industry, Alexandra Olteanu, a post-doctoral researcher at Microsoft Research, U.S. and Canada, discussed the ethical and people considerations in data collection and artificial intelligence and how we can work towards removing the biases….

….Knowledge@Wharton: Bias is a big issue when you’re dealing with humanitarian crises, because it can influence who gets help and who doesn’t. When you translate that into the business world, especially in financial services, what implications do you see for algorithmic bias? What might be some of the consequences?

Olteanu: A good example is from a new law in New York state according to which insurance companies can now use social media to decide the level for your premiums. But, they could in fact end up using incomplete information. For instance, you might be buying your vegetables from the supermarket or a farmer’s market, but these retailers might not be tracking you on social media. So nobody knows that you are eating vegetables. On the other hand, a bakery that you visit might post something when you buy from there. Based on this, the insurance companies may conclude that you only eat cookies all the time. This shows how even incomplete data can affect you….(More)”.

Press Release: “EU member states, car manufacturers and navigation systems suppliers will share information on road conditions with the aim of improving road safety. Cora van Nieuwenhuizen, Minister of Infrastructure and Water Management, agreed this today with four other EU countries during the ITS European Congress in Eindhoven. These agreements mean that millions of motorists in the Netherlands will have access to more information on unsafe road conditions along their route.

The data on road conditions that is registered by modern cars is steadily improving: for instance, information on iciness, wrong-way drivers and breakdowns in emergency lanes. This kind of data can be instantly shared with road authorities and other vehicles following the same route. Drivers can then adapt their driving behaviour appropriately so that accidents and delays are prevented….

The partnership was announced today at the ITS European Congress, the largest European event in the fields of smart mobility and the digitalisation of transport. Among other things, various demonstrations were given on how sharing this type of data contributes to road safety. In the year ahead, the car manufacturers BMW, Volvo, Ford and Daimler, the EU member states Germany, the Netherlands, Finland, Spain and Luxembourg, and navigation system suppliers TomTom and HERE will be sharing data. This means that millions of motorists across the whole of Europe will receive road safety information in their car. Talks on participating in the partnership are also being conducted with other European countries and companies.

ADAS

At the ITS congress, Minister Van Nieuwenhuizen and several dozen parties today also signed an agreement on raising awareness of advanced driver assistance systems (ADAS) and their safe use. Examples of ADAS include automatic braking systems, and blind spot detection and lane keeping systems. Using these driver assistance systems correctly makes driving a car safer and more sustainable. The agreement therefore also includes the launch of the online platform “slimonderweg.nl” where road users can share information on the benefits and risks of ADAS.

Minister Van Nieuwenhuizen: “Motorists are often unaware of all the capabilities modern cars offer. Yet correctly using driver assistance systems really can increase road safety. From today, dozens of parties are going to start working on raising awareness of ADAS and improving and encouraging the safe use of such systems so that more motorists can benefit from them.”

Connected Transport Corridors

Today at the congress, progress was also made regarding the transport of goods. For example, at the end of this year lorries on three transport corridors in our country will be sharing logistics data. This involves more than just information on environmental zones, availability of parking, recommended speeds and predicted arrival times at terminals. Other new technologies will be used in practice on a large scale, including prioritisation at smart traffic lights and driving in convoy. Preparatory work on the corridors around Amsterdam and Rotterdam and in the southern Netherlands has started…..(More)”.

Chapter by Michiel de Lange in The Right to the Smart City: “The current datafication of cities raises questions about what Lefebvre and many after him have called “the right to the city.” In this contribution, I investigate how the use of data for civic purposes may strengthen the “right to the datafied city,” that is, the degree to which different people engage and participate in shaping urban life and culture, and experience a sense of ownership. The notion of the commons acts as the prism to see how data may serve to foster this participatory “smart citizenship” around collective issues. This contribution critically engages with recent attempts to theorize the city as a commons. Instead of seeing the city as a whole as a commons, it proposes a more fine-grained perspective of the “commons-as-interface.” The “commons-as-interface,” it is argued, productively connects urban data to the human-level political agency implied by “the right to the city” through processes of translation and collectivization. The term is applied to three short case studies, to analyze how these processes engender a “right to the datafied city.” The contribution ends by considering the connections between two seemingly opposed discourses about the role of data in the smart city – the cybernetic view versus a humanist view. It is suggested that the commons-as-interface allows for more detailed investigations of mediation processes between data, human actors, and urban issues….(More)”.

Kiona N. Smith at Forbes: “What could the 107-year-old tragedy of the Titanic possibly have to do with modern problems like sustainable agriculture, human trafficking, or health insurance premiums? Data turns out to be the common thread. The modern world, for better or worse, increasingly turns to algorithms to look for patterns in the data and make predictions based on those patterns. And the basic methods are the same whether the question they’re trying to answer is “Would this person survive the Titanic sinking?” or “What are the most likely routes for human trafficking?”

An Enduring Problem

Predicting survival at sea based on the Titanic dataset is a standard practice problem for aspiring data scientists and programmers. Here’s the basic challenge: feed your algorithm a portion of the Titanic passenger list, which includes some basic variables describing each passenger and their fate. From that data, the algorithm (if you’ve programmed it well) should be able to draw some conclusions about which variables made a person more likely to live or die on that cold April night in 1912. To test its success, you then give the algorithm the rest of the passenger list (minus the outcomes) and see how well it predicts their fates.
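The train-then-test procedure described above can be sketched in a few lines of Python. This is only a minimal illustration, not the approach used by any competition entry or vendor: the rows below are hypothetical toy values invented for the example (they are not real Titanic records), the two variables (sex and passenger class) are an assumed subset of the dataset's columns, and the "algorithm" is a simple majority vote per group rather than a real machine-learning model.

```python
from collections import Counter, defaultdict

# Hypothetical toy rows: (sex, passenger_class, survived).
# Invented for illustration only; these are NOT real Titanic records.
TOY_PASSENGERS = [
    ("female", 1, True),
    ("female", 1, True),
    ("female", 3, True),
    ("female", 3, False),
    ("female", 3, True),
    ("male", 1, False),
    ("male", 1, True),
    ("male", 1, False),
    ("male", 3, False),
    ("male", 3, False),
    ("male", 3, False),
    # Held-out rows used only for testing.
    ("female", 1, True),
    ("male", 3, False),
    ("female", 3, False),
    ("male", 2, False),
]


def train(rows):
    """Learn the most common outcome per (sex, class) group, plus an overall fallback."""
    by_group = defaultdict(Counter)
    overall = Counter()
    for sex, pclass, survived in rows:
        by_group[(sex, pclass)][survived] += 1
        overall[survived] += 1
    table = {group: counts.most_common(1)[0][0] for group, counts in by_group.items()}
    default = overall.most_common(1)[0][0]
    return table, default


def predict(model, sex, pclass):
    """Predict survival from the variables alone; unseen groups get the fallback."""
    table, default = model
    return table.get((sex, pclass), default)


def accuracy(model, rows):
    """Fraction of rows whose known outcome matches the prediction."""
    hits = sum(predict(model, sex, pclass) == survived for sex, pclass, survived in rows)
    return hits / len(rows)


# Feed the algorithm a portion of the list, then test it on the rest.
train_rows, test_rows = TOY_PASSENGERS[:11], TOY_PASSENGERS[11:]
model = train(train_rows)
print(f"held-out accuracy: {accuracy(model, test_rows):.2f}")
```

Note that `predict` never sees the outcome column; the known fates of the held-out rows are used only afterwards, in `accuracy`, to score the predictions.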

Online communities like Kaggle.com have held competitions to see who can develop the algorithm that predicts survival most accurately, and it’s also a common problem presented to university classes. The passenger list is big enough to be useful, but small enough to be manageable for beginners. There’s a simple set of outcomes — life or death — and around a dozen variables to work with, so the problem is simple enough for beginners to tackle but just complex enough to be interesting. And because the Titanic’s story is so famous, even more than a century later, the problem still resonates.

“It’s interesting to see that even in such a simple problem as the Titanic, there are nuggets,” said Sagie Davidovich, Co-Founder & CEO of SparkBeyond, who used the Titanic problem as an early test for SparkBeyond’s AI platform and still uses it as a way to demonstrate the technology to prospective customers….(More)”.