Stefaan Verhulst

Report by Congressional Research Service: “Artificial intelligence (AI) is a rapidly growing field of technology with potentially significant implications for national security. As such, the U.S. Department of Defense (DOD) and other nations are developing AI applications for a range of military functions. AI research is underway in the fields of intelligence collection and analysis, logistics, cyber operations, information operations, command and control, and in a variety of semi-autonomous and autonomous vehicles.

Already, AI has been incorporated into military operations in Iraq and Syria. Congressional action has the potential to shape the technology’s development further, with budgetary and legislative decisions influencing the growth of military applications as well as the pace of their adoption.

AI technologies present unique challenges for military integration, particularly because the bulk of AI development is happening in the commercial sector. Although AI is not unique in this regard, the defense acquisition process may need to be adapted for acquiring emerging technologies like AI.

In addition, many commercial AI applications must undergo significant modification prior to being functional for the military. A number of cultural issues also challenge AI acquisition, as some commercial AI companies are averse to partnering with DOD due to ethical concerns, and even within the department, there can be resistance to incorporating AI technology into existing weapons systems and processes.

Potential international rivals in the AI market are creating pressure for the United States to compete for innovative military AI applications. China is a leading competitor in this regard, releasing a plan in 2017 to capture the global

AI technology could, for example, facilitate autonomous operations, lead to more informed military decisionmaking, and increase the speed and scale of military action. However, it may also be unpredictable or vulnerable to unique forms of manipulation. As a result of these factors, analysts hold a broad range of opinions on how influential AI will be in future combat operations.

While a small number of analysts believe that the technology will have minimal impact, most believe that AI will have at least an evolutionary—if not revolutionary—effect

Book edited by Nathalie Behnke, Jörg Broschek and Jared Sonnicksen: “This edited volume provides a comprehensive overview of the diverse and multi-faceted research on governance in multilevel systems. The book features a collection of cutting-edge trans-Atlantic contributions, covering topics such as federalism, decentralization as well as various forms and processes of regionalization and Europeanization. While the field of multilevel governance is comparatively young, research in the subject has also come of age as considerable theoretical, conceptual and empirical advances have been achieved since the first influential works were published in the early noughties. The present volume aims to gauge the state-of-the-art in the different research areas as it brings together a selection of original contributions that are united by a variety of configurations, dynamics

Paper by Anna Wilson and Stefano De Paoli at First Monday: “Social and socioeconomic interactions and transactions often require trust. In digital spaces, the main approach to facilitating trust has effectively been to try to reduce or even remove the need for it through the implementation of reputation systems. These generate metrics based on digital data such as ratings and reviews submitted by users, interaction histories, and so on, that are intended to label individuals as more or less reliable or trustworthy in a particular interaction context. We undertake

We suggest that conventional approaches to the design of such systems are rooted in a capitalist, competitive paradigm, relying on methodological

This is an over-simplification of the role of relationships, contract law, and risk. We believe there is a gap in the understanding of the capabilities of SCs. With that in

Book by Neil Selwyn: “The rise of digital technology is transforming the world in which we live. Our digitalized societies demand new ways of thinking about the social, and this short book introduces readers to an approach that can deliver this: digital sociology.

Neil Selwyn examines the concepts, tools and practices that sociologists are developing to analyze the intersections of the social and the digital. Blending theory and empirical examples, the five chapters highlight areas of inquiry where digital approaches are taking hold and shaping the discipline of sociology today. The book explores key topics such as digital race and digital labor, as well as the fast-changing nature of digital research methods and diversifying forms of digital scholarship.

Designed for use in advanced undergraduate and graduate courses, this timely introduction will be an invaluable resource for all sociologists seeking to focus their craft and thinking toward the social complexities of the digital age

Dissertation by Chia-Fang Chung: “Many people collect and analyze data about themselves to improve their health and wellbeing. With the prevalence of smartphones and wearable sensors, people are able to collect detailed and complex data about their everyday behaviors, such as diet, exercise, and sleep. This everyday behavioral data can support individual health goals, help manage health conditions, and complement traditional medical examinations conducted in clinical visits. However, people often need support to interpret this self-tracked data. For example, many people share their data with health experts, hoping to use this data to support more personalized diagnosis and recommendations as well as to receive emotional support. However, when attempting to use this data in collaborations, people and their health experts often struggle to make sense of the data. My dissertation examines how to support collaborations between individuals and health experts using personal informatics data.

My research builds an empirical understanding of individual and collaboration goals around using personal informatics data, current practices of using this data to support collaboration, and challenges and expectations for integrating the use of this data into clinical workflows. These understandings help designers and researchers advance the design of personal informatics systems as well as the theoretical understandings of patient-provider collaboration.

Based on my formative work, I propose

DemocracyLab: “Today’s most significant problems are being addressed primarily by governments, using systems and tools designed hundreds of years ago. From climate change to inequality, the status quo is proving inadequate, and time is running out.

The role of our democratic institutions is analogous to breathing — inhaling citizen input and exhaling government action. The civic technology movement is inventing new ways to gather input, make decisions and execute collective action. The science fiction end state is an enlightened collective intelligence. But in the short term, it’s enough to seek incremental improvements in how citizens are engaged and government services are delivered. This will increase our chances of solving a wide range of problems in communities of all scales.

The match between the government and tech sectors is complementary. Governments and nonprofits are widely perceived as lagging in technological adoption and innovation. The tech sector’s messiah complex has been muted by Cambridge Analytica, but the principles of user-centered design, iterative development, and continuous learning have not lost their value. Small groups of committed technologists can easily test hypotheses about ways to make institutions work better. Trouble is, it’s really hard for them to earn a living doing it.

The Problem for Civic Tech

The unique challenge facing civic tech was noted by Fast Forward, a tech nonprofit accelerator, in a recent report that aptly described the chicken and egg problem plaguing tech nonprofits:

Many foundations will not fund a nonprofit without signs of proven impact. Tech nonprofits are unique. They must build their product before they can prove impact, and they cannot build the tech product without funding.

This is compounded by the fact that government procurement processes are often protracted and purchasers risk averse. Rather than a thousand flowers blooming in learning-rich civic experiments, civic entrepreneurs are typically frustrated and ineffectual, finding that their ideas are difficult to monetize, met with skepticism by government, and starved for capital.

These challenges are described well in the Knight and Rita Allen Foundations’ report Scaling Civic Tech. The report notes the difference between “buyer” revenue that is earned from providing

WeDialogue: “… is a global experiment to test new solutions for commenting on news online. The objective of weDialogue is to promote humility in public discourse and prevent digital harassment and trolling.

What am I expected to do?

The task is simple. You are asked to fill out a survey, then wait until the experiment begins. You will then be given a login for your platform. There you will be able to read and comment on news as if it were a normal online newspaper or blog. We would like people to comment as much as possible, but you are free to contribute as much as you want. At the end of the experiment we would be very grateful if you could fill in a final survey and provide us with feedback on the overall experience.

Why is it important to test new platforms for news comments?

We know the problems of harassment and trolling (see our video), but the solution is not obvious. Developers have proposed new platforms, but these have not been tested rigorously. weDialogue is a participatory action research project that aims to combine academic expertise and citizens’ knowledge and experience to test potential solutions.

What are you going to do with the research?

All our research and data will be publicly available so that others can build upon it. Both the Deliberatorium and Pol.is are free software that can be reused. The data we will create and the resulting publications will be released in an open access environment.

Who is weDialogue?

Taxi and Limousine Commission (TLC): “Cities aren’t born smart. They become smart by understanding what is happening on their streets. Measurement is key to management, and amid the incomparable expansion of for-hire transportation service in New York City, measuring street activity is more important than ever. Between 2015 (when app companies first began reporting data) and June 2018, trips by app services increased more than 300%, now totaling over 20 million trips each month. That’s more cars, more drivers, and more mobility.

We know the true scope of this transformation today only because of the New York City Taxi and Limousine Commission’s (TLC) pioneering regulatory actions. Unlike most cities in the country, app services cannot operate in NYC unless they give the City detailed information about every trip. This is mandated by TLC rules and is not contingent on companies voluntarily “sharing” only a self-selected portion of the large amount of data they collect. Major trends in the taxi and for-hire vehicle industry are highlighted in TLC’s 2018 Factbook.

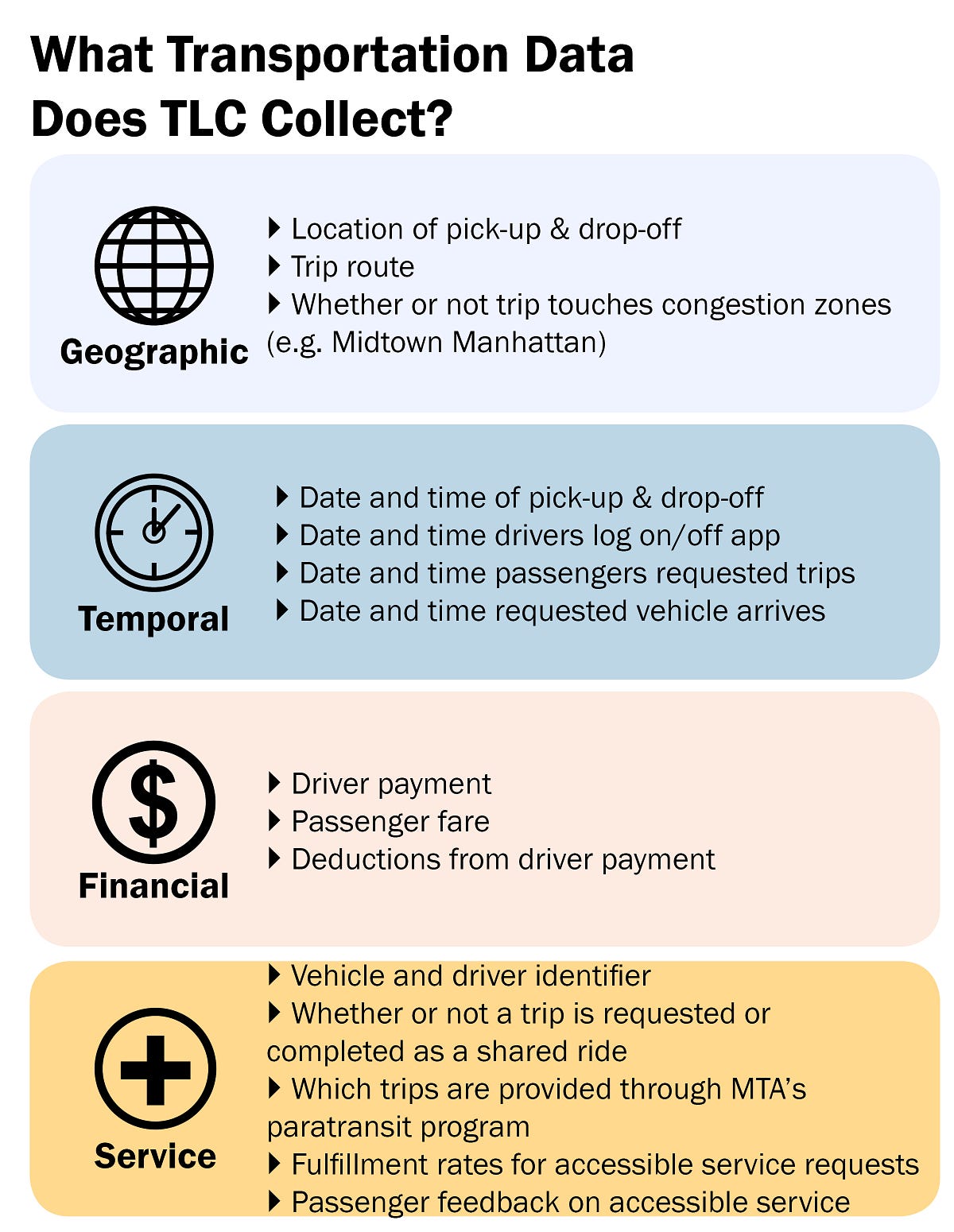

What Transportation Data Does TLC Collect?

Notably, Uber, Lyft, and their competitors today must give the TLC granular data about each and every trip and request for service. TLC does not receive passenger information; we require only the data necessary to understand traffic patterns, working conditions, vehicle efficiency, service availability, and other important information.

One of the most important aspects of the data TLC collects is that they are stripped of identifying information and made available to the public. Through the City’s Open Data portal, TLC’s trip data help businesses distinguish new business opportunities from saturated markets, encourage competition, and help investors follow trends in both new app transportation and the traditional car service and hail taxi markets. As app companies contemplate going public, their investors have surely already bookmarked TLC’s Open Data site.

Using Data to Improve Mobility

With this information NYC now knows people are getting around the boroughs using app services and shared rides with greater frequency. These are the same NYC neighborhoods that traditionally were not served by yellow cabs and often have less robust public transportation options. We also know these services provide an increasing number of trips in congested areas like Manhattan and the inner rings of Brooklyn and Queens, where public transportation options are relatively plentiful….(More)”.
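The TLC excerpt above describes trip records being stripped of identifying information before they are published as open data. A minimal Python sketch of that idea follows. The field names (`driver_license`, `vehicle_plate`, `pickup_datetime`, `pickup_zone`) and the sample records are hypothetical, chosen only for illustration; the excerpt does not describe the TLC's actual schema or process.

```python
from collections import Counter

# Hypothetical identifying fields; not taken from the TLC's real schema.
IDENTIFYING_FIELDS = frozenset({"driver_license", "vehicle_plate"})


def strip_identifiers(record, identifying=IDENTIFYING_FIELDS):
    """Return a copy of a trip record without the identifying fields."""
    return {k: v for k, v in record.items() if k not in identifying}


def trips_per_month(records):
    """Count trips by the YYYY-MM prefix of an ISO-formatted pickup time."""
    return Counter(r["pickup_datetime"][:7] for r in records)


# Made-up sample records for illustration only.
raw_trips = [
    {"pickup_datetime": "2018-06-01T08:15:00", "pickup_zone": "Astoria",
     "driver_license": "D123", "vehicle_plate": "T456"},
    {"pickup_datetime": "2018-06-02T17:40:00", "pickup_zone": "Bushwick",
     "driver_license": "D789", "vehicle_plate": "T012"},
    {"pickup_datetime": "2018-05-30T23:05:00", "pickup_zone": "Midtown",
     "driver_license": "D123", "vehicle_plate": "T456"},
]

public_trips = [strip_identifiers(t) for t in raw_trips]
monthly = trips_per_month(public_trips)
```

The published records keep only the fields needed for aggregate analysis, such as monthly trip counts. Dropping fields is the simplest form of de-identification and may not be sufficient on its own, since combinations of remaining fields can sometimes still single out individuals.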

Book edited by Katrin Bergener, Michael Räckers and Armin Stein: “Structuring, or, as it is referred to in the title of this book, the art of structuring, is one of the core elements in the discipline of Information Systems. While the world is becoming increasingly complex, and a growing number of disciplines are evolving to help make it a better place,

The contributions in this book build these bridges, which are vital in order to communicate between different worlds of thought and methodology – be it between Information Systems (IS) research and practice, or between IS research and other research disciplines. They describe how structuring can be and should be done so as to foster communication and collaboration. The topics covered reflect various layers of structure that can serve as bridges: models, processes, data, organizations, and technologies. In turn, these aspects are complemented by visionary outlooks on how structure influences the field….(More)”.