Stefaan Verhulst

John Thornhill at the Financial Times: “During the Iraq war, the Los Angeles Times attempted to harness the collective wisdom of its readers by crowdsourcing an editorial, called a wikitorial, on the conflict. It was a disaster. The arguments between the hawks and doves quickly erupted into a ranting match. The only way to salvage the mess was to “fork” the debate, inviting the two sides to refine separate arguments.

If it is impossible to crowdsource an opinion column, is it any more realistic to do so with news in our hyper-partisan age? We are about to find out as Jimmy Wales, the founder of Wikipedia, is launching Wikitribune in an attempt to do just that. Declaring that “news is broken”, Mr Wales said his intention was to combine the radical community spirit of Wikipedia with the best practices of journalism. His crowdfunded news site, free of advertising and paywalls, will initially be staffed by 10 journalists working alongside volunteer contributors.

Mr Wales is right that the news business desperately needs to regain credibility given the erosion of trusted media organisations, the proliferation of fake news and the howling whirlwind of social media. It is doubly problematic in an era in which unscrupulous politicians, governments and corporations can now disintermediate the media by providing their own “alternative facts” direct to the public.

Unlikely as it is that Wikitribune has stumbled upon the answer, it should be applauded for asking the right questions. How can the media invent sustainable new models that combine credibility, relevance and reach? One thing to note is that Wikipedia has for years been producing crowdsourced news in the Wikinews section of its site, with little impact. Wikinews invites anyone to write the news. But the service is slow, clunky and dull.

As a separate project, Wikitribune is breaking with Wikipedia’s core philosophy by entrusting experts with authority. As a journalist, I warm to the idea that Mr Wales thinks we serve some useful purpose. But it will surely take time for his new site to create a viable hybrid culture….(More)”.

Legislators have always struggled to address this problem. But in the first 100 days of Donald Trump’s administration, new gun legislation has only expanded, not restricted, gun rights. In short order, lawmakers made it easier for certain people with mental illness to buy guns, and pushed to expand the locations where people can carry firearms.

Over the past few years, however, gun owners and sellers have started taking matters into their own hands and have come up with creative solutions to reduce the threat from guns.

From working with public health organisations so gun sellers can recognise the signs of depression in a prospective buyer to developing biometric gun locks, citizen scientists are cobbling together measures they hope will stave off the worst aspects of US gun culture.

The Federation of American Scientists estimates that 320 million firearms circulate in the US – about enough for every man, woman and child. According to the independent policy group Gun Violence Archive, there were 385 mass shootings in 2016, and it looks as if the numbers for 2017 will not differ wildly.

“In the absence of regulations against guns, individual gun sellers and owners are trying to help”

Although the number of these incidents is alarming, it is dwarfed by the number of suicides, which account for more than half of all firearms deaths (see graph, right). And last year, a report from the Associated Press and the USA Today Network showed that accidental shootings kill almost twice as many children as is shown in US government data.

In just one week in 2009, New Hampshire gun shop owner Ralph Demicco sold three guns that were ultimately used by their new owners to end their own lives. Demicco’s horror and dismay that he had inadvertently contributed to their deaths led him to start what has become known as the Gun Shop Project.

The project uses insights from the study of suicide to teach gun sellers to recognise signs of suicidal intent in buyers, and know when to avoid selling a gun. To do this, Demicco teamed up with Catherine Barber, an epidemiologist at the Harvard T. H. Chan School of Public Health.

Part of what the project does is challenge myths. With suicide, the biggest is that people plan suicides over a long period. But empirical evidence shows that people usually act in a moment of brief but extreme emotion. One study has found that nearly half of people who attempted suicide contemplated their attempt for less than 10 minutes. In the time it takes to find another method, a suicidal crisis often passes, so even a small delay in obtaining a gun could make a difference….Another myth that Demicco and Barber are seeking to dispel is that if you take away someone’s gun, they’ll just find another way to hurt themselves. While that’s sometimes true, Barber says, alternatives are less likely to be fatal. Gun attempts result in death more than 80 per cent of the time; only 2 per cent of pill-based suicide attempts are lethal.

Within a year of its launch in 2009, half of all gun sellers in New Hampshire had hung posters about the warning signs of suicide by the cash registers in their stores. The programme has expanded to 21 states, and Barber is now analysing data to see how well it is working.

Another grass-roots project is trying to prevent children from accidentally shooting themselves. Kai Kloepfer, an undergraduate at Massachusetts Institute of Technology, has been working on a fingerprint lock to prevent anyone other than the owner using a gun. He has founded a start-up called Biofire Technologies to improve the lock’s reliability and bring it into production….

Grass-roots schemes like the Gun Shop Project have a better chance of being successful, because gun users are already buying in. But it may take years for the project to become big enough to have a significant effect on national statistics.

Regulatory changes might be needed to make any improvements stick in the long term. At the very least, new regulations shouldn’t block the gun community’s efforts at self-governance.

Change will not come quickly, regardless. Barber sees parallels between the Gun Shop Project and campaigns against drink driving in the 1980s and 90s.

“One commercial didn’t change rates of drunk driving. It was an ad on TV, a scene in a movie, repeated over and over, that ultimately had an impact,” she says….(More)

Ssanyu Rebecca at Making All Voices Count: “The call for a data revolution by the UN Secretary General’s High Level Panel in the run up to Agenda 2030 stimulated debate and action in terms of innovative ways of generating and sharing data.

Since then, technological advances have supported increased access to data and information through initiatives such as open data platforms and SMS-based citizen reporting systems. The main driving force for these advances is for data to be timely and usable in decision-making. Among the several actors in the data field are the proponents of citizen-generated data (CGD) who assert its potential in the context of the sustainable development agenda.

Nevertheless, there is need for more evidence on the potential of CGD in influencing policy and service delivery, and contributing to the achievement of the sustainable development goals. Our study on Citizen-generated data in the information ecosystem: exploring links for sustainable development sought to obtain answers. Using case studies on the use of CGD in two different scenarios in Uganda and Kenya, Development Research and Training (DRT) and Development Initiatives (DI) collaborated to carry out this one-year study.

In Uganda, we focused on a process of providing unsolicited citizen feedback to duty-bearers and service providers in communities. This was based on the work of Community Resource Trackers, a group of volunteers supported by DRT in five post-conflict districts (Gulu, Kitgum, Pader, Katakwi and Kotido) to identify and track community resources and provide feedback on their use. These included financial and in-kind resources, allocated through central and local government, NGOs and donors.

In Kenya, we focused on a formalised process of CGD involving the Ministry of Education and National Taxpayers Association. The School Report Card (SRC) is an effort to increase parental participation in schooling. SRC is a scorecard for parents to assess the performance of their school each year in ten areas relating to the quality of education.

What were the findings?

The two processes provided insights into the changes CGD influences in the areas of accountability, resource allocation, service delivery and government response.

Both cases demonstrated the relevance of CGD in improving service delivery. They showed that the uptake of CGD and response by government depends significantly on the quality of relationships that CGD producers create with government, and whether the initiatives relate to existing policy priorities and interests of government.

The study revealed important effects on improving citizen behaviours. Community members who participated in CGD processes understood their role as citizens and participated fully in development processes, with strong skills, knowledge and confidence.

The Kenya case study revealed that CGD can influence policy change if it is generated and used at large scale, and in direct linkage with a specific sector; but it also revealed that this is difficult to measure.

In Uganda we observed distinct improvements in service delivery and accessibility at the local level – which was the motivation for engaging in CGD in the first instance….(More) (Full Report)”.

Review by Aizhan Tursunbayeva, Massimo Franco, and Claudia Pagliari: “Although the intersection between social media and health has received considerable research attention, little is known about how public sector health organizations are using social media for e-Government. This systematic literature review sought to capture, classify, appraise and synthesize relevant evidence from four international research databases and gray literature. From 2441 potentially relevant search results only 22 studies fully met the inclusion criteria. This modest evidence-base is mostly descriptive, unidisciplinary and lacks the theoretical depth seen in other branches of e-Government research. Most studies were published in the last five years in medical journals, focus on Twitter and come from high income countries. The reported e-Government objectives mainly fall into Bertot et al.’s (2010) categories of transparency/accountability, democratic participation, and co-production, with least emphasis on the latter. A unique category of evaluation also emerged. The lack of robust evidence makes it difficult to draw conclusions about the effectiveness of these approaches in the public health sector and further research is warranted….(More)”.

Open Knowledge International: “The Global Open Data Index (GODI) is the annual global benchmark for publication of open government data, run by the Open Knowledge Network. Our crowdsourced survey measures the openness of government data according to the Open Definition.

By having a tool that is run by civil society, GODI creates valuable insights for government’s data publishers to understand where they have data gaps. It also shows how to make data more useable and eventually more impactful. GODI therefore provides important feedback that governments are usually lacking.

For the last 5 years we have been revising GODI methodology to fit the changing needs of the open data movement. This year, we changed our entire survey design by adding experimental questions to assess data findability and usability. We also improved our dataset definitions by looking at essential data points that can solve real world problems. Using more precise data definitions also increased the reliability of our cross-country comparison. See all about the GODI methodology here.

In addition, this year GODI shall be more than a mere measurement tool. We see it as a tool for conversation. To spark debate, we release GODI in two phases:

- The dialogue phase – We are releasing the data to the public after a rigorous review. Yet, like everyone's, our assessment is not always perfect. We give all users a chance to contest the index results for 30 days, starting May 2nd. In this period, users of the index can comment on our assessments through our Global Open Data Index forum. On June 2nd, we will review those comments and will change some index submissions if needed.

- The final results – on June 15 we will present the final results of the index. For the first time ever, we will also publish the GODI white paper. This paper will include our main findings and recommendations to advance open data publication….

… findings from this year’s GODI

- GODI highlights data gaps. Open data is the final stage of an information production chain, where governments measure and collect data, process and share data internally, and publish this data openly. While being designed to measure open data, the Index also highlights gaps in this production chain. Does a government collect data at all? Why is data not collected? Some governments lack the infrastructure and resources to modernise their information systems; other countries do not have information systems in place at all.

- Data findability is a major challenge. We have data portals and registries, but government agencies under one national government still publish data in different ways and different locations. Moreover, they have different protocols for licenses and formats. This has a hazardous impact – we may not find open data, even if it is out there, and therefore can’t use it. Data findability is a prerequisite for open data to fulfill its potential and currently most data is very hard to find.

- A lot of ‘data’ IS online, but the ways in which it is presented limit its openness. Governments publish data in many forms, not only as tabular datasets but also visualisations, maps, graphs and texts. While this is a good effort to make data relatable, it sometimes makes the data very hard or even impossible to reuse. It is crucial for governments to revise how they produce and provide data so that it is of good quality for reuse in its raw form. For that, we need to be aware of what the best raw data is, which varies from data category to category.

- Open licensing is a problem, and we cannot assess public domain status. Each year we find ourselves more confused about open data licences. On the one hand, more governments implement their unique open data license versions. Some of them are compliant with the Open Definition, but most are not officially acknowledged. On the other hand, some governments do not provide open licenses, but terms of use, that may leave users in the dark about the actual possibilities to reuse data. There is a need to draw more attention to data licenses and make sure data producers understand how to license data better….(More)”.

Book edited by Sonia E. Alvarez, Jeffrey W. Rubin, Millie Thayer, Gianpaolo Baiocchi, and Agustín Laó-Montes: “The contributors to Beyond Civil Society argue that the conventional distinction between civic and uncivic protest, and between activism in institutions and in the streets, does not accurately describe the complex interactions of forms and locations of activism characteristic of twenty-first-century Latin America. They show that most contemporary political activism in the region relies upon both confrontational collective action and civic participation at different moments. Operating within fluid, dynamic, and heterogeneous fields of contestation, activists have not been contained by governments or conventional political categories, but rather have overflowed their boundaries, opening new democratic spaces or extending existing ones in the process. These essays offer fresh insight into how the politics of activism, participation, and protest are manifest in Latin America today while providing a new conceptual language and an interpretive framework for examining issues that are critical for the future of the region and beyond. (Read the foreword by Arturo Escobar and introduction)…(More)”

Most simply, a blockchain is an inexpensive and transparent way to record transactions….A blockchain system, though, inherently enforces rules about authentication and transaction security. That makes it safe and affordable for a person to store any amount of money securely and confidently. While that’s still in the future, blockchain-based systems are already helping people in the developing world in very real ways.
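To make the idea of a transparent, tamper-evident record concrete, here is a minimal sketch of a hash-linked ledger. It is an illustration only, not the design of any system named in this piece: the function names, the record fields and the sample transactions are all hypothetical, and it leaves out the authentication, networking and consensus rules that a real blockchain relies on.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder "previous hash" for the first block


def block_hash(index, prev_hash, transaction):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "transaction": transaction},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, transaction):
    """Append a transaction as a new block linked to the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    block = {
        "index": index,
        "prev_hash": prev_hash,
        "transaction": transaction,
        "hash": block_hash(index, prev_hash, transaction),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Recompute every hash and check that each block points at its predecessor."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["prev_hash"], block["transaction"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical sample data: two transfers recorded one after the other.
ledger = []
append_block(ledger, {"from": "alice", "to": "bob", "amount": 25})
append_block(ledger, {"from": "bob", "to": "carol", "amount": 10})
```

Because each block's hash covers the previous block's hash, quietly editing an old transaction makes `is_valid(ledger)` fail unless every later block is rewritten as well, which is the property that lets many parties trust one shared record.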

Sending money internationally

In 2016, emigrants working abroad sent an estimated US$442 billion to their families in their home countries. This global flow of cash is a significant factor in the financial well-being of families and societies in developing nations. But the process of sending money can be extremely expensive….Hong Kong’s blockchain-enabled Bitspark has transaction costs so low it charges a flat HK$15 for remittances of less than HK$1,200 (about $2 in U.S. currency for transactions less than $150) and 1 percent for larger amounts. Using the secure digital connections of a blockchain system lets the company bypass existing banking networks and traditional remittance systems.
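The fee schedule quoted above can be written out as a short calculation. This is a sketch built only from the figures in the text; the function name is hypothetical, and charging 1 percent on a remittance of exactly HK$1,200 is an assumption, since the text specifies only "less than HK$1,200" and "larger amounts".

```python
FLAT_FEE_HKD = 15.0      # flat fee quoted in the text
THRESHOLD_HKD = 1200.0   # remittances below this pay the flat fee
PERCENT_RATE = 0.01      # 1 percent for larger amounts


def remittance_fee_hkd(amount_hkd):
    """Return the fee in HK$ for a remittance under the quoted schedule."""
    if amount_hkd <= 0:
        raise ValueError("amount must be positive")
    if amount_hkd < THRESHOLD_HKD:
        return FLAT_FEE_HKD
    # Assumption: the 1 percent rate applies from exactly HK$1,200 upward.
    return amount_hkd * PERCENT_RATE


def effective_rate(amount_hkd):
    """Fee as a fraction of the amount sent."""
    return remittance_fee_hkd(amount_hkd) / amount_hkd
```

Under this schedule the flat fee weighs more heavily on small transfers: `effective_rate(600)` is 2.5 percent, while any amount of HK$1,200 or more pays 1 percent.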

Similar services helping people send money to the Philippines, Ghana, Zimbabwe, Uganda, Sierra Leone and Rwanda also charge a fraction of the current banking rates.

Insurance

Most people in the developing world lack health and life insurance, primarily because it’s so expensive compared to income. Some of that is because of high administrative costs: For every dollar of insurance premium collected, administrative costs amounted to $0.28 in Brazil, $0.54 in Costa Rica, $0.47 in Mexico and $1.80 in the Philippines. And many people who live on less than a dollar a day have neither the ability to afford any insurance, nor any company offering them services….Consuelo is a blockchain-based microinsurance service backed by Mexican mobile payments company Saldo.mx. Customers can pay small amounts for health and life insurance, with claims verified electronically and paid quickly.

Helping small businesses

Blockchain systems can also help very small businesses, which are often short of cash and also find it expensive – if not impossible – to borrow money. For instance, after delivering medicine to hospitals, small drug retailers in China often wait up to 90 days to get paid. But to stay afloat, these companies need cash. They rely on intermediaries that pay immediately, but don’t pay in full. A $100 invoice to a hospital might be worth $90 right away – and the intermediary would collect the $100 when it was finally paid….
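The cost of that arrangement to the retailer can be worked out from the example figures. The sketch below is an illustration, not a calculation from the original article: the function name is hypothetical, and it assumes the full 90-day wait and simple (non-compounded) annualisation over a 365-day year.

```python
def factoring_cost(invoice_value, advance, days_until_paid):
    """Return (discount, period_rate, annualized_rate) for an invoice advance.

    period_rate is the discount relative to the cash actually received;
    annualized_rate scales it linearly to a 365-day year (an assumption).
    """
    if advance <= 0 or days_until_paid <= 0:
        raise ValueError("advance and days_until_paid must be positive")
    discount = invoice_value - advance
    period_rate = discount / advance
    annualized_rate = period_rate * 365 / days_until_paid
    return discount, period_rate, annualized_rate


# Figures from the text: a $100 invoice, $90 paid right away, up to 90 days' wait.
discount, period_rate, annualized_rate = factoring_cost(100, 90, 90)
```

With these inputs the retailer gives up $10 to receive $90 now, about 11 percent over the waiting period, or roughly 45 percent a year on a simple annualised basis. A shorter wait would make the implied annual rate higher still.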

Humanitarian aid

Blockchain technology can also improve humanitarian assistance. Fraud, corruption, discrimination and mismanagement block some money intended to reduce poverty and improve education and health care from actually helping people. In early 2017 the U.N. World Food Program launched the first stage of what it calls “Building Block,” giving food and cash assistance to needy families in Pakistan’s Sindh province. An internet-connected smartphone authenticated and recorded payments from the U.N. agency to food vendors, ensuring the recipients got help, the merchants got paid and the agency didn’t lose track of its money.

…In the future, blockchain-based projects can help people and governments in other ways, too. As many as 1.5 billion people – 20 percent of the world’s population – don’t have any documents that can verify their identity. That limits their ability to use banks, but also can bar their way when trying to access basic human rights like voting, getting health care, going to school and traveling.

Several companies are launching blockchain-powered digital identity programs that can help create and validate individuals’ identities….(More)”

Book by Alberto Alemanno: “Don’t get mad – get lobbying! From the Austrian student who took on Facebook to the Mexicans who campaigned successfully for a Soda sugar tax to the British scientist who lobbied for transparency in drug trials, citizen lobbyists are pushing through changes even in the darkest of times. Here’s how you can join them.

Many democratic societies are experiencing a crisis of faith. We cast our votes and a few of us even run for office, but our supposedly representative governments seem driven by the interests of big business, powerful individuals and wealthy lobby groups. All the while the world’s problems – like climate change, Big Data, corporate greed, the rise of nationalist movements – seem more pressing than ever. What hope do any of us have of making a difference?…..

We can shape and change policies. How? Not via more referenda and direct democracy, as the populists are arguing, but by becoming ‘citizen lobbyists’ – learning the tools that the big corporate lobbyists use, but to advance causes we really care about, from saving a local library to taking action against fracking. The world of government appears daunting, but this book outlines a ten-step process that anyone can use, bringing their own talent and expertise to make positive change…

10 steps to becoming an expert lobbyist:

- Pick Your Battle

- Do Your Homework

- Map Your Lobbying Environment

- Lobbying Plan

- Pick Your Allies

- Who Pays?

- Communication and Media Plan

- Face-to-Face Meeting

- Monitoring and Implementation

- Stick to the (Lobbying) Rules

If you’re looking to improve – or to join – your community, if you’re searching for a sense of purpose or a way to take control of what’s going on around you, switching off is no longer an option. It’s time to make your voice count….(More)”.

The McKinsey Center for Government: “Governments face a pressing question: How to do more with less? Raising productivity could save $3.5 trillion a year—or boost outcomes at no extra cost.

Higher costs and rising demand have driven rapid increases in spending on core public services such as education, healthcare, and transport—while countries must grapple with complex challenges such as population aging, economic inequality, and protracted security concerns. Government expenditure amounts to more than a third of global GDP, budgets are strained, and the world public-sector deficit is close to $4 trillion a year.

At the same time, governments are struggling to meet citizens’ rising expectations. Satisfaction with key state services, such as public transportation, schools, and healthcare facilities, is less than half that of nonstate providers, such as banks or utilities.

Governments need a way to deliver better outcomes—and a better experience for citizens—at a sustainable cost. A new paper by the McKinsey Center for Government (MCG), Government productivity: Unlocking the $3.5 trillion opportunity, suggests that goal is within reach. It shows that several countries have achieved dramatic productivity improvements in recent years—for example, by improving health, public safety, and education outcomes while maintaining or even reducing spending per capita or per student in those sectors.

If other countries were to match the improvements already demonstrated in these pockets of excellence, the world’s governments could potentially save as much as $3.5 trillion a year by 2021—equivalent to the entire global fiscal gap. Alternatively, countries could choose to keep spending constant while boosting the quality of key services. For example, if all the countries studied had improved the productivity of their healthcare systems at the rate of comparable best performers over the past 5 years, they would have added 1.4 years to the healthy life expectancy of their combined populations. That translates into 12 billion healthy life years gained, without additional per capita spending…(More)”

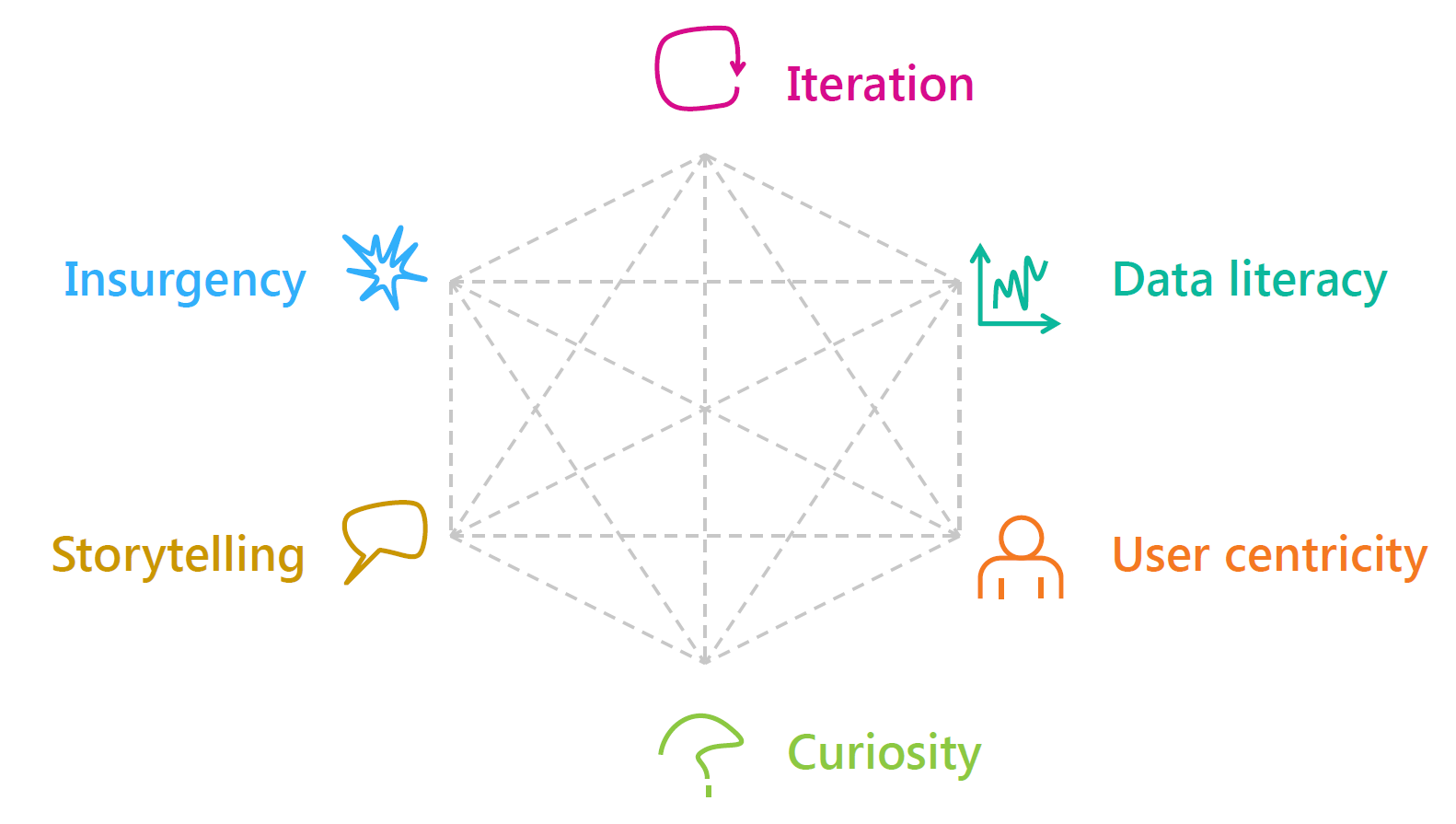

Matt Kerlogue at the OECD: “… has been investigating the topic of skills for public sector innovation. The result of our work has been to produce a beta model of “core skills” for public sector innovation which we are sharing for comment. Our work on innovation skills also sits within the framework of the OECD’s skills for a 21st century Civil Service, and the beta model of core skills for public sector innovation will ultimately form an annex to this work programme’s final report.

Our skills model is designed around six areas of “core skills”: iteration, data literacy, user centricity, curiosity, storytelling, and insurgency. These six skills areas are not the only skills for public sector innovation; each innovation project and challenge will have its own particular needs. Nor will all public servants need to make use of or apply these skills in every aspect of their day-to-day job. Rather, these are six skills areas that with proper promotion/advocacy and development we believe can enable a wider adoption of innovation practices and thus an increased level of innovation. In fact, there are a number of other skills that are already covered in existing public sector competency frameworks that are relevant for innovation, such as collaboration, strategic thinking, political awareness, and coaching.

…(More)”