Stefaan Verhulst

Paper by Marc Schuilenburg and Yarin Eski: “Why do people voluntarily give away their personal data to private companies? In this paper, we show how data sharing is experienced at the level of Tesla car owners. We regard Tesla cars as luxury surveillance goods for which the drivers voluntarily choose to share their personal data with the US company. Based on an analysis of semi-structured interviews and observations of Tesla owners’ posts on Facebook groups, we discern three elements of luxury surveillance: socializing, enjoying and enduring. We conclude that luxury surveillance can be traced back to the social bonds created by a gift economy…(More)”.

Article by Stefaan Verhulst: “After a long gestation period of three years, the European Health Data Space (EHDS) is now coming into effect across the European Union, potentially ushering in a new era of health data access, interoperability, and innovation. As this ambitious initiative enters the implementation phase, it brings with it the opportunity to fundamentally reshape how health systems across Europe operate. More generally, the EHDS contains important lessons (and some cautions) for the rest of the world, suggesting how a fragmented, reactive model of healthcare may transition to one that is more integrated, proactive, and prevention-oriented.

For too long, health systems, in the EU and around the world, have been built around treating diseases rather than preventing them. Now, we have an opportunity to change that paradigm. Data, and especially the advent of AI, gives us the tools to predict and intervene before illness takes hold. Data offers the potential for a system that prioritizes prevention: one where individuals receive personalized guidance to stay healthy, policymakers access real-time evidence to address risks before they escalate, and epidemics are predicted weeks in advance, enabling proactive, rapid, and highly effective responses.

But to make AI-powered preventive health care a reality, and to make the EHDS a success, we need a new data governance approach, one that would include two key components:

- The ability to reuse data collected for other purposes (e.g., mobility, retail sales, workplace trends) to improve health outcomes.

- The ability to integrate different data sources, not only clinical records and electronic health records (EHRs) but also environmental, social, and economic data, to build a complete picture of health risks.

In what follows, we outline some critical aspects of this new governance framework, including responsible data access and reuse (so-called secondary use), moving beyond traditional consent models to a social license for reuse, data stewardship, and the need to prioritize high-impact applications. We conclude with some specific recommendations for the EHDS, built from the preceding general discussion about the role of AI and data in preventive health…(More)”.

Article by Adam Zable and Stefaan Verhulst: “Non-Traditional Data (NTD)—digitally captured, mediated, or observed data such as mobile phone records, online transactions, or satellite imagery—is reshaping how we identify, understand, and respond to public interest challenges. As part of the Third Wave of Open Data, these often privately held datasets are being responsibly re-used through new governance models and cross-sector collaboration to generate public value at scale.

In our previous post, we shared emerging case studies across health, urban planning, the environment, and more. Several months later, the momentum has not only continued but diversified. New projects reaffirm NTD’s potential—especially when linked with traditional data, embedded in interdisciplinary research, and deployed in ways that are privacy-aware and impact-focused.

This update profiles recent initiatives that push the boundaries of what NTD can do. Together, they highlight the evolving domains where this type of data is helping to surface hidden inequities, improve decision-making, and build more responsive systems:

- Financial Inclusion

- Public Health and Well-Being

- Socioeconomic Analysis

- Transportation and Urban Mobility

- Data Systems and Governance

- Economic and Labor Dynamics

- Digital Behavior and Communication…(More)”.

Report by UNCTAD: “Frontier technologies, particularly artificial intelligence (AI), are profoundly transforming our economies and societies, reshaping production processes, labour markets and the ways in which we live and interact. Will AI accelerate progress towards the Sustainable Development Goals, or will it exacerbate existing inequalities, leaving the underprivileged further behind? How can developing countries harness AI for sustainable development? AI is the first technology in history that can make decisions and generate ideas on its own. This sets it apart from traditional technologies and challenges the notion of technological neutrality.

The rapid development of AI has also outpaced the ability of Governments to respond effectively. The Technology and Innovation Report 2025 aims to guide policymakers through the complex AI landscape and support them in designing science, technology and innovation (STI) policies that foster inclusive and equitable technological progress.

The world already has significant digital divides, and with the rise of AI, these could widen even further. In response, the Report argues for AI development based on inclusion and equity, shifting the focus from technology to people. AI technologies should complement rather than displace human workers, and production should be restructured so that the benefits are shared fairly among countries, firms and workers. It is also important to strengthen international collaboration, to enable countries to co-create inclusive AI governance.

The Report examines five core themes:

A. AI at the technological frontier

B. Leveraging AI for productivity and workers’ empowerment

C. Preparing to seize AI opportunities

D. Designing national policies for AI

E. Global collaboration for inclusive and equitable AI…(More)”

Book by Rahul Bhargava: “…new toolkit for data storytelling in community settings, one purpose-built for goals like inclusion, empowerment, and impact. Data science and visualization have spread into new domains they were not designed for: community organizing, education, journalism, civic governance, and more. The dominant computational methods and processes, which have not changed in response, are causing significant discriminatory and harmful impacts, documented by leading scholars across a variety of populations. Informed by 15 years of collaborations in academic and professional settings with nonprofits and marginalized populations, the book articulates a new approach for aligning the processes and media of data work with social good outcomes, learning from the practices of newspapers, museums, community groups, artists, and libraries.

This book introduces a community-driven framework as a response to the urgent need to realign data theories and methods around justice and empowerment to avoid further replicating harmful power dynamics and ensure everyone has a seat at the table in data-centered community processes. It offers a broader toolbox for working with data and presenting it, pushing beyond the limited vocabulary of surveys, spreadsheets, charts and graphs…(More)”.

Paper by Uri Y. Hacohen: “Data is often heralded as “the world’s most valuable resource,” yet its potential to benefit society remains unrealized due to systemic barriers in both public and private sectors. While open data, defined as data that is available, accessible, and usable, holds immense promise to advance open science, innovation, economic growth, and democratic values, its utilization is hindered by legal, technical, and organizational challenges. Public sector initiatives, such as U.S. and European Union open data regulations, face uneven enforcement and regulatory complexity, disproportionately affecting under-resourced stakeholders such as researchers. In the private sector, companies prioritize commercial interests and user privacy, often obstructing data openness through restrictive policies and technological barriers. This article proposes an innovative, four-layered policy framework to overcome these obstacles and foster data openness. The framework includes (1) improving open data infrastructures, (2) ensuring legal frameworks for open data, (3) incentivizing voluntary data sharing, and (4) imposing mandatory data sharing obligations. Each policy cluster is tailored to address sector-specific challenges and balance competing values such as privacy, property, and national security. Drawing from academic research and international case studies, the framework provides actionable solutions to transition from a siloed, proprietary data ecosystem to one that maximizes societal value. This comprehensive approach aims to reimagine data governance and unlock the transformative potential of open data…(More)”.

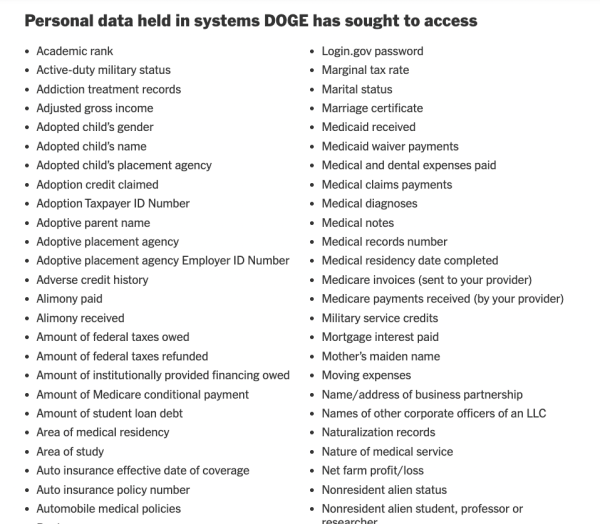

Article by Emily Badger and Sheera Frenkel: “The federal government knows your mother’s maiden name and your bank account number. The student debt you hold. Your disability status. The company that employs you and the wages you earn there. And that’s just a start. It may also know your …and at least 263 more categories of data. These intimate details about the personal lives of people who live in the United States are held in disconnected data systems across the federal government: some at the Treasury, some at the Social Security Administration and some at the Department of Education, among other agencies.

The Trump administration is now trying to connect the dots of that disparate information. Last month, President Trump signed an executive order calling for the “consolidation” of these segregated records, raising the prospect of creating a kind of data trove about Americans that the government has never had before, and that members of the president’s own party have historically opposed.

The effort is being driven by Elon Musk, the world’s richest man, and his lieutenants with the Department of Government Efficiency, who have sought access to dozens of databases as they have swept through agencies across the federal government. Along the way, they have elbowed past the objections of career staff, data security protocols, national security experts and legal privacy protections…(More)”.

Essay by Brian J. A. Boyd: “…How could stewardship of artificially living AI be pursued on a broader, even global, level? Here, the concept of “integral ecology” is helpful. Pope Francis uses the phrase to highlight the ways in which everything is connected, both through the web of life and in the sense that social, political, and environmental challenges cannot be solved in isolation. The immediate need for stewardship over AI is to ensure that its demands for power and industrial production are addressed in a way that benefits those most in need, rather than de-prioritizing them further. For example, the energy requirements to develop tomorrow’s AI should spur research into small modular nuclear reactors and updated distribution systems, making energy abundant rather than causing regressive harms by driving up prices on an already overtaxed grid. More broadly, we will need to find the right institutional arrangements and incentive structures to make AI Amistics possible.

We are having a painfully overdue conversation about the nature and purpose of social media, and tech whistleblowers like Tristan Harris have offered grave warnings about how the “race to the bottom of the brain stem” is underway in AI as well. The AI equivalent of the addictive “infinite scroll” design feature of social media will likely be engagement with simulated friends — but we need not resign ourselves to it becoming part of our lives as did social media. And as there are proposals to switch from privately held Big Data to a public Data Commons, so perhaps could there be space for AI that is governed not for maximizing profit but for being sustainable as a common-pool resource, with applications and protocols ordered toward long-run benefit as defined by local communities…(More)”.

Book by Andy J. Merolla and Jeffrey A. Hall: “We spend much of our waking lives communicating with others. How does each moment of interaction shape not only our relationships but also our worldviews? And how can we create moments of connection that improve our health and well-being, particularly in a world in which people are feeling increasingly isolated?

Drawing from their extensive research, Andy J. Merolla and Jeffrey A. Hall establish a new way to think about our relational life: as existing within “social biomes”—complex ecosystems of moments of interaction with others. Each interaction we have, no matter how unimportant or mundane it might seem, is a building block of our identities and beliefs. Consequently, the choices we make about how we interact and who we interact with—and whether we interact at all—matter more than we might know. Merolla and Hall offer a sympathetic, practical guide to our vital yet complicated social lives and propose realistic ways to embrace and enhance connection and hope…(More)”.

Article by UNDP: “Increasingly, AI techniques like natural language processing, machine learning and predictive analytics are being used alongside the most common methods in collective intelligence, from citizen science and crowdsourcing to digital democracy platforms.

At its best, AI can be used to augment and scale the intelligence of groups. In this section we describe the potential offered by these new combinations of human and machine intelligence. First we look at the applications that are most common, where AI is being used to enhance efficiency and categorize unstructured data, before turning to the emerging role of AI – where it helps us to better understand complex systems.

These are the three main ways AI and collective intelligence are currently being used together for the SDGs:

1. Efficiency and scale of data processing

AI is being effectively incorporated into collective intelligence projects where timing is paramount and a key insight is buried deep within large volumes of unstructured data. This combination of AI and collective intelligence is most useful when decision makers require an early warning to help them manage risks and distribute public resources more effectively. For example, Dataminr’s First Alert system uses pre-trained machine learning models to sift through text and images scraped from the internet, as well as other data streams, such as audio broadcasts, to isolate early signals that anticipate emergency events…(More)”. (See also: Where and when AI and CI meet: exploring the intersection of artificial and collective intelligence towards the goal of innovating how we govern).