Stefaan Verhulst

Why Is This Tool Unique?

- Only source of information on how foundations are supporting U.S. democracy

- Uses a common framework for understanding what activities foundations are funding

- Provides direct access to available funding data

- Delivers fresh and timely data every week

How Can You Use It?

- Understand who is funding what, where

- Analyze funder and nonprofit networks

- Compare foundation funding for issues you care about

- Support your knowledge about the field

- Discover new philanthropic partners…(More)”

Survey by Pew Global: “Signs of political discontent are increasingly common in many Western nations, with anti-establishment parties and candidates drawing significant attention and support across the European Union and in the United States. Meanwhile, as previous Pew Research Center surveys have shown, in emerging and developing economies there is widespread dissatisfaction with the way the political system is working.

As a new nine-country Pew Research Center survey on the strengths and limitations of civic engagement illustrates, there is a common perception that government is run for the benefit of the few, rather than the many, in both emerging democracies and more mature democracies that have faced economic challenges in recent years. In eight of nine nations surveyed, more than half say government is run for the benefit of only a few groups in society, not for all people.

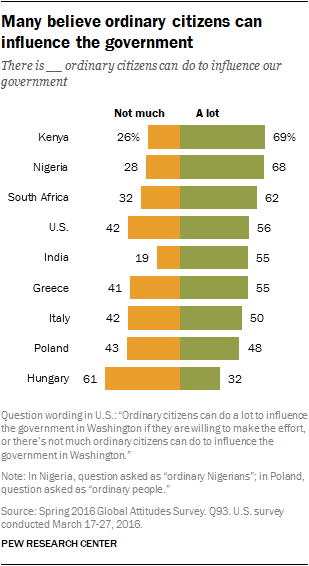

However, this skeptical outlook on government does not mean people have given up on democracy or the ability of average citizens to have an impact on how the country is run. Roughly half or more in eight nations – Kenya, Nigeria, South Africa, the U.S., India, Greece, Italy and Poland – say ordinary citizens can have a lot of influence on government. Hungary, where 61% say there is little citizens can do, is the lone nation where pessimism clearly outweighs optimism on this front.

Many people in these nine nations say they could potentially be motivated to become politically engaged on a variety of issues, especially poor health care, poverty and poor-quality schools. When asked what types of issues could get them to take political action, such as contacting an elected official or taking part in a protest, poor health care is the top choice among the six issues tested in six of eight countries. Health care, poverty and education constitute the top three motivators in all nations except India and Poland….(More)

Eric Gordon in Governing: “…But here’s the problem: The institutional language of engagement has been defined by its measurement. Chief engagement officers in corporations are measuring milliseconds on web pages, and clicks on ads, and not relations among people. This is disproportionately influencing the values of democracy and the responsibility of public institutions to protect them.

Too often, when government talks about engagement, it is talking about those things that are measurable, but it is providing mandates to employees imbued with ambiguity. For example, the executive order issued by Mayor Murray in Seattle is a bold directive for the “timely implementation by all City departments of equitable outreach and engagement practices that reaffirm the City’s commitment to inclusive participation.”

This extraordinary mayoral mandate reflects clear democratic values, but it lacks clarity of methods. It reflects a need to use digital technology to enhance process, but it doesn’t explain why. This in no way is meant as a criticism of Seattle’s effort; rather, it is simply meant to illustrate the complexity of engagement in practice. Departments are rewarded for quantifiable efficiency, not relationships. Just because something is called engagement, this fundamental truth won’t change.

Government needs to be much more clear about what it really means when it talks about engagement. In 2015, Living Cities and the Citi Foundation launched the City Accelerator on Public Engagement, which was an effort to source and support effective practices of public engagement in city government. This 18-month project, based on a cohort of five cities throughout the United States, is just now coming to an end. Out of it came several lasting insights, one of which I will share here. City governments are institutions in transition that need to ask why people should care.

After the election, who is going to care about government? How do you get people to care about the services that government provides? How do you get people to care about the health outcomes in their neighborhoods? How do you get people to care about ensuring accessible, high-quality public education?

I want to propose that when government talks about civic engagement, it is really talking about caring. When you care about something, you make a decision to be attentive to that thing. But “caring about” is one end of what I’ll call a spectrum of caring. On the other end, there is “caring for,” when, as described by philosopher Nel Noddings, “what we do depends not upon rules, or at least not wholly on rules — not upon a prior determination of what is fair or equitable — but upon a constellation of conditions that is viewed through both the eyes of the one-caring and the eyes of the cared-for.”

In short, caring-for is relational. When one cares for another, the outcomes of an encounter are not predetermined, but arise through relation….(More)”.

How does it do that? In a word: data.

Using a series of surveys and evaluations, Repair learned that once people participate in two volunteer opportunities, they’re more likely to continue volunteering regularly. Repair has used that and other findings to inform its operations and strategy, and to accelerate its work to encourage individuals to make an enduring commitment to public service.

Many purpose-driven organizations like Repair the World are committing more brainpower, time, and money to gathering data, and nonprofit and foundation professionals alike are recognizing the importance of that effort.

And yet there is a difference between just having data and using it well. Recent surveys have found that 94 percent of nonprofit professionals felt they were not using data effectively, and that 75 percent of foundation professionals felt that evaluations conducted by and submitted to grant makers did not provide any meaningful insights.

To remedy this, the Charles and Lynn Schusterman Family Foundation (one of Repair the World’s donors) developed the Data Playbook, a new tool to help more organizations harness the power of data to make smarter decisions, gain insights, and accelerate progress….

In the purpose-driven sector, our work is critically important for shaping lives and strengthening communities. Now is the time for all of us to commit to using the data at our fingertips to advance the broad range of causes we work on — education, health care, leadership development, social-justice work, and much more…

We are in this together. Let’s get started. (More)”

Caleb Crain in the New Yorker: “Roughly a third of American voters think that the Marxist slogan “From each according to his ability to each according to his need” appears in the Constitution. About as many are incapable of naming even one of the three branches of the United States government. Fewer than a quarter know who their senators are, and only half are aware that their state has two of them.

Democracy is other people, and the ignorance of the many has long galled the few, especially the few who consider themselves intellectuals. Plato, one of the earliest to see democracy as a problem, saw its typical citizen as shiftless and flighty:

Sometimes he drinks heavily while listening to the flute; at other times, he drinks only water and is on a diet; sometimes he goes in for physical training; at other times, he’s idle and neglects everything; and sometimes he even occupies himself with what he takes to be philosophy.

It would be much safer, Plato thought, to entrust power to carefully educated guardians. To keep their minds pure of distractions—such as family, money, and the inherent pleasures of naughtiness—he proposed housing them in a eugenically supervised free-love compound where they could be taught to fear the touch of gold and prevented from reading any literature in which the characters have speaking parts, which might lead them to forget themselves. The scheme was so byzantine and cockamamie that many suspect Plato couldn’t have been serious; Hobbes, for one, called the idea “useless.”

A more practical suggestion came from J. S. Mill, in the nineteenth century: give extra votes to citizens with university degrees or intellectually demanding jobs. (In fact, in Mill’s day, select universities had had their own constituencies for centuries, allowing someone with a degree from, say, Oxford to vote both in his university constituency and wherever he lived. The system wasn’t abolished until 1950.) Mill’s larger project—at a time when no more than nine per cent of British adults could vote—was for the franchise to expand and to include women. But he worried that new voters would lack knowledge and judgment, and fixed on supplementary votes as a defense against ignorance.

In the United States, élites who feared the ignorance of poor immigrants tried to restrict ballots. In 1855, Connecticut introduced the first literacy test for American voters. Although a New York Democrat protested, in 1868, that “if a man is ignorant, he needs the ballot for his protection all the more,” in the next half century the tests spread to almost all parts of the country. They helped racists in the South circumvent the Fifteenth Amendment and disenfranchise blacks, and even in immigrant-rich New York a 1921 law required new voters to take a test if they couldn’t prove that they had an eighth-grade education. About fifteen per cent flunked. Voter literacy tests weren’t permanently outlawed by Congress until 1975, years after the civil-rights movement had discredited them.

Worry about voters’ intelligence lingers, however. …In a new book, “Against Democracy” (Princeton), Jason Brennan, a political philosopher at Georgetown, has turned Estlund’s hedging inside out to create an uninhibited argument for epistocracy. Against Estlund’s claim that universal suffrage is the default, Brennan argues that it’s entirely justifiable to limit the political power that the irrational, the ignorant, and the incompetent have over others. To counter Estlund’s concern for fairness, Brennan asserts that the public’s welfare is more important than anyone’s hurt feelings; after all, he writes, few would consider it unfair to disqualify jurors who are morally or cognitively incompetent. As for Estlund’s worry about demographic bias, Brennan waves it off. Empirical research shows that people rarely vote for their narrow self-interest; seniors favor Social Security no more strongly than the young do. Brennan suggests that since voters in an epistocracy would be more enlightened about crime and policing, “excluding the bottom 80 percent of white voters from voting might be just what poor blacks need.”…(More)”

Mark Forman in NextGov: “…Whether agencies are implementing an application or enterprisewide solution, end-user input (from both citizens and government workers) is a requirement for success. In fact, the only path to success in digital government is the “moment of truth,” the point of interaction when a government delivers a service or solves a problem for its citizens.

A recent example illustrates this challenge. A national government recently deployed a new application that enables citizens to submit questions to agency offices using their mobile devices. The mobile application, while functional and working to specifications, failed to address the core issue: Most citizens prefer asking questions via email, an option that was terminated when the new app was deployed.

Digital technologies offer government agencies numerous opportunities to cut costs and improve citizen services. But in the rush to implement new capabilities, IT professionals often neglect to consider fully their users’ preferences, knowledge, limitations and goals.

When developing new ways to deliver services, designers must expand their focus beyond the agency’s own operating interests to ensure they also create a satisfying experience for citizens. If not, the applications will likely be underutilized or even ignored, thus undermining the anticipated cost-savings and performance gains that set the project in motion.

Government executives also must recognize that merely relying on user input creates a risk of “paving the cowpath”: innovations cannot significantly improve the customer experience if users do not recognize the value of new technologies in simplifying, making more worthwhile, or eliminating a task.

Many digital government playbooks and guidance direct IT organizations to create a satisfying citizen experience by incorporating user-centered design methodology into their projects. UCD is a process for ensuring a new solution or tool is designed from the perspective of users. Rather than forcing government workers or the public to adapt to the new solution, UCD helps create a solution tailored to their abilities, preferences and needs….effective UCD is built upon four primary principles or guidelines:

- Focus on the moment of truth. A new application or service must actually be something that citizens want and need via the channel used, and not just easy to use.

- Optimize outcomes, not just processes. True transformation occurs when citizens’ expectations and needs remain the constant center of focus. Merely overlaying new technology on business as usual may provide a prettier interface, but success requires a clear benefit for the public at the moment of truth in the interaction with government.

- Evolve processes over time to help citizens adapt to new applications. In most instances, citizens will make a smoother transition to new services when processes are changed gradually to be more intuitive rather than with an abrupt, flip-of-the-switch approach.

- Combine UCD with robust DevOps. Agencies need a strong DevOps process to incorporate what they learn about citizens’ preferences and needs as they develop, test and deploy new citizen services….(More)”

Nigel Shadbolt in The Guardian: “…here are three areas where action by the UK government can help to support and promote a flourishing open data economy:

Strengthen our data infrastructure

We are used to thinking of areas like transport and energy requiring physical infrastructure. From roads and rail networks to the national grid and power stations, we understand that investment and management of these vital parts of an infrastructure are essential to the economic wellbeing and future prosperity of the nation.

This is no less true of key data assets. Our data infrastructure is a core part of our national infrastructure. From lists of legally constituted companies to the country’s geospatial data, our data infrastructure needs to be managed, maintained, in some cases built and in all cases made as widely available as possible.

To maximise the benefits to the UK’s economy and to reduce costs in delivery of public services, the data we rely on needs to be adaptable, trustworthy, and as open as possible….

While we do have some excellent examples of infrastructure data from the likes of Companies House, Land Registry, Ordnance Survey and Defra, core parts of the data infrastructure that we need within the UK are missing, unreliable, or of a low quality. The government must invest here just as it invests in our other traditional infrastructure.

Support and promote data innovation

If we are to make best use of data, we need a bridge between academic research, public, private and third sectors, and a thriving startup ecosystem where new ideas and approaches can grow.

We have learned that properly targeted challenges could identify potential savings for government – similar to Prescribing Analytics, an ODI-incubated startup which used publicly available data to identify £200m in prescriptions savings per year for the NHS – but, more importantly, translate that potential into procurable products and services that could deliver those savings.

A data challenge series run at a larger scale, funded by Innovate UK, openly contested and independently managed, would stimulate the creation of new companies, jobs, products and services. It would also act as a forcing function to strengthen data infrastructure around key challenges, and raise awareness and capacity for those working to solve them. The data needed to satisfy the challenges would have to be made available and usable, bringing data innovation into government and bolstering the offer of the startups and SMEs who take part.

Invest in data literacy

In order to take advantage of the data revolution, policymakers, businesses and citizens need to understand how to make use of data. In other words, they must become data literate.

Data literacy is needed through our whole educational system and society more generally. Crucially, policymakers are going to need to be informed by insights that can only be gleaned through understanding and analysing data effectively….(More)”

“The advent of social apps, smart phones and ubiquitous computing has brought a great transformation to our day-to-day life. The incredible pace with which new and disruptive services continue to emerge challenges our perception of privacy. To keep pace with this rapidly evolving cyber reality, we need to devise agile methods and frameworks for developing privacy-preserving systems that align with users’ evolving privacy expectations.

Previous efforts have tackled this with the assumption that privacy norms are provided through existing sources such as law, privacy regulations and legal precedents. They have focused on formally expressing privacy norms and devising a corresponding logic to enable automatic inconsistency checks and efficient enforcement of the logic.

However, because many of the existing regulations and privacy handbooks were enacted well before the Internet revolution took place, they often lag behind and do not adequately reflect the application of logic in modern systems. For example, the Family Educational Rights and Privacy Act (FERPA) was enacted in 1974, long before Facebook, Google and many other online applications were used in an educational context. More recent legislation faces similar challenges as novel services introduce new ways to exchange information, and consequently shape new, unconsidered information flows that can change our collective perception of privacy.

Crowdsourcing Contextual Privacy Norms

Armed with the theory of Contextual Integrity (CI) in our work, we are exploring ways to uncover societal norms by leveraging the advances in crowdsourcing technology.

In our recent paper, we present the methodology that we believe can be used to extract a societal notion of privacy expectations. The results can be used to fine tune the existing privacy guidelines as well as get a better perspective on the users’ expectations of privacy.

CI defines privacy as a collection of norms (privacy rules) that reflect appropriate information flows between different actors. Norms capture who shares what, with whom, in what role, and under which conditions. For example, while you are comfortable sharing your medical information with your doctor, you might be less inclined to do so with your colleagues.

We use CI as a proxy to reason about privacy in the digital world and a gateway to understanding how people perceive privacy in a systematic way. Crowdsourcing is a great tool for this method. We are able to ask hundreds of people how they feel about a particular information flow, and then we can capture their input and map it directly onto the CI parameters. We used a simple template to write Yes-or-No questions to ask our crowdsourcing participants:

“Is it acceptable for the [sender] to share the [subject’s] [attribute] with [recipient] [transmission principle]?”

For example:

“Is it acceptable for the student’s professor to share the student’s record of attendance with the department chair if the student is performing poorly?”

In our experiments, we leveraged Amazon’s Mechanical Turk (AMT) to ask 450 turkers over 1400 such questions. Each question represents a specific contextual information flow that users can approve, disapprove or mark under the Doesn’t Make Sense category; the last category could be used when 1) the sender is unlikely to have the information, 2) the receiver would already have the information, or 3) the question is ambiguous….(More)”
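The question template and the three response categories described above can be sketched in a few lines of Python. This is only an illustration, not the authors' tooling: the names `InformationFlow`, `build_flows`, `tally` and the response labels are hypothetical, and the sketch assumes each CI parameter is supplied as a plain phrase that slots into the template as-is.

```python
from collections import Counter
from dataclasses import dataclass
from itertools import product

# Hypothetical labels for the three answer categories named in the text.
RESPONSES = ("approve", "disapprove", "doesnt_make_sense")


@dataclass(frozen=True)
class InformationFlow:
    """One contextual information flow, described by the five CI parameters."""
    sender: str
    subject: str
    attribute: str
    recipient: str
    transmission_principle: str

    def to_question(self) -> str:
        # Fill the Yes-or-No template quoted in the text.
        return (
            f"Is it acceptable for the {self.sender} to share the "
            f"{self.subject}'s {self.attribute} with {self.recipient} "
            f"{self.transmission_principle}?"
        )


def build_flows(senders, subjects, attributes, recipients, principles):
    """Enumerate every combination of the supplied parameter values."""
    return [
        InformationFlow(*combo)
        for combo in product(senders, subjects, attributes, recipients, principles)
    ]


def tally(answers):
    """Count answers per category, rejecting labels outside RESPONSES."""
    counts = Counter(answers)
    unknown = set(counts) - set(RESPONSES)
    if unknown:
        raise ValueError(f"unexpected response labels: {sorted(unknown)}")
    return {label: counts[label] for label in RESPONSES}


flow = InformationFlow(
    sender="student's professor",
    subject="student",
    attribute="record of attendance",
    recipient="the department chair",
    transmission_principle="if the student is performing poorly",
)
print(flow.to_question())
```

Enumerating all combinations is a simplification: some generated flows will be implausible, which is the kind of case the Doesn't Make Sense category is meant to absorb.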