Stefaan Verhulst

Report by Access Partnerships: “AI is reshaping work at a pace that most labor market information systems were not built to measure. Against this backdrop, the pressing question is no longer simply ‘who works where?’ but what people actually do, what skills they use, and how AI is changing tasks inside roles.

Today, many countries still rely on infrequent surveys, broad occupational categories, and siloed administrative datasets. That makes it harder to spot early signals of changing skills demand, target training investment, or support employers and workers as AI adoption accelerates.

Modernizing labor market data for the AI age

Our report, developed in partnership with Workday, helps governments modernize labor market data systems to better navigate AI-driven change. It establishes a global baseline across 21 countries, identifies system gaps, and sets out a practical pathway to strengthen readiness over time.

At the center is a maturity framework benchmarking countries across six dimensions of AI-ready labor market data: Forecasting readiness, Labor market granularity, Accessibility, Interoperability and integration, and Real-time responsiveness (FLAIR)…(More)”.

Paper by Anush Ganesh and Krusha Bhatt: “As society advances toward a digital economy with increasing dependence on internet-based services, data has attained prominence as an essential currency supporting market power. This paper examines the emerging jurisprudence on excessive data collection by dominant digital platforms, comparing approaches developed in India and the European Union. The Indian approach, exemplified by the WhatsApp Privacy (2025) decision, integrates competition law with constitutional protections, particularly the right to privacy under Article 21 of the Indian Constitution. Meanwhile, the European approach, crystallized in the Facebook Germany case, integrates competition law with data protection principles enshrined in the General Data Protection Regulation (GDPR). Despite their different legal foundations, these approaches display convergence in recognizing that dominant platforms’ data collection practices can constitute abusive exploitation of market power. This paper argues that this convergence creates opportunities for a unified analytical framework that respects jurisdictional diversity while enabling more effective global platform regulation…(More)”.

Paper by Daniel Thilo Schroeder et al.: “Advances in artificial intelligence (AI) offer the prospect of manipulating beliefs and behaviors on a population-wide level. Large language models (LLMs) and autonomous agents let influence campaigns reach unprecedented scale and precision. Generative tools can expand propaganda output without sacrificing credibility and inexpensively create falsehoods that are rated as more human-like than those written by humans. Techniques meant to refine AI reasoning, such as chain-of-thought prompting, can be used to generate more convincing falsehoods. Enabled by these capabilities, a disruptive threat is emerging: swarms of collaborative, malicious AI agents. Fusing LLM reasoning with multiagent architectures, these systems are capable of coordinating autonomously, infiltrating communities, and fabricating consensus efficiently. By adaptively mimicking human social dynamics, they threaten democracy. Because the resulting harms stem from design, commercial incentives, and governance, we prioritize interventions at multiple leverage points, focusing on pragmatic mechanisms over voluntary compliance…(More)”.

Article by EIT Urban Mobility: “Cities want to improve urban mobility for residents and achieve their urgent climate goals, but with a dizzying array of possibilities and local constraints, how can local authorities make sure they invest in solutions that respond to users’ real needs? More than that, how can they respond to residents’ true behaviours, not just their reported ones?

Whether it’s winning public trust in the shift to autonomous transport or supporting local communities to be less dependent on cars, citizen engagement puts local communities at the centre of urban transformation, enabling residents to define their unique needs. …

Take the city of Nantes in western France. The city’s urban development agency Samoa wanted to make it easier for people to get around without a car in situations where cycling is not an option, for example for people with reduced mobility or when transporting heavy items.

Supported by EIT Urban Mobility’s RAPTOR programme, Samoa worked with active mobility startup Sanka Cycle to pilot its electric-assisted ‘BOB’ light vehicle to see if and how it could be deployed to replace traditional internal combustion vehicle trips.

In collaboration with urban change agency Humankind, the project deployed citizen engagement tools to better understand how users perceived the light vehicle’s value and what motivators or barriers could influence its acceptance.

Through a mix of field observations and in-depth and flash interviews, Samoa and Sanka Cycle gained valuable insights into how people saw the BOB vehicles compared with other forms of mobility, in order to explore how the vehicles might act as a bridge between cars and bicycles. …

Citizen engagement is increasingly gaining traction as a key tool in supporting the shift to sustainable urban mobility.

For the Interact project, which developed an external Human-Machine Interface (eHMI) for automated public transport buses, communication with passengers and their trust were central to a successful and ethical deployment. The core challenge, however, was that once the safety driver was removed from the buses, the soft skills drivers bring to the role, including their communication with passengers, would be lost as well. Learning how passengers felt about safety and trust was therefore essential for making this new form of sustainable mobility socially acceptable.

Thus, Interact implemented citizen engagement with Humankind through on-site research and guerrilla testing, observations and interviews, and a digital survey in the pilot sites of Rotterdam, the Netherlands, and Stavanger, Norway. The activity engaged both passengers and safety drivers through interviews to capture their unique perspectives. Important lessons were also learned about the nuances of how people felt about the technology.

In Stavanger, for instance, passengers raised concerns about how extreme weather would impact the system and brought up past experiences with automated systems failing as temperatures dropped…(More)”.

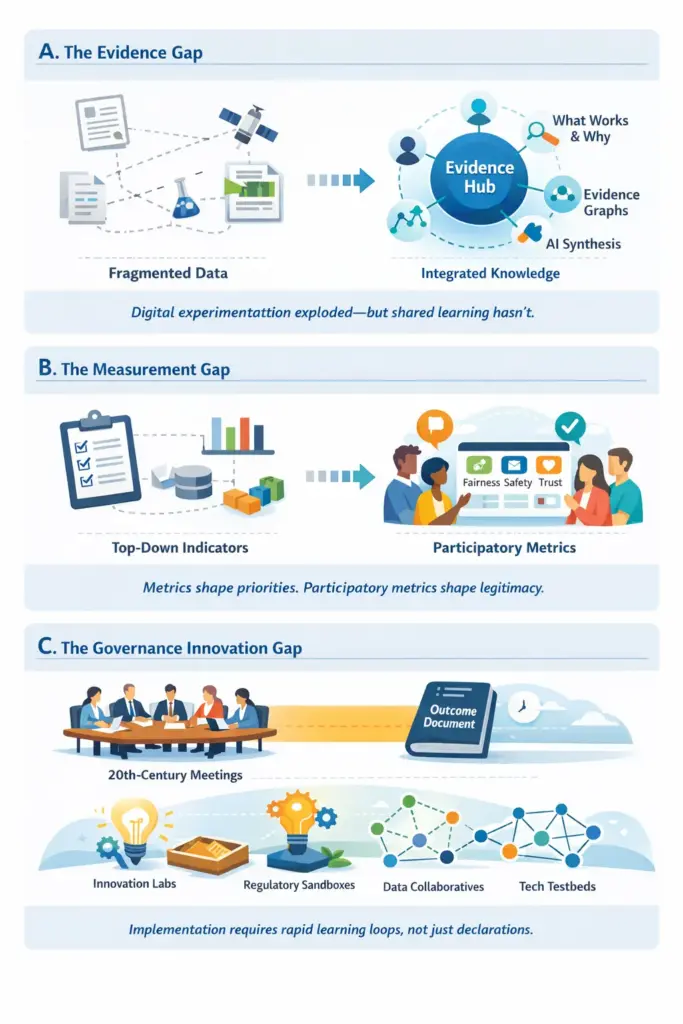

Article by Lea Kaspar and Stefaan G. Verhulst: “As the dust settles on the World Summit on the Information Society (WSIS) 20-year Review, observers are once again asking what the final outcome document—adopted by consensus on 17 December 2025—really delivers. Much like the WSIS+10 review a decade earlier, negotiations were characterized by pragmatism rather than ambition and by a desire to preserve the WSIS framework amid a radically altered technological landscape. And, as in previous cycles, the most critical questions were pushed to the margins in the final days of negotiation, leaving implementation—not text—as the arena where credibility will be tested.

Whether WSIS remains a living framework for digital cooperation—or recedes into diplomatic ritual—will depend less on consensus language and more on the ability of governments, civil society, researchers, and private actors to translate commitments into accountable practice.

This was the core point emphasized during the WSIS+20 High-Level Event intervention: legitimacy in digital governance is not earned by adoption, but by delivery. Participation, rights-anchoring, and accountability are needed at every stage of implementation—not just during negotiation.

Yet twenty years after Geneva and Tunis, the WSIS process still relies on a governance and operational model conceived for another era. The world now operates in a datafied, AI-augmented environment defined by real-time systems, cross-border infrastructures, and unprecedented concentration of digital power. The question is not whether WSIS principles remain relevant—they do—but whether the mechanisms used to operationalize them are fit for purpose.

Below, we’ll outline the same core challenges and opportunities we identified a decade ago, but updated with the realities, tools, and governance needs of 2026…(More)”.

Article by Lauren Leek: “I needed a restaurant recommendation, so I did what every normal person would do: I scraped every single restaurant in Greater London and built a machine-learning model.

It started as a very reasonable problem. I was tired of doom-scrolling Google Maps, trying to disentangle genuinely good food from whatever the algorithm had decided to push at me that day. Somewhere along the way, the project stopped being about dinner and became about something slightly more unhinged: how digital platforms quietly redistribute economic survival across cities.

Because once you start looking at London’s restaurant scene through data, you stop seeing all those cute independents and hot new openings. You start seeing an algorithmic market – one where visibility compounds, demand snowballs, and who gets to survive is increasingly decided by code.

Google Maps Is Not a Directory. It’s a Market Maker.

The public story of Google Maps is that it passively reflects “what people like.” More stars, more reviews, better food. But that framing obscures how the platform actually operates. Google Maps is not just indexing demand – it is actively organising it through a ranking system built on a small number of core signals that Google itself has publicly acknowledged: relevance, distance, and prominence.

“Relevance” is inferred from text matching between your search query and business metadata. “Distance” is purely spatial. But “prominence” is where the political economy begins. Google defines prominence using signals such as review volume, review velocity, average rating, brand recognition, and broader web visibility. In other words, it is not just what people think of a place – it is how often people interact with it, talk about it, and already recognise it…(More)”.
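
Google does not publish how these signals are combined, so what follows is only a toy sketch, in Python, of the compounding dynamic the article describes. The `Restaurant` fields, the `score` weights, the log-scaled review term standing in for prominence, and the fixed number of new reviews each rank attracts per round are all hypothetical assumptions, not Google's method.

```python
import math
from dataclasses import dataclass


@dataclass
class Restaurant:
    name: str
    relevance: float    # hypothetical 0..1 text-match score against the query
    distance_km: float  # distance from the searcher
    rating: float       # average star rating, 1..5
    reviews: int        # review volume, one of the inputs to "prominence"


def score(r: Restaurant, w_rel: float = 1.0, w_dist: float = 0.5,
          w_prom: float = 1.0) -> float:
    """Toy ranking score; the weights and formula are hypothetical, not Google's."""
    # Prominence grows with rating and with (log-scaled) review volume.
    prominence = (r.rating / 5.0) * math.log1p(r.reviews)
    return w_rel * r.relevance - w_dist * r.distance_km + w_prom * prominence


def simulate(restaurants: list[Restaurant], rounds: int = 10,
             new_reviews_by_rank: tuple[int, ...] = (5, 3, 2)) -> list[Restaurant]:
    """Each round, higher-ranked places attract more diners and hence more reviews.

    Mutates review counts in place and returns the final ranking.
    """
    for _ in range(rounds):
        ranked = sorted(restaurants, key=score, reverse=True)
        # Places ranked below the listed slots gain no new reviews this round.
        for r, gained in zip(ranked, new_reviews_by_rank):
            r.reviews += gained
    return sorted(restaurants, key=score, reverse=True)
```

In this toy model, three otherwise identical places that start 20 reviews apart (120, 100, 80) end ten rounds with 170, 130, and 100 reviews: the gap between the top two doubles to 40 without any change in food quality. Under the same assumed weights, a 4.3-star place with 400 reviews also outscores a 4.9-star place with 15.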

Article by Cosima Lenz, Stefaan Verhulst, and Roshni Singh: “Women’s health has long been constrained not simply by a lack of research or investment, but by the absence of a clear, collectively defined set of priorities. Across the field, the most urgent questions – those that reflect women’s lived experiences, diverse needs and evolving health challenges – are too often unidentified, under-articulated or overshadowed by legacy agendas. Consequently, decision-makers struggle to allocate resources effectively, researchers work without a shared compass and innovation efforts risk overlooking the areas of greatest impact.

That’s why identifying and prioritising the questions that matter is essential. Questions shape what gets studied, what gets measured and whose needs are addressed. Yet historically, these questions have been selected in fragmented or opaque ways, driven by the interests of a limited set of stakeholders. This has left major evidence gaps, particularly in areas that disproportionately affect women, and has perpetuated inconsistencies in diagnosis, treatment and care…

The GovLab’s 100 Questions Initiative is such a global, multi-phase process that seeks to collectively identify and prioritise the ten most consequential questions in a given field. In partnership with CEPS, and with support from the Gates-funded Research & Innovation (R&I) project, this methodology was applied to women’s health innovation to define the Top 10 Questions guiding future research and innovation.

The process combined topic mapping with experts across research, policy, technology and advocacy, followed by collecting and refining candidate questions to address gaps in evidence, practice and lived experience. More than 70 global domain and data experts contributed, followed by a public voting phase that prioritised questions seen as both urgent and actionable. The full methodology is detailed in a pre-publication report on SSRN.

The Top 10 Questions are diagnostic tools, revealing evidence gaps, system failures and persistent assumptions shaping women’s health. By asking better questions, the initiative creates the conditions for more relevant research and improved outcomes…(More)”.

Article by Anirudh Dinesh: “Only last week, the state government of Madhya Pradesh signed a partnership with Digital India’s Bhashini Division (DIBD) to integrate multilingual AI tools across the state’s digital governance platforms. The agreement, formalized at a regional AI conference in the capital city Bhopal, aims to enable citizens to interact with public services in their own languages rather than defaulting to English or Hindi.

While most mainstream AI systems are trained primarily on English and optimized for English-speaking contexts, the Bhashini division is building infrastructure specifically designed for linguistic diversity – treating language access not as an afterthought but as foundational to building inclusive AI. This is part of a broader movement in India around building Digital Public Infrastructure (DPI) shaped by openness, accessibility, and inclusion.

Bhashini, short for “BHASHa INterface for India”, was launched by Prime Minister Modi in 2022. It is a platform that treats language access as public infrastructure exposed through standardized APIs, combining translation across all 22 constitutionally recognized Indian languages, speech recognition, and text-to-speech tools that developers, governments, and nonprofits can use freely.
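
Bhashini's actual endpoints and payload formats are not described in this excerpt, and the sketch below makes no attempt to reproduce them. Under hypothetical interfaces (`SpeechRecognizer`, `Translator`, `SpeechSynthesizer`) and toy stand-ins, it only illustrates how speech recognition, translation, and text-to-speech can be composed behind standard interfaces, so that any conforming provider can be swapped in.

```python
from dataclasses import dataclass
from typing import Protocol


# Hypothetical interfaces; these are not Bhashini's real API shapes.
class SpeechRecognizer(Protocol):
    def transcribe(self, audio: bytes, lang: str) -> str: ...


class Translator(Protocol):
    def translate(self, text: str, src: str, tgt: str) -> str: ...


class SpeechSynthesizer(Protocol):
    def synthesize(self, text: str, lang: str) -> bytes: ...


@dataclass
class SpeechToSpeechPipeline:
    """Chains ASR -> translation -> TTS so a user can speak in one language
    and hear the answer in another."""
    asr: SpeechRecognizer
    mt: Translator
    tts: SpeechSynthesizer

    def run(self, audio: bytes, src: str, tgt: str) -> bytes:
        text = self.asr.transcribe(audio, src)
        translated = self.mt.translate(text, src, tgt)
        return self.tts.synthesize(translated, tgt)


# Toy stand-ins so the sketch runs without any network service.
class FakeASR:
    def transcribe(self, audio: bytes, lang: str) -> str:
        return audio.decode("utf-8")  # pretend the "audio" is already text


class DictTranslator:
    def __init__(self, table: dict[tuple[str, str], dict[str, str]]) -> None:
        self.table = table

    def translate(self, text: str, src: str, tgt: str) -> str:
        words = self.table.get((src, tgt), {})
        # Word-by-word lookup; unknown words pass through unchanged.
        return " ".join(words.get(w, w) for w in text.split())


class FakeTTS:
    def synthesize(self, text: str, lang: str) -> bytes:
        return f"[{lang}] {text}".encode("utf-8")  # placeholder for audio
```

For example, `SpeechToSpeechPipeline(FakeASR(), DictTranslator({("en", "hi"): {"hello": "namaste"}}), FakeTTS()).run(b"hello citizen", "en", "hi")` returns `b"[hi] namaste citizen"`. Keeping each capability behind its own interface is what lets a state portal or a nonprofit app reuse the same pipeline while swapping providers.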

Crowdsourced Translations: BhashaDaan

Modern AI learns from vast quantities of data. But for many Indian languages, such as Konkani and Bodo, that data does not exist digitally. BhashaDaan means “Language Donation.”…(More)”.

Report by OneData: “Data is the lifeblood of the 21st-century economy: powering decisions, fueling economic growth, shaping algorithms, and spurring scientific innovation.

But vast inequalities have emerged, and not just in financial wealth. Access to data, and the ability to extract meaning from it, is deeply unequal. So are the frequency, quality, and availability of answers to basic questions in large parts of the world. Take the world’s public finance data, for instance. It is fragmented and often outdated, hard to find and use, and locked in formats and products that don’t reach the people who need them. As a result, the “last mile” from data to usable insight is weak, forcing decision-makers and advocates to frequently rely on partial evidence, outdated snapshots, or anecdotes.

A growing set of initiatives point to a different model, one that moves away from static reports toward a living evidence infrastructure, with shared data backbones, reusable analytical workflows, and applications designed around real policy tasks. This paper describes the problem and this emerging approach, and highlights ONE Data as one example of how organisations are attempting to reduce friction between credible evidence and day-to-day policy, media, and advocacy work…(More)”.

Press Release (Canada): “The People’s Consultation on AI launches today, a collaborative civil society initiative created in response to the federal government’s failure to provide a meaningful consultation process during the development of a national AI strategy in October 2025.

In 2025, the government hastily assembled a task force to develop its national AI strategy. It was heavily skewed towards industry, with very few participants able to speak to the broader ethical, social and political implications of the technology.

An accompanying public consultation allowed just 30 days for feedback, impeding those most impacted by AI from effectively participating. The government’s consultation survey questions prioritized economic benefits over AI’s many negative impacts and responses are now being assessed by AI rather than public officials.

These and other shortcomings were detailed in an open letter signed by over 160 civil society organizations and experts in October 2025, protesting the government’s “national sprint” on AI and documenting the many negative impacts that are already occurring as AI technologies become embedded in every aspect of Canadian society.

The People’s Consultation on AI offers a meaningful alternative. Beginning today, public interest groups, academics, impacted communities, and people across Canada can have their say on whether–and how–AI should be adopted and governed in Canada.

Designed for broad participation, the People’s Consultation on AI welcomes everything from the results of neighbourhood discussions about AI’s everyday impacts to in-depth expert analyses. The consultation website provides resources on current implications of AI alongside general guidance and community facilitation tools to help people craft submissions collaboratively…(More)”.