Stefaan Verhulst

Anne-Marie Slaughter in the Financial Times: “It is time to celebrate a new breed of triathletes, who work in technology. When I was dean in the public affairs school at Princeton, I would tell students to aim to work in the public, private and civic sectors over the course of their careers.

Solving public problems requires collaboration among government, business and civil society. Aspiring problem solvers need the culture and language of all three sectors and to develop a network of contacts in each.

The public problems we face, in the US and globally, require lawyers, economists and issue experts but also technologists. A lack of technologists capable of setting up HealthCare.gov, a website designed to implement the Affordable Care Act, led President Barack Obama to create the US Digital Service, which deploys Swat tech teams to address specific problems in government agencies.

But functioning websites that deliver government services effectively are only the most obvious technological need for the public sector.

Government can reinvent how it engages with citizens entirely, for example by personalising public education with digital feedback or training jobseekers. But where to find the talent? The market for engineers, designers and project managers sees big tech companies competing for graduates from the world’s best universities.

Governments can offer only a fraction of those salaries, combined with a rigid work environment, ingrained resistance to innovation and none of the amenities and perks so dear to Silicon Valley.

Government’s comparative advantage, however, is mission and impact, which is precisely what Todd Park sells…Still, demand outstrips supply. ….The goal is to create an ecosystem for public interest technology comparable to that in public interest law. In the latter, a number of American philanthropists created role models, educational opportunities and career paths for aspiring lawyers who want to change the world.

That process began in the 1960s, and today every great law school has a public interest programme with scholarships for the most promising students. Many branches of government take on top law school graduates. Public interest lawyers coming out of government find jobs with think-tanks and advocacy organisations and take up research fellowships, often at the law schools that educated them. When they need to pay the mortgage or send their kids to college, they can work at large law firms with pro bono programmes….We need much more. Every public policy school at a university with a computer science, data science or technology design programme should follow suit. Every think-tank should also become a tech tank. Every non-governmental organisation should have at least one technologist on staff. Every tech company should have a pro bono scheme rewarding public interest work….(More)”

Extract from Homo Deus: A Brief History of Tomorrow by Yuval Noah Harari: “There’s an emerging market called Dataism, which venerates neither gods nor man – it worships data. From a Dataist perspective, we may interpret the entire human species as a single data-processing system, with individual humans serving as its chips. If so, we can also understand the whole of history as a process of improving the efficiency of this system, through four basic methods:

1. Increasing the number of processors. A city of 100,000 people has more computing power than a village of 1,000 people.

2. Increasing the variety of processors. Different processors may use diverse ways to calculate and analyse data. Using several kinds of processors in a single system may therefore increase its dynamism and creativity. A conversation between a peasant, a priest and a physician may produce novel ideas that would never emerge from a conversation between three hunter-gatherers.

3. Increasing the number of connections between processors. There is little point in increasing the mere number and variety of processors if they are poorly connected. A trade network linking ten cities is likely to result in many more economic, technological and social innovations than ten isolated cities.

4. Increasing the freedom of movement along existing connections. Connecting processors is hardly useful if data cannot flow freely. Just building roads between ten cities won’t be very useful if they are plagued by robbers, or if some autocratic despot doesn’t allow merchants and travellers to move as they wish.

These four methods often contradict one another. The greater the number and variety of processors, the harder it is to freely connect them. The construction of the sapiens data-processing system accordingly passed through four main stages, each of which was characterised by an emphasis on different methods.

The first stage began with the cognitive revolution, which made it possible to connect unlimited sapiens into a single data-processing network. This gave sapiens an advantage over all other human and animal species. Although there is a limit to the number of Neanderthals, chimpanzees or elephants you can connect to the same net, there is no limit to the number of sapiens.

Sapiens used their advantage in data processing to overrun the entire world. However, as they spread into different lands and climates they lost touch with one another, and underwent diverse cultural transformations. The result was an immense variety of human cultures, each with its own lifestyle, behaviour patterns and world view. Hence the first phase of history involved an increase in the number and variety of human processors, at the expense of connectivity: 20,000 years ago there were many more sapiens than 70,000 years ago, and sapiens in Europe processed information differently from sapiens in China. However, there were no connections between people in Europe and China, and it would have seemed utterly impossible that all sapiens may one day be part of a single data-processing web.

The second stage began with agriculture and continued until the invention of writing and money. Agriculture accelerated demographic growth, so the number of human processors rose sharply, while simultaneously enabling many more people to live together in the same place, thereby generating dense local networks that contained an unprecedented number of processors. In addition, agriculture created new incentives and opportunities for different networks to trade and communicate.

Nevertheless, during the second phase, centrifugal forces remained predominant. In the absence of writing and money, humans could not establish cities, kingdoms or empires. Humankind was still divided into innumerable little tribes, each with its own lifestyle and world view. Uniting the whole of humankind was not even a fantasy.

The third stage kicked off with the appearance of writing and money about 5,000 years ago, and lasted until the beginning of the scientific revolution. Thanks to writing and money, the gravitational field of human co-operation finally overpowered the centrifugal forces. Human groups bonded and merged to form cities and kingdoms. Political and commercial links between different cities and kingdoms also tightened. At least since the first millennium BC – when coinage, empires, and universal religions appeared – humans began to consciously dream about forging a single network that would encompass the entire globe.

This dream became a reality during the fourth and last stage of history, which began around 1492. Early modern explorers, conquerors and traders wove the first thin threads that encompassed the whole world. In the late modern period, these threads were made stronger and denser, so that the spider’s web of Columbus’s days became the steel and asphalt grid of the 21st century. Even more importantly, information was allowed to flow increasingly freely along this global grid. When Columbus first hooked up the Eurasian net to the American net, only a few bits of data could cross the ocean each year, running the gauntlet of cultural prejudices, strict censorship and political repression.

But as the years went by, the free market, the scientific community, the rule of law and the spread of democracy all helped to lift the barriers. We often imagine that democracy and the free market won because they were “good”. In truth, they won because they improved the global data-processing system.

So over the last 70,000 years humankind first spread out, then separated into distinct groups and finally merged again. Yet the process of unification did not take us back to the beginning. When the different human groups fused into the global village of today, each brought along its unique legacy of thoughts, tools and behaviours, which it collected and developed along the way. Our modern larders are now stuffed with Middle Eastern wheat, Andean potatoes, New Guinean sugar and Ethiopian coffee. Similarly, our language, religion, music and politics are replete with heirlooms from across the planet.

If humankind is indeed a single data-processing system, what is its output? Dataists would say that its output will be the creation of a new and even more efficient data-processing system, called the Internet-of-All-Things. Once this mission is accomplished, Homo sapiens will vanish….(More)”

John Buntin at Governing: “…A generation ago, governments across the United States embarked on ambitious efforts to use performance measures to “reinvent” how government worked. Much of the inspiration for this effort came from the bestselling 1992 book Reinventing Government: How the Entrepreneurial Spirit Is Transforming the Public Sector by veteran city manager Ted Gaebler and journalist David Osborne. Gaebler and Osborne challenged one of the most common complaints about public administration — that government agencies were irredeemably bureaucratic and resistant to change. The authors argued that that need not be the case. Government managers and employees could and should, the authors wrote, be as entrepreneurial as their private-sector counterparts. This meant embracing competition; measuring outcomes rather than inputs or processes; and insisting on accountability.

For public-sector leaders, Gaebler and Osborne’s book was a revelation. “I would say it has been the most influential book of the past 25 years,” says Robert J. O’Neill Jr., the executive director of the International City/County Management Association (ICMA). At the federal level, Reinventing Government inspired Vice President Al Gore’s National Performance Review. But it had its greatest impact on state and local governments. Public-sector officials across the country read Reinventing Government and ingested its ideas. Osborne joined the consulting firm Public Strategies Group and began hiring himself out as an adviser to governments.

There’s no question states and localities function differently today than they did 25 years ago. Performance management systems, though not universally beloved, have become widespread. Departments and agencies routinely measure customer satisfaction. Advances in information technology have allowed governments to develop and share outcomes more easily than ever before. Some watchdog groups consider linking outcomes to budgets — also known as performance-based budgeting — to be a best practice. Government executives in many places talk about “innovation” as if they were Silicon Valley executives. This represents real, undeniable change.

Yet despite a generation of reinvention, government is less trusted than ever before. Performance management systems are sometimes seen not as an instrument of reform but as an obstacle to it. Performance-based budgeting has had successes, but they have rarely been sustained. Some of the most innovative efforts to improve government today are pursuing quite different approaches, emphasizing grassroots employee initiatives rather than strict managerial accountability. All of this raises a question: Has the reinventing government movement left a legacy of greater effectiveness, or have the systems it generated become roadblocks that today’s reformers must work around? Or is the answer somehow “yes” to both of those questions?

Reinventing Government presented dozens of examples of “entrepreneurial” problem-solving, organized into 10 chapters. Each chapter illustrated a theme, such as results-oriented government or enterprising government. This structure — concrete examples grouped around larger themes — reflected the distinctive sensibilities of each author. Gaebler, as a city manager, had made a name for himself by treating constraints such as funding shortfalls or bureaucratic rules as opportunities. His was a bottom-up, let-a-hundred-flowers-bloom sensibility. He wanted his fellow managers to create cultures where risks could be taken and initiative could be rewarded.

Osborne, a journalist, was more of a systematizer, drawn to sweeping ideas. In his previous book, Laboratories of Democracy, he had profiled six governors who he believed were developing new approaches for delivering services that constituted a “third way” between big government liberalism and anti-government conservatism. Reinventing Government suggested how that would work in practice. It also offered readers a daring and novel vision of what government’s core mission should be. Government, the book argued, should focus less on operating programs and more on overseeing them. Instead of “rowing” (stressing administrative detail), senior public officials should do more “steering” (concentrating on overall strategy). They should contract out more, embrace competition and insist on accountability. This aspect of Osborne’s thinking became more pronounced as time went by.

“Today we are well beyond the experimental approach,” Osborne and Peter Hutchinson, a former Minnesota finance commissioner, wrote in their 2004 book, The Price of Government: Getting the Results We Need in an Age of Permanent Fiscal Crisis. A decade of experience had produced a proven set of strategies, the book continued. The foremost should be to turn the budget process “on its head, so that it starts with the results we demand and the price we are willing to pay rather than the programs we have and the costs they incur.” In other words, performance-based budgeting. Then, they continued, “we must cut government down to its most effective size and shape, through strategic reviews, consolidation and reorganization.”

Assessing the influence and efficacy of these ideas is difficult. According to the U.S. Census, the United States has 90,106 state and local governments. Tens of thousands of public employees read Reinventing Government and the books that followed. Surveys have shown that the use of performance measurement systems is widespread across state, county and municipal government. Yet only a handful of studies have sought to evaluate systematically the impact of Reinventing Government’s core ideas. Most have focused on just one, the idea highlighted in The Price of Government: budgeting for outcomes.

To evaluate the reinventing government movement primarily by assessing performance-based budgeting might seem a bit narrow. But paying close attention to the budgeting process is the key to understanding the impact of the entire enterprise. It reveals the difficulty of sustaining even successful innovations….

“Reinventing government was relatively blind to the role of legislatures in general,” says University of Maryland public policy professor and Governing columnist Donald F. Kettl. “There was this sense that the real problem was that good people were trapped in a bad system and that freeing administrators to do what they knew how to do best would yield vast improvements. What was not part of the debate was the role that legislatures might have played in creating those constraints to begin with.”

Over time, a pattern emerged. During periods of crisis, chief executives were able to implement performance-based budgeting. Often, it worked. But eventually legislatures pushed back….

There was another problem. Measuring results and insisting on accountability were supposed to spur creative problem-solving. But in practice, says Blauer, “whenever the budget was invoked in performance conversations, it automatically chilled innovative thinking; it chilled engagement.” Agencies got defensive. Rather than focusing on solving hard problems, they focused on justifying past performance….

The fact that reinventing government never sparked a revolution puzzles Gaebler to this day. “Why didn’t more of my colleagues pick it up and run with it?” he asks. He thinks the answer may be that many public managers were simply too risk-averse….(More)”.

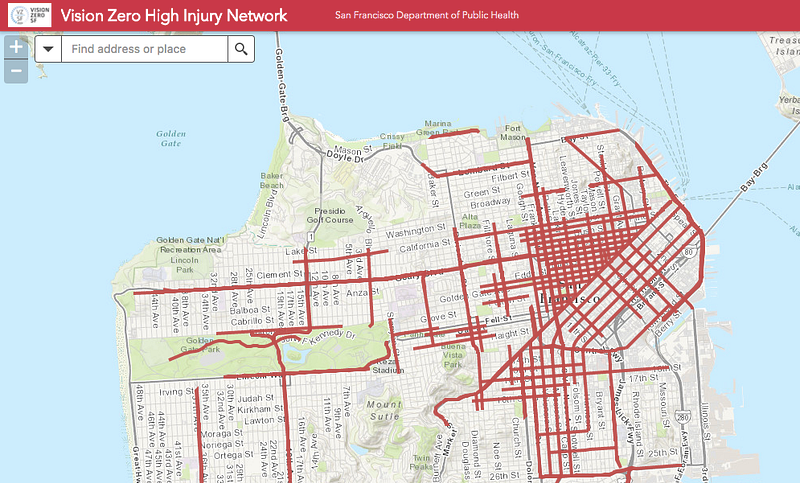

Abhi Nemani at Medium: “…Auto collisions with bikes (and also pedestrians) pose a real threat to the safety and wellbeing of residents. But beyond causing temporary injuries, auto collisions with bikes and pedestrians can kill people, and they do so at an alarming rate. According to the city, “Every year in San Francisco, about 30 people lose their lives and over 200 more are seriously injured while traveling on city streets.”…

Problem -> Data Analysis

The city government, in good fashion, made a commitment to do something about it. But in better fashion, they decided to do so in a data-driven way. They tasked the Department of Public Health, in collaboration with the Department of Transportation, with developing policy. What’s impressive is that instead of some blanket policy or mandate, they opted to study the problem, take a nuanced approach, and put data first.

The SF team ran a series of data-driven analyses to determine the causes of these collisions. They developed TransBase to continuously map and visualize traffic incidents throughout the city. Using this platform, they then developed the “high injury network”: the key places where most problems happen. As they put it, the aim was “to identify where the most investments in engineering, education and enforcement should be focused to have the biggest impact in reducing fatalities and severe injuries.” It turns out that just 12 percent of intersections result in 70 percent of major injuries. This is using data to make what might seem like an intractable problem tractable….
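At its core, a “high injury network” rests on a concentration calculation: rank locations by severe injuries, then keep the smallest set that together covers a target share of the total. The following is a minimal Python sketch under stated assumptions: the function name `high_injury_network`, the intersection IDs and the injury counts are all hypothetical, and this is not TransBase’s actual method. Only the 70 percent default comes from the figure above.

```python
from typing import Dict, List


def high_injury_network(injuries: Dict[str, int], target_share: float = 0.70) -> List[str]:
    """Return the fewest locations that together account for at least
    `target_share` of all severe injuries.

    Hypothetical helper: taking the highest counts first is what keeps
    the resulting set as small as possible.
    """
    total = sum(injuries.values())
    if total == 0:
        return []
    # Rank locations from most to fewest severe injuries.
    ranked = sorted(injuries.items(), key=lambda item: item[1], reverse=True)
    selected: List[str] = []
    covered = 0
    for location, count in ranked:
        if covered >= target_share * total:
            break  # target share already reached
        selected.append(location)
        covered += count
    return selected


# Made-up counts for eight intersections (not San Francisco data).
sample = {"A": 40, "B": 25, "C": 10, "D": 8, "E": 7, "F": 5, "G": 3, "H": 2}
network = high_injury_network(sample)
share_of_locations = len(network) / len(sample)
print(network, f"{share_of_locations:.1%} of intersections")
```

With these invented counts, three of the eight intersections account for 75 of the 100 injuries. Running a calculation of this kind over real citywide data is one way to arrive at a concentration figure like the one cited above.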

Data Analysis -> Policy

So now what? Well, this month, Mayor Ed Lee signed an executive directive to challenge the city to implement these findings under the banner of “Vision Zero”: a goal of reducing auto/pedestrian/bike collision deaths to zero by 2024….

Fortunately, San Francisco took the next step: they put their data to work.

Policy -> Implementation

This week, the city of San Francisco announced plans to build its first “Protected Intersection”:

“Protected intersections use a simple design concept to make everyone safer. Under this configuration, features like concrete islands placed at the corners slow turning cars and physically separate people biking and driving. They also position turning drivers at an angle that makes it easier for them to see and yield to people walking and biking crossing their path.”

That’s apparently just the start: plans are underway for other intersections, protected bike lanes, and more. Biking and walking in San Francisco are about to become much safer. (Though maybe not easier: the hills, they’re the worst.)

***

There is ample talk of “Data-Driven Policy” — indeed, I’ve written about it myself — but too often we get lost in the abstract or theoretical….(More)”

Michael McHugh at Federal Times: “According to Gartner, “Design thinking is a multidisciplinary process that builds solutions for complex, intractable problems in a technically feasible, commercially sustainable and emotionally meaningful way.”

Design thinking as an approach puts the focus on people — their likes, dislikes, desires and experience — for designing new services and products. It encourages a free flow of ideas within a team to build and test prototypes by setting a high tolerance for failure. The approach is more holistic, as it considers both human and technological aspects to cater to mission-critical needs. Due to its innovative and agile problem-solving technique, design thinking inspires teams to collaborate and contribute towards driving mission goals.

How Can Design Thinking Help Agencies?

Whether it is problem solving, streamlining a process or increasing the adoption rate of a new service, design thinking calls for agencies to be empathetic towards people’s needs while being open to continuous learning and a willingness to fail — fast. A fail-fast model enables agencies to detect errors during the course of finding a solution, in which they learn from the possible mistakes and then proceed to develop a more suitable solution that is likely to add value to the user.

Consider an example of a federal agency whose legacy inspection application was affecting the productivity of its inspectors. By leveraging an agile approach, the agency built a mobile inspection solution to streamline and automate the inspection process. The methodology involved multiple iterations based on observations and findings from inspector actions. Here is a step-by-step synopsis of this methodology:

- Problem presentation: Identifying the problems faced by inspectors.

- Empathize with users: Understanding the needs and challenges of inspectors.

- Define the problem: Redefining the problem based on input from inspectors.

- Team collaboration: Brainstorming and discussing multiple solutions.

- Prototype creation: Determining and building viable design solutions.

- Testing with constituents: Releasing the prototype and testing it with inspectors.

- Collection of feedback: Incorporating feedback from pilot testing and making required changes.

The insights drawn from each step helped the agency to design a secure platform in the form of a mobile inspection tool, optimized for tablets with a smartphone companion app for enhanced mobility. Packed with features like rich media capture with video, speech-to-text and photographs, the mobile inspection tool dramatically reduces manual labor and speeds up the on-site inspection process. It delivers significant efficiencies by improving processes, increasing productivity and enhancing the visibility of information. Additionally, its integration with legacy systems helps leverage existing investments, therefore justifying the innovation, which is based on a tightly defined test and learn cycle….(More)”

This political season, citizens will be determining who will represent them in the government. This, of course, includes deciding who will be the next president, but also who will serve in thousands of less prominent positions.

But is voting the only job of a citizen? And if there are others, what are they? Who decides who will do the other jobs – and how they should be done?

The concept of “civic intelligence” tries to address such questions.

I’ve been researching and teaching the concept of “civic intelligence” for over 15 years. Civic intelligence can help us understand how decisions in democratic societies are made now and, more importantly, how they could be made in the future.

For example, my students and I used civic intelligence as the focus for comparing colleges and universities. We wanted to see how well schools helped educate their students for civic engagement and social innovation and how well the schools themselves supported this work within the broader community.

My students also practiced civic intelligence, as the best way of learning it is through “real world” projects such as developing a community garden at a high school for incarcerated youth….

The term “civic intelligence” was first used in English in 1898 by the American clergyman Josiah Strong in his book “The Twentieth Century City,” when he wrote of a “dawning social self-consciousness.”

Untold numbers of people have been thinking and practicing civic intelligence without using the term. …There are more contemporary approaches as well. These include:

- Sociologist Xavier de Souza Briggs’ research on how people from around the world have integrated the efforts of civil society, grassroots organizations and government to create sustainable communities.

- Through a slightly different lens, researcher Jason Corburn has examined how “ordinary” people in economically underprivileged neighborhoods have used “Street Science” to understand and reduce disease and environmental degradation in their communities.

- Elinor Ostrom, awarded the Nobel Prize in economics in 2009, studied how groups of people from various times and places managed resources such as fishing grounds, woodlots and pastures by working together to preserve the sources of their livelihoods for future generations.

Making use of civic intelligence

Civic intelligence is generally an attribute of groups. It’s a collective capability to think and work together.

Advocates and practitioners of civic intelligence (as well as many others) note that the risks of the 21st century, which include climate change, environmental destruction and overpopulation, are quantitatively and qualitatively unlike the risks of prior times. They hypothesize that these risks are unlikely to be addressed satisfactorily by government and other leaders without substantial citizen engagement….

At a basic level, “governance” happens when neighborhood groups, nonprofit organizations or a few friends come together to help address a shared concern.

Their work can take many forms, including writing, developing websites, organizing events or demonstrations, petitioning, starting organizations and, even, performing tasks that are usually thought of as “jobs for the government.”

And sometimes “governance” could even mean breaking some rules, possibly leading to far-reaching reforms. For example, without civil disobedience, the U.S. might still be a British colony. And African-Americans might still be forced to ride in the back of the bus.

As a discipline, civic intelligence provides a broad focus that incorporates ideas and findings from many fields of study. It involves people from all walks of life, different cultures and circumstances.

A focus on civic intelligence could lead directly to social engagement. I believe understanding civic intelligence could help address the challenges we must face today and tomorrow….(More)”

New report by Beth Simone Noveck and Stefaan Verhulst: “…With rates of trust in government at an all-time low, technology and innovation will be essential to achieve the next administration’s goals and to deliver services more effectively and efficiently. The next administration must prioritize using technology to improve governing and must develop plans to do so in the transition… This paper provides analysis and a set of concrete recommendations, both for the period of transition before the inauguration, and for the start of the next presidency, to encourage and sustain innovation in government. Leveraging the insights from the experts who participated in a day-long discussion, we endeavor to explain how government can improve its use of digital technologies to create more effective policies, solve problems faster and deliver services more effectively at the federal, state and local levels….

The broad recommendations are:

- Scale Data-Driven Governance: Platforms such as data.gov represent initial steps toward enabling data-driven governance. Much more can be done, however, to open up data and for agencies to become better consumers of data, to improve decision-making and scale up evidence-based governance. This includes better use of predictive analytics, more public engagement, and greater use of cutting-edge methods like machine learning.

- Scale Collaborative Innovation: Collaborative innovation takes place when government and the public work together, thus widening the pool of expertise and knowledge brought to bear on public problems. The next administration can reach out more effectively, not just to the public at large but also through targeted outreach to the public officials and citizens who possess the most relevant skills or expertise for the problems at hand.

- Promote a Culture of Innovation: Institutionalizing a culture of technology-enabled innovation will require embedding innovation and technology skills more widely across the federal enterprise. For example, contracting, grants and personnel officials need to have a deeper understanding of how technology can help them do their jobs more efficiently, and more people need to be trained in human-centered design, gamification, data science, data visualization, crowdsourcing and other new ways of working.

- Utilize Evidence-Based Innovation: In order to better direct government investments, leaders need a much better sense of what works and what doesn’t. The government spends billions on research in the private and university sectors, but very little on experimenting with, testing, and evaluating its own programs. The next administration should continue developing an evidence-based approach to governance, including a greater use of methods like A/B testing (a method of comparing two versions of a webpage or app against each other to determine which one performs better); establishing a clearinghouse for success and failure stories and best practices; and encouraging overseers to be more open to innovation.
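In an A/B test, two versions are shown to comparable groups of users, and the next step is to judge whether the gap in outcomes is larger than chance would produce. A common tool for that judgment is the two-proportion z-test. The report does not prescribe a specific statistical test, so the following is only a minimal Python sketch under stated assumptions: the function name and the numbers (1,000 users per version, with form completion as the success measure) are hypothetical.

```python
import math
from typing import Tuple


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> Tuple[float, float]:
    """Compare the success rates of versions A and B.

    Returns (z, two-sided p-value) using the pooled-variance normal
    approximation, which assumes reasonably large samples.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate under the null hypothesis that A and B perform equally.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical: 200 of 1,000 users finish the old form, 250 of 1,000 the new one.
z, p = two_proportion_z_test(200, 1000, 250, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

If the p-value is small, the new version's higher completion rate is unlikely to be explained by chance alone. In practice, the sample size and the success metric are normally fixed before the test begins.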

- Make Innovation a Priority in the Transition: The transition period represents a unique opportunity to seed the foundations for long-lasting change. By explicitly incorporating innovation into the structure, goals and activities of the transition teams, the next administration can get a fast start in implementing policy goals and improving government operations through innovative approaches….(More)”

Mohana Ravindranath at NextGov: “Since May, the White House has been exploring the use of artificial intelligence and machine learning for the public: that is, how the federal government should be investing in the technology to improve its own operations. The technologies, often modeled after the way humans take in, store and use new information, could help researchers find patterns in genetic data or help judges decide sentences for criminals based on their likelihood of ending up in prison again, among other applications. …

Here’s a look at how some federal groups are thinking about the technology:

- Police data: At a recent White House workshop, Office of Science and Technology Policy Senior Adviser Lynn Overmann said artificial intelligence could help police departments comb through hundreds of thousands of hours of body-worn camera footage, potentially identifying the police officers who are good at de-escalating situations. It also could help cities determine which individuals are likely to end up in jail or prison so that officials could rethink programs. For example, if there’s a large overlap between substance abuse and jail time, public health organizations might decide to focus their efforts on helping people reduce their substance abuse to keep them out of jail.

- Explainable artificial intelligence: The Pentagon’s research and development agency is looking for technology that can explain to analysts how it makes decisions. If people can’t understand how a system works, they’re not likely to use it, according to a broad agency announcement from the Defense Advanced Research Projects Agency. Intelligence analysts who might rely on a computer for recommendations on investigative leads must “understand why the algorithm has recommended certain activity,” as do employees overseeing autonomous drone missions.

- Weather detection: The Coast Guard recently posted its intent to sole-source a contract for technology that could autonomously gather information about traffic, crosswind, and aircraft emergencies. That technology has built-in artificial intelligence so it can “provide only operational relevant information.”

- Cybersecurity: The Air Force wants to make cyber defense operations as autonomous as possible, and is looking at artificial intelligence that could potentially identify or block attempts to compromise a system, among others.

While there are endless applications in government, computers won’t completely replace federal employees anytime soon….(More)”

For years, science-fiction moviemakers have been making us fear the bad things that artificially intelligent machines might do to their human creators. But for the next decade or two, our biggest concern is more likely to be that robots will take away our jobs or bump into us on the highway.

Now five of the world’s largest tech companies are trying to create a standard of ethics around the creation of artificial intelligence. While science fiction has focused on the existential threat of A.I. to humans, researchers at Google’s parent company, Alphabet, and those from Amazon, Facebook, IBM and Microsoft have been meeting to discuss more tangible issues, such as the impact of A.I. on jobs, transportation and even warfare.

Tech companies have long overpromised what artificially intelligent machines can do. In recent years, however, the A.I. field has made rapid advances in a range of areas, from self-driving cars and machines that understand speech, like Amazon’s Echo device, to a new generation of weapons systems that threaten to automate combat.

The specifics of what the industry group will do or say, even its name, have yet to be hashed out. But the basic intention is clear: to ensure that A.I. research is focused on benefiting people, not hurting them, according to four people involved in the creation of the industry partnership who are not authorized to speak about it publicly.

The importance of the industry effort is underscored in a report issued on Thursday by a Stanford University group funded by Eric Horvitz, a Microsoft researcher who is one of the executives in the industry discussions. The Stanford project, called the One Hundred Year Study on Artificial Intelligence, lays out a plan to produce a detailed report on the impact of A.I. on society every five years for the next century….The Stanford report attempts to define the issues that citizens of a typical North American city will face from computers and robotic systems that mimic human capabilities. The authors explore eight aspects of modern life, including health care, education, entertainment and employment, but specifically do not look at the issue of warfare….(More)”

Karim Tadjeddine and Martin Lundqvist of McKinsey: “Like companies in the private sector, governments from national to local can smooth the process of digital transformation—and improve services to their “customers,” the public—by adhering to certain core principles. Here’s a road map.

By virtue of their sheer size, visibility, and economic clout, national, state or provincial, and local governments are central to any societal transformation effort, in particular a digital transformation. Governments at all levels, which account for 30 to 50 percent of most countries’ GDP, exert profound influence not only by executing their own digital transformations but also by catalyzing digital transformations in other societal sectors (Exhibit 1).

The tremendous impact that digital services have had on governments and society has been the subject of extensive research that has documented the rapid, extensive adoption of public-sector digital services around the globe. We believe that the coming data revolution will be even more deeply transformational and that data enablement will produce a radical shift in the public sector’s quality of service, empowering governments to deliver better constituent service, better policy outcomes, and more-productive operations….(More)”