Stefaan Verhulst

Grace Dobush in Quartz: “Taking a bus in Latin America can be a disorienting experience. While the light rail systems in places like Mexico City, Buenos Aires, and Rio de Janeiro have system maps that are fairly easy to understand, most cities lack a comprehensive bus map.

Several factors have kept bus maps from taking root in much of Latin America. One reason is that bus lines running through cities tend to be operated by a collection of private companies, so they have less incentive to coordinate. Newspapers and local governments occasionally attempt to create system maps, but none have stuck.

In Managua, Nicaragua, this absence has had a constricting effect on residents’ lives. Most people know the bus lines that take them to school or to work, but don’t stray far from their usual route. “Mobility in Managua is very difficult,” says Felix Delattre, a German programmer and amateur cartographer who moved to Nicaragua 10 years ago to work for an NGO. “People don’t go out at night because they don’t have the information on when the bus is coming and where it’s going.”

Delattre led a group of volunteers who teamed up with university students in Managua to create what they believe to be the first comprehensive bus map in Latin America. The mapping venture is an independent project connected to the Humanitarian OpenStreetMap Team, a nonprofit group that uses open-source technology and crowdsourcing to create badly needed maps around the world. The team spent almost two years mapping out Managua and Ciudad Sandino and recording their 46 bus routes….(More)

Lena Groeger at ProPublica: “We’ve seen how design can keep us away from harm and save our lives. But there is a more subtle way that design influences our daily decisions and behavior – whether we know it or not. It’s not sexy or trendy or flashy in any way. I’m talking about defaults.

Defaults are the settings that come out of the box, the selections you make on your computer by hitting enter, the assumptions that people make unless you object, the options easily available to you because you haven’t changed them.

They might not seem like much, but defaults (and their designers) hold immense power – they make decisions for us that we’re not even aware of making. Consider the fact that most people never change the factory settings on their computer, the default ringtone on their phones, or the default temperature in their fridge. Someone, somewhere, decided what those defaults should be – and it probably wasn’t you.

Another example: In the U.S. when you register for your driver’s license, you’re asked whether or not you’d like to be an organ donor. We operate on an opt-in basis: that is, the default is that you are not an organ donor. If you want to donate your organs, you need to actively check a box on the DMV questionnaire. Only about 40 percent of the population is signed up to be an organ donor.

In other countries such as Spain, Portugal and Austria, the default is that you’re an organ donor unless you explicitly choose not to be. And in many of those countries over 99 percent of the population is registered. A recent study found that countries with opt-out or “presumed consent” policies don’t just have more people who sign up to be donors, they also have consistently higher numbers of transplants.

Of course, there are plenty of other factors that influence the success of organ donation systems, but the opt-in versus opt-out choice seems to have a real effect on our collective behavior. An effect that could potentially make the difference between someone getting a life-saving transplant or not.

Behavioral economist Richard Thaler and legal scholar Cass Sunstein pretty much wrote the book on the implications of defaults on human behavior. Nudge: Improving Decisions About Health, Wealth, and Happiness is full of ways in which default options can steer human choices, even if we have no idea it’s happening. Besides organ donation, the list of potential “nudges” includes everything from changing the order of menu items to encourage people to pick certain dishes to changing the default temperature of office thermostats to save on energy.

But my favorite has to do with getting kids to eat their vegetables.

What if I told you there was one simple change you could make in a school cafeteria to get children to eat more salad? It doesn’t cost anything, doesn’t force anyone to eat anything they don’t want, and takes only a few minutes to fix. And it happened in real life: a middle school in New York moved its salad bar away from its default location against a wall and put it smack in the middle of the room (and prominently in front of the two cash registers). Salad sales more than tripled….(More)”

Rose Eveleth at Motherboard: “For centuries judges have had to make guesses about the people in front of them. Will this person commit a crime again? Or is this punishment enough to deter them? Do they have the support they need at home to stay safe and healthy and away from crime? Or will they be thrust back into a situation that drives them to their old ways? Ultimately, judges have to guess.

But recently, judges in states including California and Florida have been given a new piece of information to aid in that guesswork: a “risk assessment score” determined by an algorithm. These algorithms take a whole suite of variables into account, and spit out a number (usually between 1 and 10) that estimates the risk that the person in question will wind up back in jail.

If you’ve read this column before, you probably know where this is going. Algorithms aren’t unbiased, and a recent ProPublica investigation suggests what researchers have long been worried about: that these algorithms might contain latent racial prejudice. According to ProPublica’s evaluation of a particular scoring method called the COMPAS system, which was created by a company called Northpointe, people of color are more likely to get higher scores than white people for essentially the same crimes.

Bias against folks of color isn’t a new phenomenon in the judicial system. (This might be the understatement of the year.) There’s a huge body of research that shows that judges, like all humans, are biased. Plenty of studies have shown that for the same crime, judges are more likely to sentence a black person more harshly than a white person. It’s important to question biases of all kinds, both human and algorithmic, but it’s also important to question them in relation to one another. And nobody has done that.

I’ve been doing some research of my own into these recidivism algorithms, and when I read the ProPublica story, I came out with the same question I’ve had since I started looking into this: these algorithms are likely biased against people of color. But so are judges. So how do they compare? How does the bias present in humans stack up against the bias programmed into algorithms?

This shouldn’t be hard to find out: ideally you would divide judges in a single county in half, and give one half access to a scoring system, and have the other half carry on as usual. If you don’t want to A/B test within a county—and there are some questions about whether that’s an ethical thing to do—then simply compare two counties with similar crime rates, in which one county uses rating systems and the other doesn’t. In either case, it’s essential to test whether these algorithmic recidivism scores exacerbate, reduce, or otherwise change existing bias.
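The within-county A/B design described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a method from the column or from any published study: the `Sentence` record, its fields, and all function names are hypothetical, and comparing raw mean sentence lengths ignores offense severity and criminal history, which a real analysis would have to control for before claiming defendants committed "essentially the same crimes".

```python
import random
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sentence:
    # Hypothetical record of one sentencing decision.
    judge_id: str
    defendant_race: str  # e.g. "black" or "white"
    months: float        # sentence length in months


def assign_judges(judge_ids, seed=0):
    """Randomly split judges into a 'scores' arm and a 'control' arm.

    With an odd number of judges, the control arm gets the extra judge.
    """
    rng = random.Random(seed)
    shuffled = list(judge_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"scores": set(shuffled[:half]), "control": set(shuffled[half:])}


def racial_gap(sentences, judges, group_a="black", group_b="white"):
    """Mean sentence for group_a minus mean sentence for group_b, among the given judges.

    Raises statistics.StatisticsError if either group has no sentences.
    """
    a = [s.months for s in sentences if s.judge_id in judges and s.defendant_race == group_a]
    b = [s.months for s in sentences if s.judge_id in judges and s.defendant_race == group_b]
    return mean(a) - mean(b)


def change_in_gap(sentences, arms):
    """Positive: the gap is wider among judges who saw scores; negative: narrower."""
    return racial_gap(sentences, arms["scores"]) - racial_gap(sentences, arms["control"])
```

A positive `change_in_gap` would suggest the scores widen the sentencing gap between the two groups; a negative value would suggest they narrow it. The county-versus-county comparison works the same way, with the two counties standing in for the two arms, though without random assignment it is more exposed to confounding.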

Most of the stories I’ve read about these sentencing algorithms don’t mention any such studies. But I assumed that they existed and had simply not made the cut in editing.

I was wrong. As far as I can find, and according to everybody I’ve talked to in the field, nobody has done this work, or anything like it. These scores are being used by judges to help them sentence defendants and nobody knows whether the scores exacerbate existing racial bias or not….(More)”

Bradley J. Fikes in the San Diego Union Tribune: “The modern era’s dramatic advances in medical care rely on more than scientists, doctors and biomedical companies. None of it could come to fruition without patients willing to risk trying experimental therapies to see if they are safe and effective.

More than 220,000 clinical trials are taking place worldwide, with more than 81,000 of them in the United States, according to the federal government’s registry, clinicaltrials.gov. That poses a huge challenge for recruitment.

Companies are offering a variety of inducements to coax patients into taking part. Some rely on that good old standby, cash. Others remove obstacles. Axovant Sciences, which is preparing to test an Alzheimer’s drug, is offering patients transportation from the ridesharing service Lyft.

In addition, non-cash rewards such as iPads, opt-out enrollment in low-risk trials or even guaranteeing patients they will be informed about the clinical trial results should be considered, say a group of researchers who suggest testing these incentives scientifically.

In an article published Wednesday in Science Translational Medicine, the researchers present a matrix of these options, their benefits, and potential drawbacks. They urge companies to track the outcomes of these incentives to find out what works best.

The goal, the article states, is to “nudge” patients into participating, but not so far as to turn the nudge into a coercive shove. Go to j.mp/nudgeclin for the article.

For a nudge, the researchers suggest the wording of a consent form could include options such as a choice of preferred appointment times, such as “Yes, morning appointments,” with a number of similarly worded statements. That wording would “imply that enrollment is normative,” or customary, the article stated.

Researchers could go so far as to vary the offers to patients in a single clinical trial and measure which incentives produce the best responses, said Eric M. Van Epps, one of the researchers. In effect, that would provide a clinical trial of clinical trial incentives.
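In its simplest form, such a "clinical trial of clinical trial incentives" could look like the sketch below: patients are randomly allocated to incentive arms and enrollment rates are compared across arms. The arm names are hypothetical labels loosely based on incentives mentioned in the article (cash, Lyft rides, a promise to share results), and the allocation scheme is an assumption rather than the researchers' protocol; a real study would also need to test whether differences between arms are statistically significant.

```python
import random
from collections import defaultdict

# Hypothetical incentive arms, loosely based on options mentioned in the article.
ARMS = ["cash", "ride_share", "results_promised", "control"]


def assign_arms(patient_ids, arms=ARMS, seed=0):
    """Shuffle patients, then deal them round-robin into arms.

    This keeps arm sizes within one of each other while the order stays random.
    """
    rng = random.Random(seed)
    shuffled = list(patient_ids)
    rng.shuffle(shuffled)
    return {pid: arms[i % len(arms)] for i, pid in enumerate(shuffled)}


def enrollment_rates(assignment, enrolled):
    """Share of patients offered each incentive who went on to enroll."""
    offered = defaultdict(int)
    joined = defaultdict(int)
    for pid, arm in assignment.items():
        offered[arm] += 1
        if pid in enrolled:
            joined[arm] += 1
    return {arm: joined[arm] / offered[arm] for arm in offered}
```

Dealing shuffled patients round-robin is one simple way to keep arms balanced; stratifying by site or diagnosis would be a natural refinement if those factors also affect willingness to enroll.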

As part of that tracking, companies need to gain insight into why some patients are reluctant to take part, and those reasons vary, said Van Epps, of the Michael J. Crescenz Veterans Affairs Medical Center in Philadelphia.

“Sometimes they’re not made aware of the clinical trials, they might not understand how clinical trials work, they might want more control over their medication regimen or how they’re going to proceed,” Van Epps said.

At other times, patients may be overwhelmed by the volume of paperwork required. Some paperwork is necessary for legal and ethical reasons. Patients must be informed about the trial’s purpose, how it might help them, and what harm might happen. However, it could be possible to simplify the informed consent paperwork to make it more understandable and less intimidating….(More)”

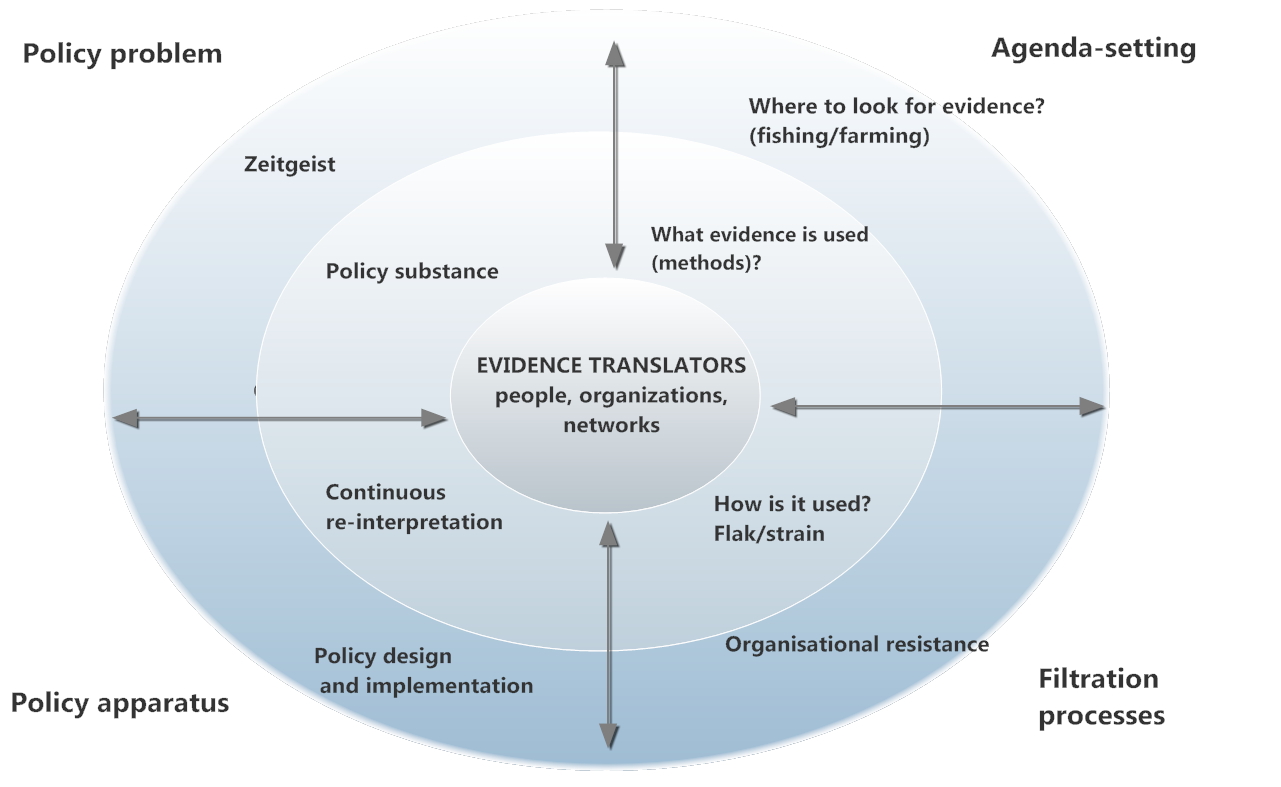

Jo Ingold and Mark Monaghan at the LSE Politics and Policy Blog: “It’s fair to say that research has never monopolised the policy process to the extent that policies are formulated solely, or even primarily, upon evidence. At the same time, research is never entirely absent from the process nor is it always exploited to justify a pre-existing policy stance as those who pronounce that we are now in an era of policy based evidence would have us believe. Often the reality lies somewhere in the middle. A number of studies have looked at how evidence may or may not have impacted on the policy decision-making process. Learning from other contexts, or ‘policy transfer’, is one other way of harnessing particular kinds of evidence, focusing on the migration of policies from one jurisdiction to another, whether within or across countries. Studies have begun to move away from theories of direct transfer to consider the processes involved in movement of ideas from one area to another. In effect, they consider the ‘translation’ of evidence and policy.

Our research brings together the evidence-based policymaking and ‘policy as translation’ literatures to try to shed light on the process by which evidence is used in policymaking. Although these literatures have developed separately (and to a large extent remain so) we see both as, at root, being concerned with the same issues, in particular how ideas, evidence and knowledge are integrated into the policymaking process. With EBPM there is a stated desire to formulate policies based on the best available evidence, while ‘policy as translation’ focuses on the ‘travel of ideas’ and views the policy process as fluid, dynamic and continually re-constituting, rather than a linear or rational ‘transfer’ process….

The Evidence Translation Model is intended to be recursive and includes five key dimensions which influence how evidence, ideas and knowledge are used in policy:

- The substantive nature of the policy problem in the context of the Zeitgeist

- Agenda-setting – where evidence is sought (fishing/farming) and what evidence is used

- The filtration processes which shape and mould how evidence is used (flak/strain)

- The policy apparatus for policy design and implementation

- The role of ‘evidence translators’

Evidence Translation Model

Source: Policy & Politics 2016 (44:2)

Our research draws attention to what is perceived to be evidence and at what stage of the policymaking process it is used….(More; See also authors’ article in Policy & Politics)”.

Tom Symons at NESTA: “How data can help councils provide more personalised, effective and efficient services.

Key findings

- After years of hype and little delivery, councils are now using big and small data in a range of innovative ways to improve decision making and inform public service transformation.

- Emerging trends in the use of data include predictive algorithms, mass data integration across the public sector, open data and smart cities.

- We identify seven ways in which councils can get more value from the data they hold.

What does the data revolution mean for councils and local public services? Local government collects huge amounts of data, about everything from waste collection to procurement processes to care services for some of the most vulnerable people in society. By using council data better, is there potential to make these services more personalised, more effective and more efficient?

Nesta’s Local Datavores research programme aims to answer this question. Where, how and to what extent can better data use help councils to achieve their strategic objectives? This report is the first in a series, aimed primarily at helping local public sector staff, from senior commissioners through to frontline professionals, get more value from the data they hold….(More)”

Daniel E. O’Leary at IEEE Intelligent Systems: “This article investigates some issues associated with big data and analytics (big data) and ethics, examining how big data ethics are different than computer ethics and other more general ethical frameworks. In so doing, the author briefly investigates some of the previous research in “computer ethics,” reviews some codes of ethics, analyzes the application of two frameworks on ethics to big data, and provides a definition of big data ethics. Ultimately, the author argues that in order to get sufficient specificity, a code of ethics for big data is necessary….(More)”

Putri, D.A., CH Karlina, M., Tanaya, J., at the Centre for Innovation Policy and Governance, Indonesia: “In 2011, Indonesia started its Open Government journey when, along with seven other countries, it initiated the Open Government Partnership. Following the global declaration, Indonesia launched Open Government Indonesia (OGI) in January 2012 with the aim of introducing open government reforms, including open data. This initiative is supported by Law No. 14/2008 on Freedom of Information. Despite its early stage, the implementation of Open Government in Indonesia has shown promising developments, with three action plans enacted in the last four years. In the Southeast Asian region, Indonesia could be considered a pioneer in implementing the open data initiative at national as well as sub-national levels. In some cases, the open data initiative at the sub-national level has even surpassed the progress at the national level. Jakarta, for example, became the first city to have its own gubernatorial bylaw on data and system management, which requires the city administration and agencies to open their public data, leading to the birth of open data initiatives in the city. The city also has Jakarta Smart City, which connects sub-district officials with citizens. Jakarta Smart City is an initiative that promotes openness of the government through public service delivery. This paper aims to take a closer look at the dynamics of citizen-generated data in Jakarta and how the Jakarta Smart City program contributes to the implementation of open data….(More)”

Big City is watching you.

It will do it with camera-equipped drones that inspect municipal powerlines and robotic cars that know where people go. Sensor-laden streetlights will change brightness based on danger levels. Technologists and urban planners are working on a major transformation of urban landscapes over the next few decades.

A White House report published in February identified advances in transportation, energy and manufacturing, among other developments, that will bring on what it termed “a new era of change.”

Much of the change will also come from the private sector, which is moving faster to reach city dwellers, and is more skilled in collecting and responding to data. That is leading cities everywhere to work more closely than ever with private companies, which may have different priorities than the government.

Shared vehicles are not parked as much, and with more automation, they will know where parking spaces are available, eliminating the need to drive in search of a space.

“Office complexes won’t need parking lots with twice the footprint of their buildings,” said Sebastian Thrun, who led Google’s self-driving car project in its early days and now runs Udacity, an online learning company. “When we started on self-driving cars, we talked all the time about cutting the number of cars in a city by a factor of three,” or a two-thirds reduction.

In addition, police, fire, and even library services will seek greater responsiveness, partly by tracking their own assets and partly by looking at things like social media. Later, technologies like three-dimensional printing, new materials, and robotic construction and demolition will be able to reshape skylines in a matter of weeks.

At least that is the plan. So much change afoot creates confusion….

The new techno-optimism is focused on big data and artificial intelligence. “Futurists used to think everyone would have their own plane,” said Erick Guerra, a professor of city and regional planning at the University of Pennsylvania. “We never have a good understanding of how things will actually turn out.”

He recently surveyed the 25 largest metropolitan planning organizations in the country and found that almost none have solid plans for modernizing their infrastructure. That may be the right way to approach the challenges of cities full of robots, but so far most clues are coming from companies that also sell the technology.

The big tech companies say they are not interested in imposing the sweeping “smart city” projects they used to push, in part because things are changing too quickly. But they still want to build big, and they view digital surveillance as an essential component…(More)”

Kent Smetters in The Hill: “Big Data and analytics are driving advancements that touch nearly every part of our lives. From improving disaster relief efforts following a storm, to enhancing patient response to specific medications to criminal justice reform and real-time traffic reporting, Big Data is saving lives, reducing costs and improving productivity across the private and the public sector.

Yet when our elected officials draft policy they lack access to advanced data and analytics that would help them understand the economic implications of proposed legislation. Instead of using Big Data to inform and shape vital policy questions, Members of Congress typically don’t receive a detailed analysis of a bill until after it has been written, and after they have sought support for it. That’s when a policy typically undergoes a detailed budgetary analysis. And even then, these assessments often ignore the broader impact on jobs and the economy.

We must do better. Just as modern marketing firms use deep analytical tools to make smart business decisions, policymakers in Washington should similarly have access to modern tools for analyzing important policy questions.

Will Social Security be solvent for our grandchildren? How will changes to immigration policy influence the number of jobs and the GDP? How will tax reform impact the budget, economic growth and the income distribution? What is the impact of new investments in health care, education and roads? These are big questions that must be answered with reliable data and analysis while legislation is being written, not afterwards. Without such analysis, we are left with ideology-driven partisanship.

Simply put, Washington needs better tools to evaluate these complex factors. Imagine the productive conversations we could have if we applied the kinds of tools that are commonplace in the business world to help Washington make more informed choices.

For example, with the help of a nonpartisan budget model from the Wharton School of the University of Pennsylvania, policymakers and the public can uncover some valuable—and even surprising—information about our choices surrounding Social Security, immigration and other issues.

By analyzing more than 4,000 different Social Security policy options, for example, the model projects that the Social Security Trust Fund will be depleted three years earlier than the Social Security Administration’s projections, barring any changes in current law. The tool’s projected shortfalls are larger than the SSA’s, in fact—because it takes into account how changes over time will affect the outcome. We also learn that many standard policy options fail to significantly move the Trust Fund exhaustion date, as these policies phase in too slowly or are too small. Securing Social Security, we now know, requires a range of policy combinations and potentially larger changes than we may have been considering.

Immigration policy, too, is an area where we could all benefit from greater understanding. The political left argues that legalizing undocumented workers will have a positive impact on jobs and the economy. The political right argues for just the opposite—deportation of undocumented workers—for many of the same reasons. But, it turns out, the numbers don’t offer much support to either side.

On one hand, legalization actually slightly reduces the number of jobs. The reason is simple: legal immigrants have better access to school and college, and they can spend more time looking for the best job match. However, because legal immigrants can gain more skills, the actual impact on GDP from legalization alone is basically a wash.

The other option being discussed, deportation, also reduces jobs, in this case because the number of native-born workers can’t rise enough to absorb the job losses caused by deportation. GDP also declines. Calculations based on 125 different immigration policy combinations show that increasing the total number of legal immigrants, especially those with higher skills, is the most effective policy for increasing employment rates and GDP….(More)”