Stefaan Verhulst

Clinton Nguyen for Tech Insider: “When counselors are helping someone in the midst of an emotional crisis, they must not only know how to talk – they also must be willing to text.

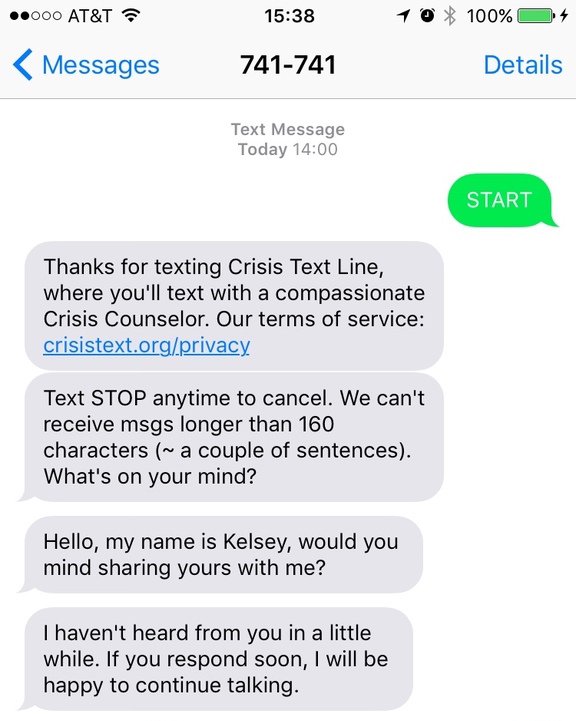

Crisis Text Line, a non-profit text-message-based counseling service, operates a hotline for people who find it safer or easier to text about their problems than make a phone call or send an instant message. Over 1,500 volunteers are on hand 24/7 to lend support about problems including bullying, isolation, suicidal thoughts, bereavement, self-harm, or even just stress.

But in addition to providing a new outlet for those who prefer to communicate by text, the service is gathering a wellspring of anonymized data.

“We look for patterns in historical conversations that end up being higher risk for self harm and suicide attempts,” Liz Eddy, a Crisis Text Line spokesperson, tells Tech Insider. “By grounding in historical data, we can predict the risk of new texters coming in.”

According to Fortune, the organization is using machine learning to prioritize higher-risk individuals for quicker and more effective responses. But Crisis Text Line is also wielding the data it gathers in other ways – the company has published a page of trends that tells the public which hours or days people are more likely to be affected by certain issues, as well as which US states are most affected by specific crises or psychological states.

According to the data, residents of Alaska reach out to the Text Line for LGBTQ issues more than those in other states, and Maine is one of the most stressed out states. Physical abuse is most commonly reported in North Dakota and Wyoming, while depression is more prevalent in texters from Kentucky and West Virginia.

The research comes at an especially critical time. According to studies from the National Center for Health Statistics, US suicide rates have surged to a 30-year high. The study noted a rise in suicide rates for all demographics except black men over the age of 75. Alarmingly, the suicide rate among 10- to 14-year-old girls has tripled since 1999….(More)”

Stephen Goldsmith and Jane Wiseman in Governing: “By using data analytics to make decisions about pretrial detention, local governments could find substantial savings while making their communities safer….

Few areas of local government spending present better opportunities for dramatic savings than those that surround pretrial detention. Cities and counties are wasting more than $3 billion a year, and often inducing crime and job loss, by holding the wrong people while they await trial. The problem: Only 10 percent of jurisdictions use risk data analytics when deciding which defendants should be detained.

As a result, dangerous people are out in our communities, while many who could be safely in the community are behind bars. Vast numbers of people accused of petty offenses spend their pretrial detention time jailed alongside hardened convicts, learning from them how to be better criminals….

In this era of big data, analytics not only can predict and prevent crime but also can discern who should be diverted from jail to treatment for underlying mental health or substance abuse issues. Avoided costs aggregating in the billions could be better spent on detaining high-risk individuals, more mental health and substance abuse treatment, more police officers and other public safety services.

Jurisdictions that do use data to make pretrial decisions have achieved not only lower costs but also greater fairness and lower crime rates. Washington, D.C., releases 85 percent of defendants awaiting trial. Compared to the national average, those released in D.C. are two and a half times more likely to remain arrest-free and one and a half times as likely to show up for court.

Louisville, Ky., implemented risk-based decision-making using a tool developed by the Laura and John Arnold Foundation and now releases 70 percent of defendants before trial. Those released have turned out to be twice as likely to return to court and to stay arrest-free as those in other jurisdictions. Mesa County, Colo., and Allegheny County, Pa., both have achieved significant savings from reduced jail populations due to data-driven release of low-risk defendants.

Data-driven approaches are beginning to produce benefits not only in the area of pretrial detention but throughout the criminal justice process. Dashboards now in use in a handful of jurisdictions allow not only administrators but also the public to see court waiting times by offender type and to identify and address processing bottlenecks….(More)”

Book by Helen Kennedy that “…argues that as social media data mining becomes more and more ordinary, as we post, mine and repeat, new data relations emerge. These new data relations are characterised by a widespread desire for numbers and the troubling consequences of this desire, and also by the possibility of doing good with data and resisting data power, by new and old concerns, and by instability and contradiction. Drawing on action research with public sector organisations, interviews with commercial social insights companies and their clients, focus groups with social media users and other research, Kennedy provides a fascinating and detailed account of living with social media data mining inside the organisations that make up the fabric of everyday life….(More)”

Press Release: “A groundbreaking report published today by ideas42 reveals several innovations that college administrators and policymakers can leverage to significantly improve college graduation rates at a time where completion is more out of reach than ever for millions of students.

The student path through college to graduation day is strewn with subtle, often invisible barriers that, over time, hinder students’ progress and cause some of them to drop out entirely. In Nudging for Success: Using Behavioral Science to Improve the Postsecondary Student Journey, ideas42 focuses on simple, low-cost ways to combat these unintentional obstacles and support student persistence and success at every stage in the college experience, from pre-admission to post-graduation. Teams worked with students, faculty and administrators at colleges around the country.

Even for students whose tuition is covered by financial aid, whose academic preparation is exemplary, and who are able to commit themselves full-time to their education, the subtle logistical and psychological sticking points can have a huge impact on their ability to persist and fully reap the benefits of a higher education.

Less than 60% of full-time students graduate from four-year colleges within six years, and less than 30% graduate from community colleges within three years. There are a myriad of factors often cited as deterrents to finishing school, such as the cost of tuition or the need to juggle family and work obligations, but behavioral science and the results of this report demonstrate that lesser-known dynamics like self-perception are also at play.

From increasing financial aid filing to fostering positive friend groups and a sense of belonging on campus, the 16 behavioral solutions outlined in Nudging for Success represent the potential for significant impact on the student experience and persistence. At Arizona State University, sending behaviorally-designed email reminders to students and parents about the Free Application for Federal Student Aid (FAFSA) priority deadline increased submissions by 72% and led to an increase in grant awards. Freshman retention among low-income, first generation, under-represented or other students most at risk of dropping out increased by 10% at San Francisco State University with the use of a testimonial video, self-affirming exercises, and monthly messaging aimed at first-time students.

“This evidence demonstrates how behavioral science can be the key to uplifting millions of Americans through education,” said Alissa Fishbane, Managing Director at ideas42. “By approaching the completion crisis from the whole experience of students themselves, administrators and policymakers have the opportunity to reduce the number of students who start, but do not finish, college—students who take on the financial burden of tuition but miss out on the substantial benefits of earning a degree.”

The results of this work drive home the importance of examining the college experience from the student perspective and through the lens of human behavior. College administrators and policymakers can replicate these gains at institutions across the country to make it simpler for students to complete the degree they started in ways that are often easier and less expensive to implement than existing alternatives—paving the way to stronger economic futures for millions of Americans….(More)”

Lizzie MacWillie at NextCity: “…By crowdsourcing neighborhood boundaries, residents can put themselves on the map in critical ways.

Why does this matter? Neighborhoods are the smallest organizing element in any city. A strong city is made up of strong neighborhoods, where the residents can effectively advocate for their needs. A neighborhood boundary marks off a particular geography and calls out important elements within that geography: architecture, street fabric, public spaces and natural resources, to name a few. Putting that line on a page lets residents begin to identify needs and set priorities. Without boundaries, there’s no way to know where to start.

Knowing a neighborhood’s boundaries and unique features allows a group to list its assets. What buildings have historic significance? What shops and restaurants exist? It also helps highlight gaps: What’s missing? What does the neighborhood need more of? What is there already too much of? Armed with this detailed inventory, residents can approach a developer, city council member or advocacy group with hard numbers on what they know their neighborhood needs.

With a precisely defined geography, residents living in a food desert can point to developable vacant land that’s ideal for a grocery store. They can also cite how many potential grocery shoppers live within the neighborhood.

In addition to being able to organize within the neighborhood, staking a claim to a neighborhood, putting it on a map and naming it, can help a neighborhood control its own narrative and tell its story — so someone else doesn’t.

Our neighborhood map project was started in part as a response to consistent misidentification of Dallas neighborhoods by local media, which appears to be particularly common in stories about majority-minority neighborhoods. This kind of oversight can contribute to a false narrative about a place, especially when the news is about crime or violence, and takes away from residents’ ability to tell their story and shape their neighborhood’s future. Even worse is when neighborhoods are completely left off of the map, as if they have no story at all to tell.

Cities across the country — including Dallas, Boston, New York, Chicago, Portland and Seattle — have crowdsourced mapping projects people can contribute to. For cities lacking such an effort, tools like Google Map Maker have been effective….(More)”.

Digital Communities Special Report: “With urban areas continuing to grow at a substantial rate — from 30 percent of the world’s population in 1930 to a projected 66 percent by 2050, according to the United Nations — getting the urban experience right has become paramount. To help understand the building blocks to a successful digital city, The Digital Communities Special Report looks at five key technologies — broadband, open data, GIS, CRM and analytics — and provides a window into how they are helping city governments cope with economic, educational and societal demands.

The good news is that these essential technologies are getting cheaper, faster and better all the time. But technologies like these still cost money, need talent to run them and are dependent on the right policies if they are going to succeed. In other words, digital cities need smart thinking in order to work. Part one of this series examines the importance of broadband as a critical infrastructure and the challenges cities face in reaching universal adoption.

Part 1 | Broadband: 21st Century Infrastructure

Part 2 | Open Data & APIs: Collecting and Consuming What Cities Produce

Part 3 | GIS: An Established Technology Finds New Purpose

Part 4 | Customer Relationship Management: Diversity in Service

Part 5 | Analytics: Making Sense of City Data…(More)”

Burak Arikan at Medium: “Big data is the term used to define the perpetual and massive data gathered by corporations and governments on consumers and citizens. When the subject of data is not necessarily individuals but governments and companies themselves, we can call it civic data, and when systematically generated in large amounts, civic big data. Increasingly, a new generation of initiatives are generating and organizing structured data on particular societal issues from human rights violations, to auditing government budgets, from labor crimes to climate justice.

These civic data initiatives diverge from the traditional civil society organizations in their outcomes, that they don’t just publish their research as reports, but also open it to the public as a database. Civic data initiatives are quite different in their data work than international non-governmental organizations such as UN, OECD, World Bank and other similar bodies. Such organizations track social, economical, political conditions of countries and concentrate upon producing general statistical data, whereas civic data initiatives aim to produce actionable data on issues that impact individuals directly. The change in the GDP value of a country is useless for people struggling for free transportation in their city. Incarceration rate of a country does not help the struggle of the imprisoned journalists. Corruption indicators may serve as a parameter in a country’s credit score, but does not help to resolve monopolization created with public procurement. Carbon emission statistics do not prevent the energy deals between corrupt governments that destroy the nature in their region.

Needless to say, civic data initiatives also differ from governmental institutions, which are reluctant to share any more than they are legally obligated to. Many governments in the world simply dump scanned hardcopies of documents on official websites instead of releasing machine-readable data, which prevents systematic auditing of government activities. Civic data initiatives, on the other hand, make it a priority to structure and release their data in formats that are both accessible and queryable.

Civic data initiatives also deviate from general purpose information commons such as Wikipedia. Because they consistently engage with problems, closely watch a particular societal issue, make frequent updates, even record from the field to generate and organize highly granular data about the matter….

Several civic data initiatives generate data on a variety of issues at different geographies, scopes, and scales. The non-exhaustive list below has information on founders, data sources, and financial support. It is sorted according to each initiative’s founding year. Please send your suggestions to contact at graphcommons.com. See more detailed information and updates on the spreadsheet of civic data initiatives.

Open Secrets tracks data about the money flow in the US government, so it becomes more accessible for journalists, researchers, and advocates. Founded as a non-profit in 1983 by Center for Responsive Politics, gets support from a variety of institutions.

PolitiFact is a fact-checking website that rates the accuracy of claims by elected officials and others who speak up in American politics. Uses on-the-record interviews as its data source. Founded in 2007 as a non-profit organization by Tampa Bay Times. Supported by Democracy Fund, Bill & Melinda Gates Foundation, John S. and James L. Knight Foundation, Ford Foundation, Knight Foundation, Craigslist Charitable Fund, and the Collins Center for Public Policy…..

La Fabrique de La loi (The Law Factory) maps issues of local-regional socio-economic development, public investments, and ecology in France. Started in 2014, the project builds a database by tracking bills from government sources, provides a search engine as well as an API. The partners of the project are CEE Sciences Po, médialab Sciences Po, Regards Citoyens, and Density Design.

Mapping Media Freedom identifies threats, violations and limitations faced by members of the press throughout European Union member states, candidates for entry and neighbouring countries. Initiated by Index on Censorship and European Commission in 2004, the project…(More)”

Paper by Jennifer Larson et al for Political Networks Workshops & Conference 2016: “Pinning down the role of social ties in the decision to protest has been notoriously elusive, largely due to data limitations. The era of social media and its global use by protesters offers an unprecedented opportunity to observe real-time social ties and online behavior, though often without an attendant measure of real-world behavior. We collect data on Twitter activity during the 2015 Charlie Hebdo protests in Paris which, unusually, record both real-world protest attendance and high-resolution network structure. We specify a theory of participation in which an individual’s decision depends on her exposure to others’ intentions, and network position determines exposure. Our findings are strong and consistent with this theory, showing that, relative to comparable Twitter users, protesters are significantly more connected to one another via direct, indirect, triadic, and reciprocated ties. These results offer the first large-scale empirical support for the claim that social network structure influences protest participation….(More)”

Anupam Chander in the Michigan Law Review (2017 Forthcoming): “Are we on the verge of an apartheid by algorithm? Will the age of big data lead to decisions that unfairly favor one race over others, or men over women? At the dawn of the Information Age, legal scholars are sounding warnings about the ubiquity of automated algorithms that increasingly govern our lives. In his new book, The Black Box Society: The Hidden Algorithms Behind Money and Information, Frank Pasquale forcefully argues that human beings are increasingly relying on computerized algorithms that make decisions about what information we receive, how much we can borrow, where we go for dinner, or even whom we date. Pasquale’s central claim is that these algorithms will mask invidious discrimination, undermining democracy and worsening inequality. In this review, I rebut this prominent claim. I argue that any fair assessment of algorithms must be made against their alternative. Algorithms are certainly obscure and mysterious, but often no more so than the committees or individuals they replace. The ultimate black box is the human mind. Relying on contemporary theories of unconscious discrimination, I show that the consciously racist or sexist algorithm is less likely than the consciously or unconsciously racist or sexist human decision-maker it replaces. The principal problem of algorithmic discrimination lies elsewhere, in a process I label viral discrimination: algorithms trained or operated on a world pervaded by discriminatory effects are likely to reproduce that discrimination.

I argue that the solution to this problem lies in a kind of algorithmic affirmative action. This would require training algorithms on data that includes diverse communities and continually assessing the results for disparate impacts. Instead of insisting on race or gender neutrality and blindness, this would require decision-makers to approach algorithmic design and assessment in a race and gender conscious manner….(More)”

Cass R. Sunstein and Lucia A. Reisch in the Oxford Research Encyclopedia of Climate Science (Forthcoming): “Careful attention to choice architecture promises to open up new possibilities for reducing greenhouse gas emissions – possibilities that go well beyond, and that may supplement or complement, the standard tools of economic incentives, mandates, and bans. How, for example, do consumers choose between climate-friendly products or services and alternatives that are potentially damaging to the climate but less expensive? The answer may well depend on the default rule. Indeed, climate-friendly default rules may well be a more effective tool for altering outcomes than large economic incentives. The underlying reasons include the power of suggestion; inertia and procrastination; and loss aversion. If well-chosen, climate-friendly defaults are likely to have large effects in reducing the economic and environmental harms associated with various products and activities. In deciding whether to establish climate-friendly defaults, choice architects (subject to legal constraints) should consider both consumer welfare and a wide range of other costs and benefits. Sometimes that assessment will argue strongly in favor of climate-friendly defaults, particularly when both economic and environmental considerations point in their direction. Notably, surveys in the United States and Europe show that majorities in many nations are in favor of climate-friendly defaults….(More)”