Stefaan Verhulst

Blog by Elena Murray, Moiz Shaikh, and Stefaan G. Verhulst: “Young people seeking essential services — whether mental health support, education, or government benefits — often face a critical challenge: they are asked to share their data without having a say in how it is used or for what purpose. While the responsible use of data can help tailor services to better meet their needs and ensure that vulnerable populations are not overlooked, a lack of trust in data collection and usage can have the opposite effect.

When young people feel uncertain or uneasy about how their data is being handled, they may adopt privacy-protective behaviors — choosing not to seek services at all or withholding critical information out of fear of misuse. This risks deepening existing inequalities rather than addressing them.

To build trust, those designing and delivering services must engage young people meaningfully in shaping data practices. Understanding their concerns, expectations, and values is key to aligning data use with their preferences. But how can this be done effectively?

This question was at the heart of a year-long global collaboration through the NextGenData project, which brought together partners worldwide to explore solutions. Today, we are releasing a key deliverable of that project: The Youth Engagement Toolkit for Responsible Data Reuse.

Based on a methodology developed and piloted during the NextGenData project, the Toolkit describes an innovative methodology for engaging young people on responsible data reuse practices, to improve services that matter to them…(More)”.

UN-Habitat: “…The guidelines aim to support national, regional and local governments, as well as relevant stakeholders, in leveraging digital technology for a better quality of life in cities and human settlements, while mitigating the associated risks to achieve global visions of sustainable urban development, in line with the New Urban Agenda, the 2030 Agenda for Sustainable Development and other relevant global agendas.

The aim is to promote a people-centred smart cities approach that is consistent with the purpose and the principles of the Charter of the United Nations, including full respect for international law and the Universal Declaration of Human Rights, to ensure that innovation and digital technologies are used to help cities and human settlements in order to achieve the Sustainable Development Goals and the New Urban Agenda.

The guidelines serve as a reference for Member States to implement people-centred smart city approaches in the preparation and implementation of smart city regulations, plans and strategies to promote equitable access to, and life-long education and training of all people in, the opportunities provided by data, digital infrastructure and digital services in cities and human settlements, and to favour transparency and accountability.

The guidelines recognize local and regional governments (LRGs) as pivotal actors in ensuring closing digital divides and localizing the objectives and principles of these guidelines as well as the Global Digital Compact for an open, safe, sustainable and secure digital future. The guidelines are intended to complement existing global principles on digital development through a specific additional focus on the key role of local and regional governments, and local action, in advancing people-centred smart city development also towards the vision of global digital compact…(More)”.

Article by Margaret Talbot: “If you have spent time on Wikipedia—and especially if you’ve delved at all into the online encyclopedia’s inner workings—you will know that it is, in almost every aspect, the inverse of Trumpism. That’s not a statement about its politics. The thousands of volunteer editors who write, edit, and fact-check the site manage to adhere remarkably well, over all, to one of its core values: the neutral point of view. Like many of Wikipedia’s principles and procedures, the neutral point of view is the subject of a practical but sophisticated epistemological essay posted on Wikipedia. Among other things, the essay explains, N.P.O.V. means not stating opinions as facts, and also, just as important, not stating facts as opinions. (So, for example, the third sentence of the entry titled “Climate change” states, with no equivocation, that “the current rise in global temperatures is driven by human activities, especially fossil fuel burning since the Industrial Revolution.”)…So maybe it should come as no surprise that Elon Musk has lately taken time from his busy schedule of dismantling the federal government, along with many of its sources of reliable information, to attack Wikipedia. On January 21st, after the site updated its page on Musk to include a reference to the much-debated stiff-armed salute he made at a Trump inaugural event, he posted on X that “since legacy media propaganda is considered a ‘valid’ source by Wikipedia, it naturally simply becomes an extension of legacy media propaganda!” He urged people not to donate to the site: “Defund Wikipedia until balance is restored!” It’s worth taking a look at how the incident is described on Musk’s page, quite far down, and judging for yourself. What I see is a paragraph that first describes the physical gesture (“Musk thumped his right hand over his heart, fingers spread wide, and then extended his right arm out, emphatically, at an upward angle, palm down and fingers together”), goes on to say that “some” viewed it as a Nazi or a Roman salute, then quotes Musk disparaging those claims as “politicized,” while noting that he did not explicitly deny them. (There is also now a separate Wikipedia article, “Elon Musk salute controversy,” that goes into detail about the full range of reactions.)

This is not the first time Musk has gone after the site. In December, he posted on X, “Stop donating to Wokepedia.” And that wasn’t even his first bad Wikipedia pun. “I will give them a billion dollars if they change their name to Dickipedia,” he wrote, in an October, 2023, post. It seemed to be an ego thing at first. Musk objected to being described on his page as an “early investor” in Tesla, rather than as a founder, which is how he prefers to be identified, and seemed frustrated that he couldn’t just buy the site. But lately Musk’s beef has merged with a general conviction on the right that Wikipedia—which, like all encyclopedias, is a tertiary source that relies on original reporting and research done by other media and scholars—is biased against conservatives.

The Heritage Foundation, the think tank behind the Project 2025 policy blueprint, has plans to unmask Wikipedia editors who maintain their privacy using pseudonyms (these usernames are displayed in the article history but don’t necessarily make it easy to identify the people behind them) and whose contributions on Israel it deems antisemitic…(More)”.

Article by Solveig Bjørkholt: “This article presents an original database on international standards, constructed using modern data gathering methods. StanDat facilitates studies into the role of standards in the global political economy by (1) being a source for descriptive statistics, (2) enabling researchers to assess scope conditions of previous findings, and (3) providing data for new analyses, for example the exploration of the relationship between standardization and trade, as demonstrated in this article. The creation of StanDat aims to stimulate further research into the domain of standards. Moreover, by exemplifying data collection and dissemination techniques applicable to investigating less-explored subjects in the social sciences, it serves as a model for gathering, systematizing, and sharing data in areas where information is plentiful yet not readily accessible for research…(More)”.

Explainer by Perthusasia Centre: “Disinformation has been a tool of manipulation and control for centuries, from ancient military strategies to Cold War propaganda. With the rapid advancement of technology, it has evolved into a sophisticated and pervasive security threat that transcends traditional boundaries.

This explainer takes the definitions and examples from our recent Indo-Pacific Analysis Brief, Disinformation and cognitive warfare by Senior Fellow Alana Ford, and creates a simple, standalone guide for quick reference…(More)”.

Policy Briefing by Emma Karoune and Malvika Sharan: “The interdisciplinary nature of the data science workforce extends beyond the traditional notion of a “data scientist.” A successful data science team requires a wide range of technical expertise, domain knowledge and leadership capabilities. To strengthen such a team-based approach, this note recommends that institutions, funders and policymakers invest in developing and professionalising diverse roles, fostering a resilient data science ecosystem for the future.

By recognising the diverse specialist roles that collaborate within interdisciplinary teams, organisations can leverage deep expertise across multiple skill sets, enhancing responsible decision-making and fostering innovation at all levels. Ultimately, this note seeks to shift the perception of data science professionals from the conventional view of individual data scientists to a competency-based model of specialist roles within a team, each essential to the success of data science initiatives…(More)”.

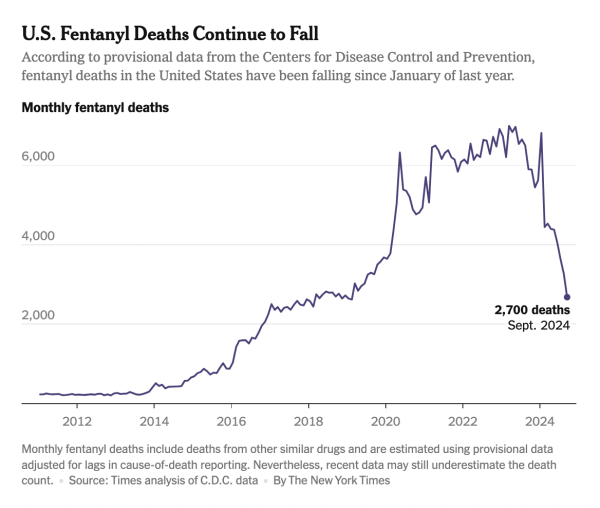

Article by Josh Katz and Margot Sanger-Katz: “One month ago, President Trump agreed to delay tariffs on Canada and Mexico after the two countries agreed to help stem the flow of fentanyl into the United States. On Tuesday, the Trump administration imposed the tariffs anyway, saying that the countries had failed to do enough — and claiming that tariffs would be lifted only when drug deaths fall.

But the administration has seemingly established an impossible standard. Real-time, national data on fentanyl overdose deaths does not exist, so there is no way to know whether Canada and Mexico were able to “adequately address the situation” since February, as the White House demanded.

“We need to see material reduction in autopsied deaths from opioids,” said Howard Lutnick, the commerce secretary, in an interview on CNBC on Tuesday, indicating that such a decline would be a precondition to lowering tariffs. “But you’ve seen it — it has not been a statistically relevant reduction of deaths in America.”

In a way, Mr. Lutnick is correct that there is no evidence that overdose deaths have fallen in the last month — since there is no such national data yet. His stated goal to measure deaths again in early April will face similar challenges.

But data through September shows that fentanyl deaths had already been falling at a statistically significant rate for months, causing overall drug deaths to drop at a pace unlike any seen in more than 50 years of recorded drug overdose mortality data.

The declines can be seen in provisional data from the Centers for Disease Control and Prevention, which compiles death records from states, which in turn collect data from medical examiners and coroners in cities and towns. Final national data generally takes more than a year to produce. But, as the drug overdose crisis has become a major public health emergency in recent years, the C.D.C. has been publishing monthly data, with some holes, at around a four-month lag…(More)”.

Book by Samir Saran and Anirban Sarma: “In an era defined by rapid technological change, a seismic shift is underway. From the rise of digital platforms that mediate our interactions—with markets, with governments and perhaps most importantly with each other as citizens— to the growing tension between our online personas and our real-world identities, the forces of technology, geography and society are colliding in ways we are only beginning to understand.

Even as technology opens up new opportunities for civic engagement, it simultaneously disrupts the very foundations of societal cohesion. The digital age has given rise to a new stage for global drama—one where surveillance, the weaponization of information and the erosion of trust in national and multilateral institutions are playing out in real time. But as these forces evolve, so too must our understanding of how individuals and societies can navigate them.

Will digital societies endure, or are they doomed to collapse under the weight of their own contradictions? Can democracy as we know it survive in a world where power is increasingly concentrated in the hands of a few tech giants? And as nations grapple with the changing dynamics of governance, how will international norms, laws and institutions adapt?

In GeoTechnoGraphy, Samir Saran and Anirban Sarma offer a compelling analysis of the forces reshaping the modern world. Drawing on groundbreaking research and incisive insights, they examine how the convergence of geography and technology—geotechnography—is redefining power and writing new rules for its exercise…(More)”.

Article by Ben Casselman and Colby Smith: “Comments from a member of President Trump’s cabinet over the weekend have renewed concerns that the new administration could seek to interfere with federal statistics — especially if they start to show that the economy is slipping into a recession.

In an interview on Fox News on Sunday, Howard Lutnick, the commerce secretary, suggested that he planned to change the way the government reports data on gross domestic product in order to remove the impact of government spending.

“You know that governments historically have messed with G.D.P.,” he said. “They count government spending as part of G.D.P. So I’m going to separate those two and make it transparent.”

It wasn’t immediately clear what Mr. Lutnick meant. The basic definition of gross domestic product is widely accepted internationally and has been unchanged for decades. It tallies consumer spending, private-sector investment, net exports, and government investment and spending to arrive at a broad measure of all goods and services produced in a country. The Bureau of Economic Analysis, which is part of Mr. Lutnick’s department, already produces a detailed breakdown of G.D.P. into its component parts. Many economists focus on a measure — known as “final sales to private domestic purchasers” — that excludes government spending and is often seen as a better indicator of underlying demand in the economy. That measure has generally shown stronger growth in recent quarters than overall G.D.P. figures.

In recent weeks, however, there have been mounting signs elsewhere that the economy could be losing momentum. Consumer spending fell unexpectedly in January, applications for unemployment insurance have been creeping upward, and measures of housing construction and home sales have turned down. A forecasting model from the Federal Reserve Bank of Atlanta predicts that G.D.P. could contract sharply in the first quarter of the year, although most private forecasters still expect modest growth.

Cuts to federal spending and the federal work force could act as a further drag on economic growth in coming months. Removing federal spending from G.D.P. calculations, therefore, could obscure the impact of the administration’s policies…(More)”.

Paper by Julian “Iñaki” Goñi: “Calls for democratising technology are pervasive in current technological discourse. Indeed, participating publics have been mobilised as a core normative aspiration in Science and Technology Studies (STS), driven by a critical examination of “expertise”. In a sense, democratic deliberation became the answer to the question of responsible technological governance, and science and technology communication. On the other hand, calls for technifying democracy are ever more pervasive in deliberative democracy’s discourse. Many new digital tools (“civic technologies”) are shaping democratic practice while navigating a complex political economy. Moreover, Natural Language Processing and AI are providing novel alternatives for systematising large-scale participation, automated moderation and setting up participation. In a sense, emerging digital technologies became the answer to the question of how to augment collective intelligence and reconnect deliberation to mass politics. In this paper, I explore the mutual shaping of (deliberative) democracy and technology (studies), highlighting that without careful consideration, both disciplines risk being reduced to superficial symbols in discourses inclined towards quick solutionism. This analysis highlights the current disconnect between Deliberative Democracy and STS, exploring the potential benefits of fostering closer links between the two fields. Drawing on STS insights, the paper argues that deliberative democracy could be enriched by a deeper engagement with the material aspects of democratic processes, the evolving nature of civic technologies through use, and a more critical approach to expertise. It also suggests that STS scholars would benefit from engaging more closely with democratic theory, which could enhance their analysis of public participation, bridge the gap between descriptive richness and normative relevance, and offer a more nuanced understanding of the inner functioning of political systems and politics in contemporary democracies…(More)”.