Introduction to Special Issue of California Management Review by Boyd Cohen, Esteve Almirall, and Henry Chesbrough: “This article introduces the special issue on the increasing role of cities as a driver for (open) innovation and entrepreneurship. It frames the innovation space being cultivated by proactive cities. Drawing on the diverse papers selected in this special issue, this introduction explores a series of tensions that are emerging as innovators and entrepreneurs seek to engage with local governments and citizens in an effort to improve the quality of life and promote local economic growth…Urbanization, the democratization of innovation and technology, and collaboration are converging paradigms helping to drive entrepreneurship and innovation in urban areas around the globe. These three factors are converging to drive innovation and entrepreneurship in cities and have been referred to as the urbanpreneur spiral….(More)”

Open-Sourcing Google Earth Enterprise

Geo Developers Blog: “We are excited to announce that we are open-sourcing Google Earth Enterprise (GEE), the enterprise product that allows developers to build and host their own private maps and 3D globes. With this release, GEE Fusion, GEE Server, and GEE Portable Server source code (all 470,000+ lines!) will be published on GitHub under the Apache2 license in March.

Originally launched in 2006, Google Earth Enterprise provides customers the ability to build and host private, on-premise versions of Google Earth and Google Maps. In March 2015, we announced the deprecation of the product and the end of all sales. To provide ample time for customers to transition, we have provided a two year maintenance period ending on March 22, 2017. During this maintenance period, product updates have been regularly shipped and technical support has been available to licensed customers….

GCP is increasingly used as a source for geospatial data. Google’s Earth Engine has made available over a petabyte of raster datasets which are readily accessible and available to the public on Google Cloud Storage. Additionally, Google uses Cloud Storage to provide data to customers who purchase Google Imagery today. Having access to massive amounts of geospatial data, on the same platform as your flexible compute and storage, makes generating high quality Google Earth Enterprise Databases and Portables easier and faster than ever.

We will be sharing a series of white papers and other technical resources to make it as frictionless as possible to get open source GEE up and running on Google Cloud Platform. We are excited about the possibilities that open-sourcing enables, and we trust this is good news for our community. We will be sharing more information when we launch the code in March on GitHub. For general product information, visit the Google Earth Enterprise Help Center. Review the essential and advanced training for how to use Google Earth Enterprise, or learn more about the benefits of Google Cloud Platform….(More)”

How States Engage in Evidence-Based Policymaking

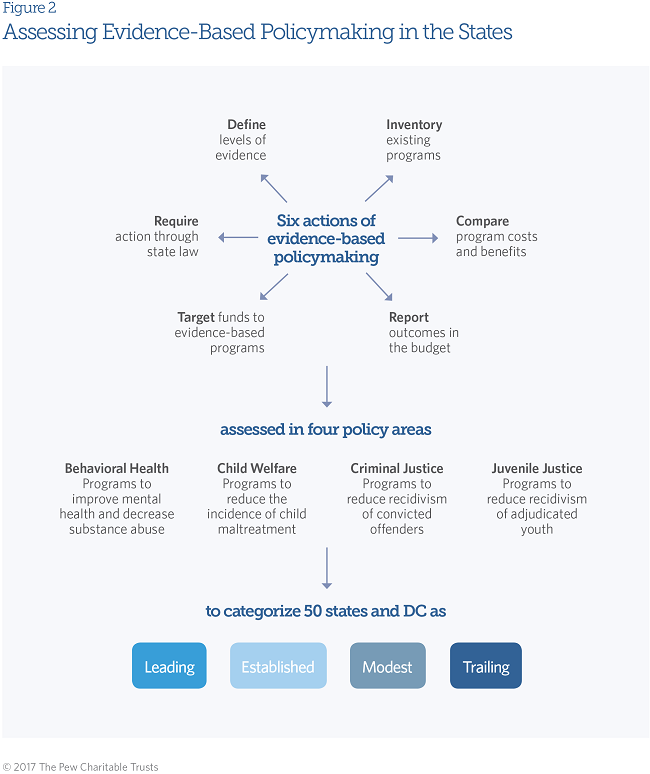

The Pew Charitable Trusts: “Evidence-based policymaking is the systematic use of findings from program evaluations and outcome analyses (“evidence”) to guide government policy and funding decisions. By focusing limited resources on public services and programs that have been shown to produce positive results, governments can expand their investments in more cost-effective options, consider reducing funding for ineffective programs, and improve the outcomes of services funded by taxpayer dollars.

While the term “evidence-based policymaking” is growing in popularity in state capitols, there is limited information about the extent to which states employ the approach. This report seeks to address this gap by: 1) identifying six distinct actions that states can use to incorporate research findings into their decisions, 2) assessing the prevalence and level of these actions within four human service policy areas across 50 states and the District of Columbia, and 3) categorizing each state based on the final results….

Although many states are embracing evidence-based policymaking, leaders often face challenges in embedding this approach into the decision-making process of state and local governments. This report identifies how staff and stakeholder education, strong data infrastructure, and analytical and technical capacity can help leaders build and sustain support for this work and achieve better outcomes for their communities.”

What Does Big Data Mean For Sustainability?

Saurabh Tyagi at Sustainable Brands: “Everything around us is impacted by big data today. The phenomenon took shape earlier in this decade and there are now a growing number of compelling ways in which big data analytics is being applied to solve real-world problems….Out of the many promises of big data, environmental sustainability is one of the most important ones to implement and maintain. Why so?

Climate change has moved to the top of the list of global risks, affecting every country and disrupting economies. While a major part of this damage is irreversible, it is still possible, with the use of a wide range of technological measures, to control the global increase in temperature. Big data can generate useful insights that can be as relevant to fostering environmental sustainability as they have been to other sectors such as healthcare.

Understanding operations

Big data’s usefulness is in its ability to help businesses understand and act on the environmental impacts of their operations. Some of these are within their boundaries while others are outside their direct control. Previously, this information was dispersed across different formats, locations and sites. However, now businesses are trying to make out the end-to-end impact of their operations throughout the value chain. This includes things that are outside of their direct control, including raw material sourcing, employee travels, product disposal, and the like.

Assessing environmental risks

Big data is also useful in assessing environmental risks. For example, Aqueduct is an interactive water-risk mapping tool from the World Resources Institute that monitors and calculates water risk anywhere in the world based on various parameters related to the water’s quantity, quality and other changing regulatory issues in that area. With this free online tool, users can choose the factors on which they want to focus and also zoom in on a particular location.

Big data is also enabling environmental sustainability by helping us to understand the demand for energy and food as the world population increases and climate change reduces these resources by every passing year.

Optimizing resource usage

Another big contribution of big data to the corporate world is its ability to help businesses optimize their usage of resources. At the Initiative for Global Environment Leadership (IGEL) conference in 2014, David Parker, VP of Big Data for SAP, discussed how Italian tire company Pirelli uses SAP’s big data management system, HANA, to optimize its inventory. The company uses data generated by sensors in its tires globally to reduce waste, increase profits and reduce the number of defective tires going to landfills, thus doing its bit for the environment. Similarly, Dutch energy company Alliander uses HANA to maintain the grid’s peak efficiency, which in turn increases profits and reduces environmental impact. While at one time it used to take 10 weeks for the company to optimize the grid, now it takes only three days to accomplish the same; a task which Alliander used to do once a year can now be accomplished once every month….

Big data helps better regulation

Big data can also be integrated into government policies to ensure better environmental regulation. Governments can now implement the latest sensor technology and adopt real-time reporting of environmental quality data. This data can be used to monitor the emissions of large utility facilities and, if required, to put a regulatory framework in place to regularize the emissions. The firms are given complete freedom to experiment and choose the best possible means of achieving the required result….(More)”

Documenting Hate

Shan Wang at NiemanLab: “A family’s garage vandalized with an image of a swastika and a hateful message targeted at Arabs. Jewish community centers receiving bomb threats. These are just a slice of the incidents of hate across the country after the election of Donald Trump — but getting reliable data on the prevalence of hate and bias crimes to answer questions about whether these sorts of crimes are truly on the rise is nearly impossible.

ProPublica, which led an effort of more than a thousand reporters and students across the U.S. to cover voting problems on Election Day as part of its Electionland project, is now leaning on the collaborative and data-driven Electionland model to track and cover hate crimes.

Documenting Hate, launched last week, is a hate and bias crime-tracking project headed up by ProPublica and supported by a coalition of news and digital media organizations, universities, and civil rights groups like Southern Poverty Law Center (which has been tracking hate groups across the country). Like Electionland, the project is seeking local partners, and will share its data with and guide local reporters interested in writing relevant stories.

“Hate crimes are inadequately tracked,” Scott Klein, assistant managing editor at ProPublica, said. “Local police departments do not report up hate crimes in any consistent way, so the federal data is woefully inadequate, and there’s no good national data on hate crimes. The data is at best locked up by local police departments, and the best we can know is a local undercount.”

Documenting Hate offers a form for anyone to report a hate or bias crime (emphasizing that “we are not law enforcement and will not report this information to the police,” nor will it “share your name and contact information with anybody outside our coalition without your permission”). ProPublica is working with Meedan (whose verification platform Check it also used for Electionland) and crowdsourced crisis-mapping group Ushahidi, as well as several journalism schools, to verify reports coming in through social channels. Ken Schwencke, who helped build the infrastructure for Electionland, is now focused on things like building backend search databases for Documenting Hate, which can be shared with local reporters. The hope is that many stories, interactives, and a comprehensive national database will emerge and paint a fuller picture of the scope of hate crimes in the U.S.

ProPublica is actively seeking local partners, who will have access to the data as well as advice on how to report on sensitive information (no partners to announce just yet, though there’s been plenty of inbound interest, according to Klein). Some of the organizations working with ProPublica were already seeking reader stories of their own….(More)”.

Mass Observation: The amazing 80-year experiment to record our daily lives

William Cook at BBC Arts: “Eighty years ago, on 30th January 1937, the New Statesman published a letter which launched the largest (and strangest) writers’ group in British literary history.

An anthropologist called Tom Harrisson, a journalist called Charles Madge and a filmmaker called Humphrey Jennings wrote to the magazine asking for volunteers to take part in a new project called Mass Observation. Over a thousand readers responded, offering their services. Remarkably, this ‘scientific study of human social behaviour’ is still going strong today.

Mass Observation was the product of a growing interest in the social sciences, and a growing belief that the mass media wasn’t accurately reflecting the lives of so-called ordinary people. Instead of entrusting news gathering to jobbing journalists, who were under pressure to provide the stories their editors and proprietors wanted, Mass Observation recruited a secret army of amateur reporters, to track the habits and opinions of ‘the man in the street.’

Ironically, the three founders of this egalitarian movement were all extremely well-to-do. They’d all been to public schools and Oxbridge, but this was the ‘Age of Anxiety’, when capitalism was in chaos and dangerous demagogues were on the rise (plus ça change…).

For these idealistic public schoolboys, socialism was the answer, and Mass Observation was the future. By finding out what ‘ordinary’ folk were really doing, and really thinking, they would forge a new society, more attuned to the needs of the common man.

Mass Observation selected 500 citizen journalists, and gave them regular ‘directives’ to report back on virtually every aspect of their daily lives. They were guaranteed anonymity, which gave them enormous freedom. People opened up about themselves (and their peers) to an unprecedented degree.

Even though they were all unpaid, correspondents devoted a great deal of time to this endeavour – writing at great length, in great detail, over many years. As well as its academic value, Mass Observation proved that autobiography is not the sole preserve of the professional writer. For all of us, the urge to record and reflect upon our lives is a basic human need.

The Second World War was the perfect forum for this vast collective enterprise. Mass Observation became a national diary of life on the home front. For historians, the value of such uncensored revelations is enormous. These intimate accounts of air raids and rationing are far more revealing and evocative than the jolly state-sanctioned reportage of the war years.

After the war, Mass Observation became more commercial, supplying data for market research, and during the 1960s this extraordinary experiment gradually wound down. It was rescued from extinction by the historian Asa Briggs….

The founders of Mass Observation were horrified by what they called “the revival of racial superstition.” Hitler, Franco and Mussolini were in the forefront of their minds. “We are all in danger of extinction from such outbursts of atavism,” they wrote, in 1937. “We look to science to help us, only to find that science is too busy forging new weapons of mass destruction.”

For its founders, Mass Observation was a new science which would build a better future. For its countless correspondents, however, it became something more than that – not merely a social science, but a communal work of art….(More)”.

Using data and design to support people to stay in work

Cat Drew at Civil Service Quarterly: “…Data and digital are fairly understandable concepts in policy-making. But design? Why is it one of the three Ds?

Policy Lab believes that design approaches are particularly suited to complex issues that have multiple causes and for which there is no one, simple answer. Design encourages people to think about the user’s needs (not just the organisation’s needs), brings in different perspectives to innovate new ideas, and then prototypes (mocks them up and tries them out) to iteratively improve ideas until they find one that can be scaled up.

Policy Lab also recognises that data alone cannot solve policy problems, and has been experimenting with how to combine numerical and more human practices. Data can explain what is happening, while design research methods – such as ethnography, observing people’s behaviours – can explain why things are happening. Data can be used to automate and tailor public services; while design means frontline delivery staff and citizens will actually know about and use them. Data-rich evidence is highly valued by policy-makers; and design can make it understandable and accessible to a wider group of people, opening up policy-making in the process.

The Lab is also experimenting with new data methods.

Data science can be used to look at complex, unstructured data (social media data, for example), in real time. Digital data, such as social media data or internet searches, can reveal how people behave (rather than how they say they behave). It can also look at huge amounts of data far quicker than humans, and find unexpected patterns hidden in the data. Powerful computers can identify trends from historical data and use these to predict what might happen in the future.

Supporting people in work project

The project took a DDD approach to generating insight and then creating ideas. The team (including the data science organisation Mastodon C and design agency Uscreates) used data science techniques together with ethnography to create a rich picture about what was happening. Then it used design methods to create ideas for digital services with the user in mind, and these were prototyped and tested with users.

The data science confirmed many of the known risk factors, but also revealed some new insights. It told us what was happening at scale, and the ethnography explained why.

- The data science showed that people were more likely to go onto sickness benefits if they had been in the job a shorter time. The ethnography explained that the relationship with the line manager and a sense of loyalty were key factors in whether someone stayed in work or went onto benefits.

- The data science showed that women with clinical depression were less likely to go onto sickness benefits than men with the same condition. The ethnography revealed how this played out in real life:

- For example, Ella [not her real name], a teacher from London, had been battling with depression at work for a long time but felt unable to go to her boss about it. She said she was “relieved” when she got cancer, because she could talk to her boss about a physical condition and got time off to deal with both illnesses.

- The data science also allowed the segmentation of groups of people who said they were on health-related benefits. Firstly, the clustering revealed that two groups had average health ratings, indicating that other non-health-related issues might be driving this. Secondly, it showed that these two groups were very different (one older group of men with previously high pay and working hours; the other of much younger men with previously low pay and working hours). The conclusion was that their motivations and needs to stay in work – and policy interventions – would be different.

- The ethnography highlighted other issues that were not captured in the data but would be important in designing solutions, such as: a lack of shared information across the system; the need of the general practitioner (GP) to refer patients to other non-health services as well as providing a fit note; and the importance of coaching, confidence-building and planning….(More)”
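The segmentation step described in the list above can be illustrated with a small sketch. The article does not say which clustering algorithm or which variables the team used, so this assumes plain k-means on two invented features (age and previous weekly working hours); every record, name and starting centroid below is hypothetical.

```python
from math import dist


def kmeans(points, centroids, iterations=20):
    """Plain k-means: assign each point to its nearest centroid,
    then move each centroid to the mean of its members."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(iterations):
        # Assignment step: index of the closest centroid for every point.
        labels = [
            min(range(len(centroids)), key=lambda k: dist(p, centroids[k]))
            for p in points
        ]
        # Update step: mean of each cluster (keep the old centroid if empty).
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(centroids[k])
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return labels, centroids


# Invented records: (age, previous weekly working hours). Not real data.
claimants = [(55, 45), (58, 42), (52, 48), (24, 18), (22, 20), (27, 16)]

# Assumed starting centroids: the first and last record.
labels, centroids = kmeans(claimants, [claimants[0], claimants[-1]])
print(labels)
print(centroids)
```

With data this cleanly separated, the two clusters correspond to an older, longer-hours group and a younger, shorter-hours group. Real survey data would need feature scaling and a more careful choice of the number of clusters.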

GSK and MIT Flumoji app tracks influenza outbreaks with crowdsourcing

Beth Snyder Bulik at FiercePharma: “It’s like Waze for the flu. A new GlaxoSmithKline-sponsored app called Flumoji uses crowdsourced data to track influenza movement in real time.

Developed with MIT’s Connection Science, the Flumoji app gathers data passively and identifies fluctuations in users’ activity and social interactions to try to identify when a person gets the flu. The activity data is combined with traditional flu tracking data from the Centers for Disease Control to help determine outbreaks. The Flumoji study runs through April, when it will be taken down from the Android app store and no more data will be collected from users.
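As a rough illustration of what “identifying fluctuations in users’ activity” might look like, here is a minimal sketch. The article does not describe Flumoji’s actual method, so this assumes a simple rule: flag a day when activity falls well below the user’s own recent average. The function name, window length, threshold and step counts are all hypothetical.

```python
from statistics import mean, stdev


def flag_unusual_days(daily_activity, baseline_days=7, threshold=2.0):
    """Return indices of days whose activity is more than `threshold`
    standard deviations below the mean of the preceding window."""
    flagged = []
    for i in range(baseline_days, len(daily_activity)):
        window = daily_activity[i - baseline_days:i]
        mu, sigma = mean(window), stdev(window)
        # Only flag drops, and skip windows with no variation at all.
        if sigma > 0 and (mu - daily_activity[i]) / sigma > threshold:
            flagged.append(i)
    return flagged


# Invented daily step counts for one user; the last day shows a sharp drop.
steps = [8000, 8200, 7900, 8100, 8300, 7800, 8000, 8100, 2500]
flagged = flag_unusual_days(steps)
print(flagged)
```

A drop like this would only be a hint that something changed. According to the article, the app combines the activity data with traditional flu tracking data from the Centers for Disease Control to help determine outbreaks.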

To make the app more engaging for users, Flumoji uses emojis to help users identify how they’re feeling. If it’s a flu day, symptom faces with thermometers, runny noses and coughs can be chosen, while on other days, users can show how they’re feeling with more traditional mood emojis.

The app has been installed on 500-1,000 Android phones, according to Google Play data.

“Mobile phones are a widely available and efficient way to monitor patient health. GSK has been using them in its studies to monitor activity and vital signs in study patients, and collect patient feedback to improve decision making in the development of new medicines. Tracking the flu is just the latest test of this technology,” Mary Anne Rhyne, a GSK director of external communications for R&D in the U.S., told FiercePharma in an email interview…(More)”

Quantifying scenic areas using crowdsourced data

Chanuki Illushka Seresinhe, Helen Susannah Moat and Tobias Preis in Environment and Planning B: Urban Analytics and City Science: “For centuries, philosophers, policy-makers and urban planners have debated whether aesthetically pleasing surroundings can improve our wellbeing. To date, quantifying how scenic an area is has proved challenging, due to the difficulty of gathering large-scale measurements of scenicness. In this study we ask whether images uploaded to the website Flickr, combined with crowdsourced geographic data from OpenStreetMap, can help us estimate how scenic people consider an area to be. We validate our findings using crowdsourced data from Scenic-Or-Not, a website where users rate the scenicness of photos from all around Great Britain. We find that models including crowdsourced data from Flickr and OpenStreetMap can generate more accurate estimates of scenicness than models that consider only basic census measurements such as population density or whether an area is urban or rural. Our results provide evidence that by exploiting the vast quantity of data generated on the Internet, scientists and policy-makers may be able to develop a better understanding of people’s subjective experience of the environment in which they live….(More)”

Conceptualizing Big Social Data

Ekaterina Olshannikova, Thomas Olsson, Jukka Huhtamäki and Hannu Kärkkäinen in the Journal of Big Data: “The popularity of social media and computer-mediated communication has resulted in high-volume and highly semantic data about digital social interactions. This constantly accumulating data has been termed as Big Social Data or Social Big Data, and various visions about how to utilize that have been presented. However, as relatively new concepts, there are no solid and commonly agreed definitions of them. We argue that the emerging research field around these concepts would benefit from understanding about the very substance of the concept and the different viewpoints to it. With our review of earlier research, we highlight various perspectives to this multi-disciplinary field and point out conceptual gaps, the diversity of perspectives and lack of consensus in what Big Social Data means. Based on detailed analysis of related work and earlier conceptualizations, we propose a synthesized definition of the term, as well as outline the types of data that Big Social Data covers. With this, we aim to foster future research activities around this intriguing, yet untapped type of Big Data.

Conceptual map of various BSD/SBD interpretations in the related literature. This illustration depicts four main domains, which were studied by different researchers from various perspectives and intersections of science field/data types….(More)”.