Stefaan Verhulst

Drew Lindsay at the Chronicle of Philanthropy: “Fresh from municipal bankruptcy and locked in a court-mandated spending plan, Central Falls, R.I., controlled little of its budget. But officials found wiggle room in their fiscal straitjacket by borrowing a new idea from the world of philanthropy.

About a year ago, they launched a crowdfunding campaign similar to what’s found on Kickstarter, the online platform where artists, entrepreneurs, and others seek donations to bankroll creative projects. Using a Kickstarter-like website, the former mill town posted a proposal to beautify and clean up its landmark park. It promoted the project through videos, mass emails, and social and mainstream media—typical fundraising tools. Within weeks, it had raised $10,000 to buy new bins for trash and recycling in the park, designed by local artists as public art.

Central Falls is one of dozens of municipalities that have gone hat in hand online in recent years. They are part of a niche group of local governments, nonprofits, and community groups experimenting with “civic crowdfunding” campaigns to raise cash for programs and infrastructure designed for the common good.

The campaigns are typically small, aiming to raise from $5,000 to $30,000 and pay for things that might not even merit a line item in a municipal budget. In Philadelphia’s first successful crowdfunding campaign, for instance, it raised $2,163 for a youth garden program—this when the city spends about $4.5-billion a year.

The architects of civic crowdfunding campaigns are using the cash raised online to attract bigger dollars from state and federal sources. They’re also earning grants from private foundations that see robust crowdfunding as evidence of community backing for a project….

Several online platforms devoted to civic crowdfunding have launched in the United States in recent years, among them Citizinvestor, which worked with Central Falls and Philadelphia; ioby, a partner in the Denver bike-lane campaign; and Neighbor.ly, whose projects include neighborhood-based crowdfunding campaigns to expand a Kansas City, Mo., bike-sharing program.

Each typically takes a small commission from funds raised—usually 5 percent or less, plus a smaller percentage to cover credit-card transaction fees…. (More).”

New book by Frank Pasquale on “The Secret Algorithms That Control Money and Information”: “Every day, corporations are connecting the dots about our personal behavior—silently scrutinizing clues left behind by our work habits and Internet use. The data compiled and portraits created are incredibly detailed, to the point of being invasive. But who connects the dots about what firms are doing with this information? The Black Box Society argues that we all need to be able to do so—and to set limits on how big data affects our lives.

Hidden algorithms can make (or ruin) reputations, decide the destiny of entrepreneurs, or even devastate an entire economy. Shrouded in secrecy and complexity, decisions at major Silicon Valley and Wall Street firms were long assumed to be neutral and technical. But leaks, whistleblowers, and legal disputes have shed new light on automated judgment. Self-serving and reckless behavior is surprisingly common, and easy to hide in code protected by legal and real secrecy. Even after billions of dollars of fines have been levied, underfunded regulators may have only scratched the surface of this troubling behavior….(More).”

Springwise: “We’ve already seen Harvard Pilgrim Health Care’s EatRight rewards scheme use tracking technology to monitor employees’ food shopping habits and Alfa-Bank in Russia — which rewards customers for every step they run. Now, Oscar Insurance is providing customers with a free Misfit Flash fitness tracker and encouraging them to reach their recommended 10,000 steps a day – rewarding them with up to USD 240 per year in Amazon vouchers.

The New York-based startup was inspired by the US Surgeon General’s recommendation that walking every day can have a real impact on many of the top killers in the US — such as obesity, diabetes and high blood pressure. Oscar Insurance’s new policy is distinctive amongst similar initiatives in that it aims to encourage regular, gentle exercise with a small reward — USD 1 per day or USD 20 per month in Amazon gift cards — but has no built-in financial punishments.

To begin, customers download the companion app, which automatically syncs with their free wristband. They are then set a personal daily goal — influenced by their current fitness and sometimes as low as 2,000 steps per day. The initial goal gradually increases, much like a normal fitness regime. This is the latest addition to Oscar Insurance’s technology-driven policies, which also enable the customer to connect with healthcare professionals in their area and allow patients and doctors to track and review their healthcare details….”
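The reward arithmetic can be sketched in a few lines. This is only an illustration, assuming the payout is USD 1 for each day the goal is met, capped at USD 20 a month (a cap that is consistent with the USD 240 annual ceiling quoted above). The function names are hypothetical and do not come from Oscar Insurance.

```python
def monthly_reward(goal_days, per_day_usd=1, monthly_cap_usd=20):
    """Reward for one month: USD 1 per day the goal was met, capped at the monthly limit."""
    # The cap and per-day rate are assumptions taken from the figures in the excerpt.
    return min(goal_days * per_day_usd, monthly_cap_usd)


def yearly_reward(goal_days_by_month):
    """Sum the capped monthly rewards over a year."""
    return sum(monthly_reward(days) for days in goal_days_by_month)


# A customer who meets the goal on 25 days of every month reaches the annual ceiling.
print(yearly_reward([25] * 12))
```

Under these assumptions, meeting the goal on 20 days a month is already enough to earn the full annual amount.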

Article by Cem Dilmegani, Bengi Korkmaz, and Martin Lundqvist from McKinsey: “Citizens and businesses now expect government information to be readily available online, easy to find and understand, and at low or no cost. Governments have many reasons to meet these expectations by investing in a comprehensive public-sector digital transformation. Our analysis suggests that capturing the full potential of government digitization could free up to $1 trillion annually in economic value worldwide, through improved cost and operational performance. Shared services, greater collaboration and integration, improved fraud management, and productivity enhancements enable system-wide efficiencies. At a time of increasing budgetary pressures, governments at national, regional, and local levels cannot afford to miss out on those savings.

Indeed, governments around the world are doing their best to meet citizen demand and capture benefits. More than 130 countries have online services. For example, Estonia’s 1.3 million residents can use electronic identification cards to vote, pay taxes, and access more than 160 services online, from unemployment benefits to property registration. Turkey’s Social Aid Information System has consolidated multiple government data sources into one system to provide citizens with better access and faster decisions on its various aid programs. The United Kingdom’s gov.uk site serves as a one-stop information hub for all government departments. Such online services also provide greater access for rural populations, improve quality of life for those with physical infirmities, and offer options for those whose work and lifestyle demands don’t conform to typical daytime office hours.

However, despite all the progress made, most governments are far from capturing the full benefits of digitization. To do so, they need to take their digital transformations deeper, beyond the provision of online services through e-government portals, into the broader business of government itself. That means looking for opportunities to improve productivity, collaboration, scale, process efficiency, and innovation….

While digital transformation in the public sector is particularly challenging, a number of successful government initiatives show that by translating private-sector best practices into the public context it is possible to achieve broader and deeper public-sector digitization. Each of the six most important levers is best described by success stories….(More).”

at the SmartChicagoCollaborative: “…Having vast stores of government data is great, but to make this data useful – powerful – takes a different type of approach. The next step in the open data movement will be about participatory data.

Systems that talk back

One of the great advantages behind Chicago’s 311 ServiceTracker is that when you submit something to the system, the system has the capacity to talk back, giving you a tracking number and an option to get email updates about your request. What also happens is that as soon as you enter your request, the data get automatically uploaded into the city’s data portal, giving other 311 apps like SeeClickFix access to the information as well…

Participatory Legislative Apps

We already see a number of apps that allow users to actively participate using legislative data.

At the Federal level, apps like PopVox allow users to find and track legislation that’s making its way through Congress. The app then allows users to vote if they approve or disapprove of a particular bill. You can then explain your reasoning in a message that will be sent to all of your elected officials. The app makes it easier for residents to send feedback on legislation by creating a user interface that cuts through the somewhat difficult process of keeping tabs on legislation.

At the state level, New York’s OpenLegislation site allows users to search for state legislation and provide commentary on each resolution.

At the local level, apps like Councilmatic allow users to post comments on city legislation – but these comments aren’t mailed or sent to aldermen the same way PopVox does. The interaction only works if the aldermen are also using Councilmatic to receive feedback…

Crowdsourced Data

Chicago has hardwired several datasets into their computer systems, meaning that this data is automatically updated as the city does the people’s business.

But city governments can’t be everywhere at once. There are a number of apps that are designed to gather information from residents to better understand what’s going on in their cities.

In Gary, IN, the city partnered with the University of Chicago and LocalData to collect information on the state of buildings. LocalData is also being used in Chicago, Houston, and Detroit by both city governments and non-profit organizations.

Another method the City of Chicago has been using to crowdsource data has been to put several of their datasets on GitHub and accept pull requests on that data. (A pull request is when one developer makes a change to a code repository and asks the original owner to merge the new changes into the original repository.) An example of this is bikers adding private bike rack locations to the city’s own bike rack dataset.

Going from crowdsourced to participatory

Shareabouts is a mapping platform by OpenPlans that gives cities the ability to collect resident input on city infrastructure. Chicago’s Divvy Bikeshare program is using the tool to collect resident feedback on where the new Divvy stations should go. The app allows users to comment on suggested locations and share the discussion on social media.

But perhaps the most unique participatory app has been piloted by the City of South Bend, Indiana. CityVoice is a Code for America fellowship project designed to get resident feedback on abandoned buildings in South Bend…. (More)”

Antonio Salazar at BBVA Open Mind: “…A good infographic can save lives. Or almost, like the map drawn by Dr. Snow, who in 1854 tried to prove —with not much success, it’s true— that cholera spread by water. Not all graphics can be so ambitious, but when they hope to become a very powerful vehicle for knowledge, these are three elements that an excellent infographic should have. In order:

1. Rigor

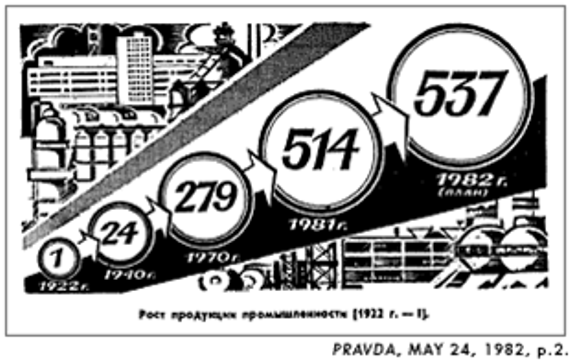

This is taken for granted. Or not. This chart from Pravda (not Miuccia, the Soviet daily) is manipulated graphically to give the impression of growth:

But rigor does not only mean accuracy, it means respect for the data in a broader sense. Letting them speak beyond the ornamentation, avoiding the “ducks” referred to by Edward R. Tufte. Guiding our eye so the reader can discover them one step at a time. This brings us to the second quality:

2. Depth

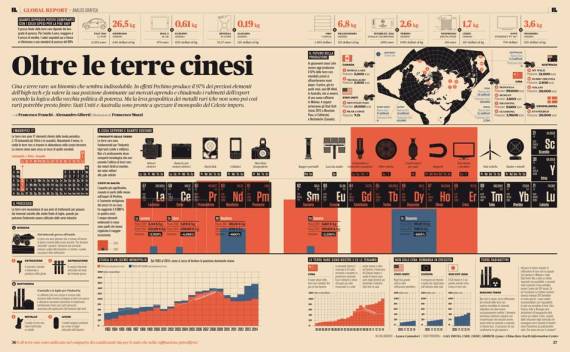

The beauty of the data sometimes lies in their implications and their connections. This is why a good infographic should be understandable at first sight, but also, when viewed for a second time, enable the reader to take pleasure in the data. Perhaps in the process of reasoning, both analytical and visual, that a graphic requires, we may come to a conclusion we hadn’t imagined. This is why a good infographic should have layers of information. Any infographic by Francesco Franchi is a wonder of visual richness and provides hours of entertainment and learning.

3. Narration

Storytelling in today’s parlance. When time, space and even meteorology are combined skillfully to tell a story through the data….”

New report by the World Bank: “Data provide critical inputs in designing effective development policy recommendations, supporting their implementation, and evaluating results. In this new report “Big Data in Action for Development,” the World Bank Group collaborated with Second Muse, a global innovation agency, to explore big data’s transformative potential for socioeconomic development. The report develops a conceptual framework to work with big data in the development sector and presents a variety of case studies that lay out big data’s innovations, challenges, and opportunities.”

Eric B. Steiner at Wired: “A few weeks ago, I asked the internet to guess how many coins were in a huge jar…The mathematical theory behind this kind of estimation game is apparently sound. That is, the mean of all the estimates will be uncannily close to the actual value, every time. James Surowiecki’s best-selling book, The Wisdom of Crowds, banks on this principle, and details several striking anecdotes of crowd accuracy. The most famous is a 1906 competition in Plymouth, England to guess the weight of an ox. As reported by Sir Francis Galton in a letter to Nature, no one guessed the actual weight of the ox, but the average of all 787 submitted guesses was exactly the beast’s actual weight….

So what happened to the collective intelligence supposedly buried in our disparate ignorance?

Most successful crowdsourcing projects are essentially the sum of many small parts: efficiently harvested resources (information, effort, money) courtesy of a large group of contributors. Think Wikipedia, Google search results, Amazon’s Mechanical Turk, and Kickstarter.

But a sum of parts does not wisdom make. When we try to produce collective intelligence, things get messy. Whether we are predicting the outcome of an election, betting on sporting contests, or estimating the value of coins in a jar, the crowd’s take is vulnerable to at least three major factors: skill, diversity, and independence.

A certain amount of skill or knowledge in the crowd is obviously required, while crowd diversity expands the number of possible solutions or strategies. Participant independence is important because it preserves the value of individual contributors, which is another way of saying that if everyone copies their neighbor’s guess, the data are doomed.

Failure to meet any one of these conditions can lead to wildly inaccurate answers, information echo, or herd-like behavior. (There is more than a little irony with the herding hazard: The internet makes it possible to measure crowd wisdom and maybe put it to use. Yet because people tend to base their opinions on the opinions of others, the internet ends up amplifying the social conformity effect, thereby preventing an accurate picture of what the crowd actually thinks.)
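The independence condition can be illustrated with a small sketch. It is not taken from the article: the guesses, the true value, and the `herd` function (which pulls every guess toward the first one made public) are all hypothetical, chosen only to show how copying shifts the crowd's mean.

```python
from statistics import mean


def crowd_estimate(guesses):
    """Aggregate a crowd's guesses by taking their arithmetic mean."""
    return mean(guesses)


def herd(private_guesses, conformity):
    """Pull every guess toward the first guess that was made public.

    conformity=0 leaves the guesses independent; conformity=1 makes
    everyone copy the first guesser outright.
    """
    anchor = private_guesses[0]
    return [conformity * anchor + (1 - conformity) * g for g in private_guesses]


# Hypothetical private guesses for a jar whose true value is 1200.
TRUE_VALUE = 1200
private = [1500, 900, 1100, 1300, 1000, 1400]

independent_error = abs(crowd_estimate(private) - TRUE_VALUE)
herded_error = abs(crowd_estimate(herd(private, 0.5)) - TRUE_VALUE)
print(independent_error, herded_error)
```

In this toy data the individual errors cancel out when the guesses stay independent, while the herded crowd's mean drifts toward the first guesser's overestimate. Real crowds are messier, but the mechanism is the same: copied guesses stop cancelling each other's errors.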

What’s more, even when these conditions—skill, diversity, independence—are reasonably satisfied, as they were in the coin jar experiment, humans exhibit a whole host of other cognitive biases and irrational thinking that can impede crowd wisdom. True, some bias can be positive; all that Gladwellian snap-judgment stuff. But most biases aren’t so helpful, and can too easily lead us to ignore evidence, overestimate probabilities, and see patterns where there are none. These biases are not vanquished simply by expanding sample size. On the contrary, they get magnified.

Given the last 60 years of research in cognitive psychology, I submit that Galton’s results with the ox weight data were outrageously lucky, and that the same is true of other instances of seemingly perfect “bean jar”-styled experiments….”

Lindsay Clark in ComputerWeekly: “Open data, a movement which promises access to vast swaths of information held by public bodies, has started getting its hands dirty, or rather its feet.

Before a spade goes in the ground, construction and civil engineering projects face a great unknown: what is down there? In the UK, should someone discover anything of archaeological importance, a project can be halted – sometimes for months – while researchers study the site and remove artefacts….

During an open innovation day hosted by the Science and Technology Facilities Council (STFC), open data services and technology firm Democrata proposed that analytics could predict the likelihood of unearthing an archaeological find in any given location. This would help developers understand the likely risks to construction and would assist archaeologists in targeting digs more accurately. The idea was inspired by a presentation from the Archaeology Data Service in the UK at the event in June 2014.

The proposal won support from the STFC which, together with IBM, provided a nine-strong development team and access to the Hartree Centre’s supercomputer – a 131,000-core high-performance facility. For natural language processing of historic documents, the system uses two components of IBM’s Watson – the AI service which famously won the US TV quiz show Jeopardy!. The system uses SPSS modelling software, the language R for algorithm development and Hadoop data repositories….

The proof of concept draws together data from the University of York’s archaeological data, the Department of the Environment, English Heritage, Scottish Natural Heritage, Ordnance Survey, Forestry Commission, Office for National Statistics, the Land Registry and others….The system analyses sets of indicators of archaeology, including historic population dispersal trends, specific geology, flora and fauna considerations, as well as proximity to a water source, a trail or road, standing stones and other archaeological sites. Earlier studies created a list of 45 indicators which was whittled down to seven for the proof of concept. The team used logistic regression to assess the relationship between input variables and come up with its prediction….”
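For readers unfamiliar with logistic regression, the following is a minimal sketch of the technique, not the team's actual model. The article says the project used R and SPSS; Python is used here purely for illustration. The two indicators (distance to water and distance to a known site, in km) and all of the data points are hypothetical.

```python
import math


def sigmoid(z):
    """Map a real-valued score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(rows, labels, lr=0.1, epochs=5000):
    """Fit weights and a bias by batch gradient descent on the log-loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            err = p - y  # gradient of the log-loss with respect to the score
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(weights, bias, x):
    """Estimated probability of a find at a location with indicators x."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


# Hypothetical training data: (km to water, km to nearest known site).
sites = [(0.2, 0.5), (0.5, 1.0), (1.0, 0.8), (3.0, 4.0), (4.0, 3.5), (5.0, 5.0)]
found = [1, 1, 1, 0, 0, 0]  # 1 = archaeology was found there, 0 = nothing found

weights, bias = fit_logistic(sites, found)
print(predict(weights, bias, (0.3, 0.6)), predict(weights, bias, (4.5, 4.5)))
```

With this toy data the fitted model assigns a higher probability to locations close to water and to known sites. A real model would need far more data, the seven indicators the team selected, and proper validation.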