Stefaan Verhulst

Chapter by Mireille Hildebrandt: “… investigates the link between the contestability that is key to constitutional democracies on the one hand and the falsifiability of scientific theories on the other hand, with regard to large language models (LLMs). Legally relevant decision-making that is based on the deployment of applications that involve LLMs must be contestable in a court of law. The current flavour of such contestability is focused on transparency, usually framed in terms of the explainability of the model (explainable AI). In the long run, however, the fairness and reliability of these models should be tested in a more scientific manner, based on the falsifiability of the theoretical framework that should underpin the model. This requires that researchers in the domain of LLMs learn to abduct theoretical frameworks, based on the output models of LLMs and the real world patterns they imply, while this abduction should be such that the theory can be inductively tested in a way that allows for falsification. On top of that, researchers need to conduct empirical research to enable such inductive testing. The chapter thus argues that the contestability required under the Rule of Law should move beyond explanations of how the model generates its output, to whether the real world patterns represented in the model output can falsify the theoretical framework that should inform the model…(More)”.

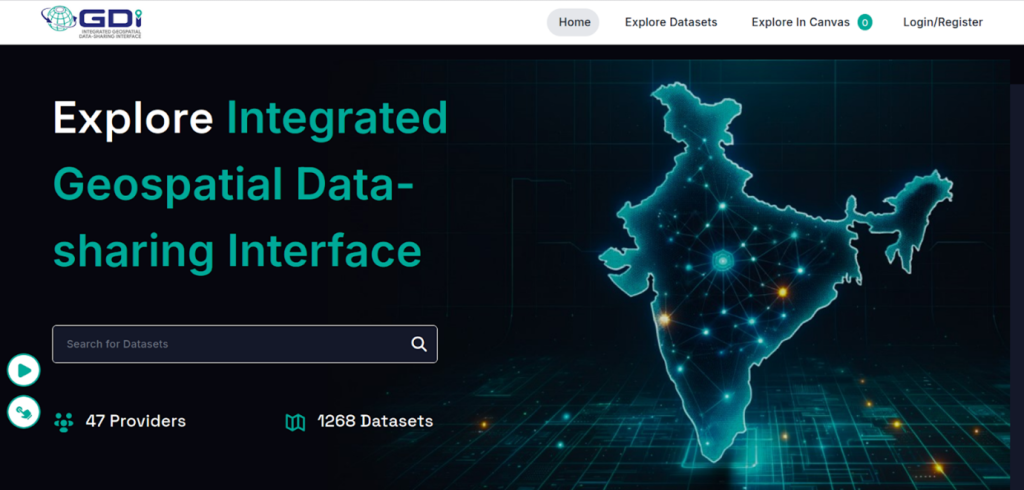

Article by Bryan Paul Robert, Mahidhar Chellamani, and Jyotirmoy Dutta: “For years, some of India’s most valuable geospatial datasets remained scattered across government departments, research institutes, or private organizations. They held immense potential to transform logistics, strengthen climate resilience, and support smarter urban planning, but they remained difficult to access, buried in different formats and lacking interoperability.

Recognizing this challenge, the Government of India through the Department of Science and Technology (DST) tasked the Centre of Data for Public Good (CDPG) at the Indian Institute of Science (IISc) with a bold vision: to develop a standards-based geospatial data exchange platform. The result was the Integrated Geospatial Data Exchange Interface (GDI) – a unified, open-access system built on OGC APIs, designed to make metadata-rich, analysis-ready geospatial data available for application developers, researchers, startups, and policymakers alike…

Designed to treat publicly funded geospatial data as a common good, GDI has established itself as a unified, open-access platform for interoperable, consent-based, analysis-ready, and metadata-rich data exchange using globally accepted standards. As a contribution to the third layer of the India Stack, it enables seamless sharing of geospatial data, accelerating applications in data science and empowering developers.

GDI catalogue (catalogue.geospatial.org.in)

Unlike traditional open data portals, GDI emphasizes secure, consent-based data sharing, where data providers retain control over how their datasets are accessed and used. Robust auditing mechanisms ensure transparency and accountability, while an integrated analytics engine allows users to derive insights without always downloading raw datasets. The platform is also capable of onboarding large volumes of data and supports fine-grained access control for different user groups. By adhering to OGC standards, GDI ensures interoperability and fosters collaboration by allowing value-added services like data fusion, visualization, and application development. In doing so, it functions not just as a data exchange platform but as an ecosystem that drives trust, innovation, and responsible use of geospatial data.

To achieve these goals, CDPG developed a new GIS server based on the latest OGC REST APIs with the Intelligent Universal Data Exchange (IUDX) platform at its core – a technology stack developed in-house…(More)”.
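The GDI item describes a platform built on OGC APIs. As a hedged illustration of what that means for an application developer, here is a minimal Python sketch that builds a request URL following the path pattern of OGC API - Features Part 1: Core (`/collections/{collectionId}/items`) with its standard `bbox` and `limit` query parameters. The base URL and the `roads` collection are hypothetical placeholders, since the article does not document GDI's actual endpoints, and the sketch does not model GDI's consent-based access control or any authentication it may require.

```python
from typing import Optional, Tuple
from urllib.parse import quote, urlencode

# Hypothetical base URL: the article does not publish GDI's API endpoints.
BASE_URL = "https://example.org/ogc"


def items_url(base_url: str, collection_id: str,
              bbox: Optional[Tuple[float, float, float, float]] = None,
              limit: Optional[int] = None) -> str:
    """Build an OGC API - Features 'items' request URL for one collection.

    The path pattern and the bbox/limit parameters follow OGC API - Features
    Part 1: Core. bbox is (min lon, min lat, max lon, max lat) in WGS 84.
    """
    params = {}
    if bbox is not None:
        params["bbox"] = ",".join(str(v) for v in bbox)
    if limit is not None:
        params["limit"] = str(limit)
    # Percent-encode the collection id so it is safe as a path segment.
    url = f"{base_url.rstrip('/')}/collections/{quote(collection_id, safe='')}/items"
    # Keep commas readable in bbox; servers accept them unescaped.
    return f"{url}?{urlencode(params, safe=',')}" if params else url


if __name__ == "__main__":
    # Hypothetical collection id, bounding roughly central Bengaluru.
    print(items_url(BASE_URL, "roads", bbox=(77.5, 12.9, 77.7, 13.1), limit=10))
```

Run as a script, this prints `https://example.org/ogc/collections/roads/items?bbox=77.5,12.9,77.7,13.1&limit=10`. Keeping URL construction separate from any HTTP call makes it easy to swap in whatever client and credentials a real deployment requires.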

Paper by ESIR (European Union): “…proposes a new approach to policymaking in the EU, introducing the concept of “policy acupuncture” or “pressure points” to address the complex and interconnected challenges facing Europe. It argues that by identifying and acting on these high-leverage points, the EU can unlock systemic change and achieve greater impact, and it presents ten policy pressure point interventions to enable Europe’s twin green and digital transitions, increase competitiveness, and build resilience…(More)”.

Report by Perry Hewitt, Ginger Zielinskie, Danil Mikhailov, Ph.D.: “Social impact organizations are already using AI to help solve some of the world’s biggest challenges—but not yet at the speed we need. Informed by our global network of partners and five years of program delivery at data.org, the 2025 Accelerate Report is a roadmap for how data and AI can be used innovatively and intentionally to drive social impact. From fair finance to workforce upskilling, 2025 Accelerate highlights the people, practices, and technology accelerating progress where it’s needed most.

Recommendations to Accelerate

- Stay focused on how AI can assist you and your mission. Don’t lose sight of what you’re trying to accomplish with technology. Incorporate data and AI in ways that fuel both operational excellence and impact strategies.

- Harness the power of partnerships. Partnerships help us all go farther by sharing best practices, curating important information, and facilitating sharing of data, talent, and infrastructure.

- Remember who you’re serving. Building trust and leading with localism are essential to ensuring the tools, technology, and systems you’re building are responsible, inclusive, and sustainable…(More)”.

Paper by Luciano Floridi and Anna Ascani: “Debates on AI governance often focus on regulating risks. This article shifts perspective to examine how AI can augment democratic processes, presenting a critical analysis of the Italian Chamber of Deputies’ pioneering AI initiative. We detail the 2024 project that produced three prototype systems, NORMA (legislative analysis), MSE (drafting assistance), and DepuChat (citizen engagement), which embed principles of transparency, human oversight, and privacy-by-design. We introduce the project’s third-way development model, a public-academic partnership that contrasts with full in-house or commercial approaches. Using this initiative as a critical case study, we move beyond merely applying the concept of “augmented democracy”. We argue that this real-world implementation reveals key tensions between the goals of efficiency and the preservation of deliberative friction essential to democratic practice. The analysis highlights risks, including staff deskilling and the digitalisation of inequalities, and situates the Italian approach in an international context. We conclude by offering a theoretical refinement of augmented democracy, informed by the practical lessons of its implementation in a complex legislative environment…(More)”.

Essay by Steven Levy: “For decades, Mark Lemley’s life as an intellectual property lawyer was orderly enough. He’s a professor at Stanford University and has consulted for Amazon, Google, and Meta. “I always enjoyed that the area I practice in has largely been apolitical,” Lemley tells me. What’s more, his democratic values neatly aligned with those of the companies that hired him.

But in January, Lemley made a radical move. “I have struggled with how to respond to Mark Zuckerberg and Facebook’s descent into toxic masculinity and Neo-Nazi madness,” he posted on LinkedIn. “I have fired Meta as a client.”

This is the Silicon Valley of 2025. Zuckerberg, now 41, had turned into a MAGA-friendly mixed martial arts fan who didn’t worry so much about hate speech on his platforms and complained that corporate America wasn’t masculine enough. He stopped fact-checking and started hanging out at Mar-a-Lago. And it wasn’t only Zuckerberg. A whole cohort of billionaires seemed to place their companies’ fortunes over the well-being of society…It should be the best of times for the tech world, supercharged by a boom in artificial intelligence. But a shadow has fallen over Silicon Valley. The community still overwhelmingly leans left. But with few exceptions, its leaders are responding to Donald Trump by either keeping quiet or actively courting the government. One indelible image of this capture is from Trump’s second inauguration, where a decisive quorum of tech’s elite, after dutifully kicking in million-dollar checks, occupied front-row seats.

“Everyone in the business world fears repercussions, because this administration is vindictive,” says venture capitalist David Hornik, one of the few outspoken voices of resistance. So Silicon Valley’s elite are engaged in a dangerous dance with a capricious administration—or as Michael Moritz, one of the Valley’s iconic VCs, put it to me, “They’re doing their best to avoid being held up in a protection racket.”…(More)”.

Essay by Seth Lazar & Mariano-Florentino Cuéllar: “Computational progress has always been Janus-faced for democracy. The spread and networking of computing power bolster the epistemic and communicative practices on which democracies rely. Yet the same tools are among the most sophisticated instruments of coercion and control ever devised.

Every landmark in computing—from the first digital machines to the PC, the internet, and now artificial intelligence (AI)—has provoked anguished reassessment of this tension. In 1984, Langdon Winner described an enduring divide: “computer romantics” who dream that each leap forward will finally realize computing’s unkept promise for democracy; and skeptics, like himself, who think that more powerful technologies always serve the powerful first, best, and perhaps only.

For computing’s first half-century, the romantics seemed to have the better of the argument. Computational and democratic progress proceeded hand in hand. In the 21st century, however, this picture has darkened. Democratic ideals face acute pressure: just over a quarter of humanity now lives in electoral or liberal democracies, down from almost half in 2016. Countries sliding toward autocracy now double those moving toward democracy. And while computational progress has accelerated, the public’s endorsement of the social role of computing in general, and of technology companies in particular, has recently faltered. Through the mid-2010s, big-tech companies ranked among society’s most trusted institutions. Since then, a cross-national backlash against platform power and digital harms has spurred heavy regulation and, even in the United States, a bipartisan conviction that too few companies wield too much power.

Policymakers and the public today face another digital revolution. In the last decade, research progress in AI has taken off. We have already developed extraordinarily powerful and economically valuable analytical and generative AI tools. We are now on the cusp of building autonomous AI systems that can carry out almost any task that competent humans can currently use digital technologies to perform. Our democracies will soon be infused with AI agents.

In this paper, we explore how AI agents might benefit, advance, and complicate the realization of democratic values. We aim to consider both faces of the computational Janus, avoiding both Panglossian optimism and ahistorical catastrophizing…(More)”.

Issue paper by Paola Daniore: “…we outline the urgent need to foster the secondary use of health data as a strategic priority for Switzerland’s health and innovation ecosystem. In the face of rising healthcare costs, increasing international restrictions on data, and concerns over data privacy, a targeted national strategy is needed to bridge health data and privacy policies. When managed responsibly, the secondary use of health data drives research innovation, fosters evidence-based patient care, and informs public-health programs. These societal benefits of the secondary use of health data must be realized while guaranteeing that sensitive health data are protected from misuse…(More)”.

Article by Noora Al-Emadi, et al.: “Artificial intelligence (AI) has demonstrated its potential across a wide range of humanitarian tasks, offering scalable and data-driven solutions to some of the world’s most pressing challenges. For instance, in addressing climate change, AI-powered models are being used for faster and more accurate global weather forecasting, control and design of renewable energy, and monitoring deforestation. Machine learning algorithms analyze satellite imagery and socioeconomic data to predict poverty and promote sustainable development. AI has also transformed disaster response and conflict monitoring, providing innovative solutions for assessing damage and urgent needs and tracking displacement.

This article showcases two projects that exemplify the potential of AI-driven approaches for disaster response and displacement monitoring with case studies from the Arab World. The first case study highlights how social media data was utilized to support disaster response efforts during the devastating 2023 Türkiye-Syria earthquake. By processing vast amounts of unstructured social media content, AI-powered tools enabled real-time situational awareness, facilitating more targeted and efficient humanitarian interventions. The second case study focuses on the use of satellite imagery to monitor internal displacement during the Syrian civil war. Through the development of a region-specific vehicle detection model, we demonstrate how vehicle counts in satellite images can serve as proxies for tracking population movements. This innovative approach addresses critical data gaps in conflict-affected regions, offering a scalable solution for monitoring displacement trends in near real time…(More)”.

Paper by Martin Wählisch and Felix Kufus: “The integration of artificial intelligence (AI)-driven technologies into peace dialogues offers both innovative possibilities and critical challenges for contemporary peacebuilding practice. This article proposes a context-sensitive taxonomy of digital deliberation tools designed to guide the selection and adaptation of AI-assisted platforms in conflict-affected environments. Moving beyond static typologies, the framework accounts for variables such as scale, digital literacy, inclusivity, security, and the depth of AI integration. By situating digital peace dialogues within broader peacebuilding and digital democracy frameworks, the article examines how AI can enhance participation, scale deliberation, and support knowledge synthesis, while also highlighting emerging concerns around algorithmic bias, digital exclusion, and cybersecurity threats. Drawing on case studies involving the United Nations (UN) and civil society actors, the article underscores the limitations of one-size-fits-all approaches and makes the case for hybrid models that balance AI capabilities with human facilitation to foster trust, legitimacy, and context-responsive dialogue. The analysis contributes to peacebuilding scholarship by engaging with the ethics of AI, the politics of digital diplomacy, and the sustainability of technological interventions in peace processes. Ultimately, the study argues for a dynamic, adaptive approach to AI integration, continuously attuned to the ethical, political, and socio-cultural dimensions of peacebuilding practice…(More)”.