Stefaan Verhulst

A classification system by the Dubai Future Foundation (DFF): “… that supports a visual representation of the evolving human-machine collaboration in research, design and publications.

Described in detail in our white paper, our aim is to support transparency in research and provide – at a glance – a standard mechanism that allows readers, researchers and decision-makers to see the extent to which research outputs have been shaped by machines, i.e. a process-based approach. While we recognise that research, design and publications in the future may increasingly rely on autonomous processes, this shift may not be uniformly applied across all contexts, fields, functions and industries during the transitional period, a time frame that may last a couple of years or up to (and perhaps even longer than) 10 years.

Effective from the date of the white paper, every DFF research report will display respective icons for human-machine collaboration, demonstrating our commitment to transparency and establishing a new standard for ethical research practices…(More)”

Article by Eman Alashwali: “…aims to shed light on the often-overlooked difference between two main types of privacy from a control perspective: Privacy between a user and other users, and privacy between a user and institutions. I discuss why this difference is important and what we need to do from here…

Raynes-Goldie coined the term social privacy as opposed to institutional privacy. The former is about controlling access to personal information while the latter is about controlling how institutions such as Facebook and their partners might use this information. Heyman et al. defined the term privacy as subject to refer to controlling a user’s personal information disclosure to other users, and privacy as object to refer to controlling information disclosure to third parties, which represent the user as an object in a data mining process. Brandimarte et al. classified privacy controls according to purpose, where release controls refer to controlling information disclosure between users, while usage controls refer to controlling the use of users’ information, for example, by the service providers or third parties. Bazarova and Masur introduced multiple approaches to privacy, which include the networked approach where information flows in a horizontal direction between users, and the institutional approach where information flows in a vertical direction between a user and institution.

I will use the terms user-to-user privacy and user-to-institution privacy. In user-to-user, the other users could be family, friends, coworkers, and others. In user-to-institution, the institution could be a service provider, organization, government, and so forth.

In recent years, many service providers, for example, social media platforms, have improved the privacy controls provided to users. However, they may have improved one type of privacy controls: the user-to-user. Ignoring the difference between the two types of privacy controls may lead users to have an illusory sense of control over their privacy. For example, users’ perceived control over user-to-user privacy may result in fewer privacy concerns as a result of an incomplete assessment of the associated risks of data sharing, ignoring what Stutzman called “silent listeners.”…(More)”.

Report by Dan Ciuriak: “Data is widely acknowledged as the essential capital asset of the modern economy, yet its value remains largely invisible in corporate balance sheets and understated in national economic accounts. This paper argues that conventional valuation approaches – particularly those based on the costs of datafication – capture only part of the story. While expenditures on datafication enter GDP as investment in intangible assets, they do not reflect the substantial economic rents generated by the effective use of data within firms. These rents arise from data’s distinctive economic characteristics, including non-rivalry and combinatorial scalability, and its role in creating information asymmetries that give data-rich firms a competitive advantage. As a result, data contributes to enterprise value not through direct transactions, but by enhancing profitability, accelerating innovation through machine learning, and enabling the creation of machine knowledge capital.

Drawing on trends in the US economy, the paper estimates that data rents alone account for more than two percent of GDP – representing a layer of value in addition to the investment flows currently captured in GDP. This has profound implications for national accounting methodologies, which underestimate the value contribution of data. It also flags risks for economic policy in small open economies that lack the scale to effectively capture data rents, since investing in datafication at less than critical scale may not recover costs and may result in negative productivity outcomes…(More)”.

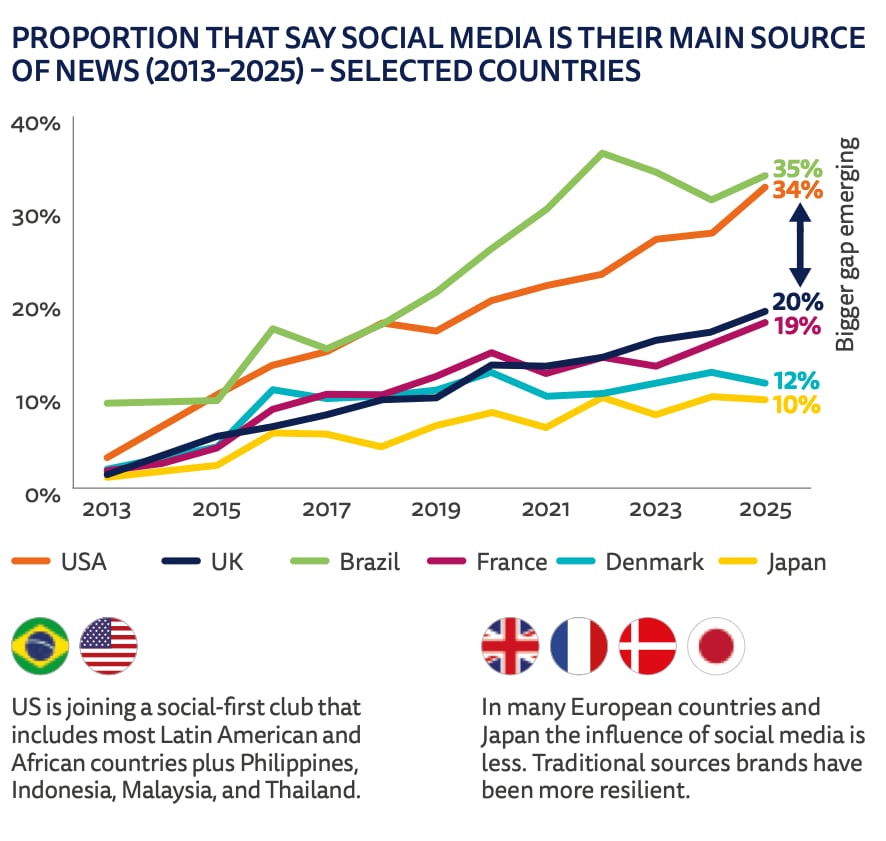

Article by David Elliott: “How do people today stay informed about what’s happening in the world? In most countries, TV, print and websites are becoming less popular, according to a report from the Reuters Institute.

The 2025 Digital News Report, which distills data from six continents and 48 markets, finds that these traditional news media sources are struggling to connect with the public, with declining engagement, low trust and stagnating subscriptions.

So where are people getting their news in 2025? And what might be the impact of these shifts?

Amid political and economic uncertainty, the climate crisis and ongoing conflicts around the world, there is certainly no lack of stories to report on. But audiences are continuing to go to new places to find them – namely, social media, video platforms and online aggregators.

Social media use for news is rising across many countries, although this is more pronounced in the United States, Latin America, Africa and some Southeast Asian countries. In the US, for example, the proportion of people that say social media is their main source of news has risen significantly in the past decade, from around 4% in 2015 to 34% in 2025. The proportion of people accessing news via social media and video networks in the US overtook both TV news and news websites for the first time.

In many European countries, traditional news sources have been more resilient but social media use for news is still rising. In the UK and France, for example, about a fifth of people in each country now use social media as their primary news source compared to well below 10% a decade ago.

Across all of the markets studied by the report, the proportion consuming video continues to grow. And dependence on social media and video networks for news is highest with younger groups – 44% of 18- to 24-year-olds and 38% of 25- to 34-year-olds say these are their main sources of news…(More)”.

Book Review by Gordon LaForge of “The Technological Republic: Hard Power, Soft Belief, and the Future of the West” by Alexander C. Karp and Nicholas W. Zamiska: “…Karp laments that the government has stepped away from technology development, “a remarkable and near-total placement of faith in the market.” In his view, Silicon Valley, which owes its existence to federal investment and worked hand-in-glove with the state to produce the breakthroughs of the post-Sputnik era, has “lost its way.” Instead, founders who claim to want to change the world have created food-delivery apps, photo-sharing platforms, and other trivial consumer products.

Less resonant is Karp’s diagnosis of the source of the problem. In his view, America’s tech leaders have become soft and timid. They fear doing anything that might invite controversy or disapproval, like taking on a military contract or supporting a national mission. They are of a generation that has abandoned “belief or conviction in broader political projects,” he writes, trained to simply mimic what has come before and conform to prevailing sentiment.

This all has its roots, Karp argues, in a “systematic attack and attempt to dismantle any conception of American or Western identity during the 1960s and 1970s.”…

Karp’s claims feel divorced from reality. Debates about justice and national identity run riot in America today. A glance at Elon Musk’s X feed or Meta’s content moderation policies dispels the idea that controversy avoidance is the tech industry’s North Star. Internal contradictions in Karp’s argument abound. For instance, in one part of the book he criticizes tech leaders for sheep-like conformity, while in another he lionizes the “unwillingness to conform” as the quintessence of Silicon Valley. It doesn’t help that Karp makes his case not so much with evidence but with repetition of his claims and biographical snippets of historical figures.

Karp’s preoccupation with what he calls “soft belief” misses the deeper structural reality. Innovation is not merely a function of the mindset of individual founders; it depends on an ecosystem of public and private institutions—tax policy, regulations, the financial system, education, labor markets, and so on. In the United States, the public aspects of that ecosystem have weakened over time, while the private sector and its attendant interests have flourished….Karp’s treatise seems to spring from a belief that he expressed in a February earnings call: “Whatever is good for America will be good for Americans and very good for Palantir.” This conflation of the gains of private companies with the good of the country explains much of what’s gone wrong in the United States today—whether in technological innovation or elsewhere…(More)”.

Report comparing EU, US and Chinese approaches by the European Commission: “This policy brief focusses on technology monitoring and assessment (TMA). TMA is important for R&I policy orientation, and has significant socioeconomic impacts, especially in terms of emerging technologies. After outlining the advantages and features of TMA systems, this brief compares the TMA systems in China, the US and the EU, and outlines the structural and methodological challenges faced by the EU TMA approach. This brief concludes by providing recommendations to EU policymakers to transform the EU TMA system into a distinct advantage in the competition for global innovation leadership…(More)”.

Paper by Ioannis Lianos: “The EU legal framework for data access and portability has undergone significant evolution, particularly in the realm of data, with recent initiatives like the European Health Data Space (EHDS) and competition law enforcement expanding data-sharing obligations across various economic actors. This evolution reflects a shift from an initial emphasis on individuals’ fundamental rights to access and port their health data – rooted in privacy protection, personal data rights, and digital sovereignty – towards a more utilitarian perspective. This newer approach extends data-sharing obligations to cover co-generated data involving end-users, business users, and complementors within digital health ecosystems, promoting a concept of data co-use or co-ownership rather than private ownership. Furthermore, the regulatory framework has proactively established ‘data commons’ to foster cumulative innovation and broader industry transformation. The increasing prominence of a fairness rhetoric in EU regulatory and competition law underscores a transformational intent, aiming not only to acknowledge stakeholders’ contributions to data generation but also to ensure equal economic opportunities within the digital health space and facilitate the EU’s digital transition. This study adopts a law and political economy perspective to examine the competition-related bottleneck issues specific to health data, considering the economic structure of its generation, capture, and exploitation. It then analyses the distributive implications of current regulations (including the DMA, Data Act, EHDS, Digital Governance Act, and Competition Law) by exploring relationships between key economic players: digital platforms and end users, platforms and their ecosystem complementors, and external third-party businesses interacting with the digital health ecosystem…(More)”.

Conference Proceedings edited by Josef Drexl, Moritz Hennemann, Patricia Boshe, and Klaus Wiedemann: “The increasing relevance of data is now recognized all over the world. The large number of regulatory acts and proposals in the field of data law serves as a testament to the significance of data processing for the economies of the world. The European Union’s Data Strategy, the African Union’s Data Policy Framework and the Australian Data Strategy only serve as examples within a plethora of regulatory actions. Yet, the purposeful and sensible use of data does not only play a role in economic terms, e.g. regarding the welfare or competitiveness of economies. The implications for society and the common good are at least equally relevant. For instance, data processing is an integral part of modern research methodology and can thus help to address the problems the world is facing today, such as climate change.

The conference was the third and final event of the Global Data Law Conference Series. Legal scholars from all over the world met, presented and exchanged their experiences on different data-related regulatory approaches. Various instruments and approaches to the regulation of data – personal or non-personal – were discussed, without losing sight of the global effects going hand-in-hand with different kinds of regulation.

In compiling the conference proceedings, this book does not only aim at providing a critical and analytical assessment of the status quo of data law in different countries today, it also aims at providing a forward-looking perspective on the pressing issues of our time, such as: How to promote sensible data sharing and purposeful data governance? Under which circumstances, if ever, do data localisation requirements make sense? How – and by whom – should international regulation be put in place? The proceedings engage in a discussion on future-oriented ideas and actions, thereby promoting a constructive and sensible approach to data law around the world…(More)”.

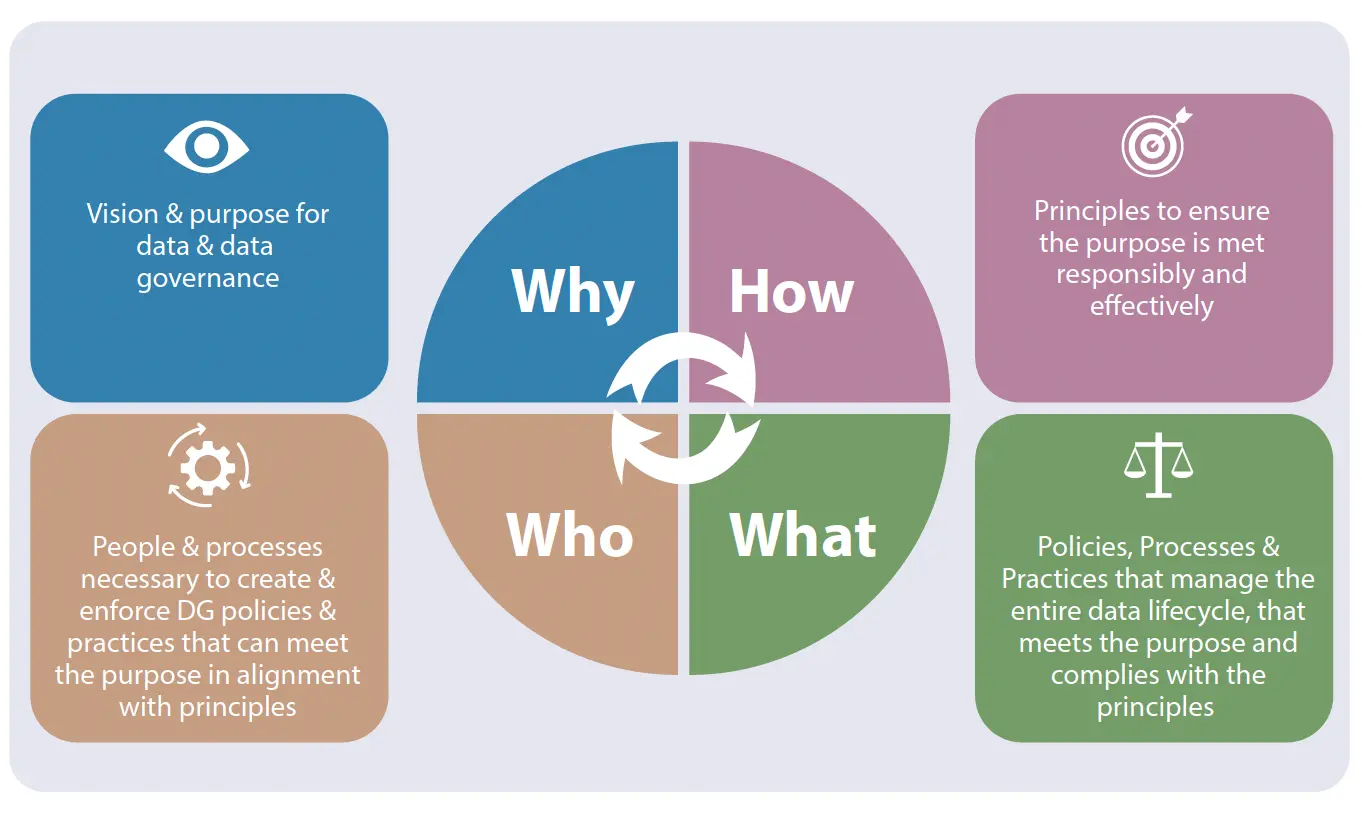

Toolkit by the Broadband Commission Working Group on Data Governance: “…the Toolkit serves as a practical, capacity-building resource for policymakers, regulators, and governments. It offers actionable guidance on key data governance priorities – including legal frameworks, institutional roles, cross-border data flows, digital self-determination, and data for AI.

As a key capacity building resource, the Toolkit aims to empower policymakers, regulators and data practitioners to navigate the complexities of data governance in the digital era. Plans are currently underway to translate the Toolkit into French, Spanish, Chinese, and Arabic to ensure broader global accessibility and impact. Pilot implementation at country level is also being explored for Q4 2025 to support national-level uptake.

The Data Governance Toolkit

The Data Governance Toolkit: Navigating Data in the Digital Age offers a practical, rights-based guide to help governments, institutions, and stakeholders make data work for all.

The Toolkit is organized around four foundational data governance components—referred to as the 4Ps of Data Governance:

- Why (Purpose): How to define a vision and purpose for data governance in the context of AI, digital transformation, and sustainable development.

- How (Principles): What principles should guide a governance framework to balance innovation, security, and ethical considerations.

- Who (People and Processes): Identifying the stakeholders, institutions, and processes required to build and enforce responsible governance structures.

- What (Practices and Mechanisms): Policies and best practices to manage data across its entire lifecycle while ensuring privacy, interoperability, and regulatory compliance.

The Toolkit also includes:

- A self-assessment framework to help organizations evaluate their current capabilities;

- A glossary of key terms to foster shared understanding;

- A curated list of other toolkits and frameworks for deeper engagement.

Designed to be adaptable across regions and sectors, the Data Governance Toolkit is not a one-size-fits-all manual—but a modular resource to guide smarter, safer, and fairer data use in the digital age…(More)”

Report by Ofcom (UK): “…We outline three potential policy options and models for facilitating greater researcher access, which include:

- Clarify existing legal rules: Relevant authorities could provide additional guidance on what is already legally permitted for researcher access on important issues, such as data donations and research-related scraping.

- Create new duties, enforced by a backstop regulator: Services could be required to put in place systems and processes to operationalise data access. This could include new duties on regulated services to create standard procedures for researcher accreditation. Services would be responsible for providing researchers with data directly or providing the interface through which they can access it and offering appeal and redress mechanisms. A backstop regulator could enforce these duties – either an existing or new body.

- Enable and manage access via independent intermediary: New legal powers could be granted to a trusted third party which would facilitate and manage researchers’ access to data. This intermediary – which could again be an existing or new body – would accredit researchers and provide secure access.

Our report describes three types of intermediary that could be considered – direct access intermediary, notice to service intermediary and repository intermediary models.

- Direct access intermediary. Researchers could request data with an intermediary facilitating secure access. In this model, services could retain responsibility for hosting and providing data while the intermediary maintains the interface by which researchers request access.

- Notice to service intermediary. Researchers could apply for accreditation and request access to specific datasets via the intermediary. This could include data that would not be accessible in direct access models. The intermediary would review and refuse or approve access. Services would then be required to provide access to the approved data.

- Repository intermediary. The intermediary could itself provide direct access to data, by providing an interface for data access and/or hosting the data itself and taking responsibility for data governance. This could also include data that would not be accessible in direct access models…(More)”.
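One way to compare the three intermediary models is to tabulate who does what in each. The Python sketch below is only an illustrative reading of the excerpt above, not something taken from the Ofcom report: the `Model` class, its field names, and the `SERVICE` / `INTERMEDIARY` labels are hypothetical. The hosting entry for the notice to service model, and the inclusion of services as a possible host in the repository model, are inferences rather than statements from the source.

```python
from dataclasses import dataclass

# Hypothetical labels for the two parties named in the excerpt.
SERVICE = "service"
INTERMEDIARY = "intermediary"


@dataclass(frozen=True)
class Model:
    name: str
    data_hosts: tuple           # who may host the data
    data_provider: str          # who gives researchers access to the data
    beyond_direct_access: bool  # may cover data not reachable via direct access


MODELS = (
    # Services keep hosting and providing data; the intermediary runs the request interface.
    Model("direct access", (SERVICE,), SERVICE, False),
    # Intermediary reviews requests; services must then provide the approved data.
    # Hosting by the service is an inference, not stated outright in the excerpt.
    Model("notice to service", (SERVICE,), SERVICE, True),
    # Intermediary provides access itself and may also host the data ("and/or").
    Model("repository", (SERVICE, INTERMEDIARY), INTERMEDIARY, True),
)


def hosted_by(party):
    """Return the names of models in which `party` may host the data."""
    return [m.name for m in MODELS if party in m.data_hosts]


print(hosted_by(INTERMEDIARY))  # ['repository']
```

Read this way, the models differ mainly in how much responsibility moves from the services to the intermediary, with the repository model moving the most.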