Stefaan Verhulst

Nathan Yau at FlowingData: “Data is a representation of real life. It’s an abstraction, and it’s impossible to encapsulate everything in a spreadsheet, which leads to uncertainty in the numbers.

How well does a sample represent a full population? How likely is it that a dataset represents the truth? How much do you trust the numbers?

Statistics is a game where you figure out these uncertainties and make estimated judgements based on your calculations. But standard errors, confidence intervals, and likelihoods often lose their visual space in data graphics, which leads to judgements based on simplified summaries expressed as means, medians, or extremes.

That’s no good. You miss out on the interesting stuff. The important stuff. So here are some visualization options for the uncertainties in your data, each with its pros, cons, and examples….(More)”.

Michael Byrne at Motherboard: “… in a larger sense it’s worth wondering to what degree the larger news feed is being diluted by news stories that are not “content dense.” That is, what’s the real ratio between signal and noise, objectively speaking? To start, we’d need a reasonably objective metric of content density and a reasonably objective mechanism for evaluating news stories in terms of that metric.

In a recent paper published in the Journal of Artificial Intelligence Research, computer scientists Ani Nenkova and Yinfei Yang, of Google and the University of Pennsylvania, respectively, describe a new machine learning approach to classifying written journalism according to a formalized idea of “content density.” With an average accuracy of around 80 percent, their system was able to accurately classify news stories across a wide range of domains, spanning from international relations and business to sports and science journalism, when evaluated against a ground truth dataset of already correctly classified news articles.

At a high level this works like most any other machine learning system. Start with a big batch of data—news articles, in this case—and then give each item an annotation saying whether or not that item falls within a particular category. In particular, the study focused on article leads, the first paragraph or two in a story traditionally intended to summarize its contents and engage the reader. Articles were drawn from an existing New York Times linguistic dataset consisting of original articles combined with metadata and short informative summaries written by researchers….(More)”.

Automation and artificial intelligence technologies are transforming manufacturing, corporate work and the retail business, providing new opportunities for companies to explore and posing major threats to those that don’t adapt to the times. Equally daunting challenges confront colleges and universities, but they’ve been slower to acknowledge them.

At present, colleges and universities are most worried about competition from schools or training systems using online learning technology. But that is just one aspect of the technological changes already under way. For example, some companies are moving toward requiring workers to have specific skills training and certifications – as opposed to college degrees.

As a professor who researches artificial intelligence and offers distance learning courses, I can say that online education is a disruptive challenge for which colleges are ill-prepared. Lack of student demand is already closing 800 out of roughly 10,000 engineering colleges in India. And online learning has put as many as half the colleges and universities in the U.S. at risk of shutting down in the next couple decades as remote students get comparable educations over the internet – without living on campus or taking classes in person. Unless universities move quickly to transform themselves into educational institutions for a technology-assisted future, they risk becoming obsolete….(More)”

“From-Congress is an attempt to collect letters sent by representatives to their constituents. These letters often contain statements by the rep about positions that might otherwise be difficult to discover.

This project exists to increase the amount of transparency and accountability of representatives in their districts….

If you would like to send a letter to your congress person, I would heavily recommend resistbot. If you receive a reply, please consider uploading the reply here.

If in the past year you’ve received a correspondence from your congress person, I would encourage you to upload them as well.

In the future, we would like to transcribe these letters (hopefully automatically) and put the text in each article. Along with providing accessibility for visually-impaired readers, this will also allow searching of politicians’ view points….(More)”

See also: Project Legisletters

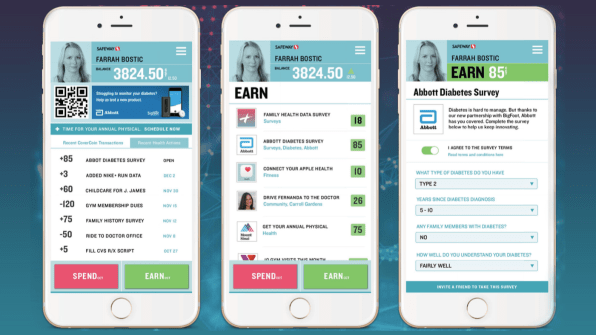

Ben Schiller at FastCompany: “Americans could do with new ways to save on healthcare….

CoverUS, a startup, has one idea: monetizing our health-related data. Through a new blockchain-based data marketplace, it hopes to generate revenue that could effectively make insurance cheaper and perhaps even encourage us to become healthier, thus cutting the cost of the system overall.

It works like this: When you sign up, you download a digital wallet to your phone. Then you populate that wallet with data from an electronic health record (EHR), for which, starting in January 2018, system operators are legally obliged to offer an open API. At the same time, you can also allow wearables and other health trackers to automatically add data to the platform, and answer questions about your health and consumption habits.

Why bother? To create a richer picture of your health than is currently held by the EHR systems, health providers, and data brokerages that buy and sell data from doctors, clinics, pharmacies, and other sources. By collecting all our data in one place, CoverUS wants to give us more autonomy over who uses our personal information and who makes money from it….

(Open Access) Book by Ralph Schroeder: “The internet has fundamentally transformed society in the past 25 years, yet existing theories of mass or interpersonal communication do not work well in understanding a digital world. Nor has this understanding been helped by disciplinary specialization and a continual focus on the latest innovations. Ralph Schroeder takes a longer-term view, synthesizing perspectives and findings from various social science disciplines in four countries: the United States, Sweden, India and China. His comparison highlights, among other observations, that smartphones are in many respects more important than PC-based internet uses.

Social Theory after the Internet focuses on everyday uses and effects of the internet, including information seeking and big data, and explains how the internet has gone beyond traditional media in, for example, enabling Donald Trump and Narendra Modi to come to power. Schroeder puts forward a sophisticated theory of the role internet plays, and how both technological and social forces shape its significance. He provides a sweeping and penetrating study, theoretically ambitious and at the same time always empirically grounded….(More)”.

Elisabeth A. Mason in The New York Times: “When it comes to artificial intelligence and jobs, the prognostications are grim. The conventional wisdom is that A.I. might soon put millions of people out of work — that it stands poised to do to clerical and white collar workers over the next two decades what mechanization did to factory workers over the past two. And that is to say nothing of the truckers and taxi drivers who will find themselves unemployed or underemployed as self-driving cars take over our roads.

But it’s time we start thinking about A.I.’s potential benefits for society as well as its drawbacks. The big-data and A.I. revolutions could also help fight poverty and promote economic stability.

Poverty, of course, is a multifaceted phenomenon. But the condition of poverty often entails one or more of these realities: a lack of income (joblessness); a lack of preparedness (education); and a dependency on government services (welfare). A.I. can address all three.

First, even as A.I. threatens to put people out of work, it can simultaneously be used to match them to good middle-class jobs that are going unfilled. Today there are millions of such jobs in the United States. This is precisely the kind of matching problem at which A.I. excels. Likewise, A.I. can predict where the job openings of tomorrow will lie, and which skills and training will be needed for them….

Second, we can bring what is known as differentiated education — based on the idea that students master skills in different ways and at different speeds — to every student in the country. A 2013 study by the National Institutes of Health found that nearly 40 percent of medical students held a strong preference for one mode of learning: Some were listeners; others were visual learners; still others learned best by doing….

Third, a concerted effort to drag education and job training and matching into the 21st century ought to remove the reliance of a substantial portion of the population on government programs designed to assist struggling Americans. With 21st-century technology, we could plausibly reduce the use of government assistance services to levels where they serve the function for which they were originally intended…(More)”.

Jennifer Bradley at Next City: “Many city governments in the U.S. and elsewhere are torn when it comes to innovation. On the one hand, constituents live in a world that increasingly demands flexibility, interaction, and iteration, and governments want to be seen as responsive to new ideas and services. On the other, the “move fast and break things” ethos of many technology companies seems wildly inappropriate when public health and safety are at stake. Cities are bound by regulatory processes developed decades ago and designed for predictability, stability, and protection—not for speed, ease, and invention. In addition, regulations have accumulated over time to respond to the urgent concerns of years or even decades ago, which might be irrelevant today.

The real work for city leaders today is to create not just new rules, but new ways of writing and adjusting regulations that better fit the dynamism and pace of change of cities themselves. Regulations are a big part of the city’s operating system, and, like an operating system, they should be data-informed, continually tweaked, and regularly refreshed to respond to bugs and new use cases.

We have recently launched a site with recommendations and case studies in four areas where technology is both pushing up against the limits of the current regulatory system and offering new tools to make enforcing and following rules easier: food safety, permitting, procurement, and transportation….(More) (Innovation Regulation site)”.

Chun-Yin San at Nesta: “In 2011, Lord Martin Rees, the British Astronomer-Royal, launched a scathing critique on the UK Government’s long-term thinking capabilities. “It is depressing,” he argued, “that long-term global issues of energy, food, health and climate get trumped on the political agenda by the short term”. We are facing more and more complex, intergenerational issues like climate change, or the impact of AI, which require long-term, joined-up thinking to solve.

But even when governments do invest in foresight and strategic planning, there is a bigger question around whose vision of the future it is. These strategic plans tend to be written in opaque and complex ways by ‘experts’, with little room for scrutiny, let alone input, by members of the public….

There have been some great examples of more democratic futures exercises in the past. Key amongst them was the Hawai’i 2000 project in the 1970s, which brought together Hawaiians from different walks of life to debate the sort of place that Hawai’i should become over the next 30 years. It generated some incredibly inspiring and creative collective visions of the future of the tropical American state, and also helped embed long-term strategic thinking into policy-making instruments – at least for a time.

A more recent example took place over 2008 in the Dutch Caribbean nation of Aruba, which engaged some 50,000 people from all parts of Aruban society. The Nos Aruba 2025 project allowed the island nation to develop a more sustainable national strategic plan than ever before – one based on what Aruba and its people had to offer, responding to the potential and needs of a diverse community. Like Hawai’i 2000, what followed Nos Aruba 2025 was a fundamental change in the nature of participation in the country’s governance, with community engagement becoming a regular feature in the Aruban government’s work….

These examples demonstrate how futures work is at its best when it is participatory. …However, aside from some of the projects above, examples of genuine engagement in futures remain few and far between. Even when activities examining a community’s future take place in the public domain – such as the Museum of London’s ongoing City Now City Future series – the conversation can often seem one-sided. Expert-generated futures are presented to people with little room for them to challenge these ideas or contribute their own visions in a meaningful way. This has led some, like academics Denis Loveridge and Ozcan Saritas, to remark that futures and foresight can suffer from a serious case of ‘democratic deficit’.

There are three main reasons for this:

- Meaningful participation can be difficult to do, as it is expensive and time-consuming, especially when it comes to large-scale exercises meant to facilitate deep and meaningful dialogue about a community’s future.

- Participation is not always valued in the way it should be, and can be met with false sincerity from government sponsors. This is despite the wide-reaching social and economic benefits to building collective future visions, which we are currently exploring further in our work.

- Practitioners may not necessarily have the know-how or tools to do citizen engagement effectively. While there are plenty of guides to public engagement and a number of different futures toolkits, there are few openly available resources for participatory futures activities….(More)”

Thomas Kingsley at the National Neighborhood Indicators Partnership (NNIP): “Thanks to IndyVitals – an award-winning online data tool – residents and organizations can actively contribute to continued planning to achieve Marion County’s vision for 2020. The NNIP Partner, the Polis Center at Indiana University-Purdue University at Indianapolis, leveraged their years of experience in providing actionable data through their Social Assets and Vulnerabilities Indicators (SAVI) to create this new resource for the county.

IndyVitals supports Plan 2020: the initiative of the City of Indianapolis, the Greater Indianapolis Progress Committee and others to revitalize the city’s plans and planning processes in recognition of its 2020 bicentennial. These groups decided to give neighborhood data a considerably more pivotal role in their approach than it has typically played in local planning efforts in the past.

SAVI was one of the first comprehensive online and interactive neighborhood indicators systems ever developed for any city. But IndyVitals incorporates three notable changes to past practice. First is a new configuration of neighborhood geographies for the city. Indianapolis has nearly 500 self-defined neighborhood associations registered with the City, with many overlapping boundaries. Neighborhoods defined at that level would be too small and fragmented to motivate coherent action. Accordingly, the City defined a set of 99 larger “neighborhood areas” that all actors who influence neighborhood change – community groups, public agencies, nonprofit service providers, private businesses – could understand, build their own plans around, and use as a basis for coordinating with each other to achieve progress. The City intends to use the new neighborhood areas as building blocks for revising the boundaries of its police districts, public works areas and other internal administrative units.

The second change pertains to the indicators selected and the tools developed to make use of them. A set of over 50 indicators for IndyVitals was selected to be regularly updated and monitored in the future (drawn from the literally hundreds of possible indicators that could be created with SAVI data). SAVI staff suggested a list of candidates which was then vetted and modified by an advisory committee made up of representatives of community and other stakeholder organizations. The 50 include measures that help explain the forces causing neighborhood change as well as those considered to be markers of goal achievement. They include well known indicators on population characteristics, but also a number of metrics that have powerful implications: for example, percent of families with access to a quality preschool or percent of residents employed in their own neighborhood; percent of students graduating from high school on time, neighborhood “walkability” ratings, crimes committed by minors per 1,000 population, demolitions ordered due to hazardous building conditions….

The third, and probably most important change in practice, is the type of data-informed planning and implementation process envisaged….(More)”.