Stefaan Verhulst

Article by Sam Cole: “Almost two dozen repositories of research and public health data supported by the National Institutes of Health are marked for “review” under the Trump administration’s direction, and researchers and archivists say the data is at risk of being lost forever if the repositories go down.

“The problem with archiving this data is that we can’t,” Lisa Chinn, Head of Research Data Services at the University of Chicago, told 404 Media. Unlike other government datasets or web pages, downloading or otherwise archiving NIH data often requires a Data Use Agreement between a research institution and the agency, and those agreements are carefully administered through a disclosure risk review process.

A message appeared at the top of multiple NIH websites last week that says: “This repository is under review for potential modification in compliance with Administration directives.”

Repositories with the message include archives of cancer imagery, Alzheimer’s disease research, sleep studies, HIV databases, and COVID-19 vaccination and mortality data…

“So far, it seems like what is happening is less that these data sets are actively being deleted or clawed back and more that they are laying off the workers whose job is to maintain them, update them and maintain the infrastructure that supports them,” a librarian affiliated with the Data Rescue Project told 404 Media. “In time, this will have the same effect, but it’s really hard to predict. People don’t usually appreciate, much less our current administration, how much labor goes into maintaining a large research dataset.”…(More)”.

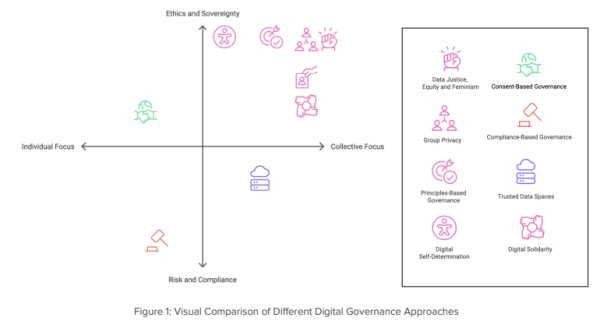

Paper by Sara Marcucci and Stefaan Verhulst: “In today’s increasingly complex digital landscape, traditional data governance models, such as consent-based, ownership-based, and sovereignty-based approaches, are proving insufficient to address the evolving ethical, social, and political dimensions of data use. These frameworks, often grounded in static and individualistic notions of control, struggle to keep pace with the fluidity and relational nature of contemporary data ecosystems. This paper proposes Digital Self-Determination (DSD) as a complementary and necessary evolution of existing models, offering a more participatory, adaptive, and ethically grounded approach to data governance. Centering ongoing agency, collective participation, and contextual responsiveness, DSD builds on foundational principles of consent and control while addressing their limitations. Drawing on comparisons with a range of governance models (including risk-based, compliance-oriented, principles-driven, and justice-centered frameworks), this paper highlights DSD’s unique contribution: its capacity to enable individuals and communities to actively shape how data about them is used, shared, and governed over time. In doing so, it reimagines data governance as a living, co-constructed practice grounded in trust, accountability, and care. Through this lens, the paper offers a framework for comparing different governance approaches and embedding DSD into existing paradigms, inviting policymakers and practitioners to consider how more inclusive and responsive forms of digital governance might be realized…(More)”.

Article by Elena Murray, Moiz Shaikh and Stefaan G. Verhulst: “Young people seeking essential services — like mental health care, education, or public benefits — are often asked to share personal data in order to access the service, without having any say in how it is being collected, shared or used, or why. If young people distrust how their data is being used, they may avoid services or withhold important information, fearing misuse. This can unintentionally widen the very gaps these services aim to close.

To build trust, service providers and policymakers must involve young people in co-designing how their data is collected and used. Understanding their concerns, values, and expectations is key to developing data practices that reflect their needs. Empowering young people to develop the conditions for data re-use and design solutions to their concerns enables digital self-determination.

The question is then: what does meaningful engagement actually look like — and how can we get it right?

To answer that question, we engaged four partners in four different countries and conducted:

- 1,000 hours of youth participation, involving more than 70 young people.

- 12 youth engagement events.

- Six expert talks and mentorship sessions.

These activities were undertaken as part of the NextGenData project, a year-long global collaboration, supported by the Botnar Foundation, that piloted a methodology for youth engagement on responsible data reuse in Moldova, Tanzania, India and Kyrgyzstan.

A key outcome of our work was a youth engagement methodology, which we recently launched. Below, we reflect on what we learnt and how we can apply these learnings to ensure that the future of data-driven services both serves the needs of, and is guided by, young people.

Lessons Learnt:…(More)”

Report by Beatriz Botero Arcila: “…explores how liability law can help solve the “problem of many hands” in AI: that is, determining who is responsible for harm caused within a value chain in which a variety of different companies and actors might be contributing to the development of any given AI system. This is aggravated by the fact that AI systems are both opaque and technically complex, making their behavior hard to predict.

Why AI Liability Matters

To find meaningful solutions to this problem, different kinds of experts have to come together. This resource is designed for a wide audience, but we indicate how specific audiences can best make use of different sections, overviews, and case studies.

Specifically, the report:

- Proposes a 3-step analysis to consider how liability should be allocated along the value chain: 1) the choice of liability regime, 2) how liability should be shared among actors along the value chain, and 3) whether and how information asymmetries will be addressed.

- Argues that where ex-ante AI regulation is already in place, policymakers should consider how liability rules will interact with that regulation.

- Proposes a baseline liability regime where actors along the AI value chain share responsibility if fault can be demonstrated, paired with measures to alleviate or shift the burden of proof and to enable better access to evidence — which would incentivize companies to act with sufficient care and address information asymmetries between claimants and companies.

- Argues that in some cases, courts and regulators should extend a stricter regime, such as product liability or strict liability.

- Analyzes liability rules in the EU based on this framework…(More)”.

Article by Ellen O’Regan: “Europe’s most famous technology law, the GDPR, is next on the hit list as the European Union pushes ahead with its regulatory killing spree to slash laws it reckons are weighing down its businesses.

The European Commission plans to present a proposal to cut back the General Data Protection Regulation, or GDPR for short, in the next couple of weeks. Slashing regulation is a key focus for Commission President Ursula von der Leyen, as part of an attempt to make businesses in Europe more competitive with rivals in the United States, China and elsewhere.

The EU’s executive arm has already unveiled packages to simplify rules around sustainability reporting and accessing EU investment. The aim is for companies to waste less time and money on complying with complex legal and regulatory requirements imposed by EU laws…Seven years later, Brussels is taking out the scissors to give its (in)famous privacy law a trim.

There are “a lot of good things about GDPR, [and] privacy is completely necessary. But we don’t need to regulate in a stupid way. We need to make it easy for businesses and for companies to comply,” Danish Digital Minister Caroline Stage Olsen told reporters last week. Denmark will chair the work in the EU Council in the second half of 2025 as part of its rotating presidency.

The criticism of the GDPR echoes the views of former Italian Prime Minister Mario Draghi, who released a landmark economic report last September warning that Europe’s complex laws were preventing its economy from catching up with the United States and China. “The EU’s regulatory stance towards tech companies hampers innovation,” Draghi wrote, singling out the Artificial Intelligence Act and the GDPR…(More)”.

Chapter by Nathalie Colasanti, Chiara Fantauzzi, Rocco Frondizi & Noemi Rossi: “Governance paradigms have undergone a deep transformation during the COVID-19 pandemic, necessitating agile, inclusive, and responsive mechanisms to address evolving challenges. Participatory governance has emerged as a guiding principle, emphasizing inclusive decision-making processes and collaboration among diverse stakeholders. In the outbreak context, digital technologies have played a crucial role in enabling participatory governance to flourish, democratizing participation, and facilitating the rapid dissemination of accurate information. These technologies have also empowered grassroots initiatives, such as civic hacking, to address societal challenges and mobilize communities for collective action. This study delves into the realm of bottom-up participatory initiatives at the local level, focusing on two emblematic cases of civic hacking experiences launched during the pandemic, the first in Wuhan, China, and the second in Italy. Through a comparative lens, drawing upon secondary sources, the aim is to analyze the dynamics, efficacy, and implications of these initiatives, shedding light on the evolving landscape of participatory governance in times of crisis. Findings underline the transformative potential of civic hacking and participatory governance in crisis response, highlighting the importance of collaboration, transparency, and inclusivity…(More)”.

Blog by Frank Hamerlinck: “When it comes to data sharing, there’s often a gap between ambition and reality. Many organizations recognize the potential of data collaboration, yet when it comes down to sharing their own data, hesitation kicks in. The concern? Costs, risks, and unclear returns. At the same time, there’s strong enthusiasm for accessing data.

This is the paradox we need to break. Because if data egoism rules, real innovation is out of reach, making the need for data altruism more urgent than ever.

…More and more leaders recognize that unlocking data is essential to staying competitive on a global scale, and they understand that we must do so while upholding our European values. However, the real challenge lies in translating this growing willingness into concrete action. Many acknowledge its importance in principle, but few are ready to take the first step. And that’s a challenge we need to address – not just as organizations but as a society…

To break down barriers and accelerate data-driven innovation, we’re launching the FTI Data Catalog – a step toward making data sharing easier, more transparent, and more impactful.

The catalog provides a structured, accessible overview of available datasets, from location data and financial data to well-being data. It allows organizations to discover, understand, and responsibly leverage data with ease. Whether you’re looking for insights to fuel innovation, enhance decision-making, drive new partnerships or unlock new value from your own data, the catalog is built to support open and secure data exchange.

By making data more accessible, we’re laying the foundation for a culture of collaboration. The road to data altruism is long, but it’s one worth walking. The future belongs to those who dare to share!…(More)”

Paper by Joshua P. White: “While tracking-data analytics can be a goldmine for institutions and companies, the inherent privacy concerns also form a legal, ethical and social minefield. We present a study that seeks to understand the extent and circumstances under which tracking-data analytics is undertaken with social licence — that is, with broad community acceptance beyond formal compliance with legal requirements. Taking a university campus environment as a case, we enquire about the social licence for Wi-Fi-based tracking-data analytics. Staff and student participants answered a questionnaire presenting hypothetical scenarios involving Wi-Fi tracking for university research and services. Our results present a Bayesian logistic mixed-effects regression of acceptability judgements as a function of participant ratings on 11 privacy dimensions. Results show widespread acceptance of tracking-data analytics on campus and suggest that trust, individual benefit, data sensitivity, risk of harm and institutional respect for privacy are the most predictive factors determining this acceptance judgement…(More)”.
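For readers unfamiliar with the method named in the abstract, a Bayesian logistic mixed-effects regression of this kind can be written generically as follows. This is a sketch under stated assumptions, not the paper's actual specification. It assumes a binary acceptability judgement y_ij for participant i and scenario j, uses participant i's ratings x_ijk on the 11 privacy dimensions (k = 1, …, 11) as predictors, and includes random intercepts for participants (u_i) and scenarios (v_j). The random-effects structure and the priors shown are illustrative assumptions, and the study may have used different ones.

```latex
% Hypothetical specification: the random intercepts u_i, v_j and the
% normal priors below are assumptions, not taken from the paper.
\begin{aligned}
y_{ij} &\sim \operatorname{Bernoulli}(p_{ij}) \\
\operatorname{logit}(p_{ij}) &= \beta_0 + \sum_{k=1}^{11} \beta_k \, x_{ijk} + u_i + v_j \\
u_i &\sim \mathcal{N}(0, \sigma_u^2), \qquad v_j \sim \mathcal{N}(0, \sigma_v^2) \\
\beta_k &\sim \mathcal{N}(0, \tau^2), \qquad k = 0, \dots, 11
\end{aligned}
```

In this form, exp(β_k) is the multiplicative change in the odds of judging a scenario acceptable for a one-unit increase in the rating on dimension k, holding the other ratings and the random intercepts fixed. A full Bayesian model would also place priors on σ_u and σ_v.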

Book by Diane Coyle: “The ways that statisticians and governments measure the economy were developed in the 1940s, when the urgent economic problems were entirely different from those of today. In The Measure of Progress, Diane Coyle argues that the framework underpinning today’s economic statistics is so outdated that it functions as a distorting lens, or even a set of blinkers. When policymakers rely on such an antiquated conceptual tool, how can they measure, understand, and respond with any precision to what is happening in today’s digital economy? Coyle makes the case for a new framework, one that takes into consideration current economic realities.

Coyle explains why economic statistics matter. They are essential for guiding better economic policies; they involve questions of freedom, justice, life, and death. Governments use statistics that affect people’s lives in ways large and small. The metrics for economic growth were developed when a lack of physical rather than natural capital was the binding constraint on growth, intangible value was less important, and the pressing economic policy challenge was managing demand rather than supply. Today’s challenges are different. Growth in living standards in rich economies has slowed, despite remarkable innovation, particularly in digital technologies. As a result, politics is contentious and democracy strained.

Coyle argues that to understand the current economy, we need different data collected in a different framework of categories and definitions, and she offers some suggestions about what this would entail. Only with a new approach to measurement will we be able to achieve the right kind of growth for the benefit of all…(More)”.

Resource by The Data for Children Collaborative: “… excited to share a consolidation of 5 years of learning in one handy how-to guide. Our ethos is to openly share tools, approaches and frameworks that may benefit others working in the Data for Good space. We have developed this guide specifically to support organisations working on complex challenges that may have data-driven solutions. The How-to-Guide provides advice and examples of how to plan and execute collaboration on a data project effectively…

Data collaboration can provide organisations with high-quality, evidence-based insights that drive policy and practice while bringing together diverse perspectives to solve problems. It also fosters innovation, builds networks for future collaboration, and ensures effective implementation of solutions on the ground…(More)”.