Stefaan Verhulst

Article by William Maloney, Daniel Rogger, and Christian Schuster: “Governments across Latin America and the Caribbean are grappling with deep governance challenges that threaten progress and stability, including the need to improve efficiency, accountability and transparency.

Amid these obstacles, however, the region possesses a powerful, often underutilized asset: the administrative data it collects as a part of its everyday operations.

When harnessed effectively using data analytics, this data has the potential to drive transformative change, unlock new opportunities for growth and help address some of the most pressing issues facing the region. It’s time to tap into this potential and use data to chart a path forward. To help governments make the most of the opportunities that this data presents, the World Bank has embarked on a decade-long project to synthesize the latest knowledge on how to measure and improve government performance. We have found that governments already have a lot of the data they need to dramatically improve public services while conserving scarce resources.

But it’s not enough to collect data. It must also be put to good use to improve decision making, design better public policy and strengthen public sector functioning. We call these tools and practices for repurposing government data government analytics…(More)”.

Blueprint by Hannah Chafetz, Andrew J. Zahuranec, and Stefaan Verhulst: “In today’s rapidly evolving AI landscape, it is critical to broaden access to diverse and high-quality data to ensure that AI applications can serve all communities equitably. Yet, we are on the brink of a potential “data winter,” where valuable data assets that could drive public good are increasingly locked away or inaccessible.

Data commons — collaboratively governed ecosystems that enable responsible sharing of diverse datasets across sectors — offer a promising solution. By pooling data under clear standards and shared governance, data commons can unlock the potential of AI for public benefit while ensuring that its development reflects the diversity of experiences and needs across society.

To accelerate the creation of data commons, the Open Data Policy Lab today releases “A Blueprint to Unlock New Data Commons for AI” — a guide on how to steward data to create data commons that enable public-interest AI use cases…the document is aimed at supporting libraries, universities, research centers, and other data holders (e.g., governments and nonprofits) through four modules:

- Mapping the Demand and Supply: Understanding why AI systems need data, what data can be made available to train, adapt, or augment AI, and what a viable data commons prototype might look like that incorporates stakeholder needs and values;

- Unlocking Participatory Governance: Co-designing key aspects of the data commons with key stakeholders and documenting these aspects within a formal agreement;

- Building the Commons: Establishing the data commons from a practical perspective and ensuring all stakeholders are incentivized to implement it; and

- Assessing and Iterating: Evaluating how the commons is working and iterating as needed.

These modules are further supported by two supplementary taxonomies. “The Taxonomy of Data Types” provides a list of data types that can be valuable for public-interest generative AI use cases. The “Taxonomy of Use Cases” outlines public-interest generative AI applications that can be developed using a data commons approach, along with possible outcomes and stakeholders involved.

A separate set of worksheets can be used to further guide organizations in deploying these tools…(More)”.

Democracy Funders Network: “For too many Americans, the prospect of engaging with lawmakers about the important issues in their lives is either logistically inaccessible, or unsatisfactory in result. Exploring An Innovative Approach to Democratic Governance: A Funder’s Guide to Citizens’ Assemblies, produced by Democracy Funders Network and New America, explores the potential for citizens’ assemblies to transform and strengthen democratic processes in the U.S. The guide offers philanthropists an in-depth look at the potential opportunities and challenges citizens’ assemblies present for building civic power at the local level and fomenting authentic civic engagement within communities.

Citizens’ assemblies belong in the broader field of collaborative governance, an umbrella term for public engagement that shifts governing power and builds trust by bringing together government officials and community members to collaborate on policy outcomes through shared decision-making…(More)”.

Article by Manon Revel and Théophile Pénigaud: “This article unpacks the design choices behind longstanding and newly proposed computational frameworks aimed at finding common ground across collective preferences and examines their potential future impacts, both technically and normatively. It begins by situating AI-assisted preference elicitation within the historical role of opinion polls, emphasizing that preferences are shaped by the decision-making context and are seldom objectively captured. With that caveat in mind, we explore AI-facilitated collective judgment as a discovery tool for fostering reasonable representations of a collective will, sense-making, and agreement-seeking. At the same time, we caution against dangerously misguided uses, such as enabling binding decisions, fostering gradual disempowerment or post-rationalizing political outcomes…(More)”.

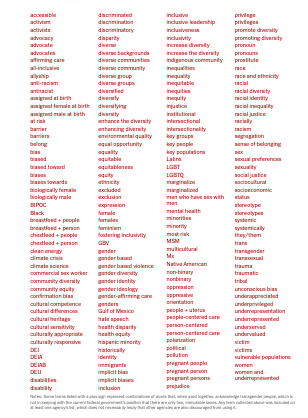

Article by Karen Yourish et al.: “As President Trump seeks to purge the federal government of “woke” initiatives, agencies have flagged hundreds of words to limit or avoid, according to a compilation of government documents.

The above terms appeared in government memos, in official and unofficial agency guidance and in other documents viewed by The New York Times. Some ordered the removal of these words from public-facing websites, or ordered the elimination of other materials (including school curricula) in which they might be included.

In other cases, federal agency managers advised caution in the terms’ usage without instituting an outright ban. Additionally, the presence of some terms was used to automatically flag for review some grant proposals and contracts that could conflict with Mr. Trump’s executive orders.

The list is most likely incomplete. More agency memos may exist than those seen by New York Times reporters, and some directives are vague or suggest what language might be impermissible without flatly stating it.

All presidential administrations change the language used in official communications to reflect their own policies. It is within their prerogative, as are amendments to or the removal of web pages, which The Times has found has already happened thousands of times in this administration…(More)”

Review by Abby Smith Rumsey: “How does a democracy work if its citizens do not have a shared sense of reality? Not very well. A country whose people cannot agree on where they stand now will not agree on where they are going. This is where Americans find themselves in 2025, and they did not arrive at this juncture yesterday. The deep divisions that exist have grown over the decades, dating at least to the end of the Cold War in 1991, and are now metastasizing at an alarming rate. These divisions have many causes, from climate change to COVID-19, unchecked migration to growing wealth inequality, and other factors. People who live with chronic division and uncertainty are vulnerable. It may not take much to get them to sign on to a politics of certainty…

Take the United States. By this fractured logic, Make America Great Again (MAGA) means that America once was great, is no longer, but can be restored to its prelapsarian state, when whites sat firmly at the top of the ethnic hierarchy that constitutes the United States. Jason Stanley, a professor of philosophy and self-identified liberal, is deeply troubled that many liberal democracies across the globe are morphing into illiberal democracies before our very eyes. In “Erasing History: How Fascists Rewrite the Past to Control the Future,” he argues that all authoritarian regimes know the value of a unified, if largely mythologized, view of past, present, and future. He wrote his book to warn us that we in the United States are on the cusp of becoming an authoritarian nation or, in Stanley’s account, fascist. By explaining “the mechanisms by which democracy is attacked, the ways myths and lies are used to justify actions such as wars, and scapegoating of groups, we can defend against these attacks, and even reverse the tide.”…

The fabrication of the past is also the subject of Steve Benen’s book “Ministry of Truth: Democracy, Reality, and the Republicans’ War on the Recent Past.” Benen, a producer on the Rachel Maddow Show, keeps his eye tightly focused on the past decade, still fresh in the minds of readers. His account tracks closely how the Republican Party conducted “a war on the recent past.” He attempts an anatomy of a very unsettling phenomenon: the success of a gaslighting campaign Trump and his supporters perpetrated against the American public and even against fellow Republicans who are not MAGA enough for Trump…(More)”

Coimisiún na Meán: “Article 40 of the Digital Services Act (DSA) makes provision for researchers to access data from Very Large Online Platforms (VLOPs) or Very Large Online Search Engines (VLOSEs) for the purposes of studying systemic risk in the EU and assessing mitigation measures. There are two ways that researchers studying systemic risk in the EU can get access to data under Article 40 of the DSA:

- Non-public data, known as “vetted researcher data access”, under Article 40(4)-(11). This is a process where a researcher, who has been vetted or assessed by a Digital Services Coordinator to have met the criteria as set out in DSA Article 40(8), can request access to non-public data held by a VLOP/VLOSE. The data must be limited in scope and deemed necessary and proportionate to the purpose of the research.

- Public data under Article 40(12). This is a process where a researcher who meets the relevant criteria can apply for data access directly from a VLOP/VLOSE, for example, access to a content library or API of public posts…(More)”.

Article by Lauren Kent: “A vital, US-run monitoring system focused on spotting food crises before they turn into famines has gone dark after the Trump administration slashed foreign aid.

The Famine Early Warning Systems Network (FEWS NET) monitors drought, crop production, food prices and other indicators in order to forecast food insecurity in more than 30 countries…Now, its work to prevent hunger in Sudan, South Sudan, Somalia, Yemen, Ethiopia, Afghanistan and many other nations has been stopped amid the Trump administration’s effort to dismantle the US Agency for International Development (USAID).

“These are the most acutely food insecure countries around the globe,” said Tanya Boudreau, the former manager of the project.

Amid the aid freeze, FEWS NET has no funding to pay staff in Washington or those working on the ground. The website is down. And its treasure trove of data that underpinned global analysis on food security – used by researchers around the world – has been pulled offline.

FEWS NET is considered the gold standard in the sector, and it publishes more frequent updates than other global monitoring efforts. Those frequent reports and projections are key, experts say, because food crises evolve over time, meaning early interventions save lives and save money…The team at the University of Colorado Boulder has built a model to forecast water demand in Kenya, which feeds some data into the FEWS NET project but also relies on FEWS NET data provided by other research teams.

The data is layered and complex. And scientists say pulling the data hosted by the US disrupts other research and famine-prevention work conducted by universities and governments across the globe.

“It compromises our models, and our ability to be able to provide accurate forecasts of groundwater use,” Denis Muthike, a Kenyan scientist and assistant research professor at UC Boulder, told CNN, adding: “You cannot talk about food security without water security as well.”

“Imagine that that data is available to regions like Africa and has been utilized for years and years – decades – to help inform decisions that mitigate catastrophic impacts from weather and climate events, and you’re taking that away from the region,” Muthike said. He cautioned that it would take many years to build another monitoring service that could reach the same level…(More)”.

Report by Project Evident: “…looks at how philanthropic funders are approaching requests to fund the use of AI… there was common recognition of AI’s importance and the tension between the need to learn more and to act quickly to meet the pace of innovation, adoption, and use of AI tools.

This research builds on the work of a February 2024 Project Evident and Stanford Institute for Human-Centered Artificial Intelligence working paper, Inspiring Action: Identifying the Social Sector AI Opportunity Gap. That paper reported that more practitioners than funders (by over a third) claimed their organization utilized AI.

“From our earlier research, as well as in conversations with funders and nonprofits, it’s clear there’s a mismatch in the understanding and desire for AI tools and the funding of AI tools,” said Sarah Di Troia, Managing Director of Project Evident’s OutcomesAI practice and author of the report. “Grantmakers have an opportunity to quickly upskill their understanding – to help nonprofits improve their efficiency and impact, of course, but especially to shape the role of AI in civil society.”

The report offers a number of recommendations to the philanthropic sector. For example, funders and practitioners should ensure that community voice is included in the implementation of new AI initiatives to build trust and help reduce bias. Grantmakers should consider funding that allows for flexibility and innovation so that the social and education sectors can experiment with approaches. Most importantly, funders should increase their capacity and confidence in assessing AI implementation requests along both technical and ethical criteria…(More)”.

Book edited by Sanna Ojanperä, Eduardo López, and Mark Graham: “…explores the uses of large-scale data in the contexts of development, in particular, what techniques, data sources, and possibilities exist for harnessing large datasets and new online data to address persistent concerns regarding human development, inequality, exclusion, and participation.

Employing a global perspective to explore the latest advances at the intersection of big data analysis and human development, this volume brings together pioneering voices from academia, development practice, civil society organizations, government, and the private sector. With a two-pronged focus on theoretical and practical research on big data and computational approaches in human development, the volume covers such themes as data acquisition, data management, data mining and statistical analysis, network science, visual analytics, and geographic information systems and discusses them in terms of practical applications in development projects and initiatives. Ethical considerations surrounding these topics are visited throughout, highlighting the tradeoffs between benefitting and harming those who are the subjects of these new approaches…(More)”