Stefaan Verhulst

Google’s Official Blog: “The recent Zika virus outbreak has caused concern around the world. We’ve seen more than a 3,000 percent increase in global search interest since November, and last month, the World Health Organization (WHO) declared a Public Health Emergency. The possible correlation between Zika, microcephaly and other birth defects is particularly alarming.

But unlike many other global pandemics, the spread of Zika has been harder to identify, map and contain. It’s believed that 4 in 5 people with the virus don’t show any symptoms, and the primary transmitter for the disease, the Aedes mosquito species, is both widespread and challenging to eliminate. That means that fighting Zika requires raising awareness on how people can protect themselves, as well as supporting organizations that can help drive the development of rapid diagnostics and vaccines. We also have to find better ways to visualize the threat so that public health officials and NGOs can support communities at risk….

A volunteer team of Google engineers, designers, and data scientists is helping UNICEF build a platform to process data from different sources (e.g., weather and travel patterns) in order to visualize potential outbreaks. Ultimately, the goal of this open source platform is to identify the risk of Zika transmission for different regions and help UNICEF, governments and NGOs decide how and where to focus their time and resources. This set of tools is being prototyped for the Zika response, but will also be applicable to future emergencies….

We already include robust information for 900+ health conditions directly on Search for people in the U.S. We’ve now also added extensive information about Zika globally in 16 languages, with an overview of the virus, symptom information, and Public Health Alerts from that can be updated with new information as it becomes available.

We’re also working with popular YouTube creators across Latin America, including Sesame Street and Brazilian physician Drauzio Varella, to raise awareness about Zika prevention via their channels.

We hope these efforts are helpful in fighting this new public health emergency, and we will continue to do our part to help combat this outbreak.

And if you’re curious about what that 3,000 percent search increase looks like, take a look:….(More)

The Centre for Public Impact: “Traditional approaches to policymaking have left policymakers and citizens looking for alternative solutions. Despite the best of intentions, the standard model of dispassionate expert analysis and subsequent implementation by a professional bureaucracy has, generally, led to siloed solutions and outcomes for citizens that fall short of what might be possible.

The discipline of design may well provide an answer to this problem by offering a collection of methods which allow civil servants to generate insights based on citizens’ needs, aspirations and behaviours. In doing so, it shifts the view of citizens from anonymous entities to complex humans with complex needs to match. The potential of this new approach is already becoming clear – just ask the medical teams and patients at Norway’s Oslo University Hospital. Women with a heightened risk of developing breast cancer had previously been forced to wait up to three months before receiving an appointment for examination and diagnosis. A redesign reduced this wait to just three days.

In-depth user research identified the principal issues and pinpointed the lack of information about the referral process as a critical problem. The designers also interviewed 40 hospital employees at all levels to find out about their daily schedules and processes. Governments have always drawn inspiration from fields such as sociology and economics. Design methods are not (yet) part of the policymaking canon, but such examples help explain why this may be about to change….(More)”

About HeroX, “The World’s Problem Solver Community”: “On October 4, 2004, Scaled Composites’ SpaceShipOne reached the edge of space, an altitude of 100 km, becoming the first privately built spacecraft to perform this feat twice within two weeks.

In so doing, they won the $10 million Ansari XPRIZE, ushering in a new era of commercial space exploration and applications.

It was the inaugural incentive prize competition of the XPRIZE Foundation, which has gone on to create an incredible array of incentive prizes to solve the world’s Grand Challenges — ocean health, literacy, space exploration, among many others.

In 2011, City Light Capital partnered with XPRIZE to envision a platform that would make the power of incentive challenges available to anyone. The result was the spin-off of HeroX in 2013.

HeroX was co-founded in 2013 by XPRIZE founder Peter Diamandis, challenge designer Emily Fowler and entrepreneur Christian Cotichini as a means to democratize the innovation model of XPRIZE.

HeroX exists to enable anyone, anywhere in the world, to create a challenge that addresses any problem or opportunity, build a community around that challenge and activate the circumstances that can lead to a breakthrough innovation.

This innovation model has existed for centuries.

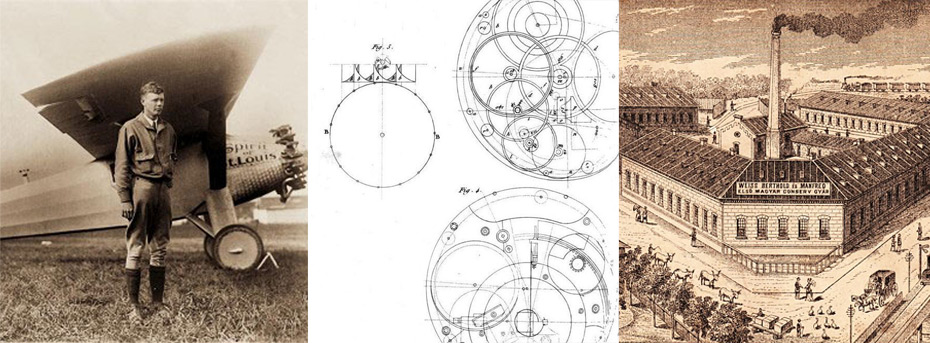

The Ansari XPRIZE was inspired by the Orteig Prize, won in 1927 by Charles Lindbergh when he crossed the Atlantic in the Spirit of St. Louis. The $25,000 prize had been offered by hotelier Raymond Orteig to spur tourism. Lindbergh’s flight led to a boom in air travel the world over.

A similar challenge had been launched two centuries earlier with the 1714 Longitude Prize, which sought a technology to measure longitude at sea more accurately. Nearly 60 years later, a British clockmaker named John Harrison invented the chronometer, which spurred transatlantic migration on a massive scale.

In 1795, Napoleon offered a 12,000-franc prize for a better method of preserving food, which was often spoiled by the time it reached the front lines of his armies. The innovation that won Napoleon’s prize led to the creation of the canning industry.

HeroX incentive prize challenges are designed to do the same — to harness the collective mind power of a community to innovate upon any problem or opportunity. Anyone can change the world. HeroX can help.

The only question is, “What do you want to solve?”… (More)

Book edited by Holly Jarman and Luis F. Luna-Reyes: “This book investigates the ways in which these systems can promote public value by encouraging the disclosure and reuse of privately held data in ways that support collective values such as environmental sustainability. Supported by funding from the National Science Foundation, the authors’ research team has been working on one such system, designed to enhance consumers’ ability to access information about the sustainability of the products that they buy and the supply chains that produce them. Pulled by rapidly developing technology and pushed by budget cuts, politicians and public managers are attempting to find ways to increase the public value of their actions. Policymakers are increasingly acknowledging the potential that lies in publicly disclosing more of the data that they hold, as well as incentivizing individuals and organizations to access, use, and combine it in new ways. Thanks to technological advances such as smarter phones, better ways to track objects and people as they travel, and more efficient data processing, it is now possible to build systems which use shared, transparent data in creative ways. The book adds to the current conversation among academics and practitioners about how to promote public value through data disclosure, focusing particularly on the roles that governments, businesses and non-profit actors can play in this process, making it of interest to both scholars and policy-makers….(More)”

Gabriel Puron-Cid et al. in the International Journal of Public Administration in the Digital Age: “Contemporary societies face complex problems that challenge the sustainability of their social and economic systems. Such problems may require joint efforts from the public and private sectors as well as from the society at large in order to find innovative solutions. In addition, the open government movement constitutes a revitalized wave of access to data to promote innovation through transparency, participation and collaboration. This paper argues that currently there is an opportunity to combine emergent information technologies, new analytical methods, and open data in order to develop innovative solutions to some of the pressing problems in modern societies. Therefore, the objective is to propose a conceptual model to better understand policy innovations based on three pillars: data, information technologies, and analytical methods and techniques. The potential benefits generated from the creation of organizations with advanced analytical capabilities within governments, universities, and non-governmental organizations are numerous and the expected positive impacts on society are significant. However, this paper also discusses some important political, organizational, and technical challenges…(More)”

New report by Open Knowledge and Civicus: “The information systems of public institutions play a crucial role in how we collectively look at and act in the world. They shape the way decisions are made, progress is evaluated, resources are allocated, issues are flagged, debates are framed and action is taken. As a United Nations (UN) report recently put it, ‘Data are the lifeblood of decision-making and the raw material for accountability.’

Every information system renders certain aspects of the world visible and lets others recede into the background. Datasets highlight some things and not others. They make the world comprehensible and navigable in their own way – whether for the purposes of policy evaluation, public service delivery, administration or governance.

Given the critical role of public information systems, what happens when they leave out parts of the picture that civil society groups consider vital? What can civil society actors do to shape or influence these systems so they can be used to advance progress around social, democratic and environmental issues?

This report looks at how citizens and civil society groups can generate data as a means to influence institutional data collection. In the following pages, we profile citizen-generated and civil society data projects and how they have been used as advocacy instruments to change institutional data collection – including looking at the strategies, methods, technologies and resources that have been mobilised to this end. We conclude with a series of recommendations for civil society groups, public institutions, policy-makers and funders….(More)”

Ben Miller at Government Technology: “Government produces a lot of data — reams of it, roomfuls of it, rivers of it. It comes in from citizen-submitted forms, fleet vehicles, roadway sensors and traffic lights. It comes from utilities, body cameras and smartphones. It fills up servers and spills into the cloud. It’s everywhere.

And often, all that data sits there not doing much. A governing entity might have robust data collection and it might have an open data policy, but that doesn’t mean it has the computing power, expertise or human capital to turn those efforts into value.

The amount of data available to government and the computing public promises to continue to multiply — the growing smart cities trend, for example, installs networks of sensors on everything from utility poles to garbage bins.

As all this happens, a movement — a new spin on an old concept — has begun to take root: partnerships between government and research institutes. Usually housed within universities and laboratories, these partnerships aim to match strength with strength. Where government has raw data, professors and researchers have expertise and analytics programs.

Several leaders in such partnerships, spanning some of the most tech-savvy cities in the country, see increasing momentum toward the concept. For instance, the John D. and Catherine T. MacArthur Foundation in September helped launch the MetroLab Network, an organization of more than 20 cities that have partnered with local universities and research institutes for smart-city-oriented projects….

Two recurring themes in projects that universities and research organizations take on in cooperation with government are project evaluation and impact analysis. That’s at least partially driven by the very nature of the open data movement: One reason to open data is to get a better idea of how well the government is operating….

Open data may have been part of the impetus for city-university partnerships, in that the availability of more data lured researchers wanting to work with it and extract value. But those partnerships have, in turn, led to government officials opening more data than ever before for useful applications.

Sort of.

“I think what you’re seeing is not just open data, but kind of shades of open — the desire to make the data open to university researchers, but not necessarily the broader public,” said Beth Noveck, co-founder of New York University’s GovLab.

GOVLAB: DOCKER FOR DATA

Much of what GovLab does is about opening up access to data, and that is the whole point of Docker for Data. The project aims to simplify and speed up the process of extracting and loading large data sets so that they can be queried with Structured Query Language (SQL) commands, by moving the computing power behind that process to the cloud. Docker for Data can be installed with a single line of code, and its website hosts already-extracted data sets. Since its inception, the website has grown to include more than 100 gigabytes of data from more than 8,000 data sets. For Baltimore, for example, one can easily find information on public health, water sampling, arrests, senior centers and more.

That’s partially because researchers are a controlled group who can be required to sign memorandums of understanding and trained to protect privacy and prevent security breaches when government hands over sensitive data. That’s a top concern of agencies that manage data, and it shows in the GovLab’s work.

Noveck saw this clearly when she started working on a project she simply calls “Arnold” because of project support from the Laura and John Arnold Foundation. The project involves building a better understanding of how different criminal justice jurisdictions collect, store and share data. The motivation is to help bridge the gaps between people who manage the data and people who should have easy access to it. When Noveck’s center conducted a survey among criminal justice record-keepers, the researchers found big differences between participants.

“There’s an incredible disparity of practices that range from some jurisdictions that have a very well established, formalized [memorandum of understanding] process for getting access to data, to just — you send an email to a guy and you hope that he responds, and there’s no organized way to gain access to data, not just between [researchers] and government entities, but between government entities,” she said….(More)

Book edited by Fiona Spotswood: “‘Behaviour change’ has become a buzz phrase of growing importance to policymakers and researchers. There is an increasing focus on exploring the relationship between social organisation and individual action, and on intervening to influence societal outcomes like population health and climate change. Researchers continue to grapple with methodologies, intervention strategies and ideologies around ‘social change’. Multidisciplinary in approach, this important book draws together insights from a selection of the principal thinkers in fields including public health, transport, marketing, sustainability and technology. The book explores the political and historical landscape of behaviour change, and trends in academic theory, before examining new innovations in both practice and research. It will be a valuable resource for academics, policy makers, practitioners, researchers and students wanting to locate their thinking within this rapidly evolving field….(More)”

Speech by David Fricker, the director general of the National Archives of Australia: “No-one can deny that we are in an age of information abundance. More and more we rely on information from a variety of sources and channels. Digital information is seductive, because it’s immediate, available and easy to move around. But digital information can be used for nefarious purposes. Social issues can be at odds with processes of government in this digital age. There is a tension between what the information is, where it comes from and how it’s going to be used.

How do we know if the information has reached us without being changed, whether that’s intentional or not?

How do we know that government digital information will be the authoritative source when the pace of information exchange is so rapid? In short, how do we know what to trust?

Consider the challenges and risks that come with the digital age: what does it really mean to have transparency and integrity of government in today’s digital environment?…

What does the digital age mean for government? Government should be delivering services online, which means thinking about location, timeliness and information accessibility. It’s about getting public-sector data out there, into the public, making it available to fuel the digital economy. And it’s about a process of change across government to make sure that we’re breaking down all of those silos, and the duplication and fragmentation which exist across government agencies in the application of information, communications, and technology…..

The digital age is about the digital economy; it’s about rethinking the economy of the nation through the lens of information that enables it. It’s understanding that a nation will be enriched, in terms of cultural life, prosperity and rights, if we embrace the digital economy. And that’s a weighty responsibility. But the responsibility is not mine alone. It’s a responsibility of everyone in the government who makes records in their daily work. It’s everyone’s responsibility to contribute to a transparent government. And that means changes in our thinking and in our actions….

What has changed about democracy in the digital age? Once upon a time if you wanted to express your anger about something, you might write a letter to the editor of the paper, to the government department, or to your local member and then expect some sort of an argument or discussion as a response. Now, you can bypass all of that. You might post an inflammatory tweet or blog, your comment gathers momentum, you pick the right hashtag, and off we go. It’s all happening: you’re trending on Twitter…..

If I turn to transparency now, at the top of the list is the basic recognition that government information is public information. The information of the government belongs to the people who elected that government. It’s a fundamental of democratic values. It also means that there’s got to be more public participation in the development of public policy, which means that if you’re going to have evidence-based, informed policy development, government information has to be available, anywhere, anytime….

Good information governance is at the heart of managing digital information to provide access to that information into the future — ready access to government information is vital for transparency. Only when information is digital and managed well can government share it effectively with the Australian community, to the benefit of society and the economy.

There are many examples where poor information management, or poor information governance, has led to failures in both the private and public sectors. Professor Peter Shergold’s recent report, Learning from Failure: Why large government policy initiatives have gone so badly wrong in the past and how the chances of success in the future can be improved, highlights examples such as the Home Insulation Program, the NBN and Building the Education Revolution….(Full Speech)