Blog by Andrew Zahuranec: “It’s been a long year. Back in March, The GovLab released a Call for Action to build the data infrastructure and ecosystem we need to tackle pandemics and other dynamic societal and environmental threats. As part of that work, we launched a Data4COVID19 repository to monitor progress and curate projects that reused data to address the pandemic. At the time, it was hard to say how long it would remain relevant. We did not know how long the pandemic would last or how many organizations would publish dashboards, visualizations, mobile apps, user tools, and other resources directed at the crisis’s worst consequences.

Seven months later, the COVID-19 pandemic is still with us. Over one million people around the world are dead and many countries face ever-worsening social and economic costs. Though data reuse projects are announced less often than in the crisis’s early days, the announcements have not stopped. For months, The GovLab has posted dozens of additions to an increasingly unwieldy Google Doc.

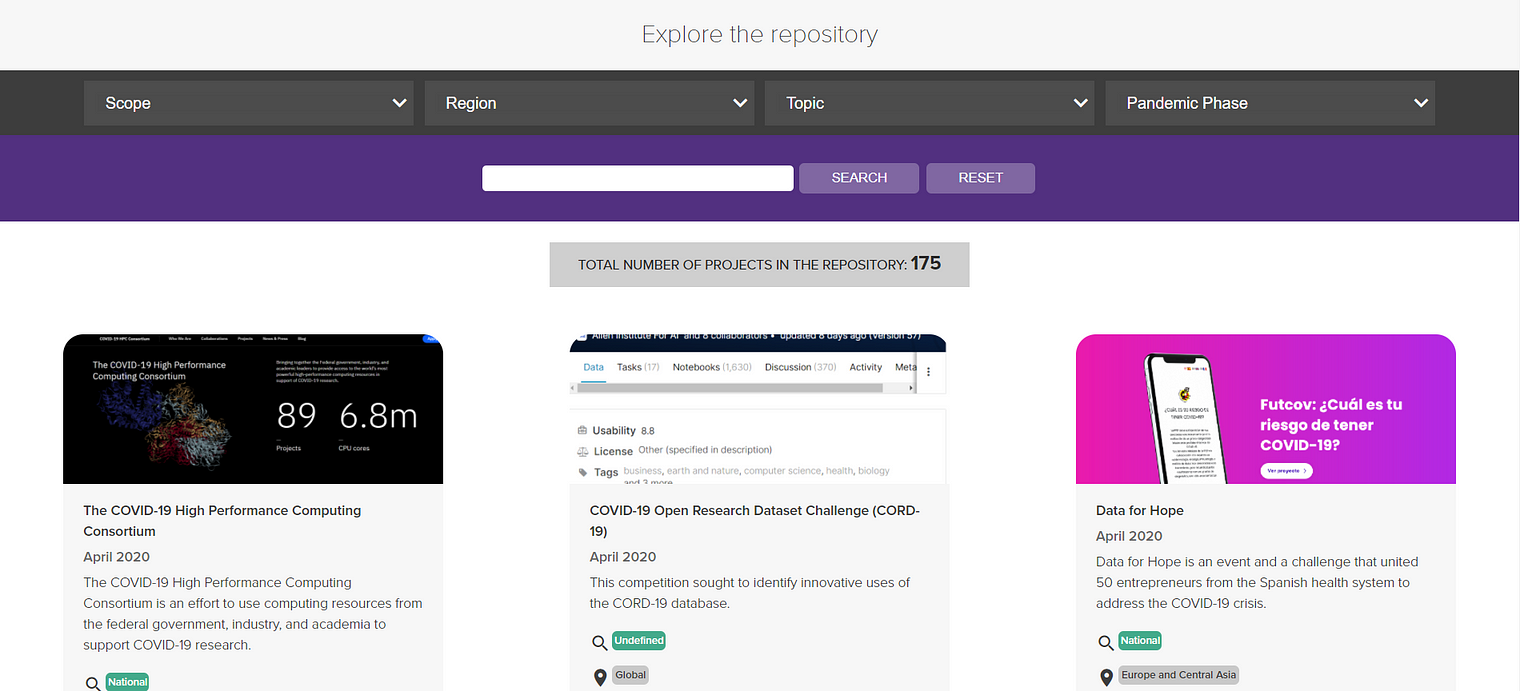

Today, we are making a change. Given the pandemic’s continued urgency and relevance into 2021 and beyond, The GovLab is pleased to release the new Data4COVID19 Living Repository. The upgraded platform allows people to more easily find and understand projects related to the COVID-19 pandemic and data reuse.

On the platform, visitors will notice a few improvements that distinguish the repository from its earlier iteration. In addition to a main page with short descriptions of each example, we’ve added improved search and filtering functionality. Visitors can sort through any of the projects by:

- Scope: the size of the target community;

- Region: the geographic area in which the project takes place;

- Topic: the aspect of the crisis the project seeks to address; and

- Pandemic Phase: the stage of pandemic response the project aims to address….(More)”.