Paper by Josef Drexl: “On the European level, promoting the free flow of data and access to data has moved to the forefront of the policy goals concerning the digital economy. A particular aspect of this economy is the advent of connected devices that are increasingly deployed and used in the context of the Internet of Things (IoT). As regards these devices, the Commission has identified the particular problem that the manufacturers may try to remain in control of the data and refuse data access to third parties, thereby impeding the development of innovative business models in secondary data-related markets. To address this issue, this paper discusses potential legislation on data access rights of the users of connected devices. The paper conceives refusals of the device manufacturers to grant access to data vis-à-vis users as a form of unfair trading practice and therefore recommends embedding data access rights of users in the context of the European law against unfair competition. Such access rights would be complementary to other access regimes, including sector-specific data access rights of competitors in secondary markets as well as access rights available under contract and competition law. Against the backdrop of ongoing debates to reform contract and competition law for the purpose of enhancing data access, the paper seeks to draw attention to a so far not explored unfair competition law approach….(More)”.

Data for Good: New Tools to Help Small Businesses and Communities During the COVID-19 Pandemic

Blogpost by Laura McGorman and Alex Pompe at Facebook: “Small businesses and people around the world are suffering devastating financial losses due to the ongoing COVID-19 pandemic, and public institutions need real time information to help. Today Facebook is launching new datasets and insights to help support economic recovery through our Data for Good program.

Researchers estimate that over the next five years, the global economy could suffer over $80 trillion in losses due to COVID-19. Small businesses in particular are being hit hard — our Global State of Small Business Report found that over one in four had closed their doors in 2020. Governments around the world are looking to effectively distribute financial aid as well as accurately forecast when and how economies will recover. These four datasets — Business Activity Trends, Commuting Zones, Economic Insights from the Symptom Survey and the latest Future of Business Survey results — will help researchers, nonprofits and local officials identify which areas and businesses may need the most support.

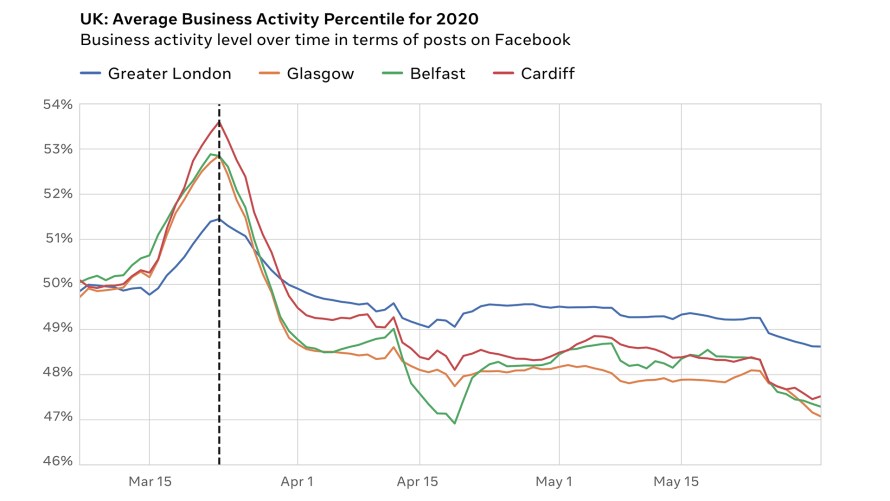

Business Activity Trends

Many factors influence the pandemic’s impact on local economies around the world. However, real time information on business activity is scarce, leaving institutions seeking to provide economic aid with limited information on how to distribute it. To address these information gaps, we partnered with the University of Bristol to aggregate information from Facebook Business Pages to estimate the change in activity among local businesses around the world and how they respond and recover from crises over time.

“Determining whether small and medium businesses are open is very important to assess the recovery after events like mandatory stay-at-home orders,” said Dr. Flavia De Luca, Senior Lecturer in Structural and Earthquake Engineering at the University of Bristol. “The traditional way of collecting this information, such as surveys and interviews, are usually costly, time consuming, and do not scale. By using real time information from Facebook, we hope to make it easier for public institutions to better respond to these events.”…(More)”.

Optimizing Digital Data Sharing in Agriculture

Report by Mercy Corps: “In our digital age, data is increasingly recognized as a powerful tool across all sectors, including agriculture. Historically, data on rural farmers was extremely limited and unreliable; the advent of new digital technologies has allowed more reliable data sources to emerge, from satellites to telecom to the Internet of Things. Private companies—including fintech and agricultural technology innovators—are increasingly utilizing these new data sources to learn more about farmers and to structure new services to meet their needs.

In order to make efficient use of this emerging data, many actors are exploring data-sharing partnerships that combine the power of multiple datasets to create greater impact for smallholder farmers. In a new Learning Brief looking at AgriFin engagements with 14 partners across four different countries, we found that 25% of engagements featured a strong data-sharing component. These engagements spanned various use cases, including credit scoring, targeted training, and open access to information.

Drawing on this broad experience, our research looks at what we’ve learned about data sharing to enhance service delivery for smallholder farmers. We have distilled these lessons into common barriers faced by data-sharing arrangements in order to provide practical guidance and tools for overcoming these barriers to the broader ecosystem of actors involved in optimizing data sharing for agriculture….(More) (Access the full length learning brief)”.

Data Readiness: Lessons from an Emergency

The DELVE Initiative: “Responding to the COVID-19 pandemic has required rapid decision-making in changing circumstances. Those decisions and their effects on the health and wealth of the nation can be better informed with data. Today, technologies that can acquire data are pervasive. Data is continually produced by devices like mobile phones, payment points and road traffic sensors. This creates opportunities for nowcasting of important metrics such as GDP, population movements and disease prevalence, which can be used to design policy interventions that are targeted to the needs of specific sectors or localities. The data collected as a by-product of daily activities is different to epidemiological or other population research data that might be used to drive the decisions of state. These new forms of data are happenstance, in that they are not originally collected with a particular research or policy question in mind but are created through the normal course of events in our digital lives, and our interactions with digital systems and services.

This happenstance data pertains to individual citizens and their daily activities. To be useful it needs to be anonymized, aggregated and statistically calibrated to provide meaningful metrics for robust decision making while managing concerns about individual privacy or business value. This process necessitates particular technical and domain expertise that is often found in academia, but it must be conducted in partnership with the industries, and public sector organisations, that collect or generate the data and government authorities that take action based on those insights. Such collaborations require governance mechanisms that can respond rapidly to emerging areas of need, a common language between partners about how data is used and how it is being protected, and careful stewardship to ensure appropriate balancing of data subjects’ rights and the benefit of using this data. This is the landscape of data readiness; the availability and quality of the UK nation’s data dictates our ability to respond in an agile manner to evolving events….(More)”.

Climate TRACE

About: “We exist to make meaningful climate action faster and easier by mobilizing the global tech community—harnessing satellites, artificial intelligence, and collective expertise—to track human-caused emissions to specific sources in real time—independently and publicly.

Climate TRACE aims to drive stronger decision-making on environmental policy, investment, corporate sustainability strategy, and more.

WHAT WE DO

01 Monitor human-caused GHG emissions using cutting-edge technologies such as artificial intelligence, machine learning, and satellite image processing.

02 Collaborate with data scientists and emission experts from an array of industries to bring unprecedented transparency to global pollution monitoring.

03 Partner with leaders from the private and public sectors to share valuable insights in order to drive stronger climate policy and strategy.

04 Provide the necessary tools for anyone anywhere to make better decisions to mitigate and adapt to the impacts from climate change… (More)”

Consumer Bureau To Decide Who Owns Your Financial Data

Article by Jillian S. Ambroz: “A federal agency is gearing up to make wide-ranging policy changes on consumers’ access to their financial data.

The Consumer Financial Protection Bureau (CFPB) is looking to implement the area of the 2010 Dodd-Frank Wall Street Reform and Consumer Protection Act pertaining to a consumer’s rights to his or her own financial data. It is detailed in section 1033.

The agency has been laying the groundwork on this move for years, from requesting information in 2016 from financial institutions to hosting a symposium earlier this year on the problems of screen scraping, a risky but common method of collecting consumer data.

Now the agency, which was established by the Dodd-Frank Act, is asking for comments on this critical and controversial topic ahead of the proposed rulemaking. Unlike other regulations that affect single industries, this could be all-encompassing because the consumer data rule touches almost every market the agency covers, according to the story in American Banker.

The Trump administration all but ‘systematically neutered’ the agency.

With the ruling, the agency seeks to clarify its compliance expectations and help establish market practices to ensure consumers have access to consumer financial data. The agency sees an opportunity here to help shape this evolving area of financial technology, or fintech, recognizing both the opportunities and the risks to consumers as more fintechs become enmeshed with their data and day-to-day lives.

Its goal is “to better effectuate consumer access to financial records,” as stated in the regulatory filing….(More)”.

Commission proposes measures to boost data sharing and support European data spaces

Press Release: “To better exploit the potential of ever-growing data in a trustworthy European framework, the Commission today proposes new rules on data governance. The Regulation will facilitate data sharing across the EU and between sectors to create wealth for society, increase control and trust of both citizens and companies regarding their data, and offer an alternative European model to data handling practice of major tech platforms.

The amount of data generated by public bodies, businesses and citizens is constantly growing. It is expected to multiply by five between 2018 and 2025. These new rules will allow this data to be harnessed and will pave the way for sectoral European data spaces to benefit society, citizens and companies. In the Commission’s data strategy of February this year, nine such data spaces have been proposed, ranging from industry to energy, and from health to the European Green Deal. They will, for example, contribute to the green transition by improving the management of energy consumption, make delivery of personalised medicine a reality, and facilitate access to public services.

The Regulation includes:

- A number of measures to increase trust in data sharing, as the lack of trust is currently a major obstacle and results in high costs.

- Create new EU rules on neutrality to allow novel data intermediaries to function as trustworthy organisers of data sharing.

- Measures to facilitate the reuse of certain data held by the public sector. For example, the reuse of health data could advance research to find cures for rare or chronic diseases.

- Means to give Europeans control on the use of the data they generate, by making it easier and safer for companies and individuals to voluntarily make their data available for the wider common good under clear conditions….(More)”.

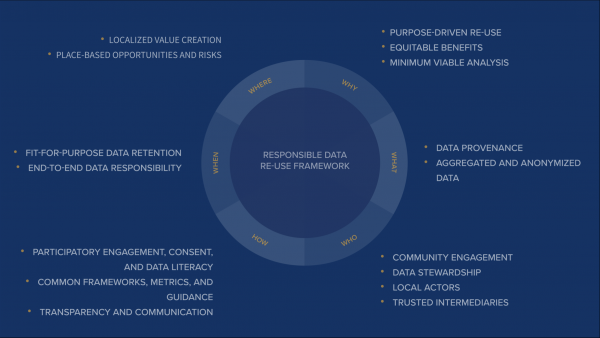

Responsible Data Re-Use for COVID19

“The Governance Lab (The GovLab) at the NYU Tandon School of Engineering, with support from the Henry Luce Foundation, today released guidance to inform decision-making in the responsible re-use of data — re-purposing data for a use other than that for which it was originally intended — to address COVID-19. The findings, recommendations, and a new Responsible Data Re-Use framework stem from The Data Assembly initiative in New York City. An effort to solicit diverse, actionable public input on data re-use for crisis response in the United States, the Data Assembly brought together New York City-based stakeholders from government, the private sector, civic rights and advocacy organizations, and the general public to deliberate on innovative, though potentially risky, uses of data to inform crisis response in New York City. The findings and guidance from the initiative will inform policymaking and practice regarding data re-use in New York City, as well as free data literacy training offerings.

The Data Assembly’s Responsible Data Re-Use Framework provides clarity on a major element of the ongoing crisis. Though leaders throughout the world have relied on data to reduce uncertainty and make better decisions, expectations around the use and sharing of siloed data assets have remained unclear. This summer, along with the New York Public Library and Brooklyn Public Library, The GovLab co-hosted four months of remote deliberations with New York-based civil rights organizations, key data holders, and policymakers. Today’s release is a product of these discussions, to show how New Yorkers and their leaders think about the opportunities and risks involved in the data-driven response to COVID-19….(More)”

See: The Data Assembly Synthesis Report by Andrew Young, Stefaan G. Verhulst, Nadiya Safonova, and Andrew J. Zahuranec

Data could hold the key to stopping Alzheimer’s

Blog post by Bill Gates: “My family loves to do jigsaw puzzles. It’s one of our favorite activities to do together, especially when we’re on vacation. There is something so satisfying about everyone working as a team to put down piece after piece until finally the whole thing is done.

In a lot of ways, the fight against Alzheimer’s disease reminds me of doing a puzzle. Your goal is to see the whole picture, so that you can understand the disease well enough to better diagnose and treat it. But in order to see the complete picture, you need to figure out how all of the pieces fit together.

Right now, all over the world, researchers are collecting data about Alzheimer’s disease. Some of these scientists are working on drug trials aimed at finding a way to stop the disease’s progression. Others are studying how our brain works, or how it changes as we age. In each case, they’re learning new things about the disease.

But until recently, Alzheimer’s researchers often had to jump through a lot of hoops to share their data—to see if and how the puzzle pieces fit together. There are a few reasons for this. For one thing, there is a lot of confusion about what information you can and can’t share because of patient privacy. Often there weren’t easily available tools and technologies to facilitate broad data-sharing and access. In addition, pharmaceutical companies invest a lot of money into clinical trials, and often they aren’t eager for their competitors to benefit from that investment, especially when the programs are still ongoing.

Unfortunately, this siloed approach to research data hasn’t yielded great results. We have only made incremental progress in therapeutics since the late 1990s. There’s a lot that we still don’t know about Alzheimer’s, including what part of the brain breaks down first and how or when you should intervene. But I’m hopeful that will change soon thanks in part to the Alzheimer’s Disease Data Initiative, or ADDI….(More)”.

Building Trust for Inter-Organizational Data Sharing: The Case of the MLDE

Paper by Heather McKay, Sara Haviland, and Suzanne Michael: “There is increasing interest in sharing data across agencies and even between states that was once siloed in separate agencies. Driving this is a need to better understand how people experience education and work, and their pathways through each. A data-sharing approach offers many possible advantages, allowing states to leverage pre-existing data systems to conduct increasingly sophisticated and complete analyses. However, information sharing across state organizations presents a series of complex challenges, one of which is the central role trust plays in building successful data-sharing systems. Trust building between organizations is therefore crucial to ensuring project success.

This brief examines the process of building trust within the context of the development and implementation of the Multistate Longitudinal Data Exchange (MLDE). The brief is based on research and evaluation activities conducted by Rutgers’ Education & Employment Research Center (EERC) over the past five years, which included 40 interviews with state leaders and the Western Interstate Commission for Higher Education (WICHE) staff, observations of user group meetings, surveys, and MLDE document analysis. It is one in a series of MLDE briefs developed by EERC….(More)”.