Stefaan Verhulst

Paper by Wolff, Annika; Kortuem, Gerd and Cavero, Jose: “A bottom-up approach to smart cities places citizens in an active role of contributing, analysing and interpreting data in pursuit of tackling local urban challenges and building a more sustainable future city. This vision can only be realised if citizens have sufficient data literacy skills and experience of large, complex, messy, ever expanding data sets. Schools typically focus on teaching data handling skills using small, personally collected data sets obtained through scientific experimentation, leading to a gap between what is being taught and what will be needed as big data and analytics become more prevalent. This paper proposes an approach to teaching data literacy in the context of urban innovation tasks, using an idea of Urban Data Games. These are supported by a set of training data and resources that will be used in school trials for exploring the problems people have when dealing with large data and trialling novel approaches for teaching data literacy….(More)”

Paper by Staci M. Zavattaro, P. Edward French, and Somya D. Mohanty: “As social media tools become more popular at all levels of government, more research is needed to determine how the platforms can be used to create meaningful citizen–government collaboration. Many entities use the tools in one-way, push manners. The aim of this research is to determine if sentiment (tone) can positively influence citizen participation with government via social media. Using a systematic random sample of 125 U.S. cities, we found that positive sentiment is more likely to engender digital participation but this was not a perfect one-to-one relationship. Some cities that had an overall positive sentiment score and displayed a participatory style of social media use did not have positive citizen sentiment scores. We argue that positive tone is only one part of a successful social media interaction plan, and encourage social media managers to actively manage platforms to use activities that spur participation….(More)”

Mihir A. Desai in Fortune: “By soliciting ideas from large groups, the U.S. can come up with the right policies to reform the nation’s tax code — similar to the way complex computer systems are managed around the world….

Addressing complexity in the tax code requires analogizing to other complex systems and drawing on the research that demonstrates how to manage that complexity. Indeed, there is a well-developed literature on how to manage complex systems that can provide the foundation for simplifying the tax code. In particular, we know a lot about how to manage the evolution of software codes. This analogy yields two primary lessons.

First, “over the wall” engineering is highly problematic and “concurrent” engineering is preferred. Throwing completed ideas “over the wall” to the next part of the production process limits learning and engenders complexity relative to a concurrent and iterative production process. Currently, policy ideas are often developed without a clear vision of the associated language and with even less attention to the perspective of administrators. As with software, this yields bulky and contradictory language that could be avoided if the practice of policy formulation and drafting were a collaborative activity with the administrative agency in charge of enforcement. While the 1998 IRS Reform Act calls for such an approach, the reality does not live up to the law’s aspiration.

Second, and more radically, we should embark on an effort to crowd source the code. Much as the development of software capitalizes on a distributed talent pool, our legislative and regulatory processes on taxes could be opened up radically during comment and drafting.

Currently, the code is managed much as it was 50 years ago – in a fundamentally closed manner. Laws and regulations are drafted by small groups in a non-transparent way that pays little attention to the overall architecture of the tax system. As a consequence, vested interests can influence the management of complexity toward their advantage and complexity grows by ignoring interrelationships.

Research shows that effective management of complex codes – be it Linux or the tax code – requires three things. First, the code must be mapped so that the interrelationships, technically and conceptually, of different parts of the code and associated regulations and rulings become manifest. Second, this mapping enables modularization whereby the code is reorganized into pieces that reflect these relationships. Finally, this modularization provides the foundation for opening up the code to experts throughout society – so-called crowdsourcing – who contribute suggestions for rationalization and simplification.

By mapping, modularizing and opening the code and associated regulations, we could draw upon widespread expertise, provide transparency on a critical process, address the imbalance in resources between the taxing authority and sophisticated taxpayers and begin the process of simplifying the code and its administrative guidance. In the limit, one could imagine a detailed mapping of the tax code and associated regulations hosted by the IRS much as software code is mapped. This mapping would then serve as a guide to reorganizing laws and regulations over time. While decision making rights would remain with Congress and the IRS, opinions on policies would then be solicited widely and the drafting of laws and regulations could be aided by experts around the country through an open platform.

The commentary and drafting process that is so critical to policy formulation and administration would be completely open in real time. …(More)”

Dennis Glenn at “Chief Learning Officer” Media: “Gamification is one of the hottest topics in corporate learning today, yet we don’t entirely trust it. So before delving into how leaders can take a reasoned, serious approach to use games in learning environments, let’s get one thing straight: Gamification is different from serious gaming.

Gamification places nongame experiences into a gamelike environment. Serious games are educational experiences specifically designed to deliver formative or summative assessments based on predetermined learning objectives. Gamification creates an experience; serious games promote task or concept mastery. The underlying aim of serious games concentrates the user’s effort on mastery of a specific task, with a feedback loop to inform users of their progress toward that goal….

In addition to simulations and gamification, many corporate learning leaders are turning to serious games, which demand social engagement. For instance, consider the World of Warcraft wiki, which has more than 101,000 players and contributors helping others master the online game.

Some of the most important benefits to gaming:

- Accepting failure, which is seen as a benefit to mastery.

- Rewarding players with appropriate and timely feedback.

- Making social connections and feeling part of something bigger.

In serious games, frequent feedback — when accompanied by specific instruction — can dramatically reduce the time to mastery. Because the computer will record all data during the assessment, learning leaders can identify specific pathways to mastery and offer them to learners.

This feedback loop leads to self-reflection and that can be translated into learning, according to the 2014 paper titled “Working Paper: Learning by Thinking: How Reflection Aids Performance.” Authors Giada Di Stefano, Francesca Gino, Gary Pisano and Bradley Staats found that individuals performed significantly better on subsequent tasks when thinking about what they learned from the previously completed task.

Social learning is the final link to understanding mastery learning. In a recent massive open online course, titled “Design and Development of Educational Technology MITx: 11.132x,” instructor Scot Osterweil said our understanding of literacy is rooted in a social environment and in interactions with other people and the world. But again, engagement is key. Gaming provides the structure needed to engage with peers, often irrespective of cultural and language differences….(More)”.

Gregory Ferenstein in Pacific Standard: “Improbable as it sounds, McDonald’s may hold the key to getting America’s youth to eat healthier.

A team of medical researchers led by Dr. Robert Siegel took a strategy from the Happy Meal playbook, pairing healthy lunch options at public schools with smiley faces and a toy. The results were extraordinary: voluntary healthy meal purchases quadrupled.

“A two-tiered approach of Emoticons followed by small prizes as an incentive for healthful food selections is very effective in increasing plain white milk, fruit and vegetable selection,” the researchers write in a study presented this week at the annual Pediatric Academic Societies meeting, in San Diego.

Indeed, the popularization of emoticons has been co-opted by researchers lately to see if the colorful balls of happiness can be utilized for socially beneficial ends. One 2014 study found that “emolabeling” could be a major factor in health choice selection by both pre-literate and young children….

This latest research delves further into the power of emoticons in two significant ways. First, the study was tested in some of the most troubled school neighborhoods. (Siegel estimates that a significant portion of the families were either poor or homeless.) Second, knowing that smiling faces alone may not be enough to change behavior, after three months of using emoticons, the team added in a toy to further entice healthy meals.

With emoticons alone, the team found that chocolate milk sales at the school took a noticeable dip, from 87 percent of total milk sales to 78 percent. Later, entire meals known as “Power Plates” were added and paired with a toy. These healthy lunches (with whole grains and vegetables) spiked from less than 10 percent to 42 percent with the introduction of emoticons and a toy.

Interestingly enough, after the toys were taken away, the children continued to select healthy meals. That’s because external rewards can have odd effects on behavior….(More)”

DataLandscape.eu: “The European Data Market study aims to define, assess and measure the European data economy, supporting the achievement of the Data Value Chain policy of the European Commission. This strategy is focused on developing a vibrant and innovative data ecosystem of stakeholders driving the growth of this innovative market in Europe. The main results of this study will feed into the annual reviews of the Digital Agenda Scoreboard providing valuable data and information.

Additionally, in support of the above-mentioned aims, the study also plans to foster the development of the community of relevant stakeholders in the EU. Since the “data community” in Europe is already strong, albeit very diverse and dispersed across several communities, the study wants to move from disparate communities to a genuine stakeholders’ ecosystem….(More)”

Justin Fox at the Harvard Business Review: “When we make decisions, we make mistakes. We all know this from personal experience, of course. But just in case we didn’t, a seemingly unending stream of experimental evidence in recent years has documented the human penchant for error. This line of research—dubbed heuristics and biases, although you may be more familiar with its offshoot, behavioral economics—has become the dominant academic approach to understanding decisions. Its practitioners have had a major influence on business, government, and financial markets. Their books—Predictably Irrational; Thinking, Fast and Slow; and Nudge, to name three of the most important—have suffused popular culture.

So far, so good. This research has been enormously informative and valuable. Our world, and our understanding of decision making, would be much poorer without it.

It is not, however, the only useful way to think about making decisions. Even if you restrict your view to the academic discussion, there are three distinct schools of thought. Although heuristics and biases is currently dominant, for the past half century it has interacted with and sometimes battled with the other two, one of which has a formal name—decision analysis—and the other of which can perhaps best be characterized as demonstrating that we humans aren’t as dumb as we look.

Adherents of the three schools have engaged in fierce debates, and although things have settled down lately, major differences persist. This isn’t like David Lodge’s aphorism about academic politics being so vicious because the stakes are so small. Decision making is important, and decision scholars have had real influence.

This article briefly tells the story of where the different streams arose and how they have interacted, beginning with the explosion of interest in the field during and after World War II (for a longer view, see “A Brief History of Decision Making,” by Leigh Buchanan and Andrew O’Connell, HBR, January 2006). The goal is to make you a more informed consumer of decision advice—which just might make you a better decision maker….(More)”

Article by Frederic Kaplan in Frontiers: “This article is an attempt to represent Big Data research in Digital Humanities as a structured research field. A division in three concentric areas of study is presented. Challenges in the first circle – focusing on the processing and interpretations of large cultural datasets – can be organized linearly following the data processing pipeline. Challenges in the second circle – concerning digital culture at large – can be structured around the different relations linking massive datasets, large communities, collective discourses, global actors and the software medium. Challenges in the third circle – dealing with the experience of big data – can be described within a continuous space of possible interfaces organized around three poles: immersion, abstraction and language. By identifying research challenges in all these domains, the article illustrates how this initial cartography could be helpful to organize the exploration of the various dimensions of Big Data Digital Humanities research….(More)”

Wassim Zoghlami at Medium: “…Data mining is the process of posing queries and extracting useful patterns or trends, often previously unknown, from large amounts of data using various techniques such as those from pattern recognition and machine learning. Lately there has been great interest in leveraging data mining for counter-terrorism applications.

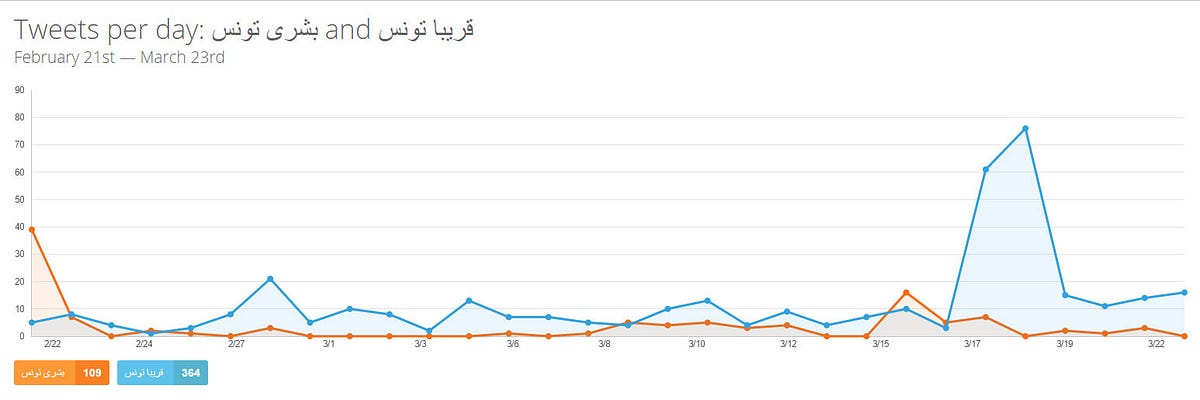

Using the data on more than 50,000 ISIS-connected Twitter accounts, I was able to establish an understanding of some factors that determine how often ISIS attacks occur, what different types of terror strikes are used in which geopolitical situations, and many other criteria, through graphs of the frequency of hashtag usage and the frequency of particular groups of words used in the tweets.

A simple data mining project on some of the repetitive hashtags and sequences of words typically used by ISIS militants in their tweets yielded surprising results. The results show a rise in some keywords in the tweets starting on March 15, three days before the Bardo museum attacks.

Some of the frequent keywords and hashtags that showed an unusual peak from March 15, three days before the attack:

#طواغيت تونس : Tyrants of Tunisia = a reference to the military

بشرى تونس : Good news for Tunisia.

قريبا تونس : Soon in Tunisia.

#إفريقية_للإعلام : The head of social media of Afriqiyah

#غزوة_تونس : The foray of Tunis…

Big Data and Data Mining should be used for national security intelligence

The Tunisian national security has to leverage big data to predict such attacks and to achieve objectives as the volume of digital data. One of the challenges facing data mining techniques is that, to carry out effective data mining and extract useful information for counterterrorism and national security, we need to gather all kinds of information about individuals. However, this information could be a threat to the individuals’ privacy and civil liberties…(More)”

As a killer disease, malaria is the world’s third biggest, after only HIV/AIDS and tuberculosis. In 2013, an estimated 584,000 people died of it—90 percent of these deaths in Africa, mostly among children under five years of age. And because 3.2 billion people—almost half the world’s population—live in regions where malaria spreads easily, it is very hard to fight. Scores of organizations are embroiled in the complex search for solutions, sometimes pursuing conflicting priorities, always competing for scarce resources. Despite the daunting challenges, here’s how Bill Gates, who has already spent more than $2 billion of Gates Foundation money on the problem, characterizes the situation: “This is one of the greatest opportunities the global health world has ever had.”

Opportunity? It’s a surprising word even for an optimistic mega-philanthropist to describe a scourge that people have been trying to eliminate, unsuccessfully, for hundreds of years. It’s also, however, a fair statement about what is possible in the 21st century. We’re seeing a trend by which many kinds of “wicked problems”—complex, dynamic, and seemingly intractable social challenges—are being reframed and attacked with renewed vigor through solution ecosystems. Unprecedented networks of non-governmental organizations (NGOs), social entrepreneurs, health professionals, governments, and international development institutions—and yes, businesses—are coalescing around them, and recasting them as wicked opportunities….(More)”