Andrew Mandelbaum from OpeningParliament.org: “Around the world, parliaments, governments, civil society organizations, and even individual parliamentarians, are taking measures to make the legislative process more participatory. Some are creating their own tools — often open source, which allows others to use these tools as well — that enable citizens to mark up legislation or share ideas on targeted subjects. Others are purchasing and implementing tools developed by private companies to good effect. In several instances, these initiatives are being conducted through collaboration between public institutions and civil society, while many complement online and offline experiences to help ensure that a broader population of citizens is reached.

The list below provides examples of some of the more prominent efforts to engage citizens in the legislative process.

Brazil

Implementer: Brazilian Chamber of Deputies…

Website: http://edemocracia.camara.gov.br/

Additional Information: OpeningParliament.org Case Study

Estonia

Implementer: Estonian President & Civil Society

Project Name: Rahvakogu (The People’s Assembly)…

Website: http://www.rahvakogu.ee/

Additional Information: Enhancing Estonia’s Democracy Through Rahvakogu

Finland

Implementer: Finnish Parliament

Project Name: Inventing Finland again! (Keksitään Suomi uudelleen!)…

Website: http://www.suomijoukkoistaa.fi/

Additional Information: Democratic Participation and Deliberation in Crowdsourced Legislative Processes: The Case of the Law on Off-Road Traffic in Finland

France

Implementer: SmartGov – Démocratie Ouverte…

Website: https://www.parlement-et-citoyens.fr/

Additional Information: OpeningParliament Case Study

Italy

Implementer: Government of Italy

Project Name: Public consultation on constitutional reform…

Website: http://www.partecipa.gov.it/

Spain

Implementer: Basque Parliament…

Website: http://www.adi.parlamentovasco.euskolegebiltzarra.org/es/

Additional Information: Participation in Parliament

United Kingdom

Implementer: Cabinet Office

Project Name: Open Standards Consultation…

Website: http://consultation.cabinetoffice.gov.uk/openstandards/

Additional Information: Open Policy Making, Open Standards Consultation; Final Consultation Documents

United States

Implementer: OpenGov Foundation

Project Name: The Madison Project

Tool: The Madison Project”

Computational Social Science: Exciting Progress and Future Directions

Duncan Watts in The Bridge: “The past 15 years have witnessed a remarkable increase in both the scale and scope of social and behavioral data available to researchers. Over the same period, and driven by the same explosion in data, the study of social phenomena has increasingly become the province of computer scientists, physicists, and other “hard” scientists. Papers on social networks and related topics appear routinely in top science journals and computer science conferences; network science research centers and institutes are sprouting up at top universities; and funding agencies from DARPA to NSF have moved quickly to embrace what is being called computational social science.

Against these exciting developments stands a stubborn fact: in spite of many thousands of published papers, there’s been surprisingly little progress on the “big” questions that motivated the field of computational social science—questions concerning systemic risk in financial systems, problem solving in complex organizations, and the dynamics of epidemics or social movements, among others.

Of the many reasons for this state of affairs, I concentrate here on three. First, social science problems are almost always more difficult than they seem. Second, the data required to address many problems of interest to social scientists remain difficult to assemble. And third, thorough exploration of complex social problems often requires the complementary application of multiple research traditions—statistical modeling and simulation, social and economic theory, lab experiments, surveys, ethnographic fieldwork, historical or archival research, and practical experience—many of which will be unfamiliar to any one researcher. In addition to explaining the particulars of these challenges, I sketch out some ideas for addressing them….”

New Journal Helps Behavioral Scientists Find Their Way to Washington

The PsychReport: “When it comes to being heard in Washington, classical economists have long gotten their way. Behavioral scientists, on the other hand, haven’t proved so adept at getting their message across.

It isn’t for lack of good ideas. Psychology’s applicability has been gaining momentum in recent years, namely in the U.K.’s Behavioral Insights Team, which has helped prove the discipline’s worth to policy makers. The recent (but not-yet-official) announcement that the White House is creating a similar team is another major endorsement of behavioral science’s value.

But when it comes to communicating those ideas to the public in general, psychologists and other behavioral scientists can’t name so many successes. Part of the problem is PR know-how: writing for a general audience, publicizing good ideas, reaching out to decision makers. Another is incentive: academics need to publish, and many times publishing means producing long, dense, jargon-laden articles for peer-reviewed journals read by a rarefied audience of other academics. And then there’s time, or lack of it.

But a small group of prominent behavioral scientists is working to help other researchers find their way to Washington. The brainchild of UCLA’s Craig Fox and Duke’s Sim Sitkin, Behavioral Science & Policy is a peer-reviewed journal set to launch online this fall and in print early next year, whose mission is to influence policy and practice through promoting high-quality behavioral science research. Articles will be brief, well written, and will all provide straightforward, applicable policy recommendations that serve the public interest.

In bringing behavioral science to the capital, Fox echoed a motivation similar to that of David Halpern of the Behavioral Insights Team.

“What we’re trying to do is create policies that are mindful of how individuals, groups, and organizations behave. How can you create smart policies if you don’t do that?” Fox said. “Because after all, all policies affect individuals, groups, and/or organizations.”

Fox has already assembled an impressive team of scientists from around the country for the journal’s advisory board, including Richard Thaler and Cass Sunstein, authors of Nudge, which helped inspire the creation of the Behavioral Insights Team; The New York Times columnist David Brooks; and Nobel Prize winner Daniel Kahneman. They’ve created a strong partnership with the prestigious think tank the Brookings Institution, which will serve as their publishing partner and which they plan will also co-host briefings for policy makers in Washington…”

The Parable of Google Flu: Traps in Big Data Analysis

David Lazer: “…big data last winter had its “Dewey beats Truman” moment, when the poster child of big data (at least for behavioral data), Google Flu Trends (GFT), went way off the rails in “nowcasting” the flu–overshooting the peak last winter by 130% (and indeed, it has been systematically overshooting by wide margins for 3 years). Tomorrow we (Ryan Kennedy, Alessandro Vespignani, and Gary King) have a paper out in Science dissecting why GFT went off the rails, how that could have been prevented, and the broader lessons to be learned regarding big data.

[We are posting The Parable of Google Flu (WP-Final).pdf, the version we submitted before acceptance. We have also posted an SSRN paper evaluating GFT for 2013-14, since it was reworked in the Fall.]

Key lessons that I’d highlight:

1) Big data are typically not scientifically calibrated. This goes back to my post last month regarding measurement. This does not make them useless from a scientific point of view, but you do need to build into the analysis that the “measures” of behavior are being affected by unseen things. In this case, the likely culprit was the Google search algorithm, which was modified in various ways that we believe likely to have increased flu-related searches.

2) Big data + analytic code used in scientific venues with scientific claims need to be more transparent. This is a tricky issue, because there are both legitimate proprietary interests involved and privacy concerns, but much more can be done in this regard than has been done in the 3 GFT papers. [One of my aspirations over the next year is to work together with big data companies, researchers, and privacy advocates to figure out how this can be done.]

3) It’s about the questions, not the size of the data. In this particular case, one could have done a better job stating the likely flu prevalence today by ignoring GFT altogether and just projecting 3-week-old CDC data to today (better still would have been to combine the two). That is, a synthesis would have been more effective than a pure “big data” approach. I think this is likely the general pattern.

4) More generally, I’d note that there is much more that the academy needs to do. First, the academy needs to build the foundation for collaborations around big data (e.g., secure infrastructures, legal understandings around data sharing, etc). Second, there needs to be MUCH more work done to build bridges between the computer scientists who work on big data and social scientists who think about deriving insights about human behavior from data more generally. We have moved perhaps 5% of the way that we need to in this regard.”
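As a rough illustration of lesson 3 above, the two alternatives Lazer mentions (projecting 3-week-old CDC data forward, and combining that projection with a search-based estimate) can be sketched in a few lines. This is a minimal sketch, not the method used in the Science paper: the function names, the equal weighting, and all numbers are hypothetical and chosen only to show the mechanics.

```python
def lagged_nowcast(cdc_weekly, week, lag_weeks=3):
    """Estimate flu prevalence for `week` using only the CDC value
    reported `lag_weeks` earlier (a simple carry-forward projection)."""
    if week - lag_weeks < 0:
        raise ValueError("not enough history for the requested lag")
    return cdc_weekly[week - lag_weeks]


def blended_nowcast(lagged_value, search_value, weight_on_lagged=0.5):
    """Combine the lagged CDC projection with a search-based estimate.
    The weight is an assumption; in practice it would be fit to past data."""
    if not 0.0 <= weight_on_lagged <= 1.0:
        raise ValueError("weight_on_lagged must be between 0 and 1")
    return weight_on_lagged * lagged_value + (1.0 - weight_on_lagged) * search_value


# Made-up weekly values for illustration only (not real CDC or GFT data).
cdc_weekly = [2.0, 2.5, 3.0, 4.0, 5.0, 6.0]
search_estimate = 9.0  # hypothetical search-based estimate for week 5

lagged = lagged_nowcast(cdc_weekly, week=5)       # uses the week-2 value
blend = blended_nowcast(lagged, search_estimate)  # equal-weight combination
print(lagged, blend)
```

The carry-forward rule is deliberately naive; the point of the passage is that even a baseline this simple is worth comparing against before trusting a search-only estimate.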

How Maps Drive Decisions at EPA

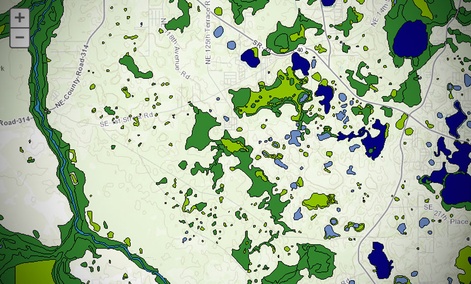

Those spreadsheets detailed places with large oil and gas production and other possible pollutants where EPA might want to focus its own inspection efforts or reach out to state-level enforcement agencies.

During the past two years, the agency has largely replaced those spreadsheets and tables with digital maps, which make it easier for participants to visualize precisely where the top polluting areas are and how those areas correspond to population centers, said Harvey Simon, EPA’s geospatial information officer, making it easier for the agency to focus inspections and enforcement efforts where they will do the most good.

“Rather than verbally going through tables and spreadsheets you have a lot of people who are not [geographic information systems] practitioners who are able to share map information,” Simon said. “That’s allowed them to take a more targeted and data-driven approach to deciding what to do where.”

The change is a result of the EPA Geoplatform, a tool built off Esri’s ArcGIS Online product, which allows companies and government agencies to build custom Web maps using base maps provided by Esri mashed up with their own data.

When the EPA Geoplatform launched in May 2012 there were about 250 people registered to create and share mapping data within the agency. That number has grown to more than 1,000 during the past 20 months, Simon said.

“The whole idea of the platform effort is to democratize the use of geospatial information within the agency,” he said. “It’s relatively simple now to make a Web map and mash up data that’s useful for your work, so many users are creating Web maps themselves without any support from a consultant or from a GIS expert in their office.”

A governmentwide Geoplatform launched in 2012, spurred largely by agencies’ frustrations with the difficulty of sharing mapping data after the 2010 explosion of the Deepwater Horizon oil rig in the Gulf of Mexico. The platform’s goal was twofold. First, officials wanted to share mapping data more widely between agencies so they could avoid duplicating each other’s work and share data more easily during an emergency.

Second, the government wanted to simplify the process for viewing and creating Web maps so they could be used more easily by nonspecialists.

EPA’s geoplatform has essentially the same goals. The majority of the maps the agency builds using the platform aren’t publicly accessible so the EPA doesn’t have to worry about scrubbing maps of data that could reveal personal information about citizens or proprietary data about companies. It publishes some maps that don’t pose any privacy concerns on EPA websites as well as on the national geoplatform and to Data.gov, the government data repository.

Once ArcGIS Online is judged compliant with the Federal Information Security Management Act, or FISMA, which is expected this month, EPA will be able to share significantly more nonpublic maps through the national geoplatform and rely on more maps produced by other agencies, Simon said.

EPA’s geoplatform has also made it easier for the agency’s environmental justice office to share common data….”

Participatory Budgeting Platform

Hollie Gilman: “Stanford’s Social Algorithms Lab (SOAL) has built an interactive Participatory Budgeting Platform that allows users to simulate budgetary decision making on $1 million of public monies. The center brings together economics, computer science, and networking to work on problems and understand the impact of social networking. This project is part of Stanford’s Widescope Project to enable people to make political decisions on the budgets through data-driven social networks.

The Participatory Budgeting simulation highlights the fourth annual Participatory Budgeting in Chicago’s 49th ward — the first place to implement PB in the U.S. This year $1 million, out of $1.3 million in Alderman capital funds, will be allocated through participatory budgeting.

One goal of the platform is to build consensus. The interactive geo-spatial mapping software enables citizens to more intuitively identify projects in a given area. Importantly, the platform forces users to make tough choices and balance competing priorities in real time.

The platform is an interesting example of a collaborative governance prototype that could be transformative in its ability to engage citizens with easily accessible mapping software.”
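The "tough choices" in a participatory budgeting simulation come down to a budget constraint: a set of projects is acceptable only if its total cost fits within the available funds. The sketch below shows that check under stated assumptions. The $1 million cap comes from the text, but the project names, costs, and function names are hypothetical and are not taken from the Stanford platform.

```python
BUDGET = 1_000_000  # the $1 million allocated through participatory budgeting


def total_cost(selection, costs):
    """Sum the cost of every selected project."""
    return sum(costs[name] for name in selection)


def check_selection(selection, costs, budget=BUDGET):
    """Return (within_budget, remaining_funds) for a proposed selection.
    A negative remainder means the selection overshoots the budget."""
    spent = total_cost(selection, costs)
    return spent <= budget, budget - spent


# Hypothetical projects and costs, for illustration only.
costs = {
    "street resurfacing": 600_000,
    "park lighting": 250_000,
    "bike lanes": 300_000,
}

ok, remaining = check_selection(["street resurfacing", "park lighting"], costs)
too_much, shortfall = check_selection(list(costs), costs)
print(ok, remaining)        # selection fits, with funds left over
print(too_much, shortfall)  # all three projects exceed the cap
```

Choosing all three hypothetical projects exceeds the cap, so a user would have to drop one, which is the kind of trade-off the platform is described as forcing.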

Open Data is a Civil Right

Yo Yoshida, Founder & CEO, Appallicious in GovTech: “As Americans, we expect a certain standardization of basic services, infrastructure and laws — no matter where we call home. When you live in Seattle and take a business trip to New York, the electric outlet in the hotel you’re staying in is always compatible with your computer charger. When you drive from San Francisco to Los Angeles, I-5 doesn’t all-of-a-sudden turn into a dirt country road because some cities won’t cover maintenance costs. If you take a 10-minute bus ride from Boston to the city of Cambridge, you know the money in your wallet is still considered legal tender.

Intelligent Demand: Policy Rationale, Design and Potential Benefits

New OECD paper: “Policy interest in demand-side initiatives has grown in recent years. This may reflect an expectation that demand-side policy could be particularly effective in steering innovation to meet societal needs. In addition, owing to constrained public finances in most OECD countries, the possibility that demand-side policies might be less expensive than direct support measures is attractive. Interest may also reflect some degree of disappointment with the outcomes of traditional supply-side measures. This paper reviews demand-side innovation policies, their rationales and importance across countries, different approaches to their design, the challenges entailed in their implementation and evaluation, and good practices. Three main forms of demand-side policy are considered: innovation-oriented public procurement, innovation-oriented regulations, and standards. Emphasis is placed on innovation-oriented public procurement.”

Procurement and Civic Innovation

Derek Eder: “Have you ever used a government website and had a not-so-awesome experience? In our slick 2014 world of Google, Twitter and Facebook, why does government tech feel like it’s stuck in the 1990s?

The culprit: bad technology procurement.

Procurement is the procedure a government follows to buy something–letting suppliers know what they want, asking for proposals, restricting what kinds of proposal they will consider, limiting what kinds of firms they will do business with, and deciding if what they got is what they paid for.

The City of Chicago buys technology about the same way that they buy health insurance, a bridge, or anything else in between. And that’s the problem.

Chicago’s government has a long history of corruption, nepotism and patronage. After each outrage, new rules are piled upon existing rules to prevent that crisis from happening again. Unfortunately, this accumulation of rules does not just protect against the bad guys, it also forms a huge barrier to entry for technology innovators.

So, the firms that end up building our city’s digital public services tend to be good at picking their way through the barriers of the procurement process, not at building good technology. Instead of making government tech contracting fair and competitive, procurement has unfortunately had the opposite effect.

So where does this leave us? Despite Chicago’s flourishing startup scene, and despite having one of the country’s largest communities of civic technologists, the Windy City’s digital public services are still terribly designed and far too expensive to the taxpayer.

The Technology Gap

The best way to see the gap between Chicago’s volunteer civic tech community and the technology that the City pays for is to look at an entire class of civic apps that are essentially facelifts on existing government websites….

You may have noticed an increase in quality and usability between these three civic apps and their official government counterparts.

Now consider this: all of the government sites took months to build and cost hundreds of thousands of dollars. Was My Car Towed, 2nd City Zoning and CrimeAround.us were all built by one to two people in a matter of days, for no money.

Think about that for a second. Consider how much the City is overpaying for websites its citizens can barely use. And imagine how much better our digital city services would be if the City worked with the very same tech startups they’re trying to nurture.

Why do these civic apps exist? Well, with the City of Chicago releasing hundreds of high quality datasets on their data portal over the past three years (for which they should be commended), a group of highly passionate and skilled technologists have started using their skills to develop these apps and many others.

It’s mostly for fun, learning, and a sense of civic duty, but it demonstrates there’s no shortage of highly skilled developers who are interested in using technology to make their city a better place to live in…

Two years ago, in the Fall of 2011, I learned about procurement in Chicago for the first time. An awesome group of developers, designers and I had just built ChicagoLobbyists.org – our very first civic app – for the City of Chicago’s first open data hackathon….

Since then, the City has often cited ChicagoLobbyists.org as evidence of the innovation-sparking potential of open data.

Shortly after our site launched, a Request For Proposals, or RFP, was issued by the City for an ‘Online Lobbyist Disclosure System.’

Hey! We just built one of those! Sure, we would need to make some updates to it—adding a way for lobbyists to log in and submit their info—but we had a solid start. So, our scrappy group of tech volunteers decided to respond to the RFP.

After reading all 152 pages of the document, we realized we had no chance of getting the bid. It was impossible for the ChicagoLobbyists.org group to meet the legal requirements (as it would have been for any small software shop):

- audited financial statements for the past 3 years

- an economic disclosure statement (EDS) and affidavit

- proof of $500k workers compensation and employers liability

- proof of $2 million in professional liability insurance”

Making digital government better

A McKinsey Insight interview with Mike Bracken (UK): “When it comes to the digital world, governments have traditionally placed political, policy, and system needs ahead of the people who require services. Mike Bracken, the executive director of the United Kingdom’s Government Digital Service, is attempting to reverse that paradigm by empowering citizens—and, in the process, improve the delivery of services and save money. In this video interview, Bracken discusses the philosophy behind the digital transformation of public services in the United Kingdom, some early successes, and next steps.

Interview transcript

Putting users first

Government around the world is pretty good at thinking about its own needs. Government puts its own needs first—they often put their political needs followed by the policy needs. The actual machine of government comes second. The third need then generally becomes the system needs, so the IT or whatever system’s driving it. And then out of those four, the user comes a poor fourth, really.

And we’ve inverted that. So let me give you an example. At the moment, if you want to know about tax in the UK, you’re probably going to know that Her Majesty’s Revenue and Customs is a part of government that deals with tax. You’re probably going to know that because you pay tax, right?

But why should you have to know that? Because, really, it’s OK to know that, for that one—but we’ve got 300 agencies, more than that; we’ve got 24 parts of government. If you want to know about, say, gangs, is that a health issue or is that a local issue? Is it a police issue? Is it a social issue, an education issue? Well, actually it’s all of those issues. But you shouldn’t have to know how government is constructed to know what each bit of government is doing about an esoteric issue like gangs.

What we’ve done with gov.uk, and what we’re doing with our transactions, is to make them consistent at the point of user need. Because there’s only one real user need of government digitally, and that’s to recognize that at the point of need, users need to deal with the government. Not a department name or an agency name, they’re dealing with the government. And when they do that, they need it to be consistent, and they need it to be easy to find. Ninety-five percent of our journeys digitally start with a search.

And so our elegantly constructed and expensively constructed front doors are often completely routed around. We’ve got to recognize that and construct our digital services based on user needs….”