Stefaan Verhulst

Paper by Azadeh Akbari: “Although many data justice projects envision just datafied societies, their focus on participatory ‘solutions’ to remedy injustice leaves important discussions out. For example, there has been little discussion of the meaning of data justice and its participatory underpinnings in authoritarian contexts. Additionally, the subjects of data justice are treated as universal decision-making individuals unaffected by the procedures of datafication itself. To tackle such questions, this paper starts with studying the trajectory of data justice as a concept and reflects on both its data and justice elements. It conceptualises data as embedded within a network of associations opening up a multi-level, multi-actor, intersectional understanding of data justice. Furthermore, it discusses five major conceptualisations of data justice based on social justice, capabilities, structural, sphere transgression, and abnormality of justice approaches. Discussing the limits and potentials of each of these categories, the paper argues that many of the existing participatory approaches are formulated within the neoliberal binary of choice: exit or voice (Hirschman, 1970). Transcending this binary and using postcolonial theories, the paper discusses the dehumanisation of individuals and groups as an integral part of datafication and underlines the inadequacy of digital harms, data protection, and privacy discourses in that regard. Finally, the paper reflects on the politics of data justice as an emancipatory concept capable of transforming standardised concepts such as digital literacy to liberating pedagogies for reclaiming the lost humanity of the oppressed (Freire, 1970) or evoking the possibility for multiple trajectories beyond the emerging hegemony of data capitalism…(More)”.

Article by Melissa Heikkilä and Stephanie Arnett: “AI is all about data. Reams and reams of data are needed to train algorithms to do what we want, and what goes into the AI models determines what comes out. But here’s the problem: AI developers and researchers don’t really know much about the sources of the data they are using. AI’s data collection practices are immature compared with the sophistication of AI model development. Massive data sets often lack clear information about what is in them and where it came from.

The Data Provenance Initiative, a group of over 50 researchers from both academia and industry, wanted to fix that. They wanted to know, very simply: Where does the data to build AI come from? They audited nearly 4,000 public data sets spanning over 600 languages, 67 countries, and three decades. The data came from 800 unique sources and nearly 700 organizations.

Their findings, shared exclusively with MIT Technology Review, show a worrying trend: AI’s data practices risk concentrating power overwhelmingly in the hands of a few dominant technology companies.

In the early 2010s, data sets came from a variety of sources, says Shayne Longpre, a researcher at MIT who is part of the project.

The data came not just from encyclopedias and the web, but also from sources such as parliamentary transcripts, earnings calls, and weather reports. Back then, AI data sets were specifically curated and collected from different sources to suit individual tasks, Longpre says.

Then transformers, the architecture underpinning language models, were invented in 2017, and the AI sector started seeing performance get better the bigger the models and data sets were. Today, most AI data sets are built by indiscriminately hoovering material from the internet. Since 2018, the web has been the dominant source for data sets used in all media, such as audio, images, and video, and a gap between scraped data and more curated data sets has emerged and widened.

“In foundation model development, nothing seems to matter more for the capabilities than the scale and heterogeneity of the data and the web,” says Longpre. The need for scale has also boosted the use of synthetic data massively.

The past few years have also seen the rise of multimodal generative AI models, which can generate videos and images. Like large language models, they need as much data as possible, and the best source for that has become YouTube.

For video models, as you can see in this chart, over 70% of data for both speech and image data sets comes from one source.

This could be a boon for Alphabet, Google’s parent company, which owns YouTube. Whereas text is distributed across the web and controlled by many different websites and platforms, video data is extremely concentrated in one platform.

“It gives a huge concentration of power over a lot of the most important data on the web to one company,” says Longpre…(More)”.

Paper by Sarah Ahannach et al: “Women’s health research is receiving increasing attention globally, but considerable knowledge gaps remain. Across many fields of research, active involvement of citizens in science has emerged as a promising strategy to help align scientific research with societal needs. Citizen science offers researchers the opportunity for large-scale sampling and data acquisition while engaging the public in a co-creative approach that solicits their input on study aims, research design, data gathering and analysis. Here, we argue that citizen science has the potential to generate new data and insights that advance women’s health. Based on our experience with the international Isala project, which used a citizen-science approach to study the female microbiome and its influence on health, we address key challenges and lessons for generating a holistic, community-centered approach to women’s health research. We advocate for interdisciplinary collaborations to fully leverage citizen science in women’s health toward a more inclusive research landscape that amplifies underrepresented voices, challenges taboos around intimate health topics and prioritizes women’s involvement in shaping health research agendas…(More)”.

Paper by Lukas Fuchs: “Adding an option is a neglected mechanism for bringing about behavioural change. This mechanism is distinct from nudges, which are changes in the choice architecture, and instead makes it possible to pursue republican paternalism, a unique form of paternalism in which choices are changed by expanding people’s set of options. I argue that this is truly a form of paternalism (albeit a relatively soft one) and illustrate some of its manifestations in public policy, specifically public options and market creation. Furthermore, I compare it with libertarian paternalism on several dimensions, namely respect for individuals’ agency, effectiveness, and efficiency. Finally, I consider whether policymakers have the necessary knowledge to successfully change behaviour by adding options. Given that adding an option has key advantages over nudges in most if not all of these dimensions, it should be considered indispensable in the behavioural policymaker’s toolbox…(More)”.

Article by Debra Lam: “Cities tackle a vast array of responsibilities – from building transit networks to running schools – and sometimes they can use a little help. That’s why local governments have long teamed up with businesses in so-called public-private partnerships. Historically, these arrangements have helped cities fund big infrastructure projects such as bridges and hospitals.

However, our analysis and research show an emerging trend with local governments engaged in private-sector collaborations – what we have come to describe as “community-centered, public-private partnerships,” or CP3s. Unlike traditional public-private partnerships, CP3s aren’t just about financial investments; they leverage relationships and trust. And they’re about more than just building infrastructure; they’re about building resilient and inclusive communities.

As the founding executive director of the Partnership for Inclusive Innovation, based out of the Georgia Institute of Technology, I’m fascinated with CP3s. And while not all CP3s are successful, when done right they offer local governments a powerful tool to navigate the complexities of modern urban life.

Together with international climate finance expert Andrea Fernández of the urban climate leadership group C40, we analyzed community-centered, public-private partnerships across the world and put together eight case studies. Together, they offer valuable insights into how cities can harness the power of CP3s.

4 keys to success

Although we looked at partnerships forged in different countries and contexts, we saw several elements emerge as critical to success over and over again.

1. Clear mission and vision: It’s essential to have a mission that resonates with everyone involved. Ruta N in Medellín, Colombia, for example, transformed the city into a hub of innovation, attracting 471 technology companies and creating 22,500 jobs.

This vision wasn’t static. It evolved in response to changing local dynamics, including leadership priorities and broader global trends. However, the core mission of entrepreneurship, investment and innovation remained clear and was embraced by all key stakeholders, driving the partnership forward.

2. Diverse and engaged partners: Successful CP3s rely on the active involvement of a wide range of partners, each bringing their unique expertise and resources to the table. In the U.K., for example, the Hull net-zero climate initiative featured a partnership that included more than 150 companies, many small and medium-size. This diversity of partners was crucial to the initiative’s success because they could leverage resources and share risks, enabling it to address complex challenges from multiple angles.

Similarly, Malaysia’s Think City engaged community-based organizations and vulnerable populations in its Penang climate adaptation program. This ensured that the partnership was inclusive and responsive to the needs of all citizens…(More)”.

Paper by Steven Bird: “How do we roll out language technologies across a world with 7,000 languages? In one story, we scale the successes of NLP further into ‘low-resource’ languages, doing ever more with less. However, this approach does not recognise the fact that – beyond the 500 institutional languages – the remaining languages are oral vernaculars. These speech communities interact with the outside world using a ‘contact language’. I argue that contact languages are the appropriate target for technologies like speech recognition and machine translation, and that the 6,500 oral vernaculars should be approached differently. I share stories from an Indigenous community where local people reshaped an extractive agenda to align with their relational agenda. I describe the emerging paradigm of Relational NLP and explain how it opens the way to non-extractive methods and to solutions that enhance human agency…(More)”

Report by the World Economic Forum: “AI agents are autonomous systems capable of sensing, learning and acting upon their environments. This white paper explores their development and looks at how they are linked to recent advances in large language and multimodal models. It highlights how AI agents can enhance efficiency across sectors including healthcare, education and finance.

Tracing their evolution from simple rule-based programmes to sophisticated entities with complex decision-making abilities, the paper discusses both the benefits and the risks associated with AI agents. Ethical considerations such as transparency and accountability are emphasized, highlighting the need for robust governance frameworks and cross-sector collaboration.

By understanding the opportunities and challenges that AI agents present, stakeholders can responsibly leverage these systems to drive innovation, improve practices and enhance quality of life. This primer serves as a valuable resource for anyone seeking to gain a better grasp of this rapidly advancing field…(More)”.

Paper by the Centre for Information Policy Leadership: “Contemporary everyday life is increasingly permeated by digital information, whether by creating, consuming or depending on it. Most of our professional and private lives now rely to a large degree on digital interactions. As a result, access to and the use of data, and in particular personal data, are key elements and drivers of the digital economy and society. This has brought us to a significant inflection point on the issue of legitimising the processing of personal data in the wide range of contexts that are essential to our data-driven, AI-enabled digital products and services. The time has come to seriously re-consider the status of consent as a privileged legal basis and to consider alternatives that are better suited for a wide range of essential data processing contexts. The most prominent among these alternatives are the “legitimate interest” and “contractual necessity” legal bases, which have found an equivalent in a number of jurisdictions. One example is Singapore, where revisions to their data protection framework include a legitimate interest exemption…(More)”.

Article by David Spiegelhalter: “Life is uncertain. None of us know what is going to happen. We know little of what has happened in the past, or is happening now outside our immediate experience. Uncertainty has been called the ‘conscious awareness of ignorance’, be it of the weather tomorrow, the next Premier League champions, the climate in 2100 or the identity of our ancient ancestors.

In daily life, we generally express uncertainty in words, saying an event “could”, “might” or “is likely to” happen (or have happened). But uncertain words can be treacherous. When, in 1961, the newly elected US president John F. Kennedy was informed about a CIA-sponsored plan to invade communist Cuba, he commissioned an appraisal from his military top brass. They concluded that the mission had a 30% chance of success — that is, a 70% chance of failure. In the report that reached the president, this was rendered as “a fair chance”. The Bay of Pigs invasion went ahead, and was a fiasco. There are now established scales for converting words of uncertainty into rough numbers. Anyone in the UK intelligence community using the term ‘likely’, for example, should mean a chance of between 55% and 75% (see go.nature.com/3vhu5zc).

Attempts to put numbers on chance and uncertainty take us into the mathematical realm of probability, which today is used confidently in any number of fields. Open any science journal, for example, and you’ll find papers liberally sprinkled with P values, confidence intervals and possibly Bayesian posterior distributions, all of which are dependent on probability.

And yet, any numerical probability, I will argue — whether in a scientific paper, as part of weather forecasts, predicting the outcome of a sports competition or quantifying a health risk — is not an objective property of the world, but a construction based on personal or collective judgements and (often doubtful) assumptions. Furthermore, in most circumstances, it is not even estimating some underlying ‘true’ quantity. Probability, indeed, can only rarely be said to ‘exist’ at all…(More)”.

Article by Emilio Mariscal: “…After some exploration, I came up with an idea: what if we could export chat conversations and extract the location data along with the associated messages? The solution would involve a straightforward application where users can upload their exported chats and instantly generate a map displaying all shared locations and messages. No business accounts or complex integrations would be required—just a simple, ready-to-use tool from day one.

ChatMap (chatmap.hotosm.org) is a simple mapping solution that leverages WhatsApp, an application used by 2.78 billion people worldwide. Its simplicity and accessibility make it an effective tool for communities with limited technical knowledge. It even works offline: it relies on the GPS signal for location and sends all the data once the phone regains connectivity.

This solution provides complete independence, as it does not require users to adopt a technology that depends on third-party maintenance. It’s a simple data flow with an equally straightforward script that can be improved by anyone interested on GitHub.
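The data flow described above, from an exported chat to shared locations with their associated messages, can be sketched roughly as follows. This is a minimal illustration and not the ChatMap script itself. The line format of the export, the shape of the location link, and the rule that pairs a location with the sender's most recent text message are all assumptions made for the example, and `chat_to_geojson` is a hypothetical name.

```python
import json
import re

# Assumed shape of an exported chat line (real exports vary by platform and locale):
#   [12/01/24, 10:15:30] Ana: some text
LINE_RE = re.compile(r"^\[(?P<time>[^\]]+)\]\s(?P<sender>[^:]+):\s(?P<text>.*)$")

# Assumed shape of a shared location: a maps link carrying "q=<lat>,<lon>".
LOCATION_RE = re.compile(
    r"[?&]q=(?P<lat>-?\d+(?:\.\d+)?),(?P<lon>-?\d+(?:\.\d+)?)"
)


def chat_to_geojson(chat_text):
    """Turn exported chat text into a GeoJSON FeatureCollection of points."""
    features = []
    last_text = {}  # most recent non-location message, per sender
    for line in chat_text.splitlines():
        match = LINE_RE.match(line.strip())
        if match is None:
            continue  # skip lines that do not fit the assumed format
        sender, text = match["sender"], match["text"]
        location = LOCATION_RE.search(text)
        if location is None:
            last_text[sender] = text
            continue
        # Assumed pairing rule: attach the sender's latest text message.
        features.append({
            "type": "Feature",
            "geometry": {
                "type": "Point",
                # GeoJSON orders coordinates as [longitude, latitude].
                "coordinates": [float(location["lon"]), float(location["lat"])],
            },
            "properties": {
                "sender": sender,
                "time": match["time"],
                "message": last_text.pop(sender, ""),
            },
        })
    return {"type": "FeatureCollection", "features": features}


# Made-up sample data, for illustration only.
SAMPLE = """[12/01/24, 10:15:02] Ana: Landslide blocking the path
[12/01/24, 10:15:30] Ana: location: https://maps.google.com/?q=6.2442,-75.5812
[12/01/24, 10:20:11] Luis: Thanks!
"""

if __name__ == "__main__":
    print(json.dumps(chat_to_geojson(SAMPLE), indent=2))
```

The resulting GeoJSON can be loaded by most web mapping tools. A real export would need more careful handling of multi-line messages, media attachments, and date formats.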

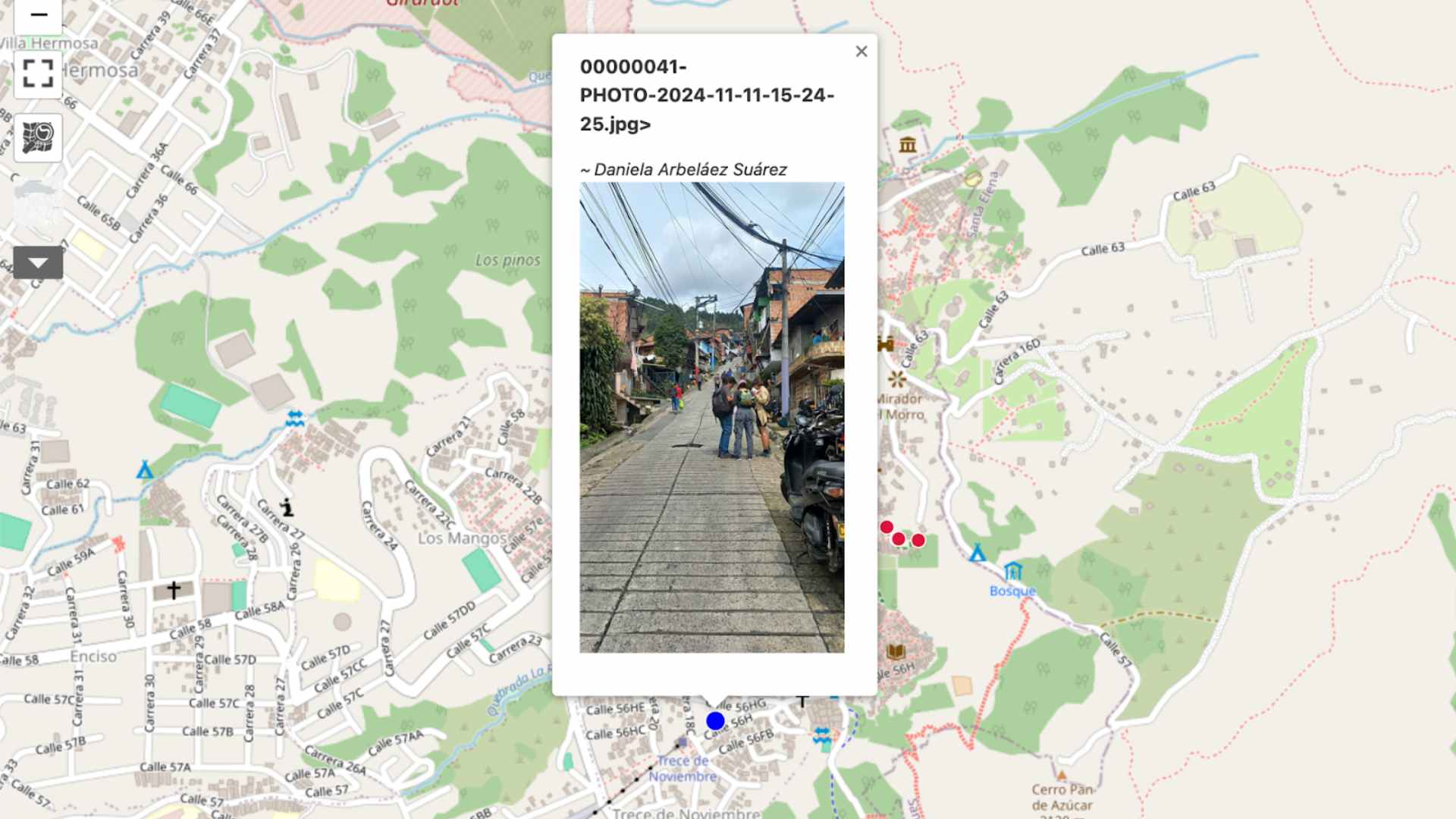

We’re already using it! Recently, as part of a community mapping project to assess the risks on the slopes of Comuna 8 in Medellín, an area vulnerable to repeated flooding, a group of students and local collectives collaborated with the Humanitarian OpenStreetMap Team (HOT) to map areas affected by landslides and other disaster impacts. This initiative facilitated the identification and characterization of settlements, supporting humanitarian aid efforts.

Photo by Daniela Arbeláez Suárez (source: WhatsApp)

As shown in the picture, the community explored the area on foot, using their phones to take photos and notes, and shared them along with the location. It was incredibly simple!

Twenty minutes later, once we had access to a Wi-Fi network, the data gathered during this activity was transformed into a map, which was then uploaded to our online platform powered by uMap (umap.hotosm.org)…(More)”.

See more at https://umap.hotosm.org/en/map/unaula-mapea-con-whatsapp_38