Stefaan Verhulst

Article by Matt Jancer: “About a year ago, AI began outpacing human writers on the internet. For every one article written by a real-life, blood-bag of a meat puppet, slightly more than one was written by a machine. Don’t get all twisted up about “slightly more than one” article; it’s fractions, my friends.

The news was broken when Graphite published a study showing that AI-written articles surpassed human-written articles by a small margin in November 2024.

“We find that in November 2024, the quantity of AI-generated articles being published on the web surpassed the quantity of human-written articles,” reads the report.

“We observe significant growth in AI-generated articles coinciding with the launch of ChatGPT in November 2022. After only 12 months, AI-generated articles accounted for nearly half (39%) of articles published. The raw data for this evaluation is available here.”

The authors randomly selected 65,000 English-language articles using Common Crawl. The criteria for the articles reviewed were that they were at least 100 words long and were published between January 2020 and May 2025. To determine whether they were AI-written, the authors used Surfer’s AI detector.

According to the study’s authors, most of these AI-written articles, though, don’t appear in either Google or ChatGPT. “We do not evaluate whether AI-generated articles are viewed in proportion by real users, but we suspect that they are not.” The authors didn’t speculate on why they weren’t, or for what purposes a largely invisible, AI-written article might be created…(More)”.

Article by Darryl Jones: “…We are in Brisbane for the biannual Australasian Ornithological Congress. Some of the biggest names in bird science and conservation have turned up to this session, eager to hear about the latest developments in eBird, the flagship program of the Cornell Lab of Ornithology, located in Ithaca, New York state.

Everyone uses eBird….“You know what I find astonishing about this data?” Wood continues. “It’s that the so-called experts, the professional researchers and consultants and full-time birders, people like us, provided a trivial proportion of all this data. What is genuinely exciting is that almost all of it was submitted by ordinary birders dedicating their time to recording birds wherever they are and submitting them. People like this.”

The map vanishes and a video starts. It’s a TikTok “story”, so we are told. Many of us would not know TikTok from Instagram. But the people on the screen certainly do!

A rapidly changing gallery of young people appears. Moving to the beat of the soundtrack, they talk enthusiastically about bird identification. With staccato editing and pulsating music, kids as young as ten rave about the Merlin app, how to use it and what makes it so cool. (Merlin allows anyone to identify any bird around the world on their phone by using guide-book-type identifying features as well as the species calls.)

These are kids! Rapping about bird ID! And giving advice on how to get your ID right! It is a stark example of how much the image of birding has changed…(More)”.

Article by Andrew Deck and Hanaa’ Tameez: “…News outlets’ homepages are vital historical records, providing a real-time view into what a newsroom deems the most important stories of the moment. From a homepage — headlines, word choice, story placement — readers get a sense of a newsroom’s editorial priorities and how they change over time. If homepages aren’t saved, records of those changes are lost.

The Wayback Machine, an initiative from the nonprofit Internet Archive, has been archiving the webpages of news outlets — alongside millions of other websites — for nearly three decades. Earlier this month, it announced that it will soon archive its trillionth web page. The Internet Archive has long stressed the importance of archiving homepages, particularly to fact-check politicians’ claims. In 2018, for instance, when Donald Trump accused Google of failing to promote his State of the Union address on its homepage, Google used the Wayback Machine’s archive of its homepage to disprove the statement.

“[Google’s] job isn’t to make copies of the homepage every 10 minutes,” Mark Graham, the director of the Wayback Machine, said at the time. “Ours is.”

But a Nieman Lab analysis shows that the Wayback Machine’s snapshots of news outlets’ homepages have plummeted in recent months. Between January 1 and May 15, 2025, the Wayback Machine shows a total of 1.2 million snapshots collected from 100 major news sites’ homepages. Between May 17 and October 1, 2025, it shows 148,628 snapshots from those same 100 sites — a decline of 87%. (You can see our data here.)…(More)”.

Article by Felix Gille and Federica Zavattaro: “Trust is essential for successful digital health initiatives. It does not emerge spontaneously but demands deliberate, targeted efforts. A four-step approach (understand context, identify levers, implement trust indicators and refine actions) supports practical implementation. With a comprehensive understanding of user trust and traits of trustworthy initiatives, it is important to shift from abstraction to practical action using a stepwise method that delivers tangible benefits and keeps trust from remaining theoretical.

Key Points

- Trust is vital for the acceptance of digital health initiatives and the broader digital transformation of health systems.

- Frameworks define principles that support the development and implementation of trustworthy digital health initiatives.

- Trust performance indicators enable ongoing evaluation and improvement of initiatives.

- Building trust demands proactivity, leadership, resources, system thinking and continuous action…(More)”.

White paper by The Impact Licensing Initiative (ILI): “…has introduced impact licensing as a strategic tool to maximise the societal and environmental value of research, innovation, and technology. Through this approach, ILI has successfully enabled the sustainable transfer of five technologies from universities and companies to impact-driven ventures, fostering a robust pipeline of innovations across diverse sectors such as clean energy, sustainable agriculture, healthcare, artificial intelligence, and education. This whitepaper, aimed at investment audiences and policymakers, outlines the basics of impact licensing and, based on early applications, demonstrates how its adoption can accelerate positive change in addressing societal challenges…(More)”.

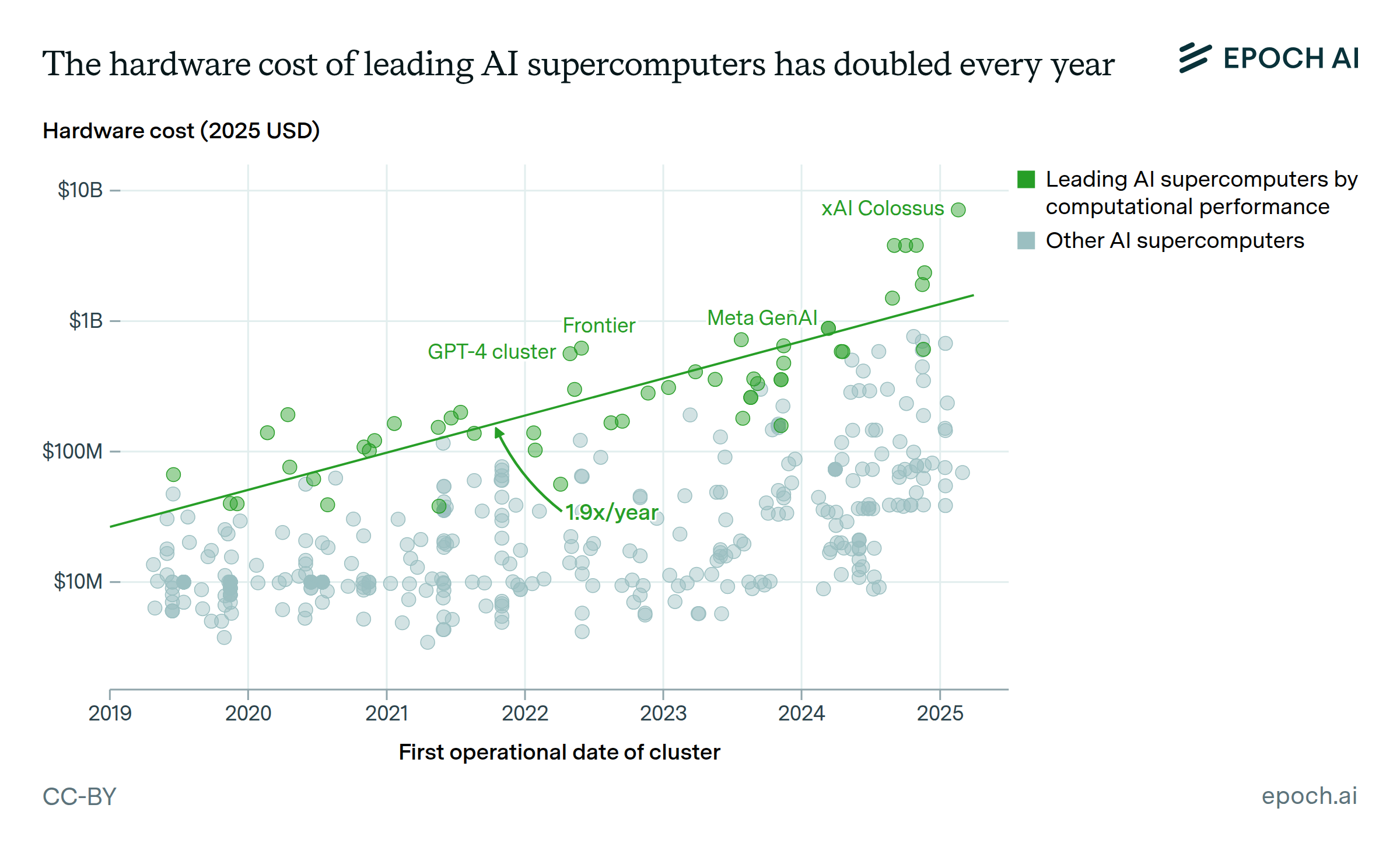

Report by Epoch: “What will happen if AI scaling persists to 2030? We are releasing a report that examines what this scale-up would involve in terms of compute, investment, data, hardware, and energy. We further examine the future AI capabilities this scaling will enable, particularly in scientific R&D, which is a focus for leading AI developers. We argue that AI scaling is likely to continue through 2030, despite requiring unprecedented infrastructure, and will deliver transformative capabilities across science and beyond.

Scaling is likely to continue until 2030: On current trends, frontier AI models in 2030 will require investments of hundreds of billions of dollars, and gigawatts of electrical power. Although these are daunting challenges, they are surmountable. Such investments will be justified if AI can generate corresponding economic returns by increasing productivity. If AI lab revenues keep growing at their current rate, they would generate returns that justify hundred-billion-dollar investments in scaling.

Scaling will lead to valuable AI capabilities: By 2030, AI will be able to implement complex scientific software from natural language, assist mathematicians formalising proof sketches, and answer open-ended questions about biology protocols. All of these examples are taken from existing AI benchmarks showing progress, where simple extrapolation suggests they will be solved by 2030. We expect AI capabilities will be transformative across several scientific fields, although it may take longer than 2030 to see them deployed to full effect…(More)”.

Paper by Jed Sundwall: “The COVID-19 pandemic revealed a striking lack of global data coordination among public institutions. While national governments and the World Health Organization struggled to collect and share timely information, a small team at Johns Hopkins University built a public dashboard that became the world’s most trusted source of COVID-19 data. Their work showed that planetary-scale data infrastructure can emerge quickly when practitioners act, even without formal authority. It also exposed a deeper truth: we cannot build global coordination on data without shared standards, yet it is unclear who gets to define what those standards might be.

This paper examines how the World Wide Web has created the conditions for useful data standards to emerge in the absence of any clear authority. It begins from the premise that standards are not just technical artefacts but the “connective tissue” that enables collaboration across institutions. They extend language itself, allowing people and systems to describe the world in compatible terms. Using the history of the web, the paper traces how small, loosely organised groups have repeatedly developed standards that now underpin global information exchange…(More)”.

Report by the Friedrich Naumann Foundation: “Despite the rapid global advancement of artificial intelligence, a significant disparity exists in its accessibility and benefits, disproportionately affecting low- and middle-income countries facing distinct socioeconomic hurdles. This policy paper compellingly argues for immediate, concerted international and national initiatives focused on strategic investments in infrastructure, capacity development, and ethical governance to ensure these nations can leverage AI as a powerful catalyst for equitable and sustainable progress…(More)”.

Blog by Beth Noveck: “Officials in Hamburg had long struggled with the fact that while citizens submitted thousands of comments on planning projects, only a fraction could realistically be read and processed. Making sense of feedback from a single engagement could once occupy five full-time employees for more than a week and chill any desire to do a follow-up conversation. Learn about how Hamburg built its own open source artificial intelligence to make sense of citizen feedback on a scale and speed that was once unimaginable…The Digital Participation System (DIPAS) is Hamburg, Germany’s integrated digital participation platform, designed to let residents contribute ideas, comments, and feedback on urban development projects online or in workshops. It combines mapping, document sharing, and discussion tools so that citizens can engage directly with concrete plans for their neighborhoods.

“We take the promise of participation seriously,” explained Claudius Lieven, one of DIPAS’s creators in Hamburg’s Ministry of Urban Development and Housing. “If people contribute, their collective intelligence must count. But with so many inputs, we simply couldn’t keep up.”

As a result, Lieven and his team spent three years integrating AI into the open-source system to make the new analytics toolbox more useful and the government more responsive. They combined the fine-tuning of Facebook’s advanced open-source language models LLaMA and RoBERTa with topic modeling and geodata integration.

With AI, DIPAS can cluster and summarize thousands of comments and distinguish between a “major position” about the current situation (for example, “The bike path is unsafe”) and an “idea” proposing what should be done (“The bike path should have better lighting”)…(More)”.

Article by Aivin Solatorio: “As artificial intelligence (AI) becomes a new gateway to development data, a quiet but significant risk has emerged. Large language models (LLMs) can now summarize reports, answer data queries, and interpret indicators in seconds. But while these tools promise convenience, they also raise a fundamental question: How can we ensure that the numbers they produce remain true to the official data they claim to represent?

AI access does not equal data integrity

Many AI systems today use retrieval-augmented generation (RAG), a technique that feeds models with content from trusted sources or databases. While it is widely viewed as a safeguard against hallucinations, it does not eliminate them. Even when an AI model retrieves the correct data, it may still generate outputs that deviate from it. It might round numbers to sound natural, merge disaggregated values, or restate statistics in ways that subtly alter their meaning. These deviations often go unnoticed because the AI still appears confident and precise to the end user.

Developers often measure such errors through evaluation experiments (or “evals”), reporting aggregate accuracy rates. But those averages mean little to a policymaker, journalist, or citizen interacting with an AI tool. What matters is not whether the model is usually correct, but whether the specific number it just produced is faithful to the official data.

Where Proof-Carrying Numbers come in

Proof-Carrying Numbers (PCN), a novel trust protocol developed by the AI for Data – Data for AI team, addresses this gap. It introduces a mechanism for verifying numerical faithfulness — that is, how closely the AI’s numbers match the trusted data they are based on — in real time.

Here’s how it works:

- The data passed to the LLM must include a claim identifier and a policy that defines acceptable behavior (e.g., exact match required, rounding allowed, etc.).

- The model is instructed to follow the PCN protocol when generating numbers based on that data.

- Each numeric output is checked against the reference data on which it was conditioned.

- If the result satisfies the policy, PCN marks it as verified [✓].

- If it deviates, PCN flags it for review [⚠️].

- Any numbers produced without explicit marks are assumed unverified and should be treated cautiously.

This is a fail-closed mechanism, a built-in safeguard that errs on the side of caution. When faithfulness cannot be proven, PCN does not assume correctness; instead, it makes that failure visible. This feature changes how users interact with AI: instead of trusting responses blindly, they can immediately see whether a number aligns with official data…(More)”.
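
The checking steps described in the excerpt can be illustrated with a minimal Python sketch. It is not the PCN team's implementation: the `<claim id="...">` tag format, the policy names `"exact"` and `"round"`, and the `Claim`, `is_faithful` and `annotate` names are all assumptions made for illustration. The sketch only shows the fail-closed idea: a number earns a verified mark when it can be checked against the reference data under the stated policy, anything that cannot be checked is flagged, and untagged numbers are left unmarked.

```python
import re
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation, ROUND_HALF_UP

VERIFIED = "[✓]"
FLAGGED = "[⚠️]"

# Hypothetical tag the model is asked to wrap around numbers it takes from the data.
TAG = re.compile(r'<claim id="([^"]+)">([^<]*)</claim>')


@dataclass(frozen=True)
class Claim:
    """Reference value plus the policy that defines acceptable output."""
    value: Decimal
    policy: str        # "exact" or "round" (assumed policy names)
    decimals: int = 0  # precision permitted under the "round" policy


def is_faithful(claim: Claim, stated: Decimal) -> bool:
    """Return True only if the stated number satisfies the claim's policy."""
    if claim.policy == "exact":
        return stated == claim.value
    if claim.policy == "round":
        step = Decimal(10) ** -claim.decimals
        rounded = claim.value.quantize(step, rounding=ROUND_HALF_UP)
        return stated in (claim.value, rounded)
    return False  # unknown policy: fail closed


def annotate(text: str, claims: dict[str, Claim]) -> str:
    """Replace each tagged number with the number followed by a mark."""
    def mark(match: re.Match) -> str:
        claim_id, raw = match.group(1), match.group(2).strip()
        claim = claims.get(claim_id)
        try:
            ok = claim is not None and is_faithful(claim, Decimal(raw))
        except InvalidOperation:
            ok = False  # unparseable number: fail closed
        return f"{raw} {VERIFIED if ok else FLAGGED}"

    return TAG.sub(mark, text)


# Illustrative reference data and model output (made-up values).
claims = {
    "growth": Claim(Decimal("7.46"), "round", decimals=1),
    "inflation": Claim(Decimal("3.2"), "exact"),
}
answer = (
    'Growth was <claim id="growth">7.5</claim>% and inflation was '
    '<claim id="inflation">3</claim>%, up from 2.1%.'
)
print(annotate(answer, claims))
```

In this example the growth figure is marked verified because rounding 7.46 to one decimal is allowed, the inflation figure is flagged because the policy demands an exact match, and the untagged 2.1 carries no mark, so a reader should treat it as unverified.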