Stefaan Verhulst

(Open Access) book edited by Bitange Ndemo and Tim Weiss: “Presenting rigorous and original research, this volume offers key insights into the historical, cultural, social, economic and political forces at play in the creation of world-class ICT innovations in Kenya. Following the arrival of fiber-optic cables in 2009, Digital Kenya examines why the initial entrepreneurial spirit and digital revolution has begun to falter despite support from motivated entrepreneurs, international investors, policy experts and others. Written by engaged scholars and professionals in the field, the book offers 15 eye-opening chapters and 14 one-on-one conversations with entrepreneurs and investors to ask why establishing ICT start-ups on a continental and global scale remains a challenge on the “Silicon Savannah”. The authors present evidence-based recommendations to help Kenya to continue producing globally impactful ICT innovations that improve the lives of those still waiting on the side-lines, and to inspire other nations to do the same….(More)”

Report for the BBC Trust: “The BBC, as the UK’s main public service broadcaster, has a particularly important role to play in bringing statistics to public attention and helping audiences to digest, understand and apply them to their daily lives. Accuracy and impartiality have a specific meaning when applied to statistics. Reporting accurately and impartially on critical and sometimes controversial topics requires understanding the data that informs them and accurate and impartial presentation of that data.

Overall, the BBC is to be commended in its approach to the use of statistics. People at the BBC place great value on using statistics responsibly. Journalists often go to some lengths to verify the statistics they receive. They exercise judgement when deciding which statistics to cover and the BBC has a strong record in selecting and presenting statistics effectively. Journalists and programme makers often make attempts to challenge conventional wisdom and provide independent assessments of stories reported elsewhere. Many areas of the BBC give careful thought to the way in which statistics are presented for audiences and the BBC has prioritised responsiveness to mistakes in recent years.

Informed by the evidence supporting this report, including Cardiff University’s content analysis and Oxygen Brand Consulting’s audience research study, we have nevertheless identified some areas for improvement. These include the following:

Contextualising statistics: Numbers are sometimes used by the BBC in ways which make it difficult for audiences to understand whether they are really big or small, worrying or not. Audiences have difficulty in particular in interpreting “big numbers”. And a number on its own, without trends or comparisons, rarely means much. We recommend that much more is done to ensure that statistics are always contextualised in such a way that audiences can understand their significance.

Interpreting, evaluating and “refereeing” statistics: …The BBC needs to get better and braver in interpreting and explaining rival statistics and guiding the audience.

Going beyond the headlines: There is also a need for more regular, deeper investigation of the figures underlying sources such as press releases. This is especially pertinent as the Government is the predominant source of statistics on the BBC. We cannot expect, and do not suggest it is necessary for, all journalists to have access to and a full understanding of every single statistic which is in the public domain. But there is a need to look beyond the headlines to ask how the figures were obtained and whether they seem sensible. Failure to dig deeper into the data also represents lost opportunities to provide new and broader insights on topical issues. For example, reporting GDP per head of population might give a different perspective on the economy than GDP alone, and we would like to see such analyses covered by the BBC more often. Geographic breakdowns could enhance reporting on the devolved UK.

We recommend that “Reality Check” becomes a permanent feature of the BBC’s activities, with a prominent online presence, reinforcing the BBC’s commitment to providing well-informed, accurate information on topical and important issues.

…The BBC needs to have the internal capacity to question press releases, relate them to other data sources and, if necessary, do some additional calculations – for example translating relative to absolute risk. There remains a need for basic training on, for example, percentages and percentage change, and nominal and real financial numbers….(More)”
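The basic numeracy the report asks for can be made concrete with a short Python sketch. It covers relative versus absolute risk, percentage versus percentage-point change, nominal versus real values, and totals versus per-head figures. The function names and every number in it are hypothetical, chosen only to keep the arithmetic easy to follow. None of them are drawn from the report or from BBC coverage.

```python
"""Arithmetic behind several statistical skills the BBC Trust report highlights.

Every number below is hypothetical and chosen only to keep the arithmetic easy to follow.
"""


def absolute_risk_change(baseline_risk: float, relative_increase: float) -> float:
    """Convert a relative risk increase into an absolute change in probability.

    baseline_risk: probability of the outcome without the exposure (0 to 1).
    relative_increase: the reported relative rise, e.g. 0.20 for "20% higher risk".
    """
    return baseline_risk * relative_increase


def percentage_change(old: float, new: float) -> float:
    """Relative change from old to new, expressed as a percentage."""
    if old == 0:
        raise ValueError("percentage change is undefined when the starting value is 0")
    return (new - old) / old * 100


def percentage_point_change(old_pct: float, new_pct: float) -> float:
    """Plain difference between two percentages, in percentage points."""
    return new_pct - old_pct


def to_real_terms(nominal: float, price_index: float, base_index: float = 100.0) -> float:
    """Deflate a nominal amount to base-period prices using a price index."""
    return nominal * base_index / price_index


def per_head_growth(total_growth_pct: float, population_growth_pct: float) -> float:
    """Growth of a per-head figure (e.g. GDP per head) given total and population growth."""
    return ((1 + total_growth_pct / 100) / (1 + population_growth_pct / 100) - 1) * 100


if __name__ == "__main__":
    # "20% higher risk" on a hypothetical baseline of 5 cases per 100 people.
    extra = absolute_risk_change(0.05, 0.20)
    print(f"Extra cases per 100 people: {extra * 100:.1f}")  # 1.0

    # A hypothetical rate moving from 4% to 5%.
    print(f"Change in points: {percentage_point_change(4.0, 5.0):.1f}")  # 1.0
    print(f"Percentage change: {percentage_change(4.0, 5.0):.1f}%")  # 25.0%

    # A nominal amount of 110 when the price index has risen from 100 to 110.
    print(f"Real value: {to_real_terms(110.0, 110.0):.1f}")  # 100.0

    # Total GDP up 2% while population grows 1%.
    print(f"GDP per head growth: {per_head_growth(2.0, 1.0):.2f}%")  # 0.99%
```

Read this way, a "20% higher risk" on a 5-in-100 baseline amounts to one extra case per 100 people. A move from 4% to 5% is a 1 percentage point rise but a 25% increase. Total growth of 2% alongside 1% population growth leaves per-head growth just under 1%.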

Book Review by Pat Kane in The New Scientist: “The cover of this book echoes its core anxiety. A giant foot presses down on a sullen, Michael Jackson-like figure – a besuited citizen coolly holding off its massive weight. This is a sinister image to associate with a volume (and its author, Cass Sunstein) that should be able to proclaim a decade of success in the government’s use of “behavioural science”, or nudge theory. But doubts are brewing about its long-term effectiveness in changing public behaviour – as well as about its selective account of evolved human nature.

Sunstein insists that the powers that be cannot avoid nudging us. Every shop floor plan, every new office design, every commercial marketing campaign, every public information campaign, is an “architecting of choices”. As anyone who ever tried to leave IKEA quickly will suspect, that endless, furniture-strewn path to the exit is no accident.

Nudges “steer people in particular directions, but also allow them to go their own way”. They are entreaties to change our habits, to accept old or new norms, but they presume that we are ultimately free to refuse the request.

However, our freedom is easily constrained by “cognitive biases”. Our brains, say the nudgers, are lazy, energy-conserving mechanisms, often overwhelmed by information. So a good way to ensure that people pay into their pensions, for example, is to set payment as a “default” in employment contracts, so the employee has to actively untick the box. Defaults of all kinds exploit our preference for inertia and the status quo in order to increase future security….

Sunstein makes useful distinctions between nudges and the other things governments and enterprises can do. Nudges are not “mandates” (laws, regulations, punishments). A mandate would be, for example, a rigorous and well-administered carbon tax, secured through a democratic or representative process. A “nudge” puts smiley faces on your energy bill, and compares your usage to that of the eco-efficient Joneses next door (nudgers like to game our herd-like social impulses).

In a fascinating survey section, which asks Americans and others what they actually think about being the subjects of the “architecting” of their choices, Sunstein discovers that “if people are told that they are being nudged, they will react adversely and resist”.

This is why nudge thinking may be faltering – its understanding of human nature unnecessarily (and perhaps expediently) downgrades our powers of conscious thought….(More)

See The Ethics of Influence: Government in the Age of Behavioral Science, Cass R. Sunstein, Cambridge University Press

Theo Douglas in GovTech: “U.S. public service agencies are closely eyeing emerging technologies, chiefly advanced analytics and predictive modeling, according to a new report from Accenture, but like their counterparts globally they must address talent and complexity issues before adoption rates will rise.

The report, Emerging Technologies in Public Service, compiled a nine-nation survey of IT officials across all levels of government in policing and justice, health and social services, revenue, border services, pension/Social Security and administration, and was released earlier this week.

It revealed a deep interest in emerging tech from the public sector, finding 70 percent of agencies are evaluating their potential — but a much lower adoption level, with just 25 percent going beyond piloting to implementation….

The revenue and tax industries have been early adopters of advanced analytics and predictive modeling, he said, while biometrics and video analytics are resonating with police agencies.

In Australia, the tax office found using voiceprint technology could save 75,000 work hours annually.

Closer to home, Utah Chief Technology Officer Dave Fletcher told Accenture that consolidating data centers into a virtualized infrastructure improved speed and flexibility, so some processes that once took weeks or months can now happen in minutes or hours.

Nationally, 70 percent of agencies have either piloted or implemented an advanced analytics or predictive modeling program. Biometrics and identity analytics were the next most popular technologies, with 29 percent piloting or implementing, followed by machine learning at 22 percent.

Globally, those numbers contrast with Australia, where 68 percent of government agencies have charged into piloting and implementing biometric and identity analytics programs, and with Germany and Singapore, where 27 percent and 57 percent of agencies, respectively, have piloted or adopted video analytics programs.

Overall, 78 percent of respondents said they either had machine-learning initiatives underway or had already implemented some machine-learning technologies.

The benefits of embracing emerging tech identified by respondents ranged from finding better ways of working through automation to innovating, developing new services and reducing costs.

Agencies told Accenture their No. 1 objective was increasing customer satisfaction. But 89 percent said they’d expect a return on implementing intelligent technology within two years. Four-fifths, or 80 percent, agreed intelligent tech would improve employees’ job satisfaction….(More).

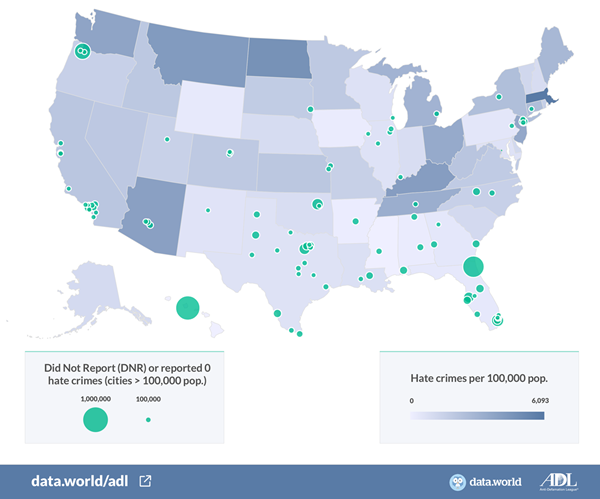

Press Release: “The Anti-Defamation League (ADL) and data.world today announced the launch of a public, open data workspace to help understand and combat the rise of hate crimes. The new workspace offers instant access to ADL data alongside relevant data from the FBI and other authoritative sources, and provides citizens, journalists and lawmakers with tools to more effectively analyze, visualize and discuss hate crimes across the United States.

The new workspace was unveiled at ADL’s inaugural “Never Is Now” Summit on Anti-Semitism, a daylong event bringing together nearly 1,000 people in New York City to hear from an array of experts on developing innovative new ways to combat anti-Semitism and bigotry….

Hate Crime Reporting Gaps

The color scale depicts total reported hate crime incidents per 100,000 people in each state. States with darker shading have more reported incidents of hate crimes while states with lighter shading have fewer reported incidents. The green circles proportionally represent cities that either Did Not Report hate crime data or affirmatively reported 0 hate crimes for the year 2015. Note the lightly shaded states in which many cities either Do Not Report or affirmatively report 0 hate crimes….(More)”
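The map's colour scale normalises counts to incidents per 100,000 people. The press release also separates cities that did not report from cities that reported zero. The Python sketch below shows one way to keep those two cases apart when computing a rate. The `CityReport` record, its fields and the city figures are hypothetical illustrations. They are not ADL's or the FBI's data schema, and the sketch is not necessarily how the workspace computes its shading.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CityReport:
    """One city's hate crime submission for a year (hypothetical schema)."""
    name: str
    population: int
    incidents: Optional[int]  # None = did not report; 0 = affirmatively reported zero


def rate_per_100k(incidents: int, population: int) -> float:
    """Incidents per 100,000 residents."""
    return incidents * 100_000 / population


def reported_rate(reports: List[CityReport]) -> Optional[float]:
    """Rate per 100,000 across only the cities that submitted data.

    Returns None when no city reported, since a rate of 0 would be misleading.
    """
    reporting = [r for r in reports if r.incidents is not None]
    population = sum(r.population for r in reporting)
    if population == 0:
        return None
    incidents = sum(r.incidents for r in reporting)
    return rate_per_100k(incidents, population)


def reporting_gaps(reports: List[CityReport]) -> List[str]:
    """Cities that either did not report or reported exactly 0 incidents."""
    return [r.name for r in reports if r.incidents is None or r.incidents == 0]


if __name__ == "__main__":
    cities = [
        CityReport("City A", 200_000, 30),
        CityReport("City B", 50_000, None),
        CityReport("City C", 100_000, 0),
    ]
    print(reported_rate(cities))   # 10.0
    print(reporting_gaps(cities))  # ['City B', 'City C']
```

Using `None` for "did not report" rather than 0 keeps non-reporting cities out of the denominator. It also lets them be listed separately. This is the distinction the press release is drawing when it points to lightly shaded states where many cities either do not report or report zero.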

Cary Coglianese: “The widespread public angst that surfaced in the 2016 presidential election revealed how many Americans believe their government has become badly broken. Given the serious problems that continue to persist in society — crime, illiteracy, unemployment, poverty, discrimination, to name a few — widespread beliefs in a governmental breakdown are understandable. Yet such a breakdown is actually far from self-evident. In this paper, I explain how diagnoses of governmental performance depend on the perspective from which current conditions in the country are viewed. Certainly when judged against a standard of perfection, America has a long way to go. But perfection is no meaningful basis upon which to conclude that government has broken down. I offer and assess three alternative, more realistic benchmarks of government’s performance: (1) reliance on a standard of acceptable imperfection; (2) comparisons with other countries or time periods; and (3) the use of counterfactual inferences. Viewed from these perspectives, the notion of an irreparable governmental failure in the United States becomes quite questionable. Although serious economic and social shortcomings certainly exist, the nation’s strong economy and steadily improving living conditions in recent decades simply could not have occurred if government were not functioning well. Rather than embracing despair and giving in to cynicism and resignation, citizens and their leaders would do better to treat the nation’s problems as conditions of disrepair needing continued democratic engagement. It remains possible to achieve greater justice and better economic and social conditions for all — but only if we, the people, do not give up on the pursuit of these goals….(More)”

John Gastil and Robert C. Richards Jr. in Political Science & Politics (Forthcoming): “Recent advances in online civic engagement tools have created a digital civic space replete with opportunities to craft and critique laws and rules or evaluate candidates, ballot measures, and policy ideas. These civic spaces, however, remain largely disconnected from one another, such that tremendous energy dissipates from each civic portal. Long-term feedback loops also remain rare. We propose addressing these limitations by building an integrative online commons that links together the best existing tools by making them components in a larger “Democracy Machine.” Drawing on gamification principles, this integrative platform would provide incentives for drawing new people into the civic sphere, encourage more sustained and deliberative engagement, and provide feedback to government and citizen alike to improve how the public interfaces with the public sector. After describing this proposed platform, we consider the most challenging problems it faces and how to address them….(More)”

“Crowdjury is an online platform that crowdsources judicial proceedings: filing of complaints, evaluation of evidence, trial and jury verdict.

Crowdjury algorithms are optimized to reach a true verdict for each case, quickly and at minimal cost.

Do well by doing good

Jurors and Researchers from the crowd are rewarded in Bitcoin. You help do good, you earn money….

Want to know more? Read the White Paper.”

Eric Mack at CNET: “Fighting the scourge of fake news online is one of the unexpected new crusades emerging from the fallout of Donald Trump’s upset presidential election win last week. Not surprisingly, the internet has no shortage of ideas for how to get its own house in order.

Eli Pariser, author of the seminal book “The Filter Bubble” that presaged some of the consequences of online platforms that tend to sequester users into non-overlapping ideological silos, is leading an inspired brainstorming effort via this open Google Doc.

Pariser put out the public call to collaborate via Twitter on Thursday, and within 24 hours, 21 pages’ worth of bullet-pointed suggestions had already piled up in the doc….

Suggestions ranged from the common call for news aggregators and social media platforms to hire more human editors, to launching more media literacy programs or creating “credibility scores” for shared content and/or users who share or report fake news.

Many of the suggestions are aimed at Facebook, which has taken a heavy heaping of criticism since the election and a recent report that found the top fake election news stories saw more engagement on Facebook than the top real election stories….

In addition to the crowdsourced brainstorming approach, plenty of others are chiming in with possible solutions. Author, blogger and journalism professor Jeff Jarvis teamed up with entrepreneur and investor John Borthwick of Betaworks to lay out 15 concrete ideas for addressing fake news on Medium…. The Trust Project at Santa Clara University is working to develop solutions to attack fake news that include systems for author verification and citations….(More)

Trends in Big Data Research, a SAGE white paper: “Information of all kinds is now being produced, collected, and analyzed at unprecedented speed, breadth, depth, and scale. The capacity to collect and analyze massive data sets has already transformed fields such as biology, astronomy, and physics, but the social sciences have been comparatively slower to adapt, and the path forward is less certain. For many, the big data revolution promises to ask, and answer, fundamental questions about individuals and collectives, but large data sets alone will not solve major social or scientific problems. New paradigms being developed by the emerging field of “computational social science” will be needed not only for research methodology, but also for study design and interpretation, cross-disciplinary collaboration, data curation and dissemination, visualization, replication, and research ethics (Lazer et al., 2009). SAGE Publishing conducted a survey with social scientists around the world to learn more about researchers engaged in big data research and the challenges they face, as well as the barriers to entry for those looking to engage in this kind of research in the future. We were also interested in the challenges of teaching computational social science methods to students. The survey was fully completed by 9412 respondents, indicating strong interest in this topic among our social science contacts. Of respondents, 33 percent had been involved in big data research of some kind and, of those who have not yet engaged in big data research, 49 percent (3057 respondents) said that they are either “definitely planning on doing so in the future” or “might do so in the future.”…(More)”