Stefaan Verhulst

SDN: “In our study we have identified five different areas that are relevant for service design: policy making, cultural and organizational change, training and capacity building, citizen engagement, and digitization.

Service design is taking a role in “policy creation”. Not only does it bring in-depth insights into the needs and constraints of citizens that help to design policies that really work for citizens, it also enables and facilitates processes of co-creation with different stakeholders. Policies are perceived as a piece of design work that is in constant development, and they are made by people for people.

Service design is also taking a role in the process of cultural and organizational change. It collaborates with other experts in this field in order to enable change by reframing the challenges, by engaging stakeholders in the development of scenarios of futures that do not yet exist, and by prototyping envisioned scenarios. These processes change the role of public servants from experts to partners. It is no longer the public service doing something for citizens, but doing it with them.

This new way of thinking and working demands not only a change in mindset, but also in the way of doing things. Service design helps to build these new capacities. Very often it is a combination of teaching and learning by doing: in the process of capacity building, small service design projects can be undertaken that create a sense of what service design can do and how to do it.

In this sense, service design works alongside existing practices of citizen engagement and enriches them with the design approach. People are no longer victims of circumstances but creators of environments.

Very often we find that the digitalization of public services is the entrance door for designers. So enabling designers to expand their capacities and showcase how service design does not only polish the bits and bytes but really changes the way we live and work….(Full report)”

In the New York Times: “…Sharing data, both among the parts of a big police department and between the police and the private sector, “is a force multiplier,” he said.

Companies working with the military and intelligence agencies have long practiced these kinds of techniques, which the companies are bringing to domestic policing, in much the way surplus military gear has beefed up American SWAT teams.

Palantir first built up its business by offering products like maps of social networks of extremist bombers and terrorist money launderers, and figuring out efficient driving routes to avoid improvised explosive devices.

Palantir used similar data-sifting techniques in New Orleans to spot individuals most associated with murders. Law enforcement departments around Salt Lake City used Palantir to allow common access to 40,000 arrest photos, 520,000 case reports and information like highway and airport data — building human maps of suspected criminal networks.

People in the predictive business sometimes compare what they do to controlling the other side’s “OODA loop,” a term first developed by a fighter pilot and military strategist named John Boyd.

OODA stands for “observe, orient, decide, act” and is a means of managing information in battle.

“Whether it’s war or crime, you have to get inside the other side’s decision cycle and control their environment,” said Robert Stasio, a project manager for cyberanalysis at IBM, and a former United States government intelligence official. “Criminals can learn to anticipate what you’re going to do and shift where they’re working, employ more lookouts.”

IBM sells tools that also enable police to become less predictable, for example, by taking different routes into an area identified as a crime hotspot. It has also conducted studies that show changing tastes among online criminals — for example, a move from hacking retailers’ computers to stealing health care data, which can be used to file for federal tax refunds.

But there are worries about what military-type data analysis means for civil liberties, even among the companies that get rich on it.

“It definitely presents challenges to the less sophisticated type of criminal, but it’s creating a lot of what is called ‘Big Brother’s little helpers,’” Mr. Bowman said. For now, he added, much of the data abundance problem is that “most police aren’t very good at this.”…(More)”

Ricardo Hausmann at Project Syndicate: “Public-private cooperation or coordination is receiving considerable attention nowadays. A plethora of centers for the study of business and government relations have been created, and researchers have produced a large literature on the design, analysis, and evaluation of public-private partnerships. Even the World Economic Forum has been transformed into “an international organization for public-private cooperation.”

Of course, private-private coordination has been the essence of economics for the past 250 years. While Adam Smith started us on the optimistic belief that an invisible hand would take care of most coordination issues, in the intervening period economists discovered all sorts of market failures, informational imperfections, and incentive problems, which have given rise to rules, regulations, and other forms of government and societal intervention. This year’s Nobel Prize in Economic Sciences was granted to Oliver Hart and Bengt Holmström for their contribution to understanding contracts, a fundamental device for private-private coordination.

But much less attention has been devoted to public-public coordination. This is surprising, because anyone who has worked in government knows that coordinating the public and private sectors to address a particular issue, while often complicated, is a cakewalk compared to the problem of herding the cats that constitute the panoply of government agencies.

The reason for this difficulty is the other side of Smith’s invisible hand. In the private sector, the market mechanism provides the elements of a self-organizing system, thanks to three interconnected structures: the price system, the profit motive, and capital markets. In the public sector, this mechanism is either non-existent or significantly different and less efficient.

The price system is a decentralized information system that reveals people’s willingness to buy or sell and the wisdom of buying some inputs in order to produce a certain output at the going market price. The profit motive provides an incentive system to respond to the information that prices contain. And capital markets mobilize resources for activities that are expected to be profitable, that is, those that adequately respond to prices.

By contrast, most public services have no prices, there is not supposed to be a profit motive in their provision, and capital markets are not supposed to choose what to fund: the money funds whatever is in the budget.

…addressing most problems in government involves multiple agencies….

One solution is to create a market-like mechanism within the government. The idea is to assign a portion of the budget, say 3-5%, to a central pool of funds to be requested by one ministry but to be executed by another, as if one were buying services from the other. These resources would allow the demand for public goods to permeate the allocation of budgetary resources across ministries….

The central pool of resources is designed to increase the responsiveness of one ministry’s back end to the demands of society as identified by another ministry’s front end, without these resources competing with the priorities that each ministry has for its “own” budget.

By allocating a small proportion of each year’s budget to priorities identified in this way, we may find that, over time, budgets become more responsive and better reflect society’s evolving needs. And public-private coordination may flourish once the public-public bottlenecks are removed….(More)”

Paper by Amy K. Glasmeier and Molly Nebiolo in Sustainability: “… explores the unique challenge of contemporary urban problems and the technologies that vendors have to solve them. An acknowledged gap exists between widely referenced technologies that city managers utilize to optimize scheduled operations and those that reflect the capability of spontaneity in search of nuance-laden solutions to problems related to the reflexivity of entire systems. With regulation, the first issue type succumbs to rehearsed preparation whereas the second hinges on extemporaneous practice. One is susceptible to ready-made technology applications while the other requires systemic deconstruction and solution-seeking redesign. Research suggests that smart city vendors are expertly configured to address the former, but less adept at and even ill-configured to react to and address the latter. Departures from status quo responses to systemic problems depend on formalizing metrics that enable city monitoring and data collection to assess “smart investments”, regardless of the size of the intervention, and to anticipate the need for designs that preserve the individuality of urban settings as they undergo the transformation to become “smart”….(More)”

New report by the Institute for Government (UK): “Making a Success of Digital Government says that after five years of getting more services online, government is hitting a wall. But despite some public services still running on last century’s computers, the real barrier to progress is not technology but the lack of political drive from the top.

Filling your tax return should be as easy as doing online banking. The technology exists. But outdated practices and policies mean that, in the case of many government services, we are still filling in and posting off forms to be manually processed. Taking digital government to the next level – which the report says would satisfy citizens and potentially save billions in the process – requires leadership. Civil servants need to improve their skills, old systems need to be overhauled, and policies need to be updated.

Daniel Thornton, report author, said:

“Tinkering around the edges of digital government has taken us only so far – now we need a fundamental change in the government’s approach. The starting point is recognising that digital is not just for geeks anymore – everyone in government must work to make it a success. There are huge potential savings to be made if the Government gets this right – which makes it all the more disappointing that the PM and Chancellor have not been as explicit about their commitment to digital government as their predecessors.”…(More)”

Over the past few years, we’ve taken unprecedented action to help Americans engage with their Government in new and meaningful ways.

Using Vote.gov, citizens can now quickly navigate their state’s voter registration process through an easy-to-use site. Veterans can go to Vets.gov to discover, apply for, track and manage their benefits in one, user-friendly place. And for the first time ever, citizens can send a note to President Obama simply by messaging the White House on Facebook.

By harnessing 21st Century technology and innovation, we’re improving the Federal Government’s ability to provide better citizen-centered services and are making the Federal Government smarter, savvier, and more effective for the American people. At the same time, we’re building many of these new digital tools, such as We the People, the White House Facebook bot, and Data.gov, in the open so that as the Government uses technology to re-imagine and improve the way people interact with it, others can too.

The code for these platforms is, after all, the People’s Code – and today we’re excited to announce that it’ll be accessible from one place, Code.gov, for the American people to explore, improve, and innovate.

The launch of Code.gov comes on the heels of the release of the Federal Source Code Policy, which seeks to further improve access to the Federal Government’s custom-developed software. It’s a step we took to help Federal agencies avoid duplicative custom software purchases and promote innovation and cross-agency collaboration. And it’s a step we took to enable the brightest minds inside and outside of government to work together to ensure that Federal code is reliable and effective.

Built in the open, the newly-launched Code.gov already boasts access to nearly 50 open source projects from over 10 agencies – and we expect this number to grow over the coming months as agencies work to implement the Federal Source Code Policy. Further, Code.gov will provide useful tools and best practices to help agencies implement the new policy. For example, starting today agencies can begin populating their enterprise code inventories using the metadata schema on Code.gov, discover various methods for building successful open source projects, and much more….(More)”

But the word ‘sharing’ also camouflages commercial or even exploitative relations. Websites say they share data with advertisers, although in reality they sell it, while parts of the sharing economy look a great deal like rental services. Ultimately, it is argued, practices described as sharing and critiques of those practices have common roots. Consequently, the metaphor of sharing now constructs significant swathes of our social practices and provides the grounds for critiquing them; it is a mode of participation in the capitalist order as well as a way of resisting it.

Drawing on nineteenth-century literature, Alcoholics Anonymous, the American counterculture, reality TV, hackers, Airbnb, Facebook and more, The Age of Sharing offers a rich account of a complex contemporary keyword. It will appeal to students and scholars of the Internet, digital culture and linguistics….(More)”

Why Is This Tool Unique?

- Only source of information on how foundations are supporting U.S. democracy

- Uses a common framework for understanding what activities foundations are funding

- Provides direct access to available funding data

- Delivers fresh and timely data every week

How Can You Use It?

- Understand who is funding what, where

- Analyze funder and nonprofit networks

- Compare foundation funding for issues you care about

- Support your knowledge about the field

- Discover new philanthropic partners…(More)”

Survey by Pew Global: “Signs of political discontent are increasingly common in many Western nations, with anti-establishment parties and candidates drawing significant attention and support across the European Union and in the United States. Meanwhile, as previous Pew Research Center surveys have shown, in emerging and developing economies there is widespread dissatisfaction with the way the political system is working.

As a new nine-country Pew Research Center survey on the strengths and limitations of civic engagement illustrates, there is a common perception that government is run for the benefit of the few, rather than the many, in both emerging democracies and more mature democracies that have faced economic challenges in recent years. In eight of nine nations surveyed, more than half say government is run for the benefit of only a few groups in society, not for all people.

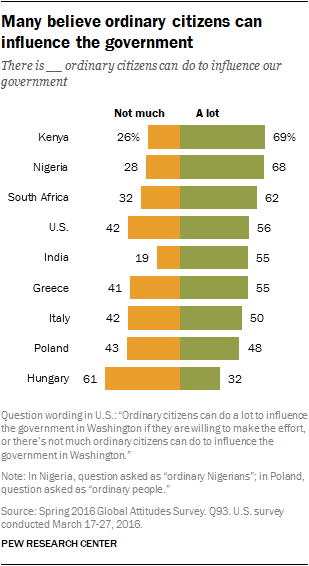

However, this skeptical outlook on government does not mean people have given up on democracy or the ability of average citizens to have an impact on how the country is run. Roughly half or more in eight nations – Kenya, Nigeria, South Africa, the U.S., India, Greece, Italy and Poland – say ordinary citizens can have a lot of influence on government. Hungary, where 61% say there is little citizens can do, is the lone nation where pessimism clearly outweighs optimism on this front.

Many people in these nine nations say they could potentially be motivated to become politically engaged on a variety of issues, especially poor health care, poverty and poor-quality schools. When asked what types of issues could get them to take political action, such as contacting an elected official or taking part in a protest, poor health care is the top choice among the six issues tested in six of eight countries. Health care, poverty and education constitute the top three motivators in all nations except India and Poland….(More)”