Stefaan Verhulst

Article by Jessica Hilburn: “This land was made for you and me, and so was the data collected with our taxpayer dollars. Open data is data that is accessible, shareable, and able to be used by anyone. While any person, company, or organization can create and publish open data, the federal and state governments are by far the largest providers of open data.

President Barack Obama codified the importance of government-created open data in his May 9, 2013, executive order as a part of the Open Government Initiative. This initiative was meant to “ensure the public trust and establish a system of transparency, public participation, and collaboration” in furtherance of strengthening democracy and increasing efficiency. The initiative also launched Project Open Data (since replaced by the Resources.data.gov platform), which documented best practices and offered tools so government agencies in every sector could open their data and contribute to the collective public good. As has been made readily apparent, the era of public good through open data is now under attack.

Immediately after his inauguration, President Donald Trump signed a slew of executive orders, many of which targeted diversity, equity, and inclusion (DEI) for removal in federal government operations. Unsurprisingly, a large number of federal datasets include information dealing with diverse populations, equitable services, and inclusion of marginalized groups. Other datasets deal with information on topics targeted by those with nefarious agendas—vaccination rates, HIV/AIDS, and global warming, just to name a few. In the wake of these executive orders, datasets and website pages with blacklisted topics, tags, or keywords suddenly disappeared—more than 8,000 of them. In addition, President Trump fired the National Archivist, and top National Archives and Records Administration officials are being ousted, putting the future of our collective history at enormous risk.

While it is common practice to archive websites and information in the transition between administrations, it is unprecedented for the incoming administration to cull data altogether. In response, unaffiliated organizations are ramping up efforts to separately archive data and information for future preservation and access. Web scrapers are being used to grab as much data as possible, but since this method is automated, data requiring a login or bot challenger (like a captcha) is left behind. The future information gap that researchers will be left to grapple with could be catastrophic for progress in crucial areas, including weather, natural disasters, and public health. Though there are efforts to put out the fire, such as the federal order to restore certain resources, the people’s library is burning. The losses will be permanently felt…Data is a weapon, whether we like it or not. Free and open access to information—about democracy, history, our communities, and even ourselves—is the foundation of library service. It is time for anyone who continues to claim that libraries are not political to wake up before it is too late. Are libraries still not political when the Pentagon barred library access for tens of thousands of American children attending Pentagon schools on military bases while they examined and removed supposed “radical indoctrination” books? Are libraries still not political when more than 1,000 unique titles are being targeted for censorship annually, and soft censorship through preemptive restriction to avoid controversy is surely occurring and impossible to track? It is time for librarians and library workers to embrace being political.

In a country where the federal government now denies that certain people even exist, claims that children are being indoctrinated because they are being taught the good and bad of our nation’s history, and rescinds support for the arts, humanities, museums, and libraries, there is no such thing as neutrality. When compassion and inclusion are labeled the enemy and the diversity created by our great American experiment is lambasted as a social ill, claiming that libraries are neutral or apolitical is not only incorrect, it’s complicit. To update the quote, information is the weapon in the war of ideas. Librarians are the stewards of information. We don’t want to be the Americans who protested in 1933 at the first Nazi book burnings and then, despite seeing the early warning signs of catastrophe, retreated into the isolation of their own concerns. The people’s library is on fire. We must react before all that is left of our profession is ash…(More)”.

Paper by Devansh Saxena, et al: “Local and federal agencies are rapidly adopting AI systems to augment or automate critical decisions, efficiently use resources, and improve public service delivery. AI systems are being used to support tasks associated with urban planning, security, surveillance, energy and critical infrastructure, and support decisions that directly affect citizens and their ability to access essential services. Local governments act as the governance tier closest to citizens and must play a critical role in upholding democratic values and building community trust especially as it relates to smart city initiatives that seek to transform public services through the adoption of AI. Community-centered and participatory approaches have been central for ensuring the appropriate adoption of technology; however, AI innovation introduces new challenges in this context because participatory AI design methods require more robust formulation and face higher standards for implementation in the public sector compared to the private sector. This requires us to reassess traditional methods used in this space as well as develop new resources and methods. This workshop will explore emerging practices in participatory algorithm design – or the use of public participation and community engagement – in the scoping, design, adoption, and implementation of public sector algorithms…(More)”.

Paper by Pietro Gennari: “Over the last few years, the private sector has become a primary generator of data due to widespread digitisation of the economy and society, the use of social media platforms, and advancements of technologies like the Internet of Things and AI. Unlike traditional sources, these new data streams often offer real-time information and unique insights into people’s behaviour, social dynamics, and economic trends. However, the proprietary nature of most private sector data presents challenges for public access, transparency, and governance that have led to fragmented, often conflicting, data governance arrangements worldwide. This lack of coherence can exacerbate inequalities, limit data access, and restrict data’s utility as a global asset.

Within this context, data equity has emerged as one of the key principles at the basis of any proposal of new data governance framework. The term “data equity” refers to the fair and inclusive access, use, and distribution of data so that it benefits all sections of society, regardless of socioeconomic status, race, or geographic location. It involves making sure that the collection, processing, and use of data does not disproportionately benefit or harm any particular group and seeks to address disparities in data access and quality that can perpetuate social and economic inequalities. This is important because data systems significantly influence access to resources and opportunities in society. In this sense, data equity aims to correct imbalances that have historically affected various groups and to ensure that decision-making based on data does not perpetuate these inequities…(More)”.

Toolkit by Maria Claudia Bodino, Nathan da Silva Carvalho, Marcelo Cogo, Arianna Dafne Fini Storchi, and Stefaan Verhulst: “Despite the abundance of data, the excitement around AI, and the potential for transformative insights, many public administrations struggle to translate data into actionable strategies and innovations.

Public servants working with data-related initiatives need practical, easy-to-use resources designed to enhance the management of data innovation initiatives.

To address these needs, the iLab of DG DIGIT from the European Commission is developing an initial set of practical tools designed to facilitate and enhance the implementation of data-driven initiatives. The main building blocks of the first version of the Digital Innovation Toolkit include:

- A Repository of educational materials and resources on the latest data innovation approaches from public sector, academia, NGOs and think tanks

- An initial set of practical resources, some examples:

  - Workshop Templates to offer structured formats for conducting productive workshops that foster collaboration, ideation, and problem-solving.

  - Checklists to ensure that all data journey aspects and steps are properly assessed.

  - Interactive Exercises to engage team members in hands-on activities that build skills and facilitate understanding of key concepts and methodologies.

  - Canvas Models to provide visual frameworks for planning and brainstorming….(More)”.

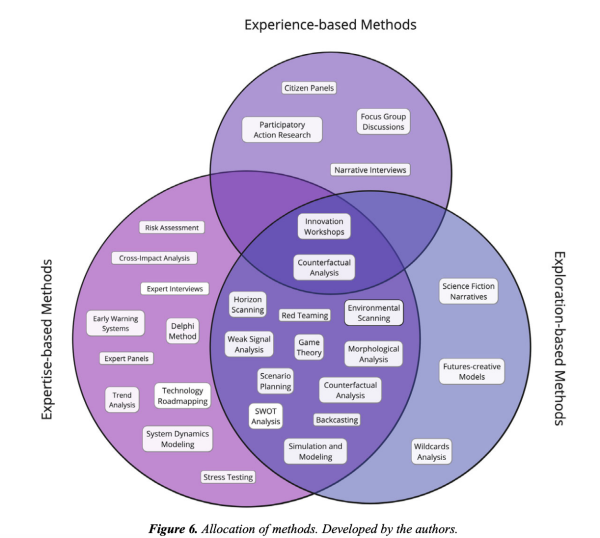

Paper by Sara Marcucci, Stefaan Verhulst and María Esther Cervantes: “The various global refugee and migration events of the last few years underscore the need for advancing anticipatory strategies in migration policy. The struggle to manage large inflows (or outflows) highlights the demand for proactive measures based on a sense of the future. Anticipatory methods, ranging from predictive models to foresight techniques, emerge as valuable tools for policymakers. These methods, now bolstered by advancements in technology and leveraging nontraditional data sources, can offer a pathway to develop more precise, responsive, and forward-thinking policies.

This paper seeks to map out the rapidly evolving domain of anticipatory methods in the realm of migration policy, capturing the trend toward integrating quantitative and qualitative methodologies and harnessing novel tools and data. It introduces a new taxonomy designed to organize these methods into three core categories: Experience-based, Exploration-based, and Expertise-based. This classification aims to guide policymakers in selecting the most suitable methods for specific contexts or questions, thereby enhancing migration policies…(More)”

Nature Editorial: “Of the myriad applications of artificial intelligence (AI), its use in humanitarian assistance is underappreciated. In 2020, during the COVID-19 pandemic, Togo’s government used AI tools to identify tens of thousands of households that needed money to buy food, as Nature reports in a News Feature this week. Typically, potential recipients of such payments would be identified when they apply for welfare schemes, or through household surveys of income and expenditure. But such surveys were not possible during the pandemic, and the authorities needed to find alternative means to help those in need. Researchers used machine learning to comb through satellite imagery of low-income areas and combined that knowledge with data from mobile-phone networks to find eligible recipients, who then received a regular payment through their phones. Using AI tools in this way was a game-changer for the country.

Now, with the pandemic over, researchers and policymakers are continuing to see how AI methods can be used in poverty alleviation. This needs comprehensive and accurate data on the state of poverty in households. For example, to be able to help individual families, authorities need to know about the quality of their housing, their children’s diets, their education and whether families’ basic health and medical needs are being met. This information is typically obtained from in-person surveys. However, researchers have seen a fall in response rates when collecting these data.

Missing data

Gathering survey-based data can be especially challenging in low- and middle-income countries (LMICs). In-person surveys are costly to do and often miss some of the most vulnerable, such as refugees, people living in informal housing or those who earn a living in the cash economy. Some people are reluctant to participate out of fear that there could be harmful consequences — deportation in the case of undocumented migrants, for instance. But unless their needs are identified, it is difficult to help them.

Could AI offer a solution? The short answer is, yes, although with caveats. The Togo example shows how AI-informed approaches helped communities by combining knowledge of geographical areas of need with more-individual data from mobile phones. It’s a good example of how AI tools work well with granular, household-level data. Researchers are now homing in on a relatively untapped source for such information: data collected by citizen scientists, also known as community scientists. This idea deserves more attention and more funding.

Thanks to technologies such as smartphones, Wi-Fi and 4G, there has been an explosion of people in cities, towns and villages collecting, storing and analysing their own social and environmental data. In Ghana, for example, volunteer researchers are collecting data on marine litter along the coastline and contributing this knowledge to their country’s official statistics…(More)”.

OECD article: “Value-added tax (VAT) is a consumption tax applied at each stage of the supply chain whenever value is added to goods or services. Businesses collect and remit VAT. The VAT data that are collected represent a breakthrough in studying production networks because they capture actual transactions between firms at an unprecedented level of detail. Unlike traditional business surveys or administrative data that might tell us about a firm’s size or industry, VAT records show us who does business with whom and for how much.

These data are particularly valuable because of their comprehensive coverage. In Estonia, for example, all VAT-registered businesses must report transactions above €1,000 per month, creating an almost complete picture of significant business relationships in the economy.

At least 15 countries now have such data available, including Belgium, Chile, Costa Rica, Estonia, and Italy. This growing availability creates opportunities for cross-country comparison and broader economic insights…(More)”.
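As a rough illustration of how transaction-level VAT records can be turned into a production network, the sketch below aggregates firm-to-firm records into a weighted, directed graph. Everything in it is an assumption for illustration rather than something taken from the OECD article: the record layout `(seller, buyer, month, amount)`, the function name `build_network`, the made-up firms, and the reading of the Estonian rule as a €1,000 threshold applied to each seller-buyer pair per month.

```python
from collections import defaultdict

# Hypothetical reporting threshold in EUR per seller-buyer pair per month,
# loosely modelled on the Estonian example; the exact rule is an assumption.
THRESHOLD_EUR = 1000.0


def build_network(records, threshold=THRESHOLD_EUR):
    """Aggregate (seller, buyer, month, amount) records into a directed network.

    Only seller-buyer pairs whose total for a given month exceeds the
    threshold are kept. Edge weights are the sum of those monthly totals.
    """
    # Step 1: total sales for each seller-buyer pair in each month.
    monthly = defaultdict(float)
    for seller, buyer, month, amount in records:
        monthly[(seller, buyer, month)] += amount

    # Step 2: keep only months above the threshold and sum them per pair.
    network = defaultdict(dict)
    for (seller, buyer, _month), total in monthly.items():
        if total > threshold:
            network[seller][buyer] = network[seller].get(buyer, 0.0) + total

    return {seller: dict(buyers) for seller, buyers in network.items()}


# Made-up records, for illustration only.
records = [
    ("A", "B", "2024-01", 800.0),
    ("A", "B", "2024-01", 700.0),   # A -> B totals 1,500 in January: kept
    ("A", "C", "2024-01", 400.0),   # below the threshold: dropped
    ("B", "C", "2024-02", 2500.0),  # kept
]
network = build_network(records)
print(network)
```

In this toy input, the A-to-C relationship falls below the threshold and never enters the network, which is why such data give an "almost complete" rather than complete picture of business relationships.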

Article by Karen Zraick: “Organic farmers and environmental groups sued the Agriculture Department on Monday over its scrubbing of references to climate change from its website.

The department had ordered staff to take down pages focused on climate change on Jan. 30, according to the suit, which was filed in the United States District Court for the Southern District of New York. Within hours, it said, information started disappearing.

That included websites containing data sets, interactive tools and funding information that farmers and researchers relied on for planning and adaptation projects, according to the lawsuit.

At the same time, the department also froze funding that had been promised to businesses and nonprofits through conservation and climate programs. The purge then “removed critical information about these programs from the public record, denying farmers access to resources they need to advocate for funds they are owed,” it said.

The Agriculture Department referred questions about the lawsuit to the Justice Department, which did not immediately respond to a request for comment.

The suit was filed by lawyers from Earthjustice, based in San Francisco, and the Knight First Amendment Institute at Columbia University, on behalf of the Northeast Organic Farming Association of New York, based in Binghamton; the Natural Resources Defense Council, based in New York; and the Environmental Working Group, based in Washington. The latter two groups relied on the department website for their research and advocacy, the lawsuit said.

Peter Lehner, a lawyer for Earthjustice, said the pages being purged were crucial for farmers facing risks linked to climate change, including heat waves, droughts, floods, extreme weather and wildfires. The websites had contained information about how to mitigate dangers and adopt new agricultural techniques and strategies. Long-term weather data and trends are valuable in the agriculture industry for planning, research and business strategy.

“You can purge a website of the words climate change, but that doesn’t mean climate change goes away,” Mr. Lehner said…(More)”.

Report by the Tony Blair Institute for Global Change: “The United Kingdom should lead the world in artificial-intelligence-driven innovation, research and data-enabled public services. It has the data, the institutions and the expertise to set the global standard. But without the right infrastructure, these advantages are being wasted.

The UK’s data infrastructure, like that of every nation, is built around outdated assumptions about how data create value. It is fragmented and unfit for purpose. Public-sector data are locked in silos, access is slow and inconsistent, and there is no system to connect and use these data effectively, or any framework for deciding what additional data would be most valuable to collect given AI’s capabilities.

As a result, research is stalled, AI adoption is held back, and the government struggles to plan services, target support and respond to emerging challenges. This affects everything from developing new treatments to improving transport, tackling crime and ensuring economic policies help those who need them. While some countries are making progress in treating existing data as strategic assets, none have truly reimagined data infrastructure for an AI-enabled future…(More)”

Paper by Urs Gasser and Viktor Mayer-Schonberger: “…International harmonization of regulation is the right strategy when the appropriate regulatory ends and means are sufficiently clear to reap efficiencies of scale and scope. When this is not the case, a push for efficiency through uniformity is premature and may lead to a suboptimal regulatory lock-in: the establishment of a rule framework that is either inefficient in the use of its means to reach the intended goal, or furthers the wrong goal, or both.

A century ago, economist Joseph Schumpeter suggested that companies have two distinct strategies to achieve success. The first is to employ economies of scale and scope to lower their cost. It’s essentially a push for improved efficiency. The other strategy is to invent a new product (or production process) that may not, at least initially, be hugely efficient, but is nevertheless advantageous because demand for the new product is price inelastic. For Schumpeter this was the essence of innovation. But, as Schumpeter also argued, innovation is not a simple, linear, and predictable process. Often, it happens in fits and starts, and can’t be easily commandeered or engineered.

As innovation is hard to foresee and plan, the best way to facilitate it is to enable a wide variety of different approaches and solutions. Public policies in many countries to foster startups and entrepreneurship stem from this view. Take, for instance, the policy of regulatory sandboxing, i.e. the idea that for a limited time certain sectors should not or only lightly be regulated…(More)”.