Open Data Institute and Professor Nigel Shadbolt (@Nigel_Shadbolt) interviewed by Alex Howard (@digiphile): “…there are some clear learnings. One that I’ve been banging on about recently has been that yes, it really does matter to turn the dial so that governments have a presumption to publish non-personal public data. If you would publish it anyway, under a Freedom of Information request or whatever your local legislative equivalent is, why aren’t you publishing it anyway as open data? That, as a behavioral change, is a big one for many administrations where either the existing workflow or culture is, “Okay, we collect it. We sit on it. We do some analysis on it, and we might give it away piecemeal if people ask for it.” We should construct publication process from the outset to presume to publish openly. That’s still something that we are two or three years away from, working hard with the public sector to work out how to do and how to do properly.

We’ve also learned that in many jurisdictions, the amount of [open data] expertise within administrations and within departments is slight. There just isn’t really the skillset, in many cases, for people to know what it is to publish using technology platforms. So there’s a capability-building piece, too.

One of the most important things is it’s not enough to just put lots and lots of datasets out there. It would be great if the “presumption to publish” meant they were all out there anyway — but when you haven’t got any datasets out there and you’re thinking about where to start, the tough question is to say, “How can I publish data that matters to people?”

The data that matters is revealed in the fact that if we look at the download stats on these various UK, US and other [open data] sites, there’s a very, very distinctive parallel curve. Some datasets are very, very heavily utilized. You suspect they have high utility to many, many people. Many of the others, if they can be found at all, aren’t being used particularly much. That’s not to say that, under that long tail, there isn’t large amounts of use. A particularly arcane open dataset may have exquisite use to a small number of people.

The real truth is that it’s easy to republish your national statistics. It’s much harder to do a serious job on publishing your spending data in detail, publishing police and crime data, publishing educational data, publishing actual overall health performance indicators. These are tough datasets to release. As people are fond of saying, it holds politicians’ feet to the fire. It’s easy to build a site that’s full of stuff — but does the stuff actually matter? And does it have any economic utility?”

The Big Data Debate: Correlation vs. Causation

Gil Press: “In the first quarter of 2013, the stock of big data has experienced sudden declines followed by sporadic bouts of enthusiasm. The volatility—a new big data “V”—continues and Ted Cuzzillo summed up the recent negative sentiment in “Big data, big hype, big danger” on SmartDataCollective:

“A remarkable thing happened in Big Data last week. One of Big Data’s best friends poked fun at one of its cornerstones: the Three V’s. The well-networked and alert observer Shawn Rogers, vice president of research at Enterprise Management Associates, tweeted his eight V’s: ‘…Vast, Volumes of Vigorously, Verified, Vexingly Variable Verbose yet Valuable Visualized high Velocity Data.’ He was quick to explain to me that this is no comment on Gartner analyst Doug Laney’s three-V definition. Shawn’s just tired of people getting stuck on V’s.”…

Cuzzillo is joined by a growing chorus of critics that challenge some of the breathless pronouncements of big data enthusiasts. Specifically, it looks like the backlash theme-of-the-month is correlation vs. causation, possibly in reaction to the success of Viktor Mayer-Schönberger and Kenneth Cukier’s recent big data book in which they argued for dispensing “with a reliance on causation in favor of correlation”…

In “Steamrolled by Big Data,” The New Yorker’s Gary Marcus declares that “Big Data isn’t nearly the boundless miracle that many people seem to think it is.”…

Matti Keltanen at The Guardian agrees, explaining “Why ‘lean data’ beats big data.” Writes Keltanen: “…the lightest, simplest way to achieve your data analysis goals is the best one…The dirty secret of big data is that no algorithm can tell you what’s significant, or what it means. Data then becomes another problem for you to solve. A lean data approach suggests starting with questions relevant to your business and finding ways to answer them through data, rather than sifting through countless data sets. Furthermore, purely algorithmic extraction of rules from data is prone to creating spurious connections, such as false correlations… today’s big data hype seems more concerned with indiscriminate hoarding than helping businesses make the right decisions.”

In “Data Skepticism,” O’Reilly Radar’s Mike Loukides adds this gem to the discussion: “The idea that there are limitations to data, even very big data, doesn’t contradict Google’s mantra that more data is better than smarter algorithms; it does mean that even when you have unlimited data, you have to be very careful about the conclusions you draw from that data. It is in conflict with the all-too-common idea that, if you have lots and lots of data, correlation is as good as causation.”

Isn’t more-data-is-better the same as correlation-is-as-good-as-causation? Or, in the words of Chris Anderson, “with enough data, the numbers speak for themselves.”

“Can numbers actually speak for themselves?” non-believer Kate Crawford asks in “The Hidden Biases in Big Data” on the Harvard Business Review blog and answers: “Sadly, they can’t. Data and data sets are not objective; they are creations of human design…

And David Brooks in The New York Times, while probing the limits of “the big data revolution,” takes the discussion to yet another level: “One limit is that correlations are actually not all that clear. A zillion things can correlate with each other, depending on how you structure the data and what you compare. To discern meaningful correlations from meaningless ones, you often have to rely on some causal hypothesis about what is leading to what. You wind up back in the land of human theorizing…”
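Brooks’s point that “a zillion things can correlate with each other” can be illustrated with a small simulation. The sketch below is not taken from any of the articles quoted above; the function names and the choice of 200 series of 20 points are assumptions made for illustration. It generates series of pure random noise, which have no causal link by construction, and reports the strongest correlation found among all pairs.

```python
import random
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def strongest_chance_correlation(n_series, n_points, seed=0):
    """Largest |r| among all pairs of independent random-noise series.

    Every series is drawn independently, so any correlation found here
    is a coincidence of the sample, not a sign of a causal relationship.
    """
    rng = random.Random(seed)
    series = [
        [rng.gauss(0, 1) for _ in range(n_points)]
        for _ in range(n_series)
    ]
    return max(abs(pearson(a, b)) for a, b in combinations(series, 2))


if __name__ == "__main__":
    # Hypothetical sizes: 200 unrelated "indicators", 20 observations each.
    for count in (2, 20, 200):
        r = strongest_chance_correlation(count, 20)
        print(f"{count:>3} series -> strongest |r| among pairs: {r:.2f}")
```

Because the same seed is reused, each larger run contains the smaller run’s series, so the strongest correlation can only stay the same or grow as more series are compared. Telling a meaningful pair from a coincidental one still requires a causal hypothesis, which is the argument made in the passage above.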

The Next Great Internet Disruption: Authority and Governance

An essay by David Bollier and John Clippinger as part of their ongoing work of ID3, the Institute for Data-Driven Design: “As the Internet and digital technologies have proliferated over the past twenty years, incumbent enterprises nearly always resist open network dynamics with fierce determination, a narrow ingenuity and resistance…. But the inevitable rearguard actions to defend old forms are invariably overwhelmed by the new, network-based ones. The old business models, organizational structures, professional sinecures, cultural norms, etc., ultimately yield to open platforms.

When we look back on the past twenty years of Internet history, we can more fully appreciate the prescience of David P. Reed’s seminal 1999 paper on “Group Forming Networks” (GFNs). “Reed’s Law” posits that value in networks increases exponentially as interactions move from a broadcasting model that offers “best content” (in which value is described by n, the number of consumers) to a network of peer-to-peer transactions (where the network’s value is based on “most members” and mathematically described by n²). But by far the most valuable networks are based on those that facilitate group affiliations, Reed concluded. When users have tools for “free and responsible association for common purposes,” he found, the value of the network soars exponentially to 2ⁿ – a fantastically large number. This is the Group Forming Network. Reed predicted that “the dominant value in a typical network tends to shift from one category to another as the scale of the network increases.…”

What is really interesting about Reed’s analysis is that today’s world of GFNs, as embodied by Facebook, Twitter, Wikipedia and other Web 2.0 technologies, remains highly rudimentary. It is based on proprietary platforms (as opposed to open source, user-controlled platforms), and therefore provides only limited tools for members of groups to develop trust and confidence in each other. This suggests a huge, unmet opportunity to actualize greater value from open networks. Citing Francis Fukuyama’s book Trust, Reed points out that “there is a strong correlation between the prosperity of national economies and social capital, which [Fukuyama] defines culturally as the ease with which people in a particular culture can form new associations.”
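The three growth rates the essay attributes to Reed’s Law (broadcast value n, peer-to-peer value n squared, and group-forming value 2 to the power n) can be compared directly. The sketch below is only an illustration: the function names are made up for this example, and it uses the bare formulas as quoted, with no scaling constants, so the numbers show relative growth rather than any real measure of value.

```python
def network_values(n):
    """Value of an n-member network under the three models quoted above."""
    return {
        "broadcast": n,            # "best content": grows with n
        "peer_to_peer": n ** 2,    # "most members": grows with n squared
        "group_forming": 2 ** n,   # group affiliations: grows with 2 to the n
    }


def first_n_where_groups_dominate(limit=64):
    """Smallest n from which 2**n stays above n**2 (checked up to `limit`)."""
    last_not_ahead = 0
    for n in range(1, limit + 1):
        if 2 ** n <= n ** 2:
            last_not_ahead = n
    return last_not_ahead + 1


if __name__ == "__main__":
    for n in (10, 20, 30):
        values = network_values(n)
        print(n, values["broadcast"], values["peer_to_peer"], values["group_forming"])
    print("group-forming term leads from n =", first_n_where_groups_dominate())
```

Under these unscaled formulas the group-forming term overtakes the peer-to-peer term at only five members, and at thirty members it exceeds a billion while n squared is 900. This is the shift in dominant value that Reed describes as a network scales.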

Measuring Impact of Open and Transparent Governance

Mark Robinson @ OGP blog: “Eighteen months on from the launch of the Open Government Partnership in New York in September 2011, there is growing attention to what has been achieved to date. In the recent OGP Steering Committee meeting in London, government and civil society members were unanimous in the view that the OGP must demonstrate results and impact to retain its momentum and wider credibility. This will be a major focus of the annual OGP conference in London on 31 October and 1 November, with an emphasis on showcasing innovations, highlighting results and sharing lessons.

Much has been achieved in eighteen months. Membership has grown from 8 founding governments to 58. Many action plan commitments have been realised for the majority of OGP member countries. The Independent Reporting Mechanism has been approved and launched. Lesson learning and sharing experience is moving ahead….

The third type of results are the trickiest to measure: What has been the impact of openness and transparency on the lives of ordinary citizens? In the two years since the OGP was launched it may be difficult to find many convincing examples of such impact, but it is important to make a start in collecting such evidence.

Impact on the lives of citizens would be evident in improvements in the quality of service delivery, by making information on quality, access and complaint redressal public. A related example would be efficiency savings realised from publishing government contracts. Misallocation of public funds exposed through enhanced budget transparency is another. Action on corruption arising from bribes for services, misuse of public funds, or illegal procurement practices would all be significant results from these transparency reforms. A final example relates to jobs and prosperity, where government data in the public domain is utilised by the private sector to inform business investment decisions and create employment.

Generating convincing evidence on the impact of transparency reforms is critical to the longer-term success of the OGP. It is the ultimate test of whether lofty public ambitions announced in country action plans achieve real impacts to the benefit of citizens.”

Innovations in American Government Award

Press Release: “Today the Ash Center for Democratic Governance and Innovation at the John F. Kennedy School of Government, Harvard University announced the Top 25 programs in this year’s Innovations in American Government Award competition. These government initiatives represent the dedicated efforts of city, state, federal, and tribal governments and address a host of policy issues including crime prevention, economic development, environmental and community revitalization, employment, education, and health care. Selected by a cohort of policy experts, researchers, and practitioners, four finalists and one winner of the Innovations in American Government Award will be announced in the fall. A full list of the Top 25 programs is available here.

…A Culture of Innovation

A number of this year’s Top 25 programs foster a new culture of innovation through online collaboration and crowdsourcing. Signaling a new trend in government, these programs encourage the generation of smart solutions to existing and seemingly intractable public policy problems. LAUNCH—a partnership among NASA, USAID, the State Department, and NIKE—identifies and scales up promising global sustainability innovations created by individual citizens and organizations. The General Services Administration’s Challenge.gov uses crowdsourcing contests to solve government issues: government agencies post challenges, and the broader American public is awarded for submitting winning ideas. The Department of Transportation’s IdeaHub also uses an online platform to encourage its employees to communicate new ideas for making the department more adaptable and enterprising.”

An API for "We the People"

The White House Blog: “We can’t talk about We the People without getting into the numbers — more than 8 million users, more than 200,000 petitions, more than 13 million signatures. The sheer volume of participation is, to us, a sign of success.

And there’s a lot we can learn from a set of data that rich and complex, but we shouldn’t be the only people drawing from its lessons.

So starting today, we’re making it easier for anyone to do their own analysis or build their own apps on top of the We the People platform. We’re introducing the first version of our API, and we’re inviting you to use it.

Get started here: petitions.whitehouse.gov/developers

This API provides read-only access to data on all petitions that passed the 150 signature threshold required to become publicly-available on the We the People site. For those who don’t need real-time data, we plan to add the option of a bulk data download in the near future. Until that’s ready, an incomplete sample data set is available for download here.”
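As a rough illustration of the kind of analysis the post invites, the sketch below filters a petition listing by the 150-signature threshold mentioned above. The post does not describe the response format, so everything about the payload is an assumption: the `results`, `title` and `signatureCount` field names and the sample records are hypothetical, and no real endpoint is called. Treating exactly 150 signatures as passing the threshold is also an assumption.

```python
import json

# Threshold for public visibility, as stated in the post.
PUBLIC_THRESHOLD = 150

# Hypothetical payload: field names and records are invented for this sketch.
SAMPLE_RESPONSE = """
{
  "results": [
    {"title": "Example petition A", "signatureCount": 1200},
    {"title": "Example petition B", "signatureCount": 150},
    {"title": "Example petition C", "signatureCount": 149}
  ]
}
"""


def public_petitions(payload, threshold=PUBLIC_THRESHOLD):
    """Return petitions at or above the threshold, most-signed first."""
    petitions = json.loads(payload)["results"]
    visible = [p for p in petitions if p["signatureCount"] >= threshold]
    return sorted(visible, key=lambda p: p["signatureCount"], reverse=True)


if __name__ == "__main__":
    for petition in public_petitions(SAMPLE_RESPONSE):
        print(petition["signatureCount"], petition["title"])
```

Because the API is described as read-only, a client only ever needs to fetch and parse. The same function could be pointed at a downloaded bulk file once that option exists, provided the assumed field names are replaced with the documented ones.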

Frameworks for a Location–Enabled Society

Annual CGA Conference: “Location-enabled devices are weaving “smart grids” and building “smart cities;” they allow people to discover a friend in a shopping mall, catch a bus at its next stop, check surrounding air quality while walking down a street, or avoid a rain storm on a tourist route – now or in the near future. And increasingly they allow those who provide services to track, whether we are walking past stores on the street or seeking help in a natural disaster.

The Centre for Spatial Law and Policy based in Washington, DC, the Center for Geographic Analysis, the Belfer Center for Science and International Affairs and the Berkman Center for Internet and Society at Harvard University are co-hosting a two-day program examining the legal and policy issues that will impact geospatial technologies and the development of location-enabled societies. The event will take place at Harvard University on May 2-3, 2013…The goal is to explore the different dimensions of policy and legal concerns in geospatial technology applications, and to begin creating a policy and legal framework for a location-enabled society. Download the conference program brochure.”

New book: Disasters and the Networked Economy

Book description: “Mainstream quantitative analysis and simulations are fraught with difficulties and are intrinsically unable to deal appropriately with long-term macroeconomic effects of disasters. In this new book, J.M. Albala-Bertrand develops the themes introduced in his past book, The Political Economy of Large Natural Disasters (Clarendon Press, 1993), to show that societal networking and disaster localization constitute part of an essential framework to understand disaster effects and responses.

The author’s last book argued that disasters were a problem of development, rather than a problem for development. This volume takes the argument forward both in terms of the macroeconomic effects of disaster and development policy, arguing that economy and society are not inert objects, but living organisms. Using a framework based on societal networking and the economic localization of disasters, the author shows that societal functionality (defined as the capacity of a system to survive, reproduce and develop) is unlikely to be impaired by natural disasters.”

Department of Better Technology

Next City reports: “…opening up government can get expensive. That’s why two developers this week launched the Department of Better Technology, an effort to make open government tools cheaper, more efficient and easier to engage with.

As founder Clay Johnson explains in a post on the site’s blog, a federal website that catalogues databases on government contracts, which launched last year, cost $181 million to build — $81 million more than a recent research initiative to map the human brain.

“I’d like to say that this is just a one-off anomaly, but government regularly pays millions of dollars for websites,” writes Johnson, the former director of Sunlight Labs at the Sunlight Foundation and author of the 2012 book The Information Diet.

The first undertaking of Johnson and his partner, GovHub co-founder Adam Becker, is a tool meant to make it simpler for businesses to find government projects to bid on, as well as help officials streamline the process of managing procurements. In a pilot experiment, Johnson writes, the pair found that not only were bids coming in faster and at a reduced price, but more people were doing the bidding.

Per Johnson, “many of the bids that came in were from businesses that had not ordinarily contracted with the federal government before.”

The Department of Better Technology will accept five cities to test a beta version of this tool, called Procure.io, in 2013.”

Cities and Data

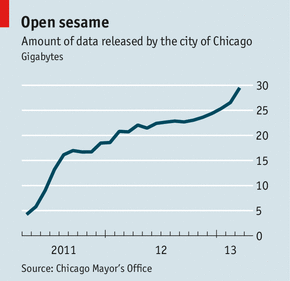

The Economist: “Many cities around the country find themselves in a similar position: they are accumulating data faster than they know what to do with. One approach is to give them to the public. For example, San Francisco, New York, Philadelphia, Boston and Chicago are or soon will be sharing the grades that health inspectors give to restaurants with an online restaurant directory.

Another way of doing it is simply to publish the raw data and hope that others will figure out how to use them. This has been particularly successful in Chicago, where computer nerds have used open data to create many entirely new services. Applications are now available that show which streets have been cleared after a snowfall, what time a bus or train will arrive and how requests to fix potholes are progressing.

New York and Chicago are bringing together data from departments across their respective cities in order to improve decision-making. When a city holds a parade it can combine data on street closures, bus routes, weather patterns, rubbish trucks and emergency calls in real time.”