Paper by Paul W. Mungai: “Open data—including open government data (OGD)—has become a topic of prominence during the last decade. However, most governments have not realised the desired value streams or outcomes from OGD. The Kenya Open Data Initiative (KODI), a Government of Kenya initiative, is no exception with some moments of success but also sustainability struggles. Therefore, the focus for this paper is to understand the causal mechanisms that either enable or constrain institutionalisation of OGD initiatives. Critical realism is ideally suited as a paradigm to identify such mechanisms, but guides to its operationalisation are few. This study uses the operational approach of Bygstad, Munkvold & Volkoff’s six‐step framework, a hybrid approach that melds concepts from existing critical realism models with the idea of affordances. The findings suggest that data demand and supply mechanisms are critical in institutionalising KODI and that, underpinning basic data‐related affordances, are mechanisms engaging with institutional capacity, formal policy, and political support. It is the absence of such elements in the Kenya case which explains why it has experienced significant delays…(More)”.

Designing Cognitive Cities

“This book illustrates various aspects and dimensions of cognitive cities. Following a comprehensive introduction, the first part of the book explores conceptual considerations for the design of cognitive cities, while the second part focuses on concrete applications. The contributions provide an overview of the wide diversity of cognitive city conceptualizations and help readers to better understand why it is important to think about the design of our cities. The book adopts a transdisciplinary approach since the cognitive city concept can only be achieved through cooperation across different academic disciplines (e.g., economics, computer science, mathematics) and between research and practice. More and more people live in a growing number of ever-larger cities. As such, it is important to reflect on how cities need to be designed to provide their inhabitants with the means and resources for a good life. The cognitive city is an emerging, innovative approach to address this need….(More)”.

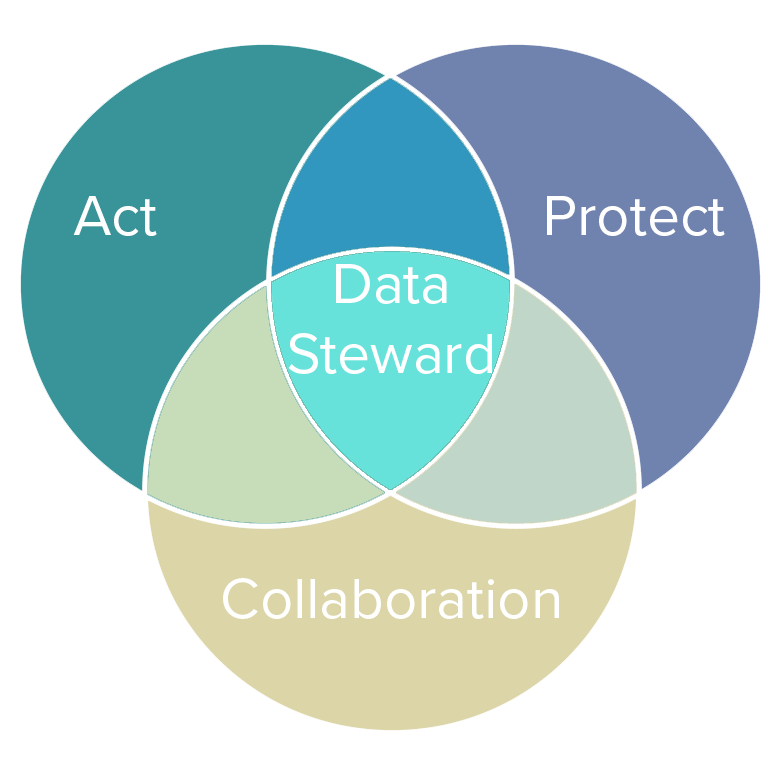

The Three Goals and Five Functions of Data Stewards

Medium Article by Stefaan G. Verhulst: “…Yet even as we see more data steward-type roles defined within companies, there exists considerable confusion about just what they should be doing. In particular, we have noticed a tendency to conflate the roles of data stewards with those of individuals or groups who might be better described as chief privacy, chief data or security officers. This slippage is perhaps understandable, but our notion of the role is somewhat broader. While privacy and security are of course key components of trusted and effective data collaboratives, the real goal is to leverage private data for broader social goals — while preventing harm.

So what are the necessary attributes of data stewards? What are their roles, responsibilities, and goals? And how can they be most effective, both as champions of sharing within organizations and as facilitators for leveraging data with external entities? These are some of the questions we seek to address in our current research, and below we outline some key preliminary findings.

The following “Three Goals” and “Five Functions” can help define the aspirations of data stewards, and what is needed to achieve the goals. While clearly only a start, these attributes can help guide companies currently considering setting up sharing initiatives or establishing data steward-like roles.

The Three Goals of Data Stewards

- Collaborate: Data stewards are committed to working and collaborating with others, with the goal of unlocking the inherent value of data when a clear case exists that it serves the public good and that it can be used in a responsible manner.

- Protect: Data stewards are committed to managing private data ethically, which means sharing information responsibly, and preventing harm to potential customers, users, corporate interests, the wider public and of course those individuals whose data may be shared.

- Act: Data stewards are committed to acting proactively to identify partners who may be in a better position to unlock value and insights contained within privately held data.

…(More)”.

The role of corporations in addressing AI’s ethical dilemmas

Darrell M. West at Brookings: “In this paper, I examine five AI ethical dilemmas: weapons and military-related applications, law and border enforcement, government surveillance, issues of racial bias, and social credit systems. I discuss how technology companies are handling these issues and the importance of having principles and processes for addressing these concerns. I close by noting ways to strengthen ethics in AI-related corporate decisions.

Briefly, I argue it is important for firms to undertake several steps in order to ensure that AI ethics are taken seriously:

- Hire ethicists who work with corporate decisionmakers and software developers

- Develop a code of AI ethics that lays out how various issues will be handled

- Have an AI review board that regularly addresses corporate ethical questions

- Develop AI audit trails that show how various coding decisions have been made

- Implement AI training programs so staff operationalizes ethical considerations in their daily work, and

- Provide a means for remediation when AI solutions inflict harm or damages on people or organizations….(More)”.

Google, T-Mobile Tackle 911 Call Problem

Sarah Krouse at the Wall Street Journal: “Emergency call operators will soon have an easier time pinpointing the whereabouts of Android phone users.

Google has struck a deal with T-Mobile US to pipe location data from cellphones with Android operating systems in the U.S. to emergency call centers, said Fiona Lee, who works on global partnerships for Android emergency location services.

The move is a sign that smartphone operating system providers and carriers are taking steps to improve the quality of location data they send when customers call 911. Locating callers has become a growing problem for 911 operators as cellphone usage has proliferated. Wireless devices now make 80% or more of the 911 calls placed in some parts of the U.S., according to the trade group National Emergency Number Association. There are roughly 240 million calls made to 911 annually.

While landlines deliver an exact address, cellphones typically register only an estimated location provided by wireless carriers that can be as wide as a few hundred yards and imprecise indoors.

That has meant that while many popular applications like Uber can pinpoint users, 911 call takers can’t always do so. Technology giants such as Google and Apple Inc. that run phone operating systems need a direct link to the technology used within emergency call centers to transmit precise location data….

Google currently offers emergency location services in 14 countries around the world by partnering with carriers and companies that are part of local emergency communications infrastructure. Its location data is based on a combination of inputs, from Wi-Fi to sensors, GPS and mobile network information.

Jim Lake, director at the Charleston County Consolidated 9-1-1 Center, participated in a pilot of Google’s emergency location services and said it made it easier to find people who didn’t know their location, particularly because the area draws tourists.

“On a day-to-day basis, most people know where they are, but when they don’t, usually those are the most horrifying calls and we need to know right away,” Mr. Lake said.

In June, Apple said it had partnered with RapidSOS to send iPhone users’ location information to 911 call centers….(More)”

We hold people with power to account. Why not algorithms?

Hannah Fry at the Guardian: “…But already in our hospitals, our schools, our shops, our courtrooms and our police stations, artificial intelligence is silently working behind the scenes, feeding on our data and making decisions on our behalf. Sure, this technology has the capacity for enormous social good – it can help us diagnose breast cancer, catch serial killers, avoid plane crashes and, as the health secretary, Matt Hancock, has proposed, potentially save lives using NHS data and genomics. Unless we know when to trust our own instincts over the output of a piece of software, however, it also brings the potential for disruption, injustice and unfairness.

If we permit flawed machines to make life-changing decisions on our behalf – by allowing them to pinpoint a murder suspect, to diagnose a condition or take over the wheel of a car – we have to think carefully about what happens when things go wrong…

I think it’s time we started treating machines as we would any other source of power. I would like to propose a system of regulation for algorithms, and perhaps a good place to start would be with Tony Benn’s five simple questions, designed for powerful people, but equally applicable to modern AI:

“What power have you got?

“Where did you get it from?

“In whose interests do you use it?

“To whom are you accountable?

“How do we get rid of you?”

Because, ultimately, we can’t just think of algorithms in isolation. We have to think of the failings of the people who design them – and the danger to those they are supposedly designed to serve.

Illuminating GDP

Money and Banking: “GDP figures are ‘man-made’ and therefore unreliable,” reported remarks of Li Keqiang (then Communist Party secretary of the northeastern Chinese province of Liaoning), March 12, 2007.

Satellites are great. It is hard to imagine living without them. GPS navigation is just the tip of the iceberg. Taking advantage of the immense amounts of information collected over decades, scientists have been using satellite imagery to study a broad array of questions, ranging from agricultural land use to the impact of climate change to the geographic constraints on cities (see here for a recent survey).

One of the most well-known economic applications of satellite imagery is to use night-time illumination to enhance the accuracy of various reported measures of economic activity. For example, national statisticians in countries with poor information collection systems can employ information from satellites to improve the quality of their nationwide economic data (see here). Even where governments have relatively high-quality statistics at a national level, it remains difficult and costly to determine local or regional levels of activity. For example, while production may occur in one jurisdiction, the income generated may be reported in another. At a sufficiently high resolution, satellite tracking of night-time light emissions can help address this question (see here).

But satellite imagery is not just an additional source of information on economic activity; it is also a neutral one that is less prone to manipulation than standard accounting data. This makes it possible to use information on night-time light to monitor the accuracy of official statistics. And, as we suggest later, the willingness of observers to apply a “satellite correction” could nudge countries to improve their own data reporting systems in line with recognized international standards.

As Luis Martínez inquires in his recent paper, should we trust autocrats’ estimates of GDP? Even in relatively democratic countries, there are prominent examples of statistical manipulation (recall the cases of Greek sovereign debt in 2009 and Argentine inflation in 2014). In the absence of democratic checks on the authorities, Martínez finds even greater tendencies to distort the numbers….(More)”.

Government Digital: The Quest to Regain Public Trust

Book by Alex Benay: “Governments all over the world are consistently outpaced by digital change, and are falling behind.

Digital government is a better performing government. It is better at providing services people and businesses need. Receiving benefits, accessing health records, registering companies, applying for licences, voting — all of this can be done online or through digital self-service. Digital technology makes government more efficient, reduces hassle, and lowers costs. But what will it take to make governments digital?

Good governance will take nothing short of a metamorphosis of the public sector. With contributions from industry, academic, and government experts (including Hillary Hartley, chief digital officer for Ontario, and Salim Ismail, founder of Singularity University), Government Digital lays down a blueprint for this radical change….(More)”.

Is Mass Surveillance the Future of Conservation?

Mallory Pickett at Slate: “The high seas are probably the most lawless place left on Earth. They’re a portal back in time to the way the world looked for most of our history: fierce and open competition for resources and contested territories. Pirating continues to be a way to make a living.

It’s not a complete free-for-all—most countries require registration of fishing vessels and enforce environmental protocols. Cooperative agreements between countries oversee fisheries in international waters. But the best data available suggests that around 20 percent of the global seafood catch is illegal. This is an environmental hazard because unregistered boats evade regulations meant to protect marine life. And it’s an economic problem for fishermen who can’t compete with boats that don’t pay for licenses or follow the (often expensive) regulations. In many developing countries, local fishermen are outfished by foreign vessels coming into their territory and stealing their stock….

But Henri Weimerskirch, a French ecologist, has a cheap, low-impact way to monitor thousands of square miles a day in real time: He’s getting birds to do it (a project first reported by Hakai). Specifically, he is using albatrosses, which have a 10-foot wingspan and can fly around the world in 46 days. The birds naturally congregate around fishing boats, hoping for an easy meal, so Weimerskirch is equipping them with GPS loggers that also have radar detection to pick up a ship’s radar (and make sure it is a ship, not an island) and a transmitter to send that data to authorities in real time. If it works, this should help in two ways: It will provide some information on the extent of unofficial fishing in the area, and because the loggers transmit their information in real time, the data can be used to notify French navy ships in the area to check out suspicious boats.

His team is getting ready to deploy about 80 birds in the south Indian Ocean this November.

The loggers attached around the birds’ legs are about the shape and size of a Snickers bar. The south Indian Ocean is a shared fishing zone, and nine countries, including France (courtesy of several small islands it claims ownership of, a vestige of colonialism), manage it together. But there are big problems with illegal fishing in the area, especially of the Patagonian toothfish (better known to consumers as Chilean seabass)….(More)”

Building block(chain)s for a better planet

PwC report: “…Our research and analysis identified more than 65 existing and emerging blockchain use cases for the environment through desk-based research and interviews with a range of stakeholders at the forefront of applying blockchain across industry, big tech, entrepreneurs, research and government. Blockchain use-case solutions that are particularly relevant across environmental applications tend to cluster around the following cross-cutting themes: enabling the transition to cleaner and more efficient decentralized systems; peer-to-peer trading of resources or permits; supply-chain transparency and management; new financing models for environmental outcomes; and the realization of non-financial value and natural capital. The report also identifies enormous potential to create blockchain-enabled “game changers” that have the ability to deliver transformative solutions to environmental challenges. These game changers have the potential to disrupt, or substantially optimize, the systems that are critical to addressing many environmental challenges. A high-level summary of those game changers is outlined below:

- “See-through” supply chains: blockchain can create undeniable (and potentially unavoidable) transparency in supply chains. …

- Decentralized and sustainable resource management: blockchain can underpin a transition to decentralized utility systems at scale…

- Raising the trillions – new sources of sustainable finance: blockchain-enabled finance platforms could potentially revolutionize access to capital and unlock potential for new investors in projects that address environmental challenges – from retail-level investment in green infrastructure projects through to enabling blended finance or charitable donations for developing countries. …

- Incentivizing circular economies: blockchain could fundamentally change the way in which materials and natural resources are valued and traded, incentivizing individuals, companies and governments to unlock financial value from things that are currently wasted, discarded or treated as having no economic value. …

- Transforming carbon (and other environmental) markets: blockchain platforms could be harnessed to use cryptographic tokens with a tradable value to optimize existing market platforms for carbon (or other substances) and create new opportunities for carbon credit transactions.

- Next-gen sustainability monitoring, reporting and verification: blockchain has the potential to transform both sustainability reporting and assurance, helping companies manage, demonstrate and improve their performance, while enabling consumers and investors to make better-informed decisions. …

- Automatic disaster preparedness and humanitarian relief: blockchain could underpin a new shared system for multiple parties involved in disaster preparedness and relief to improve the efficiency, effectiveness, coordination and trust of resources. An interoperable decentralized system could enable the sharing of information (e.g. individual relief activities transparent to all other parties within the distributed network) and rapid automated transactions via smart contracts. …

- Earth-management platforms: new blockchain-enabled geospatial platforms, which enable a range of value-based transactions, are in the early stages of exploration and could monitor, manage and enable market mechanisms that protect the global environmental commons – from life on land to ocean health. Such applications are further away in terms of technical and logistical feasibility, but they remain exciting to contemplate….(More)”.