Conference Proceedings edited by Josef Drexl, Moritz Hennemann, Patricia Boshe, and Klaus Wiedemann: “The increasing relevance of data is now recognized all over the world. The large number of regulatory acts and proposals in the field of data law serves as a testament to the significance of data processing for the economies of the world. The European Union’s Data Strategy, the African Union’s Data Policy Framework and the Australian Data Strategy only serve as examples within a plethora of regulatory actions. Yet, the purposeful and sensible use of data does not only play a role in economic terms, e.g. regarding the welfare or competitiveness of economies. The implications for society and the common good are at least equally relevant. For instance, data processing is an integral part of modern research methodology and can thus help to address the problems the world is facing today, such as climate change.

The conference was the third and final event of the Global Data Law Conference Series. Legal scholars from all over the world met, presented and exchanged their experiences on different data-related regulatory approaches. Various instruments and approaches to the regulation of data – personal or non-personal – were discussed, without losing sight of the global effects going hand-in-hand with different kinds of regulation.

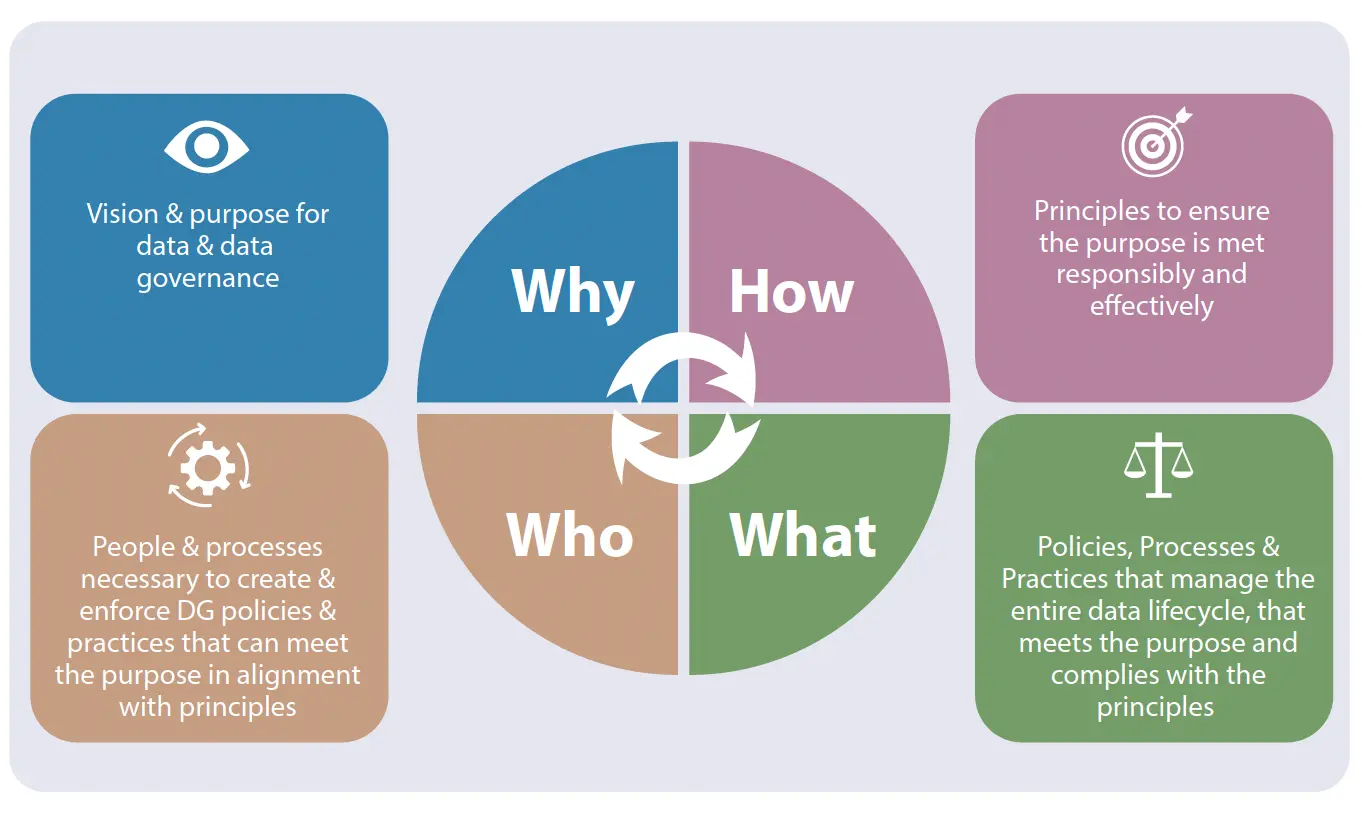

In compiling the conference proceedings, this book does not only aim at providing a critical and analytical assessment of the status quo of data law in different countries today, it also aims at providing a forward-looking perspective on the pressing issues of our time, such as: How to promote sensible data sharing and purposeful data governance? Under which circumstances, if ever, do data localisation requirements make sense? How – and by whom – should international regulation be put in place? The proceedings engage in a discussion on future-oriented ideas and actions, thereby promoting a constructive and sensible approach to data law around the world…”.