Paper by M. Fairbairn and Z. Kish: “Open data is increasingly being promoted as a route to achieve food security and agricultural development. This article critically examines the promotion of open agri-food data for development through a document-based case study of the Global Open Data for Agriculture and Nutrition (GODAN) initiative as well as through interviews with open data practitioners and participant observation at open data events. While the concept of openness is striking for its ideological flexibility, we argue that GODAN propagates an anti-political, neoliberal vision for how open data can enhance agricultural development. This approach centers values such as private innovation, increased production, efficiency, and individual empowerment, in contrast to more political and collectivist approaches to openness practiced by some agri-food social movements. We further argue that open agri-food data projects, in general, have a tendency to reproduce elements of “data colonialism,” extracting data with minimal consideration for the collective harms that may result, and embedding their own values within universalizing information infrastructures…(More)”.

A new way to look at data privacy

Article by Adam Zewe: “Imagine that a team of scientists has developed a machine-learning model that can predict whether a patient has cancer from lung scan images. They want to share this model with hospitals around the world so clinicians can start using it in diagnosis.

But there’s a problem. To teach their model how to predict cancer, they showed it millions of real lung scan images, a process called training. Those sensitive data, which are now encoded into the inner workings of the model, could potentially be extracted by a malicious agent. The scientists can prevent this by adding noise, or more generic randomness, to the model that makes it harder for an adversary to guess the original data. However, perturbation reduces a model’s accuracy, so the less noise one can add, the better.

MIT researchers have developed a technique that enables the user to potentially add the smallest amount of noise possible, while still ensuring the sensitive data are protected.

The researchers created a new privacy metric, which they call Probably Approximately Correct (PAC) Privacy, and built a framework based on this metric that can automatically determine the minimal amount of noise that needs to be added. Moreover, this framework does not need knowledge of the inner workings of a model or its training process, which makes it easier to use for different types of models and applications.

In several cases, the researchers show that the amount of noise required to protect sensitive data from adversaries is far less with PAC Privacy than with other approaches. This could help engineers create machine-learning models that provably hide training data, while maintaining accuracy in real-world settings…

A fundamental question in data privacy is: How much sensitive data could an adversary recover from a machine-learning model with noise added to it?

Differential Privacy, one popular privacy definition, says privacy is achieved if an adversary who observes the released model cannot infer whether an arbitrary individual’s data is used for the training process. But provably preventing an adversary from distinguishing data usage often requires large amounts of noise to obscure it. This noise reduces the model’s accuracy.

PAC Privacy looks at the problem a bit differently. It characterizes how hard it would be for an adversary to reconstruct any part of randomly sampled or generated sensitive data after noise has been added, rather than only focusing on the distinguishability problem…(More)”
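To make the noise-versus-accuracy trade-off in this excerpt concrete, here is a minimal Python sketch of the textbook Laplace mechanism from differential privacy, used to release the average of a hypothetical dataset. It is not the PAC Privacy method, whose noise-calibration procedure the excerpt does not describe; the function names and the data are illustrative assumptions. The smaller the privacy parameter `epsilon`, the stronger the guarantee and the larger the noise, so the released value drifts further from the true one.

```python
import random
import statistics


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values: list[float], epsilon: float, rng: random.Random) -> float:
    """Release the mean of values in [0, 1] with epsilon-differential privacy.

    Changing one person's value moves the mean by at most 1/n, so the
    sensitivity is 1/n and the Laplace noise scale is (1/n) / epsilon.
    """
    n = len(values)
    clipped = [min(max(v, 0.0), 1.0) for v in values]  # enforce the assumed [0, 1] bound
    sensitivity = 1.0 / n
    return statistics.fmean(clipped) + laplace_noise(sensitivity / epsilon, rng)


def mean_abs_error(values: list[float], epsilon: float, trials: int,
                   rng: random.Random) -> float:
    """Average absolute gap between the private release and the true mean."""
    true_mean = statistics.fmean(values)
    return statistics.fmean(
        abs(private_mean(values, epsilon, rng) - true_mean) for _ in range(trials)
    )


if __name__ == "__main__":
    rng = random.Random(0)
    data = [rng.random() for _ in range(1000)]  # hypothetical sensitive values in [0, 1]
    for eps in (0.01, 0.1, 1.0):
        err = mean_abs_error(data, eps, 2000, rng)
        print(f"epsilon={eps:<5} mean |error| ~ {err:.5f}")
```

The expected error is roughly the Laplace scale, (1/n)/epsilon, so each tenfold cut in `epsilon` costs about tenfold in accuracy. Note that this mechanism depends on analyzing the sensitivity of the computation, which is exactly the kind of knowledge of a model's inner workings that the excerpt says the PAC Privacy framework does not require.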

How do we know how smart AI systems are?

Article by Melanie Mitchell: “In 1967, Marvin Minsky, a founder of the field of artificial intelligence (AI), made a bold prediction: “Within a generation…the problem of creating ‘artificial intelligence’ will be substantially solved.” Assuming that a generation is about 30 years, Minsky was clearly overoptimistic. But now, nearly two generations later, how close are we to the original goal of human-level (or greater) intelligence in machines?

Some leading AI researchers would answer that we are quite close. Earlier this year, deep-learning pioneer and Turing Award winner Geoffrey Hinton told Technology Review, “I have suddenly switched my views on whether these things are going to be more intelligent than us. I think they’re very close to it now and they will be much more intelligent than us in the future.” His fellow Turing Award winner Yoshua Bengio voiced a similar opinion in a recent blog post: “The recent advances suggest that even the future where we know how to build superintelligent AIs (smarter than humans across the board) is closer than most people expected just a year ago.”

These are extraordinary claims that, as the saying goes, require extraordinary evidence. However, it turns out that assessing the intelligence—or more concretely, the general capabilities—of AI systems is fraught with pitfalls. Anyone who has interacted with ChatGPT or other large language models knows that these systems can appear quite intelligent. They converse with us in fluent natural language, and in many cases seem to reason, to make analogies, and to grasp the motivations behind our questions. Despite their well-known unhumanlike failings, it’s hard to escape the impression that behind all that confident and articulate language there must be genuine understanding…(More)”.

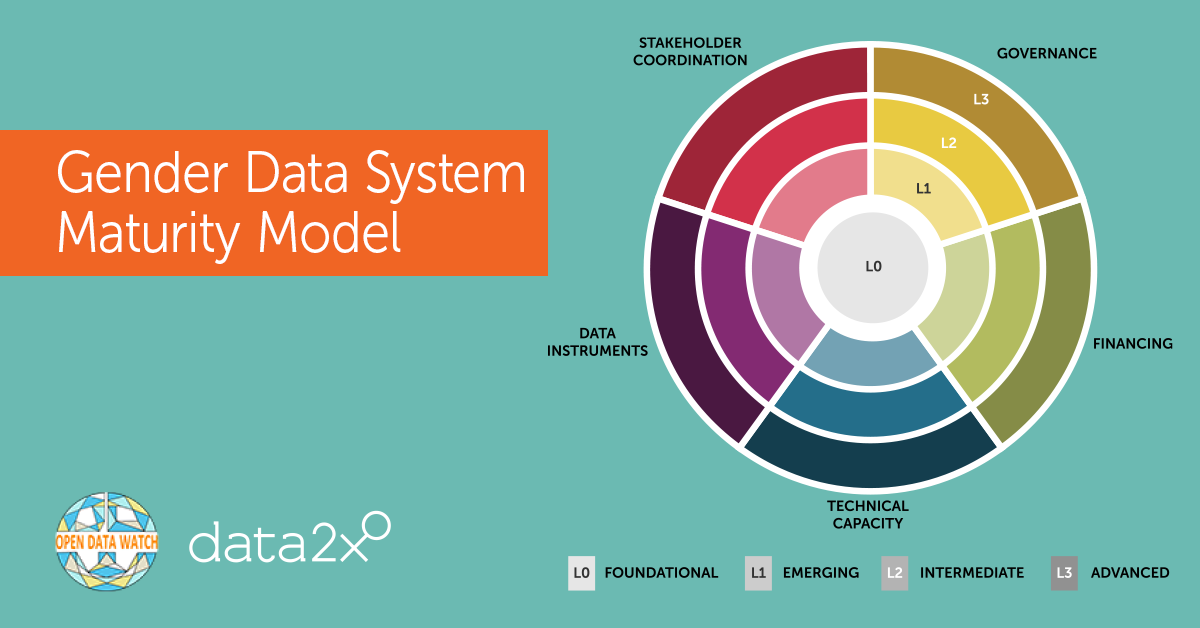

Building Responsive Investments in Gender Equality using Gender Data System Maturity Models

Tools and resources by Data2X and Open Data Watch: “…to help countries check the maturity of their gender data systems and set priorities for gender data investments. The new Building Responsive Investments in Data for Gender Equality (BRIDGE) tool is designed for use by gender data focal points in national statistical offices (NSOs) of low- and middle-income countries and by their partners within the national statistical system (NSS) to communicate gender data priorities to domestic sources of financing and international donors.

The BRIDGE results will help gender data stakeholders understand the current maturity level of their gender data system, diagnose strengths and weaknesses, and identify priority areas for improvement. They will also serve as an input to any roadmap or action plan developed in collaboration with key stakeholders within the NSS.

Below are links to and explanations of our ‘Gender Data System Maturity Model’ briefs (a long and short version), our BRIDGE assessment and tools methodology, how-to guide, questionnaire, and scoring form that will provide an overall assessment of system maturity and insight into potential action plans to strengthen gender data systems…(More)”.

De Gruyter Handbook of Citizens’ Assemblies

Book edited by Min Reuchamps, Julien Vrydagh and Yanina Welp: “Citizens’ Assemblies (CAs) are flourishing around the world. Quite often composed of randomly selected citizens, CAs, arguably, come as a possible answer to contemporary democratic challenges. Democracies worldwide are indeed confronted with a series of disruptive phenomena such as a widespread perception of distrust and growing polarization as well as low performance. Many actors seek to reinvigorate democracy with citizen participation and deliberation. CAs are expected to have the potential to meet this twofold objective. But, despite the deliberative and inclusive qualities of CAs, many questions remain open. The increasing popularity of CAs calls for a holistic reflection and evaluation on their origins, current uses and future directions.

The De Gruyter Handbook of Citizens’ Assemblies showcases the state of the art around the study of CAs and opens novel perspectives informed by multidisciplinary research and renewed thinking about deliberative participatory processes. It discusses the latest theoretical, empirical, and methodological scientific developments on CAs and offers a unique resource for scholars, decision-makers, practitioners, and curious citizens to better understand the qualities, purposes, promises but also pitfalls of CAs…(More)”.

Connecting After Chaos: Social Media and the Extended Aftermath of Disaster

Book by Stephen F. Ostertag: “Natural disasters and other such catastrophes typically attract large-scale media attention and public concern in their immediate aftermath. However, rebuilding efforts can take years or even decades, and communities are often left to repair physical and psychological damage on their own once public sympathy fades away. Connecting After Chaos tells the story of how people restored their lives and society in the months and years after disaster, focusing on how New Orleanians used social media to cope with trauma following Hurricane Katrina.

Stephen F. Ostertag draws on almost a decade of research to create a vivid portrait of life in “settling times,” a term he defines as a distinct social condition of prolonged insecurity and uncertainty after disasters. He portrays this precarious state through the story of how a group of strangers began blogging in the wake of Katrina, and how they used those blogs to put their lives and their city back together. In the face of institutional failure, weak authority figures, and an abundance of chaos, the people of New Orleans used social media to gain information, foster camaraderie, build support networks, advocate for and against proposed policies, and cope with trauma. In the efforts of these bloggers, Ostertag finds evidence of the capacity of this and other forms of cultural work to motivate, guide, and energize collective action aimed at weathering the constant instability of extended recovery periods. Connecting After Chaos is both a compelling story of a community in crisis and a broader argument for the power of social media and cultural cooperation to create order when chaos abounds…(More)”.

Meta Ran a Giant Experiment in Governance. Now It’s Turning to AI

Article by Aviv Ovadya: “Late last month, Meta quietly announced the results of an ambitious, near-global deliberative “democratic” process to inform decisions around the company’s responsibility for the metaverse it is creating. This was not an ordinary corporate exercise. It involved over 6,000 people who were chosen to be demographically representative across 32 countries and 19 languages. The participants spent many hours in conversation in small online group sessions and got to hear from non-Meta experts about the issues under discussion. Eighty-two percent of the participants said that they would recommend this format as a way for the company to make decisions in the future.

Meta has now publicly committed to running a similar process for generative AI, a move that aligns with the huge burst of interest in democratic innovation for governing or guiding AI systems. In doing so, Meta joins Google, DeepMind, OpenAI, Anthropic, and other organizations that are starting to explore approaches based on the kind of deliberative democracy that I and others have been advocating for. (Disclosure: I am on the application advisory committee for the OpenAI Democratic inputs to AI grant.) Having seen the inside of Meta’s process, I am excited about this as a valuable proof of concept for transnational democratic governance. But for such a process to truly be democratic, participants would need greater power and agency, and the process itself would need to be more public and transparent.

I first got to know several of the employees responsible for setting up Meta’s Community Forums (as these processes came to be called) in the spring of 2019 during a more traditional external consultation with the company to determine its policy on “manipulated media.” I had been writing and speaking about the potential risks of what is now called generative AI and was asked (alongside other experts) to provide input on the kind of policies Meta should develop to address issues such as misinformation that could be exacerbated by the technology.

At around the same time, I first learned about representative deliberations—an approach to democratic decisionmaking that has taken off like wildfire, with increasingly high-profile citizen assemblies and deliberative polls all over the world. The basic idea is that governments bring difficult policy questions back to the public to decide. Instead of a referendum or elections, a representative microcosm of the public is selected via lottery. That group is brought together for days or even weeks (with compensation) to learn from experts, stakeholders, and each other before coming to a final set of recommendations…(More)”.
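The lottery step can be illustrated with a simple stratified random draw. The Python sketch below is a hypothetical illustration, not Meta's or any assembly organizer's actual procedure: it assigns seats to groups in proportion to each group's share of a volunteer pool (rounding with the largest-remainder method so the seats add up exactly), then draws uniformly at random within each group. The function name, the single "area" attribute, and the pool are all assumptions; real selection processes often balance several attributes at once and may use more elaborate algorithms.

```python
import random
from collections import defaultdict


def stratified_lottery(pool, group_of, seats, rng):
    """Select `seats` people so each group's share roughly matches its share of the pool.

    pool: list of candidate records; group_of: maps a record to its stratum.
    Quotas use largest-remainder rounding so they sum exactly to `seats`.
    """
    if seats > len(pool):
        raise ValueError("cannot select more people than the pool contains")

    strata = defaultdict(list)
    for person in pool:
        strata[group_of(person)].append(person)

    total = len(pool)
    exact = {g: seats * len(members) / total for g, members in strata.items()}
    quotas = {g: int(q) for g, q in exact.items()}  # round every quota down first
    leftover = seats - sum(quotas.values())
    # Give the remaining seats to the groups with the largest fractional remainders.
    for g in sorted(exact, key=lambda g: exact[g] - quotas[g], reverse=True)[:leftover]:
        quotas[g] += 1

    selected = []
    for g, members in strata.items():
        selected.extend(rng.sample(members, quotas[g]))  # uniform lottery within the stratum
    return selected


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical volunteer pool: 600 urban and 400 rural respondents.
    pool = [{"id": i, "area": "urban" if i < 600 else "rural"} for i in range(1000)]
    panel = stratified_lottery(pool, lambda p: p["area"], seats=50, rng=rng)
    counts = defaultdict(int)
    for p in panel:
        counts[p["area"]] += 1
    print(dict(counts))  # → {'urban': 30, 'rural': 20}
```

Drawing within strata, rather than from the whole pool at once, guarantees the panel's group proportions instead of leaving them to chance, while every person inside a group still has the same probability of being picked.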

AI tools are designing entirely new proteins that could transform medicine

Article by Ewen Callaway: “‘OK. Here we go.’ David Juergens, a computational chemist at the University of Washington (UW) in Seattle, is about to design a protein that, in 3-billion-plus years of tinkering, evolution has never produced.

On a video call, Juergens opens a cloud-based version of an artificial intelligence (AI) tool he helped to develop, called RFdiffusion. This neural network, and others like it, are helping to bring the creation of custom proteins — until recently a highly technical and often unsuccessful pursuit — to mainstream science.

These proteins could form the basis for vaccines, therapeutics and biomaterials. “It’s been a completely transformative moment,” says Gevorg Grigoryan, the co-founder and chief technical officer of Generate Biomedicines in Somerville, Massachusetts, a biotechnology company applying protein design to drug development.

The tools are inspired by AI software that synthesizes realistic images, such as the Midjourney software that, this year, was famously used to produce a viral image of Pope Francis wearing a designer white puffer jacket. A similar conceptual approach, researchers have found, can churn out realistic protein shapes to criteria that designers specify — meaning, for instance, that it’s possible to speedily draw up new proteins that should bind tightly to another biomolecule. And early experiments show that when researchers manufacture these proteins, a useful fraction do perform as the software suggests.

The tools have revolutionized the process of designing proteins in the past year, researchers say. “It is an explosion in capabilities,” says Mohammed AlQuraishi, a computational biologist at Columbia University in New York City, whose team has developed one such tool for protein design. “You can now create designs that have sought-after qualities.”

“You’re building a protein structure customized for a problem,” says David Baker, a computational biophysicist at UW whose group, which includes Juergens, developed RFdiffusion. The team released the software in March 2023, and a paper describing the neural network appears this week in Nature [1]. (A preprint version was released in late 2022, at around the same time that several other teams, including AlQuraishi’s [2] and Grigoryan’s [3], reported similar neural networks)…(More)”.

Just Citation

Paper by Amanda Levendowski: “Contemporary citation practices are often unjust. Data cartels, like Google, Westlaw, and Lexis, prioritize profits and efficiency in ways that threaten people’s autonomy, particularly that of pregnant people and immigrants. Women and people of color have been legal scholars for more than a century, yet colleagues consistently under-cite and under-acknowledge their work. Other citations frequently lead to materials that cannot be accessed by disabled people, poor people or the public due to design, paywalls or link rot. Yet scholars and students often understand citation practices as “just” citation and perpetuate these practices unknowingly. This Article is an intervention. Using an intersectional feminist framework for understanding how cyberlaws oppress and liberate oppressed people, an emerging movement known as feminist cyberlaw, this Article investigates problems posed by prevailing citation practices and introduces practical methods that bring citation into closer alignment with the feminist values of safety, equity, and accessibility. Escaping data cartels, engaging marginalized scholars, embracing free and public resources, and ensuring that those resources remain easily available represent small, radical shifts that promote just citation. This Article provides powerful, practical tools for pursuing all of them…(More)”.

ChatGPT took people by surprise – here are four technologies that could make a difference next

Article by Fabian Stephany and Johann Laux: “…There are some AI technologies waiting on the sidelines right now that hold promise. The four we think are waiting in the wings are next-level GPT, humanoid robots, AI lawyers, and AI-driven science. Our choices appear ready from a technological point of view, but whether they satisfy all three of the criteria we’ve mentioned is another matter. We chose these four because they were the ones that kept coming up in our investigations into progress in AI technologies.

1. AI legal help

The startup company DoNotPay claims to have created a legal chatbot – built on LLM technology – that can advise defendants in court.

The company recently said it would let its AI system help two defendants fight speeding tickets in real time. Connected via an earpiece, the AI can listen to proceedings and whisper legal arguments into the ear of the defendant, who then repeats them out loud to the judge.

After criticism and a lawsuit for practising law without a license, the startup postponed the AI’s courtroom debut. The potential for the technology will thus not be decided by technological or economic constraints, but by the authority of the legal system.

Lawyers are well-paid professionals and the costs of litigation are high, so the economic potential for automation is huge. However, the US legal system currently seems to oppose robots representing humans in court.

2. AI scientific support

Scientists are increasingly turning to AI for insights. Machine learning, where an AI system improves at what it does over time, is being employed to identify patterns in data. This enables the systems to propose novel scientific hypotheses – proposed explanations for phenomena in nature. These may even be capable of surpassing human assumptions and biases.

For example, researchers at the University of Liverpool used a machine learning system called a neural network to rank chemical combinations for battery materials, guiding their experiments and saving time.

The complexity of neural networks means that there are gaps in our understanding of how they actually make decisions – the so-called black box problem. Nevertheless, there are techniques that can shed light on the logic behind their answers and this can lead to unexpected discoveries.

While AI cannot currently formulate hypotheses independently, it can inspire scientists to approach problems from new perspectives…(More)”.