New Paper by Shelton, T., Poorthuis, A., Graham, M., and Zook, M.: “Digital social data are now practically ubiquitous, with increasingly large and interconnected databases leading researchers, politicians, and the private sector to focus on how such ‘big data’ can allow potentially unprecedented insights into our world. This paper investigates Twitter activity in the wake of Hurricane Sandy in order to demonstrate the complex relationship between the material world and its digital representations. Through documenting the various spatial patterns of Sandy-related tweeting both within the New York metropolitan region and across the United States, we make a series of broader conceptual and methodological interventions into the nascent geographic literature on big data. Rather than focus on how these massive databases are causing necessary and irreversible shifts in the ways that knowledge is produced, we instead find it more productive to ask how small subsets of big data, especially georeferenced social media information scraped from the internet, can reveal the geographies of a range of social processes and practices. Utilizing both qualitative and quantitative methods, we can uncover broad spatial patterns within this data, as well as understand how this data reflects the lived experiences of the people creating it. We also seek to fill a conceptual lacuna in studies of user-generated geographic information, which have often avoided any explicit theorizing of sociospatial relations, by employing Jessop et al.’s TPSN framework. Through these interventions, we demonstrate that any analysis of user-generated geographic information must take into account the existence of more complex spatialities than the relatively simple spatial ontology implied by latitude and longitude coordinates.”

Innovation by Competition: How Challenges and Competition Get the Most Out of the Crowd

Innocentive: “Crowdsourcing has become the 21st century’s alternative to problem solving in place of traditional employee-based strategies. It has become the modern solution to provide for needed services, content, and ideas. Crowdsourced ideas are paving the way for today’s organizations to tackle innovation challenges that confront them in today’s competitive global marketplace. To put it all in perspective, crowds used to be thought of as angry mobs. Today, crowds are more like friendly and helpful contributors. What an interesting juxtaposition, eh?

Case studies proving the effectiveness of crowdsourcing to conquer innovation challenges, particularly in the fields of science and engineering, abound. Despite the fact that success stories involving crowdsourcing are plentiful, very few firms are really putting its full potential to use. ALS and AIDS research have both made huge advances thanks to crowdsourcing, just to name a couple.

Biologists at the University of Washington were able to map the structure of an AIDS-related virus thanks to the collaboration involved with crowdsourcing. How did they do this? With the help of gamers playing a game designed to help get the information the University of Washington needed. It was a solution that remained unattainable for over a decade until enough top-notch scientific minds were expertly probed from around the world with effective crowdsourcing techniques.

Dr. Seward Rutkove discovered an ALS biomarker to accurately measure the progression of the disease in patients through the crowdsourcing tactics utilized in a prize contest by an organization named Prize4Life, who utilized our Challenge Driven Innovation approach to engage the crowd.

The truth is, the concept of crowdsourcing to innovate has been around for centuries. But, with the growing connectedness of the world due to sheer Internet access, the power and ability to effectively crowdsource has increased exponentially. It’s time for corporations to realize this, and stop relying on stale sources of innovation…”

Prospects for Online Crowdsourcing of Social Science Research Tasks: A Case Study Using Amazon Mechanical Turk

New paper by Catherine E. Schmitt-Sands and Richard J. Smith: “While the internet has created new opportunities for research, managing the increased complexity of relationships and knowledge also creates challenges. Amazon.com has a Mechanical Turk service that allows people to crowdsource simple tasks for a nominal fee. The online workers may be anywhere in North America or India and range in ability. Social science researchers are only beginning to use this service. While researchers have used crowdsourcing to find research subjects or classify texts, we used Mechanical Turk to conduct a policy scan of local government websites. This article describes the process used to train and ensure quality of the policy scan. It also examines choices in the context of research ethics.”

Crowdsourcing forecasts on science and technology events and innovations

Kurzweil News: “George Mason University launched today, Jan. 10, the largest and most advanced science and technology prediction market in the world: SciCast.

The federally funded research project aims to improve the accuracy of science and technology forecasts. George Mason research assistant professor Charles Twardy is the principal investigator of the project.

SciCast crowdsources forecasts on science and technology events and innovations from aerospace to zoology.

For example, will Amazon use drones for commercial package delivery by the end of 2017? Today, SciCast estimates the chance at slightly more than 50 percent. If you think that is too low, you can estimate a higher chance. SciCast will use your estimate to adjust the combined forecast.

Forecasters can update their forecasts at any time; in the above example, perhaps after the Federal Aviation Administration (FAA) releases its new guidelines for drones. The continually updated and reshaped information helps both the public and private sectors better monitor developments in a variety of industries. SciCast is a real-time indicator of what participants think is going to happen in the future.

“Combinatorial” prediction market better than simple average

How SciCast works (Credit: George Mason University)

The idea is that collective wisdom from diverse, informed opinions can provide more accurate predictions than individual forecasters, a notion borne out by other crowdsourcing projects. Simply taking an average is almost always better than going with the “best” expert. But in a two-year test on geopolitical questions, the SciCast method did 40 percent better than the simple average.

SciCast uses the first general “combinatorial” prediction market. In a prediction market, forecasters spend points to adjust the group forecast. Significant changes “cost” more — but “pay” more if they turn out to be right. So better forecasters gain more points and therefore more influence, improving the accuracy of the system.
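The point-spending mechanics described above can be sketched with a logarithmic scoring rule, which is one common way to build such a market. The article does not say which rule SciCast uses, so the rule itself, the constant `B`, and the function names below are assumptions made only for illustration.

```python
import math

# Hypothetical scaling constant: larger values put more points at stake per edit.
B = 100.0


def payoff(p_old: float, p_new: float, outcome: bool) -> float:
    """Points won (negative means lost) for moving a yes/no forecast
    from p_old to p_new, once the question resolves to `outcome`."""
    if outcome:
        return B * math.log(p_new / p_old)
    return B * math.log((1 - p_new) / (1 - p_old))


def worst_case_cost(p_old: float, p_new: float) -> float:
    """Points the forecaster must be able to cover: the largest possible loss."""
    return -min(payoff(p_old, p_new, True), payoff(p_old, p_new, False))


# A small nudge versus a significant change to a 50 percent group forecast.
small_cost = worst_case_cost(0.50, 0.55)
large_cost = worst_case_cost(0.50, 0.80)
small_gain = payoff(0.50, 0.55, True)
large_gain = payoff(0.50, 0.80, True)
```

Under this assumed rule the larger move both risks more points (`large_cost > small_cost`) and earns more if the event happens (`large_gain > small_gain`), which matches the "cost more, pay more" behavior the article describes.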

In a combinatorial market like SciCast, forecasts can influence each other. For example, forecasters might have linked cherry production to honeybee populations. Then, if forecasters increase the estimated percentage of honeybee colonies lost this winter, SciCast automatically reduces the estimated 2014 cherry production. This connectivity among questions makes SciCast more sophisticated than other prediction markets.
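One simple way to picture a link between two questions is the law of total probability: the linked forecast is recomputed whenever the forecast it depends on changes. The article does not describe SciCast's internals, so this is only a sketch. It also simplifies cherry production to a yes/no "strong harvest" question, and every number in it is hypothetical.

```python
def linked_forecast(p_cause: float,
                    p_effect_if_cause: float,
                    p_effect_if_not_cause: float) -> float:
    """Probability of the effect, given the current forecast for the cause
    and the two conditional probabilities that define the link."""
    return p_effect_if_cause * p_cause + p_effect_if_not_cause * (1 - p_cause)


# Hypothetical link strengths set by forecasters.
P_STRONG_HARVEST_IF_HEAVY_BEE_LOSS = 0.30
P_STRONG_HARVEST_IF_LIGHT_BEE_LOSS = 0.70

# Forecasters raise the chance of heavy honeybee losses from 40 to 60 percent.
before = linked_forecast(0.40, P_STRONG_HARVEST_IF_HEAVY_BEE_LOSS,
                         P_STRONG_HARVEST_IF_LIGHT_BEE_LOSS)
after = linked_forecast(0.60, P_STRONG_HARVEST_IF_HEAVY_BEE_LOSS,
                        P_STRONG_HARVEST_IF_LIGHT_BEE_LOSS)
```

With these made-up numbers the strong-harvest forecast falls when the honeybee-loss forecast rises, with no one editing the cherry question directly.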

SciCast topics include agriculture, biology and medicine, chemistry, computational sciences, energy, engineered technologies, global change, information systems, mathematics, physics, science and technology business, social sciences, space sciences and transportation….

January 10, 2014

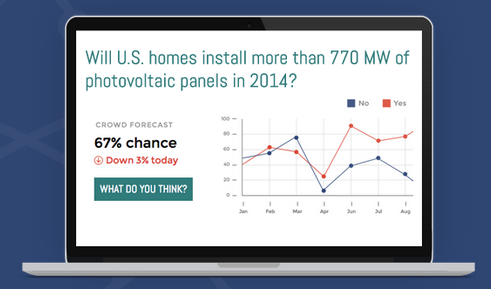

Example of SciCast crowdsourced forecast (credit: George Mason University)

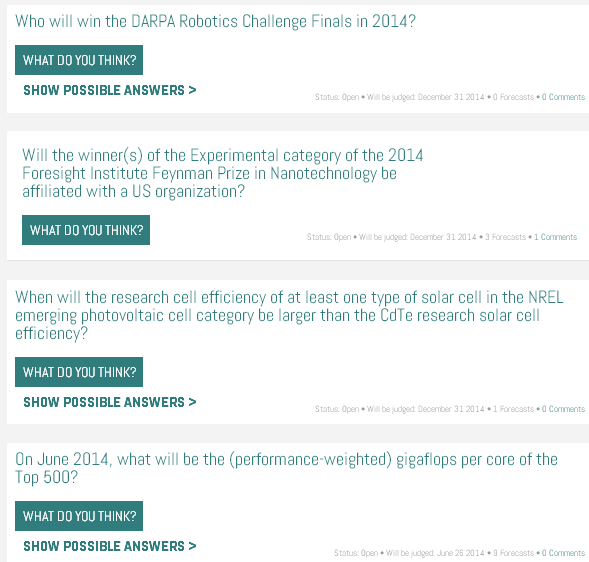

Seeking futurists to improve forecasts, pose questions

(Credit: George Mason University)

“With so many science and technology questions, there are many niches,” says Twardy, a researcher in the Center of Excellence in Command, Control, Communications, Computing and Intelligence (C4I), based in Mason’s Volgenau School of Engineering.

“We seek scientists, statisticians, engineers, entrepreneurs, policymakers, technical traders, and futurists of all stripes to improve our forecasts, link questions together and pose new questions.”

Forecasters discuss the questions, and that discussion can lead to new, related questions. For example, someone asked, “Will Amazon deliver its first package using an unmanned aerial vehicle by Dec. 31, 2017?”

An early forecaster suggested that this technology is likely to first be used in a mid-sized town with fewer obstructions or local regulatory issues. Another replied that Amazon is more likely to use robots to deliver packages within a short radius of a conventional delivery vehicle. A third offered information about an FAA report related to the subject.

Any forecaster could then write a question about upcoming FAA rulings, and link that question to the Amazon drones question. Forecasters could then adjust the strength of the link.

“George Mason University has succeeded in launching the world’s largest forecasting tournament for science and technology,” says Jason Matheny, program manager of Forecasting Science and Technology at the Intelligence Advanced Research Projects Activity, based in Washington, D.C. “SciCast can help the public and private sectors to better understand a range of scientific and technological trends.”

Collaborative but Competitive

More than 1,000 experts and enthusiasts from science and tech-related associations, universities and interest groups preregistered to participate in SciCast. The group is collaborative in spirit but also competitive. Participants are rewarded for accurate predictions by moving up on the site leaderboard, receiving more points to spend influencing subsequent prognostications. Participants can (and should) continually update their predictions as new information is presented.

SciCast has partnered with the American Association for the Advancement of Science, the Institute of Electrical and Electronics Engineers, and multiple other science and technology professional societies.

Mason members of the SciCast project team include Twardy; Kathryn Laskey, associate director for the C4I and a professor in the Department of Systems Engineering and Operations Research; associate professor of economics Robin Hanson; C4I research professor Tod Levitt; and C4I research assistant professors Anamaria Berea, Kenneth Olson and Wei Sun.

To register for SciCast, visit www.SciCast.org, or for more information, e-mail support@scicast.org. SciCast is open to anyone age 18 or older.”

Why the Nate Silvers of the World Don’t Know Everything

Felix Salmon in Wired: “This shift in US intelligence mirrors a definite pattern of the past 30 years, one that we can see across fields and institutions. It’s the rise of the quants—that is, the ascent to power of people whose native tongue is numbers and algorithms and systems rather than personal relationships or human intuition. Michael Lewis’ Moneyball vividly recounts how the quants took over baseball, as statistical analysis trumped traditional scouting and propelled the underfunded Oakland A’s to a division-winning 2002 season. More recently we’ve seen the rise of the quants in politics. Commentators who “trusted their gut” about Mitt Romney’s chances had their gut kicked by Nate Silver, the stats whiz who called the election days beforehand as a lock for Obama, down to the very last electoral vote in the very last state.

The reason the quants win is that they’re almost always right—at least at first. They find numerical patterns or invent ingenious algorithms that increase profits or solve problems in ways that no amount of subjective experience can match. But what happens after the quants win is not always the data-driven paradise that they and their boosters expected. The more a field is run by a system, the more that system creates incentives for everyone (employees, customers, competitors) to change their behavior in perverse ways—providing more of whatever the system is designed to measure and produce, whether that actually creates any value or not. It’s a problem that can’t be solved until the quants learn a little bit from the old-fashioned ways of thinking they’ve displaced.

No matter the discipline or industry, the rise of the quants tends to happen in four stages. Stage one is what you might call pre-disruption, and it’s generally best visible in hindsight. Think about quaint dating agencies in the days before the arrival of Match.com and all the other algorithm-powered online replacements. Or think about retail in the era before floor-space management analytics helped quantify exactly which goods ought to go where. For a live example, consider Hollywood, which, for all the money it spends on market research, is still run by a small group of lavishly compensated studio executives, all of whom are well aware that the first rule of Hollywood, as memorably summed up by screenwriter William Goldman, is “Nobody knows anything.” On its face, Hollywood is ripe for quantification—there’s a huge amount of data to be mined, considering that every movie and TV show can be classified along hundreds of different axes, from stars to genre to running time, and they can all be correlated to box office receipts and other measures of profitability.

Next comes stage two, disruption. In most industries, the rise of the quants is a recent phenomenon, but in the world of finance it began back in the 1980s. The unmistakable sign of this change was hard to miss: the point at which you started getting targeted and personalized offers for credit cards and other financial services based not on the relationship you had with your local bank manager but on what the bank’s algorithms deduced about your finances and creditworthiness. Pretty soon, when you went into a branch to inquire about a loan, all they could do was punch numbers into a computer and then give you the computer’s answer.

For a present-day example of disruption, think about politics. In the 2012 election, Obama’s old-fashioned campaign operatives didn’t disappear. But they gave money and freedom to a core group of technologists in Chicago—including Harper Reed, former CTO of the Chicago-based online retailer Threadless—and allowed them to make huge decisions about fund-raising and voter targeting. Whereas earlier campaigns had tried to target segments of the population defined by geography or demographic profile, Obama’s team made the campaign granular right down to the individual level. So if a mom in Cedar Rapids was on the fence about who to vote for, or whether to vote at all, then instead of buying yet another TV ad, the Obama campaign would message one of her Facebook friends and try the much more effective personal approach…

After disruption, though, there comes at least some version of stage three: overshoot. The most common problem is that all these new systems—metrics, algorithms, automated decisionmaking processes—result in humans gaming the system in rational but often unpredictable ways. Sociologist Donald T. Campbell noted this dynamic back in the ’70s, when he articulated what’s come to be known as Campbell’s law: “The more any quantitative social indicator is used for social decision-making,” he wrote, “the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.”…

Policing is a good example, as explained by Harvard sociologist Peter Moskos in his book Cop in the Hood: My Year Policing Baltimore’s Eastern District. Most cops have a pretty good idea of what they should be doing, if their goal is public safety: reducing crime, locking up kingpins, confiscating drugs. It involves foot patrols, deep investigations, and building good relations with the community. But under statistically driven regimes, individual officers have almost no incentive to actually do that stuff. Instead, they’re all too often judged on results—specifically, arrests. (Not even convictions, just arrests: If a suspect throws away his drugs while fleeing police, the police will chase and arrest him just to get the arrest, even when they know there’s no chance of a conviction.)…

It’s increasingly clear that for smart organizations, living by numbers alone simply won’t work. That’s why they arrive at stage four: synthesis—the practice of marrying quantitative insights with old-fashioned subjective experience. Nate Silver himself has written thoughtfully about examples of this in his book, The Signal and the Noise. He cites baseball, which in the post-Moneyball era adopted a “fusion approach” that leans on both statistics and scouting. Silver credits it with delivering the Boston Red Sox’s first World Series title in 86 years. Or consider weather forecasting: The National Weather Service employs meteorologists who, understanding the dynamics of weather systems, can improve forecasts by as much as 25 percent compared with computers alone. A similar synthesis holds in economic forecasting: Adding human judgment to statistical methods makes results roughly 15 percent more accurate. And it’s even true in chess: While the best computers can now easily beat the best humans, they can in turn be beaten by humans aided by computers….

That’s what a good synthesis of big data and human intuition tends to look like. As long as the humans are in control, and understand what it is they’re controlling, we’re fine. It’s when they become slaves to the numbers that trouble breaks out. So let’s celebrate the value of disruption by data—but let’s not forget that data isn’t everything.

Entrepreneurs Shape Free Data Into Money

Supporters of such programs often see them as a local economic stimulus plan, allowing software developers and entrepreneurs in cities ranging from San Francisco to South Bend, Ind., to New York, to build new businesses based on the information they get from government websites.

When Los Angeles Mayor Eric Garcetti issued an executive directive last month to launch the city’s open-data program, he cited entrepreneurs and businesses as important beneficiaries. Open-data promotes innovation and “gives companies, individuals, and nonprofit organizations the opportunity to leverage one of government’s greatest assets: public information,” according to the Dec. 18 directive.

A poster child for the movement might be 34-year-old Matt Ehrlichman of Seattle, who last year built an online business in part using Seattle work permits, professional licenses and other home-construction information gathered up by the city’s Department of Planning and Development.

While his website is free, his business, called Porch.com, has more than 80 employees and charges a $35 monthly fee to industry professionals who want to boost the visibility of their projects on the site.

The site gathers raw public data—such as addresses for homes under renovation, what they are doing, who is doing the work and how much they are charging—and combines it with photos and other information from industry professionals and homeowners. It then creates a searchable database for users to compare ideas and costs for projects near their own neighborhood.

…Ian Kalin, director of open-data services at Socrata, a Seattle-based software firm that makes the back-end applications for many of these government open-data sites, says he’s worked with hundreds of companies that were formed around open data.

Among them is Climate Corp., a San Francisco-based firm that collects weather and yield-forecasting data to help farmers decide when and where to plant crops. Launched in 2006, the firm was acquired in October by Monsanto Co., the seed-company giant, for $930 million.

Overall, the rate of new business formation declined nationally between 2006 and 2010. But according to the latest data from the Ewing Marion Kauffman Foundation, an entrepreneurship advocacy group in Kansas City, Mo., the rate of new business formation in Seattle rose 9.41% in 2011, compared with the national average of 3.9%.

Other cities where new business formation was ahead of the national average include Chicago; Austin, Texas; Baltimore; and South Bend, Ind.—all cities that also have open-data programs. Still, how effective the ventures are in creating jobs is difficult to gauge.

One wrinkle: privacy concerns about the potential for information—such as property tax and foreclosure data—to be misused.

Some privacy advocates fear that government data that include names, addresses and other sensitive information could be used by fraudsters to target victims.”

The Emergence Of The Connected City

Glen Martin at Forbes: “If the modern city is a symbol for randomness — even chaos — the city of the near future is shaping up along opposite metaphorical lines. The urban environment is evolving rapidly, and a model is emerging that is more efficient, more functional, more — connected, in a word.

This will affect how we work, commute, and spend our leisure time. It may well influence how we relate to one another, and how we think about the world. Certainly, our lives will be augmented: better public transportation systems, quicker responses from police and fire services, more efficient energy consumption. But there could also be dystopian impacts: dwindling privacy and imperiled personal data. We could even lose some of the ferment that makes large cities such compelling places to live; chaos is stressful, but it can also be stimulating.

It will come as no surprise that converging digital technologies are driving cities toward connectedness. When conjoined, ISM band transmitters, sensors, and smart phone apps form networks that can make cities pretty darn smart — and maybe more hygienic. This latter possibility, at least, is proposed by Samrat Saha of the DCI Marketing Group in Milwaukee. Saha suggests “crowdsourcing” municipal trash pick-up via BLE modules, proximity sensors and custom mobile device apps.

“My idea is a bit tongue in cheek, but I think it shows how we can gain real efficiencies in urban settings by gathering information and relaying it via the Cloud,” Saha says. “First, you deploy sensors in garbage cans. Each can provides a rough estimate of its fill level and communicates that to a BLE 112 Module.”

As pedestrians who have downloaded custom “garbage can” apps on their BLE-capable iPhone or Android devices pass by, continues Saha, the information is collected from the module and relayed to a Cloud-hosted service for action — garbage pick-up for brimming cans, in other words. The process will also allow planners to optimize trash can placement, redeploying receptacles from areas where need is minimal to more garbage-rich environs….
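The cloud-side step of Saha's idea might look something like the sketch below: keep the most recent fill level per can, flag brimming cans for pick-up, and flag nearly empty ones as candidates for redeployment. The thresholds, field names, and can IDs are all hypothetical, and deciding on redeployment from a single reading is a deliberate oversimplification.

```python
from dataclasses import dataclass

# Hypothetical thresholds, expressed as a fraction of can capacity.
PICKUP_THRESHOLD = 0.8     # at or above this, the can counts as "brimming"
LOW_USE_THRESHOLD = 0.2    # at or below this, the can may be poorly placed


@dataclass
class CanReading:
    can_id: str
    fill_level: float  # 0.0 (empty) to 1.0 (full), as estimated by the sensor


def plan_actions(readings: list[CanReading]) -> tuple[list[str], list[str]]:
    """Return (cans needing pick-up, cans that are redeployment candidates).

    Readings are assumed to arrive in time order, so a later reading
    for the same can replaces an earlier one.
    """
    latest = {r.can_id: r.fill_level for r in readings}
    pickup = sorted(c for c, level in latest.items() if level >= PICKUP_THRESHOLD)
    redeploy = sorted(c for c, level in latest.items() if level <= LOW_USE_THRESHOLD)
    return pickup, redeploy


# Readings relayed by passing phones (made-up values).
relayed = [
    CanReading("can-A", 0.50),
    CanReading("can-B", 0.10),
    CanReading("can-A", 0.90),  # a later passer-by reports can-A is nearly full
    CanReading("can-C", 0.60),
]
pickup, redeploy = plan_actions(relayed)
```

Here `can-A` ends up on the pick-up list because its latest reading crosses the threshold, while `can-B` is flagged as a redeployment candidate.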

Garbage can connectivity has larger implications than just, well, garbage. Brett Goldstein, the former Chief Data and Information Officer for the City of Chicago and a current lecturer at the University of Chicago, says city officials found clear patterns between damaged or missing garbage cans and rat problems.

“We found areas that showed an abnormal increase in missing or broken receptacles started getting rat outbreaks around seven days later,” Goldstein said. “That’s very valuable information. If you have sensors on enough garbage cans, you could get a temporal leading edge, allowing a response before there’s a problem. In urban planning, you want to emphasize prevention, not reaction.”

Such Cloud-based app-centric systems aren’t suited only for trash receptacles, of course. Companies such as Johnson Controls are now marketing apps for smart buildings — the base component for smart cities. (Johnson’s Metasys management system, for example, feeds data to its app-based Panoptix Platform to maximize energy efficiency in buildings.) In short, instrumented cities already are emerging. Smart nodes — including augmented buildings, utilities and public service systems — are establishing connections with one another, like axon-linked neurons.

But Goldstein, who was best known in Chicago for putting tremendous quantities of the city’s data online for public access, emphasizes instrumented cities are still in their infancy, and that their successful development will depend on how well we “parent” them.

“I hesitate to refer to ‘Big Data,’ because I think it’s a terribly overused term,” Goldstein said. “But the fact remains that we can now capture huge amounts of urban data. So, to me, the biggest challenge is transitioning the fields — merging public policy with computer science into functional networks.”…”

The future of law and legislation?

prior probability: “Mike Gatto, a legislator in California, recently set up the world’s first Wiki-bill in order to enable private citizens to act as cyber-legislators and help draft an actual law. According to Assemblyman Gatto:

Government has a responsibility to listen to the people and to enable everyone to be an active part of the legislative process. That’s why I’ve created this space for you to draft real legislation. Just like a Wikipedia entry, you can see what the current draft is, and propose minor or major edits. The marketplace of ideas will decide the final draft. We’re starting with a limited topic: probate. Almost everyone will face the prospect of working through the details of a deceased loved one’s finances and estate at some point during their life. I want to hear your ideas for how to make this process less burdensome.”

What Jelly Means

Steven Johnson: “A few months ago, I found this strange white mold growing in my garden in California. I’m a novice gardener, and to make matters worse, a novice Californian, so I had no idea what these small white cells might portend for my flowers.

This is one of those odd blank spots — I used to call them Googleholes in the early days of the service — where the usual Delphic source of all knowledge comes up relatively useless. The Google algorithm doesn’t know what those white spots are, the way it knows more computational questions, like “what is the top-ranked page for ‘white mold’?” or “what is the capital of Illinois?” What I want, in this situation, is the distinction we usually draw between information and wisdom. I don’t just want to know what the white spots are; I want to know if I should be worried about them, or if they’re just a normal thing during late summer in Northern California gardens.

Now, I’m sure I know a dozen people who would be able to answer this question, but the problem is I don’t really know which people they are. But someone in my extended social network has likely experienced these white spots on their plants, or better yet, gotten rid of them. (Or, for all I know, ate them — I’m trying not to be judgmental.) There are tools out there that would help me run the social search required to find that person. I can just bulk email my entire address book with images of the mold and ask for help. I could go on Quora, or a gardening site.

But the thing is, it’s a type of question that I find myself wanting to ask a lot, and there’s something inefficient about trying to figure the exact right tool to use to ask it each time, particularly when we have seen the value of consolidating so many of our queries into a single, predictable search field at Google.

This is why I am so excited about the new app, Jelly, which launched today. …

Jelly, if you haven’t heard, is the brainchild of Biz Stone, one of Twitter’s co-founders. The service launches today with apps on iOS and Android. (Biz himself has a blog post and video, which you should check out.) I’ve known Biz since the early days of Twitter, and I’m excited to be an adviser and small investor in a company that shares so many of the values around networks and collective intelligence that I’ve been writing about since Emergence.

The thing that’s most surprising about Jelly is how fun it is to answer questions. There’s something strangely satisfying in flipping through the cards, reading questions, scanning the pictures, and looking for a place to be helpful. It’s the same broad gesture of reading, say, a Twitter feed, and pleasantly addictive in the same way, but the intent is so different. Scanning a Twitter feed while waiting for the train has the feel of “Here we are now, entertain us.” Scanning Jelly is more like: “I’m here. How can I help?””

Open Government Strategy Continues with US Currency Production API

Eric Carter in the ProgrammableWeb: “Last year, the Executive branch of the US government made huge strides in opening up government controlled data to the developer community. Projects such as the Open Data Policy and the Machine Readable Executive Order have led the US government to develop an API strategy. Today, ProgrammableWeb takes a look at another open government API: the Annual Production Figures of United States Currency API.

The US Treasury’s Bureau of Engraving and Printing (BEP) provides the dataset available through the Production Figures API. The data available consists of the number of $1, $5, $10, $20, $50, and $100 notes printed each year from 1980 to 2012. With this straightforward, seemingly basic set of data available, the question becomes: “Why is this data useful?” To answer this, one should consider the purpose of the Executive Order:

“Openness in government strengthens our democracy, promotes the delivery of efficient and effective services to the public, and contributes to economic growth. As one vital benefit of open government, making information resources easy to find, accessible, and usable can fuel entrepreneurship, innovation, and scientific discovery that improves Americans’ lives and contributes significantly to job creation.”

The API uses HTTP and can return responses in XML, JSON, or CSV data formats. As stated, the API retrieves the number of bills of a designated denomination for the desired year. For more information and code samples, visit the API docs.”
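A client for an API of this kind might be sketched as follows. The base URL, the query parameter names (`denomination`, `year`, `format`), and the JSON field `notes_printed` are placeholders invented for this example, not the real API; the API docs mentioned above remain the authority on the actual interface. The sample response is likewise made up.

```python
import json
from urllib.parse import urlencode

# Placeholder endpoint, NOT the real API address.
BASE_URL = "https://api.example.gov/currency-production"

SUPPORTED_FORMATS = {"xml", "json", "csv"}  # the three formats the article lists


def build_url(denomination: int, year: int, fmt: str = "json") -> str:
    """Build a request URL using hypothetical parameter names."""
    if fmt not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported format: {fmt}")
    query = urlencode({"denomination": denomination, "year": year, "format": fmt})
    return f"{BASE_URL}?{query}"


def notes_printed(json_body: str) -> int:
    """Pull the note count out of a JSON response with a hypothetical schema."""
    record = json.loads(json_body)
    return int(record["notes_printed"])


url = build_url(20, 2012)

# Made-up response body with a made-up count, used only to exercise the parser.
sample_body = '{"denomination": 20, "year": 2012, "notes_printed": 12345}'
count = notes_printed(sample_body)
```

Keeping URL construction separate from response parsing means the parser can be checked against saved sample responses without making network calls.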