Paper by Tom Storme et al: “In this paper, we report on a citizen science pilot project involving adolescents who digitize and assess their daily home-to-school routes in different school neighborhoods in Flanders (Belgium). As part of this pilot project, a web-based platform, called the “Bike Barometer” (“Fietsbarometer” in Dutch) was developed. We introduce the tool in this paper and summarize the insights gained from the pilot. From the official launch of the platform in March until the end of the pilot in June 2020, 1,256 adolescents from 31 schools digitized 5,657 km of roads, of which 3,750 km were evaluated for cycling friendliness and safety. The added value and potential of citizen science in general and the platform in particular are illustrated. The results offer detailed (spatial) insights into local safety conditions for Flanders and for specific school neighborhoods. The potential for mobility policy is twofold: (i) the cycling friendliness and traffic flows in school environments can be monitored over time and (ii) the platform has the potential to create local ecosystems of adolescents and teachers (both considered citizen scientists here) and policymakers. Two key pitfalls are identified as well: the need for a critical mass of citizen scientists and a minimum level of commitment required from local policymakers. By illustrating the untapped potential of citizen science, we argue that the intersection between citizen science and local policymaking in the domain of mobility deserves much more attention….(More)”.

“Co-construction” in Deliberative Democracy: Lessons from the French Citizens’ Convention for Climate

Paper by L.G. Giraudet et al: “Launched in 2019, the French Citizens’ Convention for Climate (CCC) tasked 150 randomly-chosen citizens with proposing fair and effective measures to fight climate change. This was to be fulfilled through an “innovative co-construction procedure,” involving some unspecified external input alongside that from the citizens. Did inputs from the steering bodies undermine the citizens’ accountability for the output? Did co-construction help the output resonate with the general public, as is expected from a citizens’ assembly? To answer these questions, we build on our unique experience in observing the CCC proceedings and documenting them with qualitative and quantitative data. We find that the steering bodies’ input, albeit significant, did not impair the citizens’ agency, creativity and freedom of choice. While succeeding in creating consensus among the citizens who were involved, this co-constructive approach however failed to generate significant support among the broader public. These results call for a strengthening of the commitment structure that determines how follow-up on the proposals from a citizens’ assembly should be conducted…(More)”.

How behavioral science could get people back into public libraries

Article by Talib Visram: “In October, New York City’s three public library systems announced they would permanently drop fines on late book returns. Comprised of Brooklyn, Queens, and New York public libraries, the City’s system is the largest in the country to remove fines. It’s a reversal of a long-held policy intended to ensure shelves stayed stacked, but an outdated one that many major cities, including Chicago, San Francisco, and Dallas, had already scrapped without any discernible downsides. Though a source of revenue—in 2013, for instance, Brooklyn Public Library (BPL) racked up $1.9 million in late fees—the fee system also created a barrier to library access that disproportionately touched the low-income communities that most need the resources.

That’s just one thing Brooklyn’s library system has done to try to make its services more equitable. In 2017, well before the move to eliminate fines, BPL on its own embarked on a partnership with Nudge, a behavioral science lab at the University of West Virginia, to find ways to reduce barriers to access and increase engagement with the book collections. In the first-of-its-kind collaboration, the two tested behavioral science interventions via three separate pilots, all of which led to the library’s long-term implementation of successful techniques. Those involved in the project say the steps can be translated to other library systems, though it takes serious investment of time and resources….(More)”.

Dutch cities to develop European mobility data standard

Cities Today: “Five Dutch cities – Amsterdam, Utrecht, Eindhoven, Rotterdam and The Hague – are collaborating to establish a new standard for the exchange of data between cities and shared mobility operators.

In partnership with the Dutch Ministry of Infrastructure and Water, the five cities, known as the G-5, will develop the City Data Standard – Mobility (CDS-M). The platform will allow information on mobility patterns, including the use of shared vehicles, traffic flows and parking, to be shared in compliance with Europe’s strict General Data Protection Regulation (GDPR).

So far, the working group has been focused on internal capacity-building, and members are set to delve into key thematic areas from early June.

Speaking to Cities Today, Ross Curzon-Butler, Chairperson of the City of Amsterdam’s Data Specification for Mobility Working Group and Chief Technology Officer at Dutch start-up Cargoroo, said: “The key thing is about making sure the data is accessible to cities in a way that is proportional and compliant with GDPR.

“What we have to recognise is that cities are going to ask transport firms for data. This is coming, whether we like it or not. We therefore have the impetus to make sure that the data requested is in a standardised way, and that there’s a standardised understanding of why they’re being asked for that data.”…

Curzon-Butler sees the new standard as complementing, rather than competing with, the Open Mobility Foundation’s Mobility Data Specification (MDS), which is already used in several European cities, including Lisbon….

The CDS-M consists of the “standard”, the technical design, and the “agreement”, that details which organisations are involved in data processing. The agreement framework is now under development and will be established by a working group comprising mobility operators, urban planners, data scientists, code developers, data protection officers, and security experts.

“If you’re a city, or a data processor or a transport operator and you start asking people for different data points and asking for it in different formats, and across different standards, it becomes unmanageable,” Curzon-Butler said.

“And the development time in all of these things is already high enough, so what we’re trying to do is normalise the data flow as much as possible, so that everyone in that data chain doesn’t have these huge overheads that just grow and grow, where you’re then having to manage multiple dialects and standards and trying to understand ‘who’s got what data and what are they really doing with it?’.

“And in Europe we have GDPR, which is a very serious regulation that we have to be very mindful and aware of.”

He referenced a recent case where the Dutch Data Protection Authority (DPA) fined the City of Enschede €600,000 (US$730,000) for its use of Wi-Fi sensors to measure the number of people in the city centre.

It is understood to be the first time the regulator has imposed a fine on a government body under the GDPR but the case could have implications for cities well beyond the Netherlands. Enschede is appealing the decision…(More)”

Public participation in crisis policymaking. How 30,000 Dutch citizens advised their government on relaxing COVID-19 lockdown measures

Paper by Niek Mouter et al: “Following the outbreak of COVID-19, governments took unprecedented measures to curb the spread of the virus. Public participation in decisions regarding (the relaxation of) these measures has been notably absent, despite being recommended in the literature. Here, as one of the exceptions, we report the results of 30,000 citizens advising the government on eight different possibilities for relaxing lockdown measures in the Netherlands. By making use of the novel method Participatory Value Evaluation (PVE), participants were asked to recommend which out of the eight options they prefer to be relaxed. Participants received information regarding the societal impacts of each relaxation option, such as the impact of the option on the healthcare system.

The results of the PVE informed policymakers about people’s preferences regarding (the impacts of) the relaxation options. For instance, we established that participants assign an equal value to a reduction of 100 deaths among citizens younger than 70 years and a reduction of 168 deaths among citizens older than 70 years. We show how these preferences can be used to rank options in terms of desirability. Citizens advised to relax lockdown measures, but not to the point at which the healthcare system becomes heavily overloaded. We found wide support for prioritising the re-opening of contact professions. Conversely, participants disfavoured options to relax restrictions for specific groups of citizens as they found it important that decisions lead to “unity” and not to “division”. 80% of the participants state that PVE is a good method to let citizens participate in government decision-making on relaxing lockdown measures. Participants felt that they could express a nuanced opinion, communicate arguments, and appreciated the opportunity to evaluate relaxation options in comparison to each other while being informed about the consequences of each option. This increased their awareness of the dilemmas the government faces….(More)”.
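The 100-to-168 equivalence quoted above implies a relative weight that can be used to compare options. The following is a minimal sketch of that arithmetic only, not the authors' PVE model: the option names and death counts are hypothetical, invented for illustration, and a real PVE ranking weighs many more impacts than this single trade-off.

```python
# Equivalence reported in the excerpt: avoiding 100 deaths among under-70s
# is valued the same as avoiding 168 deaths among over-70s.
UNDER_70_EQUIV = 100
OVER_70_EQUIV = 168

# Implied value of one avoided over-70 death, in under-70 units (about 0.595).
OVER_70_WEIGHT = UNDER_70_EQUIV / OVER_70_EQUIV


def weighted_deaths_avoided(under_70: float, over_70: float) -> float:
    """Express a mix of avoided deaths in under-70 equivalents."""
    return under_70 + OVER_70_WEIGHT * over_70


# Hypothetical options and numbers, invented purely for illustration.
options = {
    "option_a": {"under_70": 40, "over_70": 120},
    "option_b": {"under_70": 90, "over_70": 30},
    "option_c": {"under_70": 10, "over_70": 168},
}

# Rank options from most to least weighted deaths avoided.
ranking = sorted(
    options,
    key=lambda name: weighted_deaths_avoided(**options[name]),
    reverse=True,
)
print(ranking)
```

On this single criterion, an option that avoids 168 deaths among over-70s counts the same as one that avoids 100 deaths among under-70s, which is exactly the equivalence reported in the paper.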

Many in U.S., Western Europe Say Their Political System Needs Major Reform

Pew Research Center: “A four-nation Pew Research Center survey conducted in November and December of 2020 finds that roughly two-thirds of adults in France and the U.S., as well as about half in the United Kingdom, believe their political system needs major changes or needs to be completely reformed. Calls for significant reform are less common in Germany, where about four-in-ten express this view….

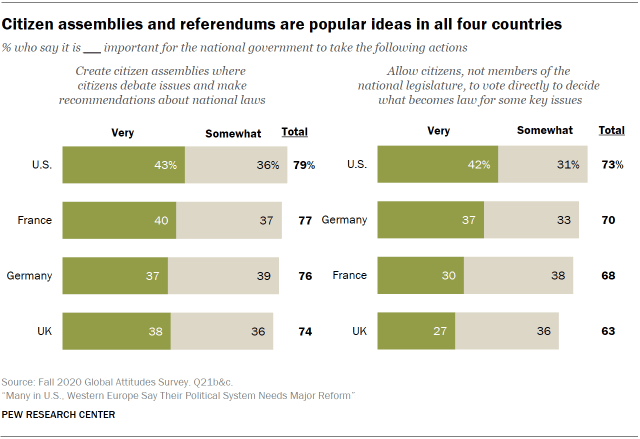

In all four countries, there is considerable interest in political reforms that would potentially allow ordinary citizens to have more power over policymaking. Citizen assemblies, or forums where citizens chosen at random debate issues of national importance and make recommendations about what should be done, are overwhelmingly popular. Around three-quarters or more in each country say it is very or somewhat important for the national government to create citizen assemblies. About four-in-ten say it’s very important. Such processes are in use nationally in France and the UK to debate climate change policy, and they have become increasingly common in nations around the world in recent years.

Citizen assemblies are popular across the ideological spectrum but are especially so among people who place themselves on the political left. Those who think their political system needs significant reform are also particularly likely to say it is important to create citizen assemblies.

There are also high levels of support for allowing citizens to vote directly to decide what becomes law for some key issues. About seven-in-ten in the U.S., Germany and France say it is important, in line with previous findings about support for direct democracy. In the UK, where crucial issues such as Scottish independence and Brexit were decided by referendum, support is somewhat lower – 63% say it is important for the government to use referendums to decide some key issues, and just 27% rate this as very important.

These are among the findings of a new Pew Research Center survey conducted from Nov. 10 to Dec. 23, 2020, among 4,069 adults in France, Germany, the UK and the U.S. This report also includes findings from 26 focus groups conducted in 2019 in the U.S. and UK….(More)”.

UK response to pandemic hampered by poor data practices

Report for the Royal Society: “The UK is well behind other countries in making use of data to have a real time understanding of the spread and economic impact of the pandemic according to Data Evaluation and Learning for Viral Epidemics (DELVE), a multi-disciplinary group convened by the Royal Society.

The report, Data Readiness: Lessons from an Emergency, highlights how data such as aggregated and anonymised mobility and payment transaction data, already gathered by companies, could be used to give a more accurate picture of the pandemic at national and local levels. That could in turn lead to improvements in evaluation and better targeting of interventions.

Maximising the value of big data at a time of crisis requires careful cooperation across the private sector, which is already gathering these data; the public sector, which can provide a base for aggregating and overseeing the correct use of the data; and researchers, who have the skills to analyse it for the public good. This work needs to be developed in accordance with data protection legislation and respect people’s concerns about data security and privacy.

The report calls on the Government to extend the powers of the Office for National Statistics to enable them to support trustworthy access to ‘happenstance’ data – data that are already gathered but not for a specific public health purpose – and for the Government to fund pathfinder projects that focus on specific policy questions such as how we nowcast economic metrics and how we better understand population movements.

Neil Lawrence, DeepMind Professor of Machine Learning at the University of Cambridge, Senior AI Fellow at The Alan Turing Institute and an author of the report, said: “The UK has talked about making better use of data for the public good, but we have had statements of good intent, rather than action. We need to plan better for national emergencies. We need to look at the National Risk Register through the lens of what data would help us to respond more effectively. We have to learn our lessons from experiences in this pandemic and be better prepared for future crises. That means doing the work now to ensure that companies, the public sector and researchers have pathfinder projects up and running to share and analyse data and help the government to make better informed decisions.”

During the pandemic, counts of the daily flow of people from one place to another between more than 3000 districts in Spain have been available at the click of a button, allowing policy makers to more effectively understand how the movement of people contributes to the spread of the virus. This was based on a collaboration between the country’s three main mobile phone operators. In France, measuring the impact of the pandemic on consumer spending on a daily and weekly scale was possible as a result of coordinated cooperation with the country’s national interbank network.

Professor Lawrence added: “Mobile phone companies might provide a huge amount of anonymised and aggregated data that would allow us a much greater understanding of how people move around, potentially spreading the virus as they go. And there is a wealth of other data, such as from transport systems. The more we understand about this pandemic, the better we can tackle it. We should be able to work together, the private and the public sectors, to harness big data for massive positive social good and do that safely and responsibly.”…(More)”

Review into bias in algorithmic decision-making

Report by the Center for Data Ethics and Innovation (CDEI) (UK): “Unfair biases, whether conscious or unconscious, can be a problem in many decision-making processes. This review considers the impact that an increasing use of algorithmic tools is having on bias in decision-making, the steps that are required to manage risks, and the opportunities that better use of data offers to enhance fairness. We have focused on the use of algorithms in significant decisions about individuals, looking across four sectors (recruitment, financial services, policing and local government), and making cross-cutting recommendations that aim to help build the right systems so that algorithms improve, rather than worsen, decision-making…(More)”.

Commission proposes measures to boost data sharing and support European data spaces

Press Release: “To better exploit the potential of ever-growing data in a trustworthy European framework, the Commission today proposes new rules on data governance. The Regulation will facilitate data sharing across the EU and between sectors to create wealth for society, increase control and trust of both citizens and companies regarding their data, and offer an alternative European model to data handling practice of major tech platforms.

The amount of data generated by public bodies, businesses and citizens is constantly growing. It is expected to multiply by five between 2018 and 2025. These new rules will allow this data to be harnessed and will pave the way for sectoral European data spaces to benefit society, citizens and companies. In the Commission’s data strategy of February this year, nine such data spaces have been proposed, ranging from industry to energy, and from health to the European Green Deal. They will, for example, contribute to the green transition by improving the management of energy consumption, make delivery of personalised medicine a reality, and facilitate access to public services.

The Regulation includes:

- A number of measures to increase trust in data sharing, as the lack of trust is currently a major obstacle and results in high costs.

- New EU rules on neutrality to allow novel data intermediaries to function as trustworthy organisers of data sharing.

- Measures to facilitate the reuse of certain data held by the public sector. For example, the reuse of health data could advance research to find cures for rare or chronic diseases.

- Means to give Europeans control on the use of the data they generate, by making it easier and safer for companies and individuals to voluntarily make their data available for the wider common good under clear conditions….(More)”.

Geospatial Data Market Study

Study by Frontier Economics: “Frontier Economics was commissioned by the Geospatial Commission to carry out a detailed economic study of the size, features and characteristics of the UK geospatial data market. The Geospatial Commission was established within the Cabinet Office in 2018, as an independent, expert committee responsible for setting the UK’s Geospatial Strategy and coordinating public sector geospatial activity. The Geospatial Commission’s aim is to unlock the significant economic, social and environmental opportunities offered by location data. The UK’s Geospatial Strategy (2020) sets out how the UK can unlock the full power of location data and take advantage of the significant economic, social and environmental opportunities offered by location data….

Like many other forms of data, the value of geospatial data is not limited to the data creator or data user. Value from using geospatial data can be subdivided into several different categories, based on who the value accrues to:

Direct use value: where value accrues to users of geospatial data. This could include government using geospatial data to better manage public assets like roadways.

Indirect use value: where value is also derived by indirect beneficiaries who interact with direct users. This could include users of the public assets who benefit from better public service provision.

Spillover use value: value that accrues to others who are not a direct data user or indirect beneficiary. This could, for example, include lower levels of emissions due to improved management of the road network by government. The benefits of lower emissions are felt by all of society, even those who do not use the road network.

As the value from geospatial data does not always accrue to the direct user of the data, there is a risk of underinvestment in geospatial technology and services. Our £6 billion estimate of turnover for a subset of geospatial firms in 2018 does not take account of these wider economic benefits that “spill over” across the UK economy, and generate additional value. As such, the value that geospatial data delivers is likely to be significantly higher than we have estimated and is therefore an area for potential future investment….(More)”.