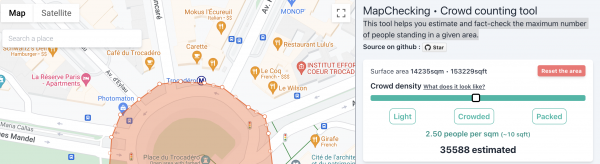

About: “This tool helps you estimate and fact-check the maximum number of people standing in a given area…(More)”

OECD Report: “Governments worldwide are increasingly adopting behavioural science methodologies to address ‘sludge’ – the unjustified frictions impeding people’s access to government services and exacerbating psychological burdens. Sludge audits, grounded in behavioural science, provide a structured approach for identifying, quantifying, and preventing sludge in public services and government processes. This document delineates Good Practice Principles, derived from ten case studies conducted during the International Sludge Academy, aimed at promoting the integration of sludge audit methodologies into public governance and service design. By enhancing government efficiency and bolstering public trust in government, these principles contribute to the broader agenda on administrative simplification, digital services, and public sector innovation…(More)”.

Report by the Tony Blair Institute: “The new government will need to lean in to support the diffusion of AI-era tech across the economy by adopting a pro-innovation, pro-technology stance, as advocated by the Tony Blair Institute for Global Change in our paper Accelerating the Future: Industrial Strategy in the Era of AI.

AI-era tech can also transform public services, creating a smaller, lower-cost state that delivers better outcomes for citizens. New TBI analysis suggests:

The four public-sector use cases outlined above could create substantial fiscal savings for the new government worth £12 billion a year (0.4 per cent of GDP) by the end of this parliamentary term, £37 billion (1.3 per cent of GDP) by the end of the next, and more than £40 billion (1.5 per cent of GDP) by 2040…(More)”.

European Union Report: “…considers how to approach citizen engagement for the EU missions. Engagement and social dialogue should aim to ensure that innovation is human-centred and that missions maintain wide public legitimacy. But citizen engagement is complex and significantly changes the traditional responsibilities of the research and innovation community and calls for new capabilities. This report provides insights to build these capabilities and explores effective ways to help citizens understand their role within the EU missions, showing how to engage them throughout the various stages of implementation. The report considers both the challenges and administrative burdens of citizen engagement and sets out how to overcome them, as well as demonstrating the wider opportunity of ‘double additionality’, where citizen engagement methods serve to fundamentally transform an entire research and innovation portfolio…(More)”.

Report by Thomson Reuters: “First, the productivity benefits we have been promised are now becoming more apparent. As AI adoption has become widespread, professionals can more tangibly tell us about how they will use this transformative technology and the greater efficiency and value it will provide. The most common use cases for AI-powered technology thus far include drafting documents, summarizing information, and performing basic research. Second, there’s a tremendous sense of excitement about the value that new AI-powered technology can bring to the day-to-day lives of the professionals we surveyed. While more than half of professionals said they’re most excited about the benefits that new AI-powered technologies can bring in terms of time-savings, nearly 40% said the new value that will be brought is what excites them the most.

This report highlights how AI could free up that precious commodity of time. As with the adoption of all new technology, change appears moderate and the impact incremental. And yet, within the year, our respondents predicted that for professionals, AI could free up as much as four hours a week. What will they do with 200 extra hours of time a year? They might reinvest that time in strategic work, innovation, and professional development, which could help companies retain or advance their competitive advantage. Imagine the broader impact on the economy and GDP from this increased efficiency. For US lawyers alone, that is a combined 266 million hours of increased productivity. That could translate into $100,000 in new, billable time per lawyer each year, based on current average rates – with similar productivity gains projected across various professions. The time saved can also be reinvested in professional development, nurturing work-life balance, and focusing on wellness and mental health. Moreover, the economic and organizational benefits of these time-savings are substantial. They could lead to reduced operational costs and higher efficiency, while enabling organizations to redirect resources toward strategic initiatives, fostering growth and competitiveness.

Finally, it’s important to acknowledge there’s still a healthy amount of reticence among professionals to fully adopt AI. Respondents are concerned primarily with the accuracy of outputs, and almost two-thirds of respondents agreed that data security is a vital component of responsible use. These concerns aren’t trivial, and they warrant attention as we navigate this new era of technology. While AI can provide tremendous productivity benefits to professionals and generate greater value for businesses, that’s only possible if we build and use this technology responsibly…(More)”.
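The time-savings figures in the Thomson Reuters excerpt are linked by simple arithmetic. Below is a minimal back-of-envelope sketch, assuming a 50-week working year (the excerpt does not state one), that recovers the 200-hour figure and backs out the US lawyer headcount and average hourly rate that the other two quoted figures imply. Those two derived values are quotients of the quoted numbers, not figures reported by Thomson Reuters.

```python
# Back-of-envelope check of the time-savings figures quoted in the
# Thomson Reuters excerpt. WORKING_WEEKS is an assumption; the excerpt
# states only "four hours a week" and "200 extra hours ... a year".

HOURS_SAVED_PER_WEEK = 4             # from the excerpt
WORKING_WEEKS = 50                   # assumed, not stated in the excerpt
US_LAWYER_HOURS_TOTAL = 266_000_000  # "a combined 266 million hours"
BILLABLE_VALUE_PER_LAWYER = 100_000  # "$100,000 in new, billable time"


def annual_hours_saved(per_week: float, weeks: int) -> float:
    """Hours freed per professional per year."""
    return per_week * weeks


def implied_headcount(total_hours: float, hours_each: float) -> float:
    """Number of professionals implied by a combined hours total."""
    return total_hours / hours_each


def implied_hourly_rate(value: float, hours_each: float) -> float:
    """Average billing rate implied by a per-person dollar figure."""
    return value / hours_each


if __name__ == "__main__":
    hours = annual_hours_saved(HOURS_SAVED_PER_WEEK, WORKING_WEEKS)
    headcount = implied_headcount(US_LAWYER_HOURS_TOTAL, hours)
    rate = implied_hourly_rate(BILLABLE_VALUE_PER_LAWYER, hours)
    print(f"Hours saved per year: {hours:.0f}")
    print(f"Implied number of US lawyers: {headcount:,.0f}")
    print(f"Implied average hourly rate: ${rate:,.0f}")
```

Run as a script, it prints 200 hours per year, an implied 1,330,000 US lawyers and an implied average rate of $500 an hour. A different working-year assumption would shift all three numbers.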

Paper by Adam Meylan-Stevenson, Ben Hawes, and Matt Ryan: “This paper explores the use of online tools to improve democratic participation and deliberation. These tools offer new opportunities for inclusive communication and networking, specifically targeting the participation of diverse groups in decision-making processes. It summarises recent research and published reports by users of these tools and categorises the tools according to functions and objectives. It also draws on testimony and experiences recorded in interviews with some users of these tools in public sector and civil society organisations internationally.

The objective is to introduce online deliberation tools to a wider audience, including benefits, limitations and potential disadvantages, in the immediate context of research on democratic deliberation. We identify limitations of tools and of the context and markets in which online deliberation tools are currently being developed. The paper suggests that fostering a collaborative approach among technology developers and democratic practitioners might improve opportunities for funding and continual optimisation that have been used successfully in other online application sectors…(More)”.

Report by Mark Kaigwa et al: “The ‘Dada Disinfo: Technology-Facilitated Gender-Based Violence (TFGBV) Report,’ prepared by Nendo and Pollicy, outlines the pervasive issue of TFGBV in Kenya’s vibrant but volatile social media ecosystem. The report draws on extensive research, including social media analytics, surveys, and in-depth interviews with content creators, to shed light on the manifestations, perpetrators, and impacts of TFGBV. The project, supported by USAID and conducted in collaboration with Pollicy, integrates advanced analytics to offer insights and potential solutions to mitigate online gender-based violence in Kenya…(More)”.

United Nations: “Technological advances have revolutionized communications, connecting people on a previously unthinkable scale. They have supported communities in times of crisis, elevated marginalized voices and helped mobilize global movements for racial justice and gender equality.

Yet these same advances have enabled the spread of misinformation, disinformation and hate speech at an unprecedented volume, velocity and virality, risking the integrity of the information ecosystem.

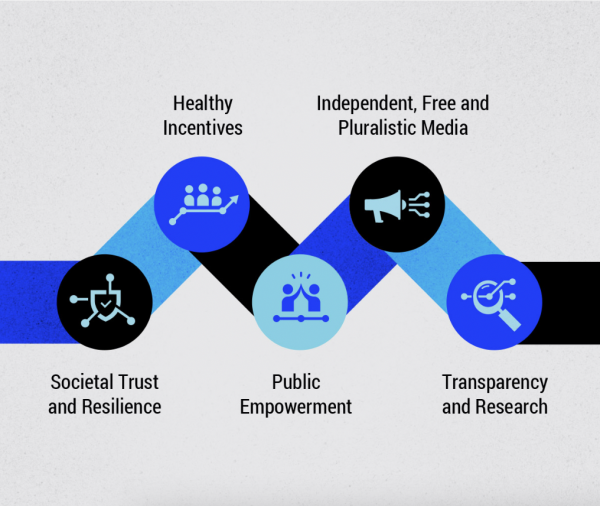

New and escalating risks stemming from leaps in AI technologies have made strengthening information integrity one of the urgent tasks of our time.

This clear and present global threat demands coordinated international action.

The United Nations Global Principles for Information Integrity show us another future is possible…(More)”

Report by Search for Common Ground (Search): “… has specialized in approaches that leverage media such as radio and television to reach target audiences. In recent years, the organization has been more intentional about digital and online spaces, delving deeper into the realm of digital peacebuilding. Search has since implemented a number of digital peacebuilding projects.

Search wanted to understand if and how its initiatives were able to catalyze constructive agency among social media users, away from a space of apathy, self-doubt, or fear to incite inclusion, belonging, empathy, mutual understanding, and trust. This report examines these hypotheses using primary data from former and current participants in Search’s digital peacebuilding initiatives…(More)”

Report by Michael Lawrence and Megan Shipman: “Polycrisis analysis reveals the complex and systemic nature of the world’s problems, but it can also help us pursue ‘positive pathways’ to better futures. This report outlines the sorts of systems changes required to avoid, mitigate, and navigate through polycrisis given the dual nature of crises as harmful disasters and opportunities for transformation. It then examines the progression between three prominent approaches to systems change—leverage points, tipping points, and multi-systemic stability landscapes—by highlighting their advances and limitations. The report concludes that new tools like Cross-Impact Balance analysis can build on these approaches to help navigate through polycrisis by identifying stable and desirable multi-systemic equilibria…(More)”