Extract from ‘Geek Sublime: Writing Fiction, Coding Software’ by Vikram Chandra: “A geek hunched over a laptop tapping frantically at the keyboard, neon-bright lines of green code sliding up the screen – the programmer at work is now a familiar staple of popular entertainment. The clipped shorthand and digits of programming languages are familiar even to civilians, if only as runic incantations charged with world-changing power.

Computing has transformed all our lives but the processes and cultures that produce software remain largely opaque, alien, unknown. This is certainly true within my own professional community of fiction writers; whenever I tell one of my fellow authors that I supported myself through the writing of my first novel by working as a programmer and a computer consultant, I evoke a response that mixes bemusement, bafflement, and a touch of awe, as if I had just said that I could levitate.

Most of the artists I know – painters, film-makers, actors, poets – seem to regard programming as an esoteric scientific discipline; they are keenly aware of its cultural mystique, envious of its potential profitability and eager to extract metaphors, imagery, and dramatic possibility from its history, but coding may as well be nuclear physics as far as relevance to their own daily practice is concerned.

Many programmers, on the other hand, regard themselves as artists. Since programmers create complex objects, and care not just about function but also about beauty, they are just like painters and sculptors. The best-known assertion of this notion is the 2003 essay “Hackers and Painters” by programmer and venture capitalist Paul Graham. “Of all the different types of people I’ve known, hackers and painters are among the most alike,” writes Graham. “What hackers and painters have in common is that they’re both makers. Along with composers, architects, and writers, what hackers and painters are trying to do is make good things.”

According to Graham, the iterative processes of programming – write, debug (discover and remove bugs, which are coding errors), rewrite, experiment, debug, rewrite – exactly duplicate the methods of artists. “The way to create something beautiful is often to make subtle tweaks to something that already exists, or to combine existing ideas in a slightly new way,” he writes. “You should figure out programs as you’re writing them, just as writers and painters and architects do.”

Attention to detail further marks good hackers with artist-like passion, he argues. “All those unseen details [in a Leonardo da Vinci painting] combine to produce something that’s just stunning, like a thousand barely audible voices all singing in tune. Great software, likewise, requires a fanatical devotion to beauty. If you look inside good software, you find that parts no one is ever supposed to see are beautiful too.”

This desire to equate art and programming has a lengthy pedigree. In 1972, famed computer scientist Butler Lampson published an editorial titled “Programmers as Authors” that began: “Creative endeavour varies greatly in the amount of overhead (ie money, manpower and organisation) associated with a project which calls for a given amount of creative work. At one extreme is the activity of an aircraft designer, at the other that of a poet. The art of programming currently falls much closer to the former than the latter. I believe, however, that this situation is likely to change considerably in the next decade.”

A Task-Fit Model of Crowdsourcing: Finding the Right Crowdsourcing Approach to Fit the Task

Boston's Building a Synergy Between City Hall & Startups

Gillis Bernard at BostInno: “Boston’s local government and startup scene want to do more than peacefully co-exist. They want to co-create. The people perhaps credited for contributing the most buzz to this trend are those behind relatively new parking ticket app TicketZen. Cort Johnson, along with a few others from Terrible Labs, a Web and mobile app design consultancy in Chinatown, came up with the idea for the app after spotting a tweet from one of Boston’s trademark entrepreneurs. A few months back, ex-KAYAK CTO (and Blade co-founder) Paul English sent out a 140-character message calling for an easy, instantaneous payment solution for parking tickets, Johnson told BostInno.

The idea was that in the time it takes for Boston’s enforcement office to process a parking ticket, its recipient has already forgotten his or her frustration or misplaced the bright orange slip, thus creating a situation in which both parties lose: the local government’s collection process is held up and the recipient is forced to pay a larger fine for the delay.

With the problem posed and the spark lit, the Terrible Labs team took to building TicketZen, an app which allows people to scan their tickets and immediately send validation to City Hall to kick off the process.

“When we first came up with the prototype, [City Hall was] really excited and worked to get it launched in Boston first,” said Johnson. “But we have built a bunch of integrations for major cities where most of the parking tickets are issued, which will launch early this year.”

But in order to even get the app up-and-running, Terrible Labs needed to work with some local government representatives – namely, Chris Osgood and Nigel Jacob of the Mayor’s Office of New Urban Mechanics….

Since its inception in 2010, the City Hall off-shoot has worked with all kinds of Boston citizens to create civic-facing innovations that would be helpful to the city at large.

For example, a group of mothers with children at Boston Public Schools approached New Urban Mechanics to create an app that shares when the school bus will arrive, similar to that of the MBTA’s, which shows upcoming train times. The nonprofit then arranged a partnership with Vermonster LLC, a software application development firm in Downtown Boston to create the Where’s My School Bus app.

“There’s a whole host of different backgrounds, from undergrad students to parents, who would never consider themselves to be entrepreneurs or innovators originally … There are just so many talented, driven and motivated folks that would likely have a similar interest in doing work in the civic space. The challenge is to scale that beyond what’s currently out there,” shared Osgood. “We’re asking, ‘How can City Hall do a better job to support innovators?’”

Of course, District Hall was created for this very purpose – supporting creatives and entrepreneurs by providing them a perpetually open door and an event space. Additionally, there have been a number of events geared toward civic innovation within the past few months targeting both entrepreneurs and government.

The former mayor Thomas Menino led the charge in opening the Office of Business Development, which features a sleek new website and focuses on providing entrepreneurs and existing businesses with access to financial and technical resources. Further, a number of organizations collaborated in early December 2013 to host a free-to-register event dubbed MassDOT Visualizing Transportation Hackathon to help generate ideas for improving public transit from the next generation’s entrepreneurs; just this month, the Venture Café and the Cambridge Innovation Center hosted Innovation and the City, a conference uniting leading architects, urban planners, educators and business leaders from different cities around the U.S. to speak to the changing landscape of civic development.”

Civic Tech Forecast: 2014

Laura Dyson from Code for America: “Last year was a big year for civic technology and government innovation, and if last week’s Municipal Innovation discussion was any indication, 2014 promises to be even bigger. More than sixty civic innovators from both inside and outside of government gathered to hear three leading civic tech experts share their “Top Five” list of civic tech trends from 2013, and predictions for what’s to come in 2014. From responsive web design to overcoming leadership change, guest speakers Luke Fretwell, Juan Pablo Velez, and Alissa Black covered both challenges and opportunities. And the audience had a few predictions of their own. Highlights included:

Mark Leech, Application Development Manager, City of Albuquerque: “Regionalization will allow smaller communities to participate and act as a force multiplier for them.”

Rebecca Williams, Policy Analyst, Sunlight Foundation: “Open data policy (law and implementation) will become more connected to traditional forms of governance, like public records and town hall meetings.”

Rick Dietz, IT Director, City of Bloomington, Ind.: “I think governments will need to collaborate directly more on open source development, particularly on enterprise scale software systems — not just civic apps.”

Kristina Ng, Office of Financial Empowerment, City and County of San Francisco: “I’m excited about the growing community of innovative government workers.”

Hillary Hartley, Presidential Innovation Fellow: “We’ll need to address sustainability and revenue opportunities. Consulting work can only go so far; we must figure out how to empower civic tech companies to actually make money.”

An informal poll of the audience showed that roughly 96 percent of the group was feeling optimistic about the coming year for civic innovation. What’s your civic tech forecast for 2014? Read on to hear what guest speakers Luke Fretwell, Juan Pablo Velez, and Alissa Black had to say, and then let us know how you’re feeling about 2014 by tweeting at @codeforamerica.”

Big Data, Privacy, and the Public Good

Forthcoming book and website by Julia Lane, Victoria Stodden, Stefan Bender, and Helen Nissenbaum (editors): “The overarching goal of the book is to identify ways in which vast new sets of data on human beings can be collected, integrated, and analysed to improve evidence based decision making while protecting confidentiality. …

Massive amounts of new data on human beings can now be accessed and analyzed. Much has been made of the many uses of such data for pragmatic purposes, including selling goods and services, winning political campaigns, and identifying possible terrorists. Yet “big data” can also be harnessed to serve the public good: scientists can use new forms of data to do research that improves the lives of human beings, federal, state and local governments can use data to improve services and reduce taxpayer costs and public organizations can use information to advocate for public causes.

Much has also been made of the privacy and confidentiality issues associated with access. A survey of statisticians at the 2013 Joint Statistical Meeting found that the majority thought consumers should worry about privacy issues, and that an ethical framework should be in place to guide data scientists. Yet there are many unanswered questions. What are the ethical and legal requirements for scientists and government officials seeking to serve the public good without harming individual citizens? What are the rules of engagement? What are the best ways to provide access while protecting confidentiality? Are there reasonable mechanisms to compensate citizens for privacy loss?

The goal of this book is to answer some of these questions. The book’s authors paint an intellectual landscape that includes the legal, economic and statistical context necessary to frame the many privacy issues, including the value to the public of data access. The authors also identify core practical approaches that use new technologies to simultaneously maximize the utility of data access while minimizing information risk. As is appropriate for such a new and evolving field, each chapter also identifies important questions that require future research.

The work in this book is also intended to be accessible to an audience broader than the academy. In addition to informing the public, we hope that the book will be useful to people trying to provide data access but protect confidentiality in their roles as data custodians for federal, state and local agencies, or decision makers on institutional review boards.”

Visual Insights: A Practical Guide to Making Sense of Data

New book by Katy Börner and David E. Polley: “In the age of Big Data, the tools of information visualization offer us a macroscope to help us make sense of the avalanche of data available on every subject. This book offers a gentle introduction to the design of insightful information visualizations. It is the only book on the subject that teaches nonprogrammers how to use open code and open data to design insightful visualizations. Readers will learn to apply advanced data mining and visualization techniques to make sense of temporal, geospatial, topical, and network data.

Visual Insights will be an essential resource on basic information visualization techniques for scholars in many fields, students, designers, or anyone who works with data.”

Also check out the Information Visualization MOOC at http://ivmooc.cns.iu.edu/

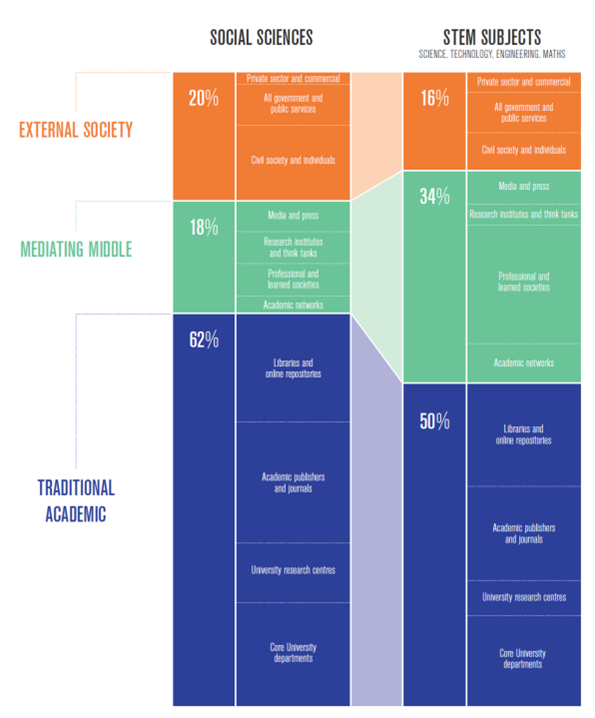

The Impact of the Social Sciences

New book: The Impact of the Social Sciences: How Academics and Their Research Make a Difference by Simon Bastow, Jane Tinkler and Patrick Dunleavy.

The three-year Impact of Social Sciences Project has culminated in a monograph published by SAGE. The book presents thorough analysis of how academic research in the social sciences achieves public policy impacts, contributes to economic prosperity, and informs public understanding of policy issues as well as economic and social changes. This book is essential reading for academics, researchers, university administrators, government and private funders, and anyone interested in the global conversation about joining up and increasing the societal value and impact of social science knowledge and research.

Resources:

- View the data visualisations that appear in the book here.

- Browse our Living Bibliography with links to further resources.

- Research Design and Methods Appendix [PDF]

- “Assessing the Impacts of Academic Social Science Research: Modelling the economic impact on the UK economy of UK-based academic social science research” [PDF] A report prepared for the LSE Public Policy Group by Cambridge Econometrics.

Research Blogs:

- The contemporary social sciences are now converging strongly with STEM disciplines in the study of ‘human-dominated systems’ and ‘human-influenced systems’ by Patrick Dunleavy, Simon Bastow and Jane Tinkler.

It’s the Neoliberalism, Stupid: Why instrumentalist arguments for Open Access, Open Data, and Open Science are not enough.

Eric Kansa at LSE Blog: “…However, I’m increasingly convinced that advocating for openness in research (or government) isn’t nearly enough. There’s been too much of an instrumentalist justification for open data and open access. Many advocates talk about how it will cut costs and speed up research and innovation. They also argue that it will make research more “reproducible” and transparent so interpretations can be better vetted by the wider community. Advocates for openness, particularly in open government, also talk about the wonderful commercial opportunities that will come from freeing research…

These are all very big policy issues, but they need to be asked if the Open Movement really stands for reform and not just a further expansion and entrenchment of Neoliberalism. I’m using the term “Neoliberalism” because it resonates as a convenient label for describing how and why so many things seem to suck in Academia. Exploding student debt, vanishing job security, increasing compensation for top administrators, expanding bureaucracy and committee work, corporate management methodologies (Taylorism), and intensified competition for ever-shrinking public funding all fall under the general rubric of Neoliberalism. Neoliberal universities primarily serve the needs of commerce. They need to churn out technically skilled human resources (made desperate for any work by high loads of debt) and easily monetized technical advancements….

“Big Data,” “Data Science,” and “Open Data” are now hot topics at universities. Investments are flowing into dedicated centers and programs to establish institutional leadership in all things related to data. I welcome the new Data Science effort at UC Berkeley to explore how to make research data professionalism fit into the academic reward systems. That sounds great! But will these new data professionals have any real autonomy in shaping how they conduct their research and build their careers? Or will they simply be part of an expanding class of harried and contingent employees- hired and fired through the whims of creative destruction fueled by the latest corporate-academic hype-cycle?

Researchers, including #AltAcs and “data professionals”, need a large measure of freedom. Miriam Posner’s discussion about the career and autonomy limits of Alt-academic-hood helps highlight these issues. Unfortunately, there’s only one area where innovation and failure seem survivable, and that’s the world of the start-up. I’ve noticed how the “Entrepreneurial Spirit” gets celebrated lots in this space. I’m guilty of basking in it myself (10 years as a quasi-independent #altAc in a nonprofit I co-founded!).

But in the current Neoliberal setting, being an entrepreneur requires a singular focus on monetizing innovation. PeerJ and Figshare are nice, since they have business models that are less “evil” than Elsevier’s. But we need to stop fooling ourselves that the only institutions and programs that we can and should sustain are the ones that can turn a profit. For every PeerJ or Figshare (and these are ultimately just as dependent on continued public financing of research as any grant-driven project), we also need more innovative organizations like the Internet Archive, wholly dedicated to the public good and not the relentless pressure to commoditize everything (especially their patrons’ privacy). We need to be much more critical about the kinds of programs, organizations, and financing strategies we (as a society) can support. I raised the political economy of sustainability issue at a recent ThatCamp and hope to see more discussion.

In reality so many of the Academy’s dysfunctions are driven by our new Gilded Age’s artificial scarcity of money. With wealth concentrated in so few hands, it is very hard to finance risk taking and entrepreneurialism in the scholarly community, especially to finance any form of entrepreneurialism that does not turn a profit in a year or two.

Open Access and Open Data would make so much more of a difference if we had the same kind of dynamism in the academic and nonprofit sector as we have in the for-profit start-up sector. After all, Open Access and Open Data can be key enablers to allow much broader participation in research and education. However, broader participation still needs to be financed: you cannot eat an open access publication. We cannot gloss over this key issue.

We need more diverse institutional forms so that researchers can find (or found) the kinds of organizations that best channel their passions into contributions that enrich us all. We need more diverse sources of financing (new foundations, better financed Kickstarters) to connect innovative ideas with the capital needed to see them implemented. Such institutional reforms will make life in the research community much more livable, creative, and dynamic. It would give researchers more options for diverse and varied career trajectories (for-profit or not-for-profit) suited to their interests and contributions.

Making the case to reinvest in the public good will require a long, hard slog. It will be much harder than the campaign for Open Access and Open Data because it will mean contesting Neoliberal ideologies and constituencies that are deeply entrenched in our institutions. However, the constituencies harmed by Neoliberalism, particularly the student community now burdened by over $1 trillion in debt and the middle class more generally, are much larger and very much aware that something is badly amiss. As we celebrate the impressive strides made by the Open Movement in the past year, it’s time we broaden our goals to tackle the needs for wider reform in the financing and organization of research and education.

This post originally appeared on Digging Digitally and is reposted under a CC-BY license.”

Big Data’s Dangerous New Era of Discrimination

Michael Schrage in HBR blog: “Congratulations. You bought into Big Data and it’s paying off Big Time. You slice, dice, parse and process every screen-stroke, clickstream, Like, tweet and touch point that matters to your enterprise. You now know exactly who your best — and worst — customers, clients, employees and partners are. Knowledge is power. But what kind of power does all that knowledge buy?

Big Data creates Big Dilemmas. Greater knowledge of customers creates new potential and power to discriminate. Big Data — and its associated analytics — dramatically increase both the dimensionality and degrees of freedom for detailed discrimination. So where, in your corporate culture and strategy, does value-added personalization and segmentation end and harmful discrimination begin?

Let’s say, for example, that your segmentation data tells you the following:

Your most profitable customers by far are single women between the ages of 34 and 55 closely followed by “happily married” women with at least one child. Divorced women are slightly more profitable than “never marrieds.” Gay males — single and in relationships — are also disproportionately profitable. The “sweet spot” is urban and 28 to 50. These segments collectively account for roughly two-thirds of your profitability. (Unexpected factoid: Your most profitable customers are overwhelmingly Amazon Prime subscribers. What might that mean?)

Going more granular, as Big Data does, offers even sharper ethno-geographic insight into customer behavior and influence:

- Single Asian, Hispanic, and African-American women with urban post codes are most likely to complain about product and service quality to the company. Asian and Hispanic complainers happy with resolution/refund tend to be in the top quintile of profitability. African-American women do not.

- Suburban Caucasian mothers are most likely to use social media to share their complaints, followed closely by Asian and Hispanic mothers. But if resolved early, they’ll promote the firm’s responsiveness online.

- Gay urban males receiving special discounts and promotions are the most effective at driving traffic to your sites.

My point here is that these data are explicit, compelling and undeniable. But how should sophisticated marketers and merchandisers use them?

Campaigns, promotions and loyalty programs targeting women and gay males seem obvious. But should Asian, Hispanic and white females enjoy preferential treatment over African-American women when resolving complaints? After all, they tend to be both more profitable and measurably more willing to effectively use social media. Does it make more marketing sense encouraging African-American female customers to become more social media savvy? Or are resources better invested in getting more from one’s best customers? Similarly, how much effort and ingenuity should go into making more gay male customers better social media evangelists? What kinds of offers and promotions could go viral on their networks?…

Of course, the difference between price discrimination and discrimination positively correlated with gender, ethnicity, geography, class, personality and/or technological fluency is vanishingly small. Indeed, the entire epistemological underpinning of Big Data for business is that it cost-effectively makes informed segmentation and personalization possible…..

But the main source of concern won’t be privacy, per se — it will be whether and how companies and organizations like your own use Big Data analytics to justify their segmentation/personalization/discrimination strategies. The more effective Big Data analytics are in profitably segmenting and serving customers, the more likely those algorithms will be audited by regulators or litigators.

Tomorrow’s Big Data challenge isn’t technical; it’s whether managements have algorithms and analytics that are both fairly transparent and transparently fair. Big Data champions and practitioners had better be discriminating about how discriminating they want to be.”

Report “Big and open data in Europe: A growth engine or a missed opportunity?”

Press Release: “Big data and open data are not just trendy issues, they are the concern of the government institutions at the highest level. On January 29th, 2014 a Conference concerning Big & Open Data in Europe 2020 was held in the European Parliament.

Questions were asked and discussed, such as: Is Big & Open Data a truly transformative phenomenon or just ‘hot air’? Does it matter for Europe? How big is the economic potential of Big and Open Data for Europe till 2020? How might each of the 28 Member States benefit from it?…

The conference complemented a research project by demosEUROPA – Centre for European Strategy on Big and Open Data in Europe that aims at fostering and facilitating policy debate on the socioeconomic impact of data. The key outcome of the project, a pan-European macroeconomic study titled “Big and open data In Europe: A growth engine or a missed opportunity?” carried out by the Warsaw Institute for Economic Studies (WISE) was presented.

We have the pleasure to be one of the first to present some of the findings of the report and offer the report for download.

The report analyses how these technologies could influence various aspects of European society, their substantial, long-term impact on our wealth and quality of life, and the new developmental challenges for the EU as a whole, as well as for its member states and their regions.

You will learn from the report:

– the resulting economic gains of business applications of big data

– how to structure big data to move from Big Trouble to Big Value

– the costs and benefits of opening data to holders

– 3 challenges that Europeans face with respect to big and open data

– key areas, growth opportunities and challenges for big and open data in Europe, by region.

The study also elaborates on the key principle of open data philosophy, which is open by default.

Europe by 2020. What will happen?

The report contains a prognosis for the 28 EU countries on the impact of big and open data by 2020, the additional output it will generate, and how it will affect trade, health, manufacturing, information and communication, finance & insurance and public administration in different regions. It foresees that the EU economy will grow by 1.9% by 2020 thanks to big and open data and describes the increase in the general GDP level by country and sector.

One of the many interesting findings of the report is that the positive impact of the data revolution will be felt more acutely in Northern Europe, while most of the New Member States and Southern European economies will benefit significantly less, with two notable exceptions being the Czech Republic and Poland. If you would like to have first-hand up-to-date information about the impact of big and open data on the future of Europe – download the report.”