Wired: “Twenty-five years on from the web’s inception, its creator has urged the public to re-engage with its original design: a decentralised internet that, at its very core, remains open to all.

Speaking with Wired editor David Rowan at an event launching the magazine’s March issue, Tim Berners-Lee said that although part of this is about keeping an eye on for-profit internet monopolies such as search engines and social networks, the greatest danger is the emergence of a balkanised web.

“I want a web that’s open, works internationally, works as well as possible and is not nation-based,” Berners-Lee told the audience… “What I don’t want is a web where the Brazilian government has every social network’s data stored on servers on Brazilian soil. That would make it so difficult to set one up.”

It’s the role of governments, startups and journalists to keep that conversation at the fore, he added, because the pace of change is not slowing — it’s going faster than ever before. For his part Berners-Lee drives the issue through his work at the Open Data Institute, World Wide Web Consortium and World Wide Web Foundation, but also as an MIT professor whose students are “building new architectures for the web where it’s decentralised”. On the issue of monopolies, Berners-Lee did say it’s concerning to be “reliant on big companies, and one big server”, something that stalls innovation, but that competition has historically resolved these issues and will continue to do so.

The kind of balkanised web he spoke about, as typified by Brazil’s home-soil servers argument or Iran’s emerging intranet, is partially being driven by revelations of NSA and GCHQ mass surveillance. The distrust that it has brewed, from a political level right down to the threat of self-censorship among ordinary citizens, threatens an open web and is, said Berners-Lee, a greater threat than censorship. Knowing the NSA may be breaking commercial encryption services could result in the emergence of more networks like China’s Great Firewall, to “protect” citizens. This is why we need a bit of anti-establishment push back, alluded to by Berners-Lee.”

"Natural Cities" Emerge from Social Media Location Data

Emerging Technology From the arXiv: “Nobody agrees on how to define a city. But the emergence of “natural cities” from social media data sets may change that, say computational geographers…

A city is a large, permanent human settlement. But try and define it more carefully and you’ll soon run into trouble. A settlement that qualifies as a city in Sweden may not qualify in China, for example. And the reasons why one settlement is classified as a town while another as a city can sometimes seem almost arbitrary.

City planners know this problem well. They tend to define cities by administrative, legal or even historical boundaries that have little logic to them. Indeed, the same city can sometimes be defined in various different ways.

That causes all kinds of problems from counting the total population to working out who pays for the upkeep of the place. Which definition do you use?

Now help may be at hand thanks to the work of Bin Jiang and Yufan Miao at the University of Gävle in Sweden. These guys have found a way to use people’s location recorded by social media to define the boundaries of so-called natural cities which have a close resemblance to real cities in the US.

Jiang and Miao began with a dataset from the Brightkite social network, which was active between 2008 and 2010. The site encouraged users to log in with their location details so that they could see other users nearby. So the dataset consists of almost 3 million locations in the US and the dates on which they were logged.

To start off, Jiang and Miao simply placed a dot on a map at the location of each login. They then connected these dots to their neighbours to form triangles that end up covering the entire mainland US.

Next, they calculated the size of each triangle on the map and plotted this size distribution, which turns out to follow a power law. So there are lots of tiny triangles but only a few large ones.

Finally, they calculated the average size of the triangles and then coloured in all those that were smaller than average. The coloured areas are “natural cities”, say Jiang and Miao.
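The last two steps above (measure every triangle, then keep those smaller than average) can be sketched in a few lines of Python. This is a minimal illustration and not Jiang and Miao's code: it assumes the triangulation has already been computed elsewhere, and the function names and the toy coordinates are hypothetical.

```python
from statistics import mean


def triangle_area(a, b, c):
    """Area of a triangle given three (x, y) points, via the shoelace formula."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2.0


def natural_city_triangles(triangles):
    """Return the triangles whose area is smaller than the mean area."""
    areas = [triangle_area(*t) for t in triangles]
    threshold = mean(areas)
    return [t for t, area in zip(triangles, areas) if area < threshold]


# Hypothetical toy input: three small triangles (dense logins) and one large
# triangle (sparse logins). Real input would come from triangulating the
# login locations.
toy_triangles = [
    ((0, 0), (1, 0), (0, 1)),
    ((1, 0), (1, 1), (0, 1)),
    ((1, 0), (2, 0), (1, 1)),
    ((2, 0), (12, 0), (2, 10)),
]

small = natural_city_triangles(toy_triangles)
print(len(small), "of", len(toy_triangles), "triangles fall below the mean area")
```

Because the size distribution is heavy-tailed, a few very large triangles pull the mean up, so most triangles in densely populated areas end up below it. That is why a plain average works as a cut-off in this sketch.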

It’s easy to imagine that the resulting map of triangles is of little value. But to the evident surprise of the researchers, it produces a pretty good approximation of the cities in the US. “We know little about why the procedure works so well but the resulting patterns suggest that the natural cities effectively capture the evolution of real cities,” they say.

That’s handy because it suddenly gives city planners a way to study and compare cities on a level playing field. It allows them to see how cities evolve and change over time too. And it gives them a way to analyse how cities in different parts of the world differ.

Of course, Jiang and Miao will want to find out why this approach reveals city structures in this way. That’s still something of a puzzle but the answer itself may provide an important insight into the nature of cities (or at least into the nature of this dataset).

A few days ago, this blog wrote about how a new science of cities is emerging from the analysis of big data. This is another example; expect to see more.

Ref: http://arxiv.org/abs/1401.6756 : The Evolution of Natural Cities from the Perspective of Location-Based Social Media”

Use big data and crowdsourcing to detect nuclear proliferation, says DSB

FierceGovernmentIT: “A changing set of counter-nuclear proliferation problems requires a paradigm shift in monitoring that should include big data analytics and crowdsourcing, says a report from the Defense Science Board.

Much has changed since the Cold War when it comes to ensuring that nuclear weapons are subject to international controls, meaning that monitoring in support of treaties covering declared capabilities should be only one part of overall U.S. monitoring efforts, says the board in a January report (.pdf).

There are challenges related to covert operations, such as testing calibrated to fall below detection thresholds, and non-traditional technologies that present ambiguous threat signatures. Knowledge about how to make nuclear weapons is widespread and in the hands of actors who will give the United States or its allies limited or no access….

The report recommends using a slew of technologies including radiation sensors, but also exploitation of digital sources of information.

“Data gathered from the cyber domain establishes a rich and exploitable source for determining activities of individuals, groups and organizations needed to participate in either the procurement or development of a nuclear device,” it says.

Big data analytics could be used to take advantage of the proliferation of potential data sources including commercial satellite imaging, social media and other online sources.

The report notes that the proliferation of readily available commercial satellite imagery has created concerns about the introduction of more noise than genuine signal. “On balance, however, it is the judgment from the task force that more information from remote sensing systems, both commercial and dedicated national assets, is better than less information,” it says.

In fact, the ready availability of commercial imagery should be an impetus of governmental ability to find weak signals “even within the most cluttered and noisy environments.”

Crowdsourcing also holds potential, although the report again notes that nuclear proliferation analysis by non-governmental entities “will constrain the ability of the United States to keep its options open in dealing with potential violations.” The distinction between gathering information and making political judgments “will erode.”

An effort by Georgetown University students (reported in the Washington Post in 2011) to use open source data to analyze the network of tunnels used in China to hide its missile and nuclear arsenal provides a proof-of-concept on how crowdsourcing can be used to augment limited analytical capacity, the report says – despite debate on the students’ work, which concluded that China’s arsenal could be many times larger than conventionally accepted…

For more:

– download the DSB report, “Assessment of Nuclear Monitoring and Verification Technologies” (.pdf)

– read the WaPo article on the Georgetown University crowdsourcing effort”

From Faith-Based to Evidence-Based: The Open Data 500 and Understanding How Open Data Helps the American Economy

Beth Noveck in Forbes: “Public funds have, after all, paid for their collection, and the law says that federal government data are not protected by copyright. By the end of 2009, the US and the UK had the only two open data one-stop websites where agencies could post and citizens could find open data. Now there are over 300 such portals for government data around the world with over 1 million available datasets. This kind of Open Data — including weather, safety and public health information as well as information about government spending — can serve the country by increasing government efficiency, shedding light on regulated industries, and driving innovation and job creation.

It’s becoming clear that open data has the potential to improve people’s lives. With huge advances in data science, we can take this data and turn it into tools that help people choose a safer hospital, pick a better place to live, improve the performance of their farm or business by having better climate models, and know more about the companies with whom they are doing business. Done right, people can even contribute data back, giving everyone a better understanding, for example of nuclear contamination in post-Fukushima Japan or incidences of price gouging in America’s inner cities.

The promise of open data is limitless. (See the GovLab index for stats on open data.) But it’s important to back up our faith with real evidence of what works. Last September the GovLab began the Open Data 500 project, funded by the John S. and James L. Knight Foundation, to study the economic value of government Open Data extensively and rigorously. A recent McKinsey study pegged the global value of Open Data (including free data from sources other than government) at $3 trillion a year or more. We’re digging in and talking to those companies that use Open Data as a key part of their business model. We want to understand whether and how open data is contributing to the creation of new jobs, the development of scientific and other innovations, and adding to the economy. We also want to know what government can do better to help industries that want high quality, reliable, up-to-date information that government can supply. Of those 1 million datasets, for example, 96% are not updated on a regular basis.

The GovLab just published an initial working list of 500 American companies that we believe to be using open government data extensively. We’ve also posted in-depth profiles of 50 of them — a sample of the kind of information that will be available when the first annual Open Data 500 study is published in early 2014. We are also starting a similar study for the UK and Europe.

Even at this early stage, we are learning that Open Data is a valuable resource. As my colleague Joel Gurin, author of Open Data Now: the Secret to Hot Start-Ups, Smart Investing, Savvy Marketing and Fast Innovation, who directs the project, put it, “Open Data is a versatile and powerful economic driver in the U.S. for new and existing businesses around the country, in a variety of ways, and across many sectors. The diversity of these companies in the kinds of data they use, the way they use it, their locations, and their business models is one of the most striking things about our findings so far.” Companies are paradoxically building value-added businesses on top of public data that anyone can access for free….”

FULL article can be found here.

Protecting personal data in E-government: A cross-country study

Open data and transparency: a look back at 2013

Web-based technology continued to offer increasing numbers of people the ability to share standardised data and statistics to demand better governance and strengthen accountability. 2013 seemed to herald the moment that the open data/transparency movement entered the mainstream.

Yet for those who have long campaigned on the issue, the call was more than just a catchphrase, it was a unique opportunity. “If we do get a global drive towards open data in relation to development or anything else, that would be really transformative and it’s quite rare to see such bold statements at such an early stage of the process. I think it set the tone for a year in which transparency was front and centre of many people’s agendas,” says David Hall-Matthews, of Publish What You Fund.

This year saw high level discussions translated into commitments at the policy level. David Cameron used the UK’s presidency of the G8 to trigger international action on the three Ts (tax, trade and transparency) through the IF campaign. The pledge at Lough Erne, in Northern Ireland, reaffirmed the commitment to the Busan open data standard as well as the specific undertaking that all G8 members would implement International Aid Transparency Initiative (IATI) standards by the end of 2015.

2013 was a particularly good year for the US Millennium Challenge Corporation (MCC) which topped the aid transparency index. While at the very top MCC and UK’s DfID were examples of best practice, there was still much room for improvement. “There is a really long tail of agencies who are not really taking transparency at all, yet. This includes important donors, the whole of France and the whole of Japan who are not doing anything credible,” says Hall-Matthews.

Yet given the increasing number of emerging and ‘frontier’ markets whose growth is driven in large part by wealth derived from natural resources, 2013 saw a growing sense of urgency for transparency to be applied to revenues from oil, gas and mineral resources that may far outstrip aid. In May, the new Extractive Industries Transparency Initiative standard (EITI) was adopted, which is said to be far broader and deeper than its previous incarnation.

Several countries have done much to ensure that transparency leads to accountability in their extractive industries. In Nigeria, for example, EITI reports are playing an important role in the debate about how resources should be managed in the country. “In countries such as Nigeria they’re taking their commitment to transparency and EITI seriously, and are going beyond disclosing information but also ensuring that those findings are acted upon and lead to accountability. For example, the tax collection agency has started to collect more of the revenues that were previously missing,” says Jonas Moberg, head of the EITI International Secretariat.

But the extent to which transparency and open data can actually deliver on their revolutionary potential has also been called into question. Governments and donor agencies can release data, but if the power structures within which this data is consumed and acted upon do not shift, is there really any chance of significant social change?

The complexity of the challenge is illustrated by the case of Mexico which, in 2014, will succeed Indonesia as chair of the Open Government Partnership. At this year’s London summit, Mexico’s acting civil service minister spoke of the great strides his country has made in opening up the public procurement process, which accounts for around 10% of GDP and is a key area in which transparency and accountability can help tackle corruption.

There is, however, a certain paradox. As SOAS professor Leandro Vergara Camus, who has written extensively on peasant movements in Mexico, explains: “The NGO sector in Mexico has more of a positive view of these kinds of processes than the working class or peasant organisations. The process of transparency and accountability have gone further in urban areas than they have in rural areas.”…

With increasing numbers of organisations likely to jump on the transparency bandwagon in the coming year the greatest challenge is using it effectively and adequately addressing the underlying issues of power and politics.

Top 2013 transparency publications

Open data, transparency and international development, The North South Institute

Data for development: The new conflict resource?, Privacy International

The fix-rate: a key metric for transparency and accountability, Integrity Action

Making UK aid more open and transparent, DfID

Getting a seat at the table: Civil Society advocacy for budget transparency in “untransparent” countries, International Budget Partnership

The dates that mattered

23-24 May: New Extractive Industries Transparency Initiative standard adopted

30 May: Post 2015 high level report calling for a ‘data revolution’ is published

17-18 June: UK premier, David Cameron, campaigns for tax, trade and transparency during the G8

24 October: US Millennium Challenge Corporation tops the aid transparency index

30 October – 1 November: Open Government Partnership in London gathers civil society, governments and data experts

The Age of Democracy

Xavier Marquez at Abandoned Footnotes: “This is the age of democracy, ideologically speaking. As I noted in an earlier post, almost every state in the world mentions the word “democracy” or “democratic” in its constitutional documents today. But the public acknowledgment of the idea of democracy is not something that began just a few years ago; in fact, it goes back much further, all the way back to the nineteenth century in a surprising number of cases.

Here is a figure I’ve been wanting to make for a while that makes this point nicely (based on data graciously made available by the Comparative Constitutions Project). The figure shows all countries that have ever had some kind of identifiable constitutional document (broadly defined) that mentions the word “democracy” or “democratic” (in any context – new constitution, amendment, interim constitution, bill of rights, etc.), arranged from earliest to latest mention. Each symbol represents a “constitutional event” – a new constitution adopted, an amendment passed, a constitution suspended, etc. – and colored symbols indicate that the text associated with the constitutional event in question mentions the word “democracy” or “democratic”…

The earliest mentions of the word “democracy” or “democratic” in a constitutional document occurred in Switzerland and France in 1848, as far as I can tell.[1] Participatory Switzerland and revolutionary France look like obvious candidates for being the first countries to embrace the “democratic” self-description; yet the next set of countries to embrace this self-description (until the outbreak of WWI) might seem more surprising: they are all Latin American or Caribbean (Haiti), followed by countries in Eastern Europe (various bits and pieces of the Austro-Hungarian empire), Southern Europe (Portugal, Spain), Russia, and Cuba. Indeed, most “core” countries in the global system did not mention democracy in their constitutions until much later, if at all, despite many of them having long constitutional histories; even French constitutions after the fall of the Second Republic in 1851 did not mention “democracy” until after WWII. In other words, the idea of democracy as a value to be publicly affirmed seems to have caught on first not in the metropolis but in the periphery. Democracy is the post-imperial and post-revolutionary public value par excellence, asserted after national liberation (as in most of the countries that became independent after WWII) or revolutions against hated monarchs (e.g., Egypt 1956, Iran 1979, both of them the first mentions of democracy in these countries but not their first constitutions).

Data Mining Reveals the Secret to Getting Good Answers

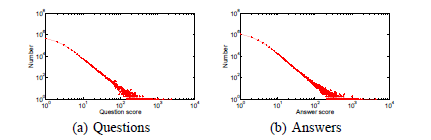

arXiv: “If you want a good answer, ask a decent question. That’s the startling conclusion to a study of online Q&As.

If you spend any time programming, you’ll probably have come across the question and answer site Stack Overflow. The site allows anybody to post a question related to programming and receive answers from the community. And it has been hugely successful. According to Alexa, the site is the 3rd most popular Q&A site in the world and 79th most popular website overall.

But this success has naturally led to a problem–the sheer number of questions and answers the site has to deal with. To help filter this information, users can rank both the questions and the answers, gaining a reputation for themselves as they contribute.

Nevertheless, Stack Overflow still struggles to weed out off-topic and irrelevant questions and answers. This requires considerable input from experienced moderators. So an interesting question is whether it is possible to automate the process of weeding out the less useful questions and answers as they are posted.

Today we get an answer of sorts thanks to the work of Yuan Yao at the State Key Laboratory for Novel Software Technology in China and a team of buddies who say they’ve developed an algorithm that does the job.

And they say their work reveals an interesting insight: if you want good answers, ask a decent question. That may sound like a truism, but these guys point out that there has been no evidence to support this insight, until now.

…But Yuan and co dug a little deeper. They looked at the correlation between well received questions and answers. And they discovered that these are strongly correlated.
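As a rough illustration of what "strongly correlated" means here, the Python sketch below computes a Pearson correlation coefficient between question scores and answer scores. It is only an assumption about how such a check could be made: the excerpt does not say which statistic Yuan and colleagues used, and the scores are invented toy values, not Stack Overflow data.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


# Invented toy scores: one entry per question, paired with the score of
# its best answer.
question_scores = [1, 2, 3, 4, 5]
best_answer_scores = [1, 3, 2, 5, 4]

r = pearson(question_scores, best_answer_scores)
print(f"correlation: {r:.2f}")
```

A value near 1 would mean that well received questions tend to attract well received answers, a value near 0 would mean no linear relationship.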

A number of factors turn out to be important. These include the reputation of the person asking the question or answering it, the number of previous questions or answers they have posted, the popularity of their input in the recent past along with measurements like the length of the question and its title.

Put all this into a number cruncher and the system is able to predict the quality score of the question and its expected answers. That allows it to find the best questions and answers and indirectly the worst ones.

There are limitations to this approach, of course…In the meantime, users of Q&A sites can learn a significant lesson from this work. If you want good answers, first formulate a good question. That’s something that can take time and experience.

Perhaps the most interesting immediate application of this new work might be as a teaching tool to help with this learning process and to boost the quality of questions and answers in general.

See also: http://arxiv.org/abs/1311.6876: Want a Good Answer? Ask a Good Question First!”

Owning the city: New media and citizen engagement in urban design

Paper by Michiel de Lange and Martijn de Waal in First Monday : “In today’s cities our everyday lives are shaped by digital media technologies such as smart cards, surveillance cameras, quasi–intelligent systems, smartphones, social media, location–based services, wireless networks, and so on. These technologies are inextricably bound up with the city’s material form, social patterns, and mental experiences. As a consequence, the city has become a hybrid of the physical and the digital. This is perhaps most evident in the global north, although in emerging countries like Indonesia and China, mobile phones, wireless networks and CCTV cameras have also become a dominant feature of urban life (Castells, et al., 2004; Qiu, 2007, 2009; de Lange, 2010). What does this mean for urban life and culture? And what are the implications for urban design, a discipline that has hitherto largely been concerned with the city’s built form?

In this contribution we do three things. First we take a closer look at the notion of ‘smart cities’ often invoked in policy and design discourses about the role of new media in the city. In this vision, the city is mainly understood as a series of infrastructures that must be managed as efficiently as possible. However, critics note that these technological imaginaries of a personalized, efficient and friction–free urbanism ignore some of the basic tenets of what it means to live in cities (Crang and Graham, 2007).

Second, we want to fertilize the debates and controversies about smart cities by forwarding the notion of ‘ownership’ as a lens to zoom in on what we believe is the key question largely ignored in smart city visions: how to engage and empower citizens to act on complex collective urban problems? As is explained in more detail below, we use ‘ownership’ not to refer to an exclusive proprietorship but to an inclusive form of engagement, responsibility and stewardship. At stake is the issue how digital technologies shape the ways in which people in cities manage coexistence with strangers who are different and who often have conflicting interests, and at the same time form new collectives or publics around shared issues of concern (see, for instance, Jacobs, 1992; Graham and Marvin, 2001; Latour, 2005). ‘Ownership’ teases out a number of shifts that take place in the urban public domain characterized by tensions between individuals and collectives, between differences and similarities, and between conflict and collaboration.

Third, we discuss a number of ways in which the rise of urban media technologies affects the city’s built form. Much has been said and written about changing spatial patterns and social behaviors in the media city. Yet as the editors of this special issue note, less attention has been paid to the question how urban new media shape the built form. The notion of ownership allows us to figure the connection between technology and the city as more intricate than direct links of causality or correlation. Therefore, ownership in our view provides a starting point for urban design professionals and citizens to reconsider their own role in city making.

Questions about the role of digital media technologies in shaping the social fabric and built form of urban life are all the more urgent in the context of challenges posed by rapid urbanization, a worldwide financial crisis that hits particularly hard on the architectural sector, socio–cultural shifts in the relationship between professional and amateur, the status of expert knowledge, societies that face increasingly complex ‘wicked’ problems, and governments retreating from public services. When grounds are shifting, urban design professionals as well as citizens need to reconsider their own role in city making.”

Where in the World are Young People Using the Internet?

Georgia Tech: “According to a common myth, today’s young people are all glued to the Internet. But in fact, only 30 percent of the world’s youth population between the ages of 15 and 24 years old has been active online for at least five years. In South Korea, 99.6 percent of young people are active, the highest percentage in the world. The least? The Asian island of Timor-Leste with less than 1 percent.

Digital natives as a percentage of total population, 2012 (Courtesy: ITU)

Those are among the many findings in a study from the Georgia Institute of Technology and International Telecommunication Union (ITU). The study is the first attempt to measure, by country, the world’s “digital natives.” The term is typically used to categorize young people who were born around the time the personal computer was introduced and who have spent their lives connected with technology.

Nearly 96 percent of American millennials are digital natives. That figure is behind Japan (99.5 percent) and several European countries, including Finland, Denmark and the Netherlands.

But the percentage that Georgia Tech Associate Professor Michael Best thinks is the most important is the number of digital natives as compared to a country’s total population….

The countries with the highest proportion of digital natives among their population are mostly rich nations, which have high levels of overall Internet penetration. Iceland is at the top of the list with 13.9 percent. The United States is sixth (13.1 percent). A big surprise is Malaysia, a middle-income country with one of the highest proportions of digital natives (ranked 4th at 13.4 percent). Malaysia has a strong history of investing in educational technology.

The countries with the smallest estimated proportion of digital natives are Timor-Leste, Myanmar and Sierra Leone. The bottom 10 consists entirely of African or Asian nations, many of which are suffering from conflict and/or have very low Internet availability.”