Book by Majid Yar on Virtual Utopias and Dystopias: “Contemporary culture offers contradictory views of the internet and new media technologies, painting them in extremes of optimistic enthusiasm and pessimistic foreboding. While some view them as a repository of hopes for democracy, freedom and self-realisation, others consider these developments as sources of alienation, dehumanisation and danger. This book explores such representations, and situates them within the traditions of utopian and dystopian thought that have shaped the Western cultural imaginary. Ranging from ancient poetry to post-humanism, and classical sociology to science fiction, it uncovers the roots of our cultural responses to the internet, which are centred upon a profoundly ambivalent reaction to technological modernity. Majid Yar argues that it is only by better understanding our society’s reactions to technological innovation that we can develop a balanced and considered response to the changes and challenges that the internet brings in its wake.”

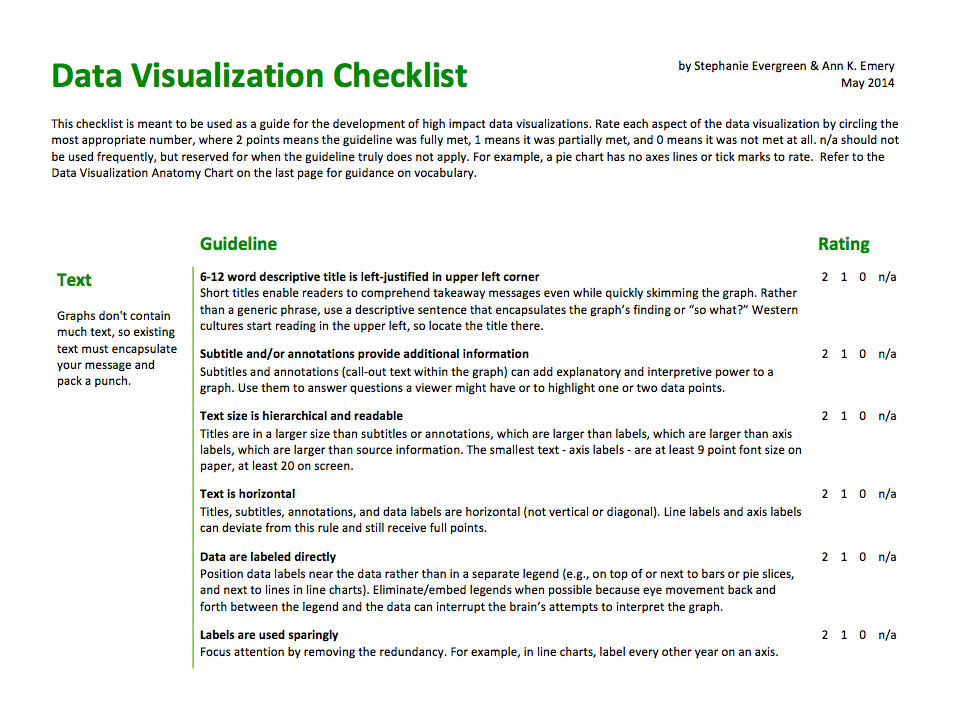

Introducing the Data Visualization Checklist

Stephanie Evergreen: “This post has been a long time coming. Ann Emery and I knew some time ago that evaluators and social scientists had a thirst for better graphs, a clear understanding of why better graphs were necessary, but they lacked efficient guidance on how, exactly, to make a graph better. Introducing the Data Visualization Checklist.

Download this checklist and refer to it when you are constructing your next data visualization so that what you produce rocks worlds. Use the checklist to gauge the effectiveness of graphs you’ve already made and adjust places where you don’t score full points. Make copies and slip them into your staff mailboxes.

What’s in the Checklist?

We compiled a set of best practices based on extensive research, tested against the practical day-to-day realities of evaluation practice and the pragmatic needs of our stakeholders. This guidance may not apply to other fields. In fact, we pilot-tested the checklist with a dozen data visualists and found that those who were not in a social science field found more areas of disagreement. That’s ok. Their dissemination purposes are different from ours. Their audiences are not our audiences. You, evaluator, will find clear guidelines on how to make the best use of a graph’s text, color, arrangement, and overall design. We also included a data visualization anatomy chart on the last page of the checklist to illustrate key concepts and point out terminology…”

The Secret Science of Retweets

How come? What makes somebody retweet information from a stranger? That’s the question addressed today by Kyumin Lee from Utah State University in Logan and a few pals from IBM’s Almaden research center in San Jose….by studying the characteristics of Twitter users, it is possible to identify strangers who are more likely to pass on your message than others. And in doing this, the researchers say they’ve been able to improve the retweet rate of messages sent to strangers by up to 680 percent.

So how did they do it? The new technique is based on the idea that some people are more likely to tweet than others, particularly on certain topics and at certain times of the day. So the trick is to find these individuals and target them when they are likely to be most effective.

So the approach was straightforward. The idea is to study the individuals on Twitter, looking at their profiles and their past tweeting behavior, looking for clues that they might be more likely to retweet certain types of information. Having found these individuals, send your tweets to them.

That’s the theory. In practice, it’s a little more involved. Lee and co wanted to test people’s response to two types of information: local news (in San Francisco) and tweets about bird flu, a significant issue at the time of their research. They then created several Twitter accounts with a few followers, specifically to broadcast information of this kind.

Next, they selected people to receive their tweets. For the local news broadcasts, they searched for Twitter users geolocated in the Bay area, finding over 34,000 of them and choosing 1,900 at random.

They then sent a single message to each user of the format:

“@ SFtargetuser “A man was killed and three others were wounded in a shooting … http://bit.ly/KOl2sC” Plz RT this safety news”

So the tweet included the user’s name, a short headline, a link to the story and a request to retweet.

Of these 1,900 people, 52 retweeted the message they received. That’s 2.8 percent.

For the bird flu information, Lee and co hunted for people who had already tweeted about bird flu, finding 13,000 of them and choosing 1,900 at random. Of these, 155 retweeted the message they received, a retweet rate of 8.4 percent.

But Lee and co found a way to significantly improve these retweet rates. They went back to the original lists of Twitter users and collected publicly available information about each of them, such as their personal profile, the number of followers, the people they followed, their 200 most recent tweets and whether they retweeted the message they had received.

Next, the team used a machine learning algorithm to search for correlations in this data that might predict whether somebody was more likely to retweet. For example, they looked at whether people with older accounts were more likely to retweet or how the ratio of friends to followers influenced the retweet likelihood, or even how the types of negative or positive words they used in previous tweets showed any link. They also looked at the time of day that people were most active in tweeting.

The result was a machine learning algorithm capable of picking users who were most likely to retweet on a particular topic.

And the results show that it is surprisingly effective. When the team sent local information tweets to individuals identified by the algorithm, 13.3 percent retweeted it, compared to just 2.6 percent of people chosen at random.

And they got even better results when they timed the request to match the periods when people had been most active in the past. In that case, the retweet rate rose to 19.3 percent. That’s an improvement of over 600 percent.

Similarly, the rate for bird flu information rose from 8.3 percent for users chosen at random to 19.7 percent for users chosen by the algorithm.
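As a quick check on the percentages quoted above, the relative improvement can be worked out from the reported retweet rates. This is only an arithmetic sketch; the function name `relative_improvement` is an illustrative choice and not something taken from the paper.

```python
def relative_improvement(baseline_pct: float, new_pct: float) -> float:
    """Percentage increase of new_pct over baseline_pct."""
    return (new_pct - baseline_pct) / baseline_pct * 100.0


# Rates quoted in the article (percent of recipients who retweeted).
local_news_gain = relative_improvement(2.6, 19.3)   # random vs. algorithm + timing
bird_flu_gain = relative_improvement(8.3, 19.7)     # random vs. algorithm

print(f"local news: +{local_news_gain:.0f}%")
print(f"bird flu:   +{bird_flu_gain:.0f}%")
```

With the local news figures this gives an increase of roughly 640 percent, which is consistent with the "over 600 percent" claim. The bird flu rate more than doubles.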

That’s a significant result that marketers, politicians and news organizations will be eyeing with envy.

An interesting question is how they can make this technique more generally applicable. It raises the prospect of an app that allows anybody to enter a topic of interest and which then creates a list of people most likely to retweet on that topic in the next few hours.

Lee and co do not mention any plans of this kind. But if they don’t exploit it, then there will surely be others who will.

Ref: arxiv.org/abs/1405.3750 : Who Will Retweet This? Automatically Identifying and Engaging Strangers on Twitter to Spread Information”

#BringBackOurGirls: Can Hashtag Activism Spur Social Change?

Nancy Ngo at TechChange: “In our modern times of media cycles fighting for our short attention spans, it is easy to ride the momentum of a highly visible campaign that can quickly fizzle out once another competing story emerges. Since the kidnappings of approximately 300 Nigerian girls by militant Islamist group Boko Haram last month, the international community has embraced the hashtag, “#BringBackOurGirls”, in a very vocal and visible social media campaign demanding action to rescue the Chibok girls. But one month since the mass kidnapping without the rescue of the girls, do we need to take a different approach? Will #BringBackOurGirls be just another campaign we forget about once the next celebrity scandal becomes breaking news?

#BringBackOurGirls goes global starting in Nigeria

Most of the #BringBackOurGirls campaign activity has been highly visible on Twitter, Facebook, and international media outlets. In this fascinating Twitter heat map created using the tool, CartoDB, featured in TIME Magazine, we can see a time-lapsed digital map of how the hashtag, “#BringBackOurGirls” spread globally, starting organically from within Nigeria in mid April.

The #BringBackOurGirls hashtag has been embraced widely by many public figures and has garnered wide support across the world. Michelle Obama, David Cameron, and Malala Yousafzai have posted images with the hashtag, along with celebrities such as Ellen DeGeneres, Angelina Jolie, and Dwayne Johnson. To date, nearly 1 million people have signed the Change.org petition. Countries including the USA, UK, China, and Israel have pledged to join the rescue efforts, and other human rights campaigns have joined the #BringBackOurGirls Twitter momentum, as seen on this Hashtagify map.

Is #BringBackOurGirls repeating the mistakes of #KONY2012?

A great example of a past campaign where this happened was the KONY2012 campaign, which brought some albeit short-lived urgency to addressing the child soldiers recruited by Joseph Kony, leader of the Lord’s Resistance Army (LRA). Michael Poffenberger, who worked on that campaign, will join us as a guest expert in TC110: Social Media for Social Change online course in June 2013 and compare it to the current #BringBackOurGirls campaign. Many have drawn parallels between the two campaigns and warned of the false optimism that hyped social media messages can bring when context is not fully considered and understood.

According to Lauren Wolfe of Foreign Policy magazine, “Understanding what has happened to the Nigerian girls and how to rescue them means beginning to face what has happened to hundreds of thousands, if not millions, of girls over years in global armed conflict.” To some critics, this hashtag trivializes the weaknesses of Nigerian democracy that have been exposed. Critics of using social media in advocacy campaigns have used the term “slacktivism” to describe the passive, minimal effort needed to participate in these movements. Others have cited such media waves being exploited for individual gain, as opposed to genuinely benefiting the girls. Florida State University Political Science professor, Will H. Moore, argues that this hashtag activism is not only hurting the larger cause of rescuing the kidnapped girls, but actually helping Boko Haram. Jumoke Balogun, Co-Founder of CompareAfrique, also highlights the limits of the #BringBackOurGirls hashtag impact.

Hashtag activism, alone, is not enough

With all this social media activity and international press, what actual progress has been made in rescuing the kidnapped girls? If the objective is raising awareness of the issue, yes, the hashtag has been successful. If the objective is to rescue the girls, we still have a long way to go, even if the hashtag campaign has been part of a multi-pronged approach to galvanize resources into action.

The bottom line: social media can be a powerful tool to bring visibility and awareness to a cause, but a hashtag alone is not enough to bring about social change. There are a myriad of resources that must be coordinated to effectively implement this rescue mission, which will only become more difficult as more time passes. However, prioritizing and shining a sustained light on the problem, instead of getting distracted by competing media cycles on celebrities getting into petty fights, is the first step toward a solution…”

The Emerging Science of Superspreaders (And How to Tell If You're One Of Them)

Emerging Technology From the arXiv: “Who are the most influential spreaders of information on a network? That’s a question that marketers, bloggers, news services and even governments would like answered. Not least because the answer could provide ways to promote products quickly, to boost the popularity of political parties above their rivals and to seed the rapid spread of news and opinions.

So it’s not surprising that network theorists have spent some time thinking about how best to identify these people and to check how the information they receive might spread around a network. Indeed, they’ve found a number of measures that spot so-called superspreaders, people who spread information, ideas or even disease more efficiently than anybody else.

But there’s a problem. Social networks are so complex that network scientists have never been able to test their ideas in the real world—it has always been too difficult to reconstruct the exact structure of Twitter or Facebook networks, for example. Instead, they’ve created models that mimic real networks in certain ways and tested their ideas on these instead.

But there is growing evidence that information does not spread through real networks in the same way as it does through these idealised ones. People tend to pass on information only when they are interested in a topic and when they are active, factors that are hard to take into account in a purely topological model of a network.

So the question of how to find the superspreaders remains open. That looks set to change thanks to the work of Sen Pei at Beihang University in Beijing and a few pals who have performed the first study of superspreaders on real networks.

These guys have studied the way information flows around various networks ranging from the LiveJournal blogging network to the network of scientific publishing at the American Physical Society, as well as on subsets of the Twitter and Facebook networks. And they’ve discovered the key indicator that identifies superspreaders in these networks.

In the past, network scientists have developed a number of mathematical tests to measure the influence that individuals have on the spread of information through a network. For example, one measure is simply the number of connections a person has to other people in the network, a property known as their degree. The thinking is that the most highly connected people are the best at spreading information.

Another measure uses the famous PageRank algorithm that Google developed for ranking webpages. This works by ranking somebody more highly if they are connected to other highly ranked people.
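To make the "connected to other highly ranked people" idea concrete, here is a minimal power-iteration sketch of PageRank on a toy directed graph. It is an illustration only, not the code used in the study: the damping factor of 0.85, the fixed iteration count, and the node names are all assumptions chosen for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate PageRank by power iteration.

    links maps each node to the list of nodes it points to.
    """
    nodes = set(links) | {v for targets in links.values() for v in targets}
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Rank held by nodes with no outgoing links is shared evenly.
        dangling = sum(rank[u] for u in nodes if not links.get(u))
        new_rank = {
            node: (1 - damping) / n + damping * dangling / n for node in nodes
        }
        # Every node passes its rank on in equal parts to the nodes it links to.
        for u, targets in links.items():
            for v in targets:
                new_rank[v] += damping * rank[u] / len(targets)
        rank = new_rank
    return rank


# Hypothetical toy network: ann and bob point to cat, cat points to dan.
toy_links = {"ann": ["cat"], "bob": ["cat"], "cat": ["dan"], "dan": []}
ranks = pagerank(toy_links)
for name in sorted(ranks, key=ranks.get, reverse=True):
    print(name, round(ranks[name], 3))
```

In this toy graph `dan` has only one incoming link, yet ends up ranked above `cat`, because that single link comes from the node everyone else points to.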

Then there is ‘betweenness centrality’, a measure of how many of the shortest paths across a network pass through a specific individual. The idea is that these people are more able to inject information into the network.

And finally there is a property of nodes in a network known as their k-core. This is determined by iteratively pruning the peripheries of a network to see what is left. The k-core is the step at which that node or person is pruned from the network. Obviously, the most highly connected survive this process the longest and have the highest k-core score.
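The pruning procedure can be sketched in a few lines. The version below is a simple, unoptimised illustration on an undirected toy graph, assuming the usual definition in which a node's k-core score is the pruning level k at which it is removed. The function name and the example network are hypothetical.

```python
def k_core_index(neighbours):
    """Return the k-core score of every node in an undirected graph.

    neighbours maps each node to the set of nodes it is connected to
    (every edge must appear in both directions).
    """
    remaining = {node: set(adj) for node, adj in neighbours.items()}
    core = {}
    k = 0
    while remaining:
        # Nodes whose current degree is at most k sit on the periphery.
        prunable = [n for n, adj in remaining.items() if len(adj) <= k]
        if not prunable:
            k += 1  # nothing left to prune at this level, move inwards
            continue
        for n in prunable:
            core[n] = k
            for m in remaining.pop(n):
                remaining[m].discard(n)
    return core


# Toy network: a triangle (a, b, c) with one extra node d hanging off a.
toy_graph = {
    "a": {"b", "c", "d"},
    "b": {"a", "c"},
    "c": {"a", "b"},
    "d": {"a"},
}
scores = k_core_index(toy_graph)
print(scores)
```

Here `d` is pruned first and gets a score of 1, while the three nodes of the triangle survive until level 2. Note that `a` has the highest degree but the same k-core score as `b` and `c`, which shows how the two measures can disagree.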

The question that Sen and co set out to answer was which of these measures best picked out superspreaders of information in real networks.

They began with LiveJournal, a network of blogs in which individuals maintain lists of friends that represent social ties to other LiveJournal users. This network allows people to repost information from other blogs and to use a reference that links back to the original post. This allows Sen and co to recreate not only the network of social links between LiveJournal users but also the way in which information is spread between them.

Sen and co collected all of the blog posts from February 2010 to November 2011, a total of more than 56 million posts. Of these, some 600,000 contain links to other posts published by LiveJournal users.

The data reveals two important properties of information diffusion. First, only some 250,000 users are actively involved in spreading information. That’s a small fraction of the total.

More significantly, they found that information did not always diffuse across the social network. They found that information could spread between two LiveJournal users even though they have no social connection.

That’s probably because they find this information outside of the LiveJournal ecosystem, perhaps through web searches or via other networks. “Only 31.93% of the spreading posts can be attributed to the observable social links,” they say.

That’s in stark contrast to the assumptions behind many social network models. These simulate the way information flows by assuming that it travels directly through the network from one person to another, like a disease spread by physical contact.

The work of Sen and co suggests that influences outside the network are crucial too. In practice, information often spreads via several seemingly independent sources within the network at the same time. This has important implications for the way superspreaders can be spotted.

Sen and co say that a person’s degree, the number of other people he or she is connected to, is not as good a predictor of information diffusion as theorists have thought. “We find that the degree of the user is not a reliable predictor of influence in all circumstances,” they say.

What’s more, the PageRank algorithm is often ineffective in this kind of network as well. “Contrary to common belief, although PageRank is effective in ranking web pages, there are many situations where it fails to locate superspreaders of information in reality,” they say….

Ref: arxiv.org/abs/1405.1790 : Searching For Superspreaders Of Information In Real-World Social Media”

New crowdsourcing site like ‘Yelp’ for philanthropy

Vanessa Small in the Washington Post: “Billionaire investor Warren Buffett once said that there is no market test for philanthropy. Foundations with billions in assets often hand out giant grants to charity without critique. One watchdog group wants to change that.

The National Committee for Responsive Philanthropy has created a new Web site that posts public feedback about a foundation’s giving. Think Yelp for the philanthropy sector.

Along with public critiques, the new Web site, Philamplify.org, uploads a comprehensive assessment of a foundation conducted by researchers at the National Committee for Responsive Philanthropy.

The assessment includes a review of the foundation’s goals, strategies, partnerships with grantees, transparency, diversity in its board and how any investments support the mission.

The site also posts recommendations on what would make the foundation more effective in the community. The public can agree or disagree with each recommendation and then provide feedback about the grantmaker’s performance.

People who post to the site can remain anonymous.

NCRP officials hope the site will stir debate about the giving practices of foundations.

“Foundation leaders rarely get honest feedback because no one wants to get on the wrong side of a foundation,” said Lisa Ranghelli, a director at NCRP. “There’s so much we need to do as a society that we just want these philanthropic resources to be used as powerfully as possible and for everyone to feel like they have a voice in how philanthropy operates.”

With nonprofit rating sites such as Guidestar and Charity Navigator, Philamplify is just one more move to create more transparency in the nonprofit sector. But the site might be one of the first to force transparency and public commentary exclusively about the organizations that give grants.

Foundation leaders are open to the site, but say that some grantmakers already use various evaluation methods to improve their strategies.

Groups such as Grantmakers for Effective Organizations and the Center for Effective Philanthropy provide best practices for foundation giving.

The Council on Foundations, an Arlington-based membership organization of foundation groups, offers a list of tools and ideas for foundations to make their giving more effective.

“We will be paying close attention to Philamplify and new developments related to it as the project unfolds,” said Peter Panepento, senior vice president of community and knowledge at the Council on Foundations.

Currently there are three foundations up for review on the Web site: the William Penn Foundation in Philadelphia, which focuses on improving the Greater Philadelphia community; the Robert W. Woodruff Foundation in Atlanta, which gives grants in science and education; and the Lumina Foundation for Education in Indianapolis, which focuses on access to higher learning….”

Officials say Philamplify will focus on the top 100 largest foundations to start. Large foundations would include groups such as the Bill and Melinda Gates Foundation, the Robert Wood Johnson Foundation and Silicon Valley Community Foundation, and the foundations of companies such as Wal-Mart, Wells Fargo, Johnson & Johnson and GlaxoSmithKline.

Although there are concerns about the site’s ability to keep comments objective, grantees hope it will start a dialogue that has been absent in philanthropy.

How Britain’s Getting Public Policy Down to a Science

in Governing: “Britain has a bold yet simple plan to do something few U.S. governments do: test the effectiveness of multiple policies before rolling them out. But are American lawmakers willing to listen to facts more than money or politics?

In medicine they do clinical trials to determine whether a new drug works. In business they use focus groups to help with product development. In Hollywood they field test various endings for movies in order to pick the one audiences like best. In the world of public policy? Well, to hear members of the United Kingdom’s Behavioural Insights Team (BIT) characterize it, those making laws and policies in the public sector tend to operate on some well-meaning mix of whim, hunch and dice roll, which all too often leads to expensive and ineffective (if not downright harmful) policy decisions.

….One of the prime BIT examples for why facts and not intuition ought to drive policy hails from the U.S. The much-vaunted “Scared Straight” program that swept the U.S. in the 1990s involved shepherding at-risk youth into maximum security prisons. There, they would be confronted by inmates who, presumably, would do the scaring while the visiting juveniles would do the straightening out. Scared Straight seemed like a good idea — let at-risk youth see up close and personal what was in store for them if they continued their wayward ways. Initially the results reported seemed not just good, but great. Programs were reporting “success rates” as high as 94 percent, which inspired other countries, including the U.K., to adopt Scared Straight-like programs.

The problem was that none of the program evaluations included a control group — a group of kids in similar circumstances with similar backgrounds who didn’t go through a Scared Straight program. There was no way to see how they would fare absent the experience. Eventually, a more scientific analysis of seven U.S. Scared Straight programs was conducted. Half of the at-risk youth in the study were left to their own devices and half were put through the program. This led to an alarming discovery: Kids who went through Scared Straight were more likely to offend than kids who skipped it — or, more precisely, who were spared it. The BIT concluded that “the costs associated with the programme (largely related to the increase in reoffending rates) were over 30 times higher than the benefits, meaning that ‘Scared Straight’ programmes cost the taxpayer a significant amount of money and actively increased crime.”

It was witnessing such random acts of policymaking that in 2010 inspired a small group of political and social scientists to set up the Behavioural Insights Team. Originally a small “skunk works” tucked away in the U.K. Treasury Department, the team gained traction under Prime Minister David Cameron, who took office evincing a keen interest in both “nonregulatory solutions to policy problems” and in spending public money efficiently, Service says. By way of example, he points to a business support program in the U.K. that would give small and medium-sized businesses up to £3,000 to subsidize advice from professionals. “But there was no proven link between receiving that money and improving business. We thought, ‘Wouldn’t it be better if you could first test the efficacy of some million-pound program or other, rather than just roll it out?’”

The BIT was set up as something of a policy research lab that would scientifically test multiple approaches to a public policy problem on a limited, controlled basis through “randomized controlled trials.” That is, it would look at multiple ways to skin the cat before writing the final cat-skinning manual. By comparing the results of various approaches — efforts to boost tax compliance, say, or to move people from welfare to work — policymakers could use the results of the trials to actually hone in on the most effective practices before full-scale rollout.

The various program and policy options that are field tested by the BIT aren’t pie-in-the-sky surmises, which is where the “behavioural” piece of the equation comes in. Before settling on what options to test, the BIT takes into account basic human behavior — what motivates us and what turns us off — and then develops several approaches to a policy problem based on actual social science and psychology.

The approach seems to work. Take, for example, the issue of recruiting organ donors. It can be a touchy topic, suggesting one’s own mortality while also conjuring up unsettling images of getting carved up and parceled out by surgeons. It’s no wonder, then, that while nine out of 10 people in England profess to support organ donations, fewer than one in three are officially registered as donors. To increase the U.K.’s ratio, the BIT decided to play around with the standard recruitment message posted on a high-traffic gov.uk website that encourages people to sign up with the national Organ Donor Register (see “‘Please Help Others,’” page 18). Seven different messages that varied in approach and tone were tested, and at the end of the trial, one message emerged clearly as the most effective — so effective, in fact, that the BIT concluded that “if the best-performing message were to be used over the whole year, it would lead to approximately 96,000 extra registrations completed.”

According to the BIT there are nine key steps to a defensible randomized controlled trial, the first and second — and the two most obvious — being that there must be at least two policy interventions to compare and that the outcome the policies are meant to influence must be clear. But the “randomized” factor in the equation is critical, and it’s not necessarily easy to achieve.

In BIT-speak, “randomization units” can range from individuals (randomly chosen clients) entering the same welfare office but experiencing different interventions, to different groups of clientele or even different institutions like schools or congregate care facilities. The important point is to be sure that the groups or institutions chosen for comparison are operating in circumstances and with clientele similar enough so that researchers can confidently say that any differences in outcomes are due to different policy interventions and not other socioeconomic or cultural exigencies. There are also minimum sampling sizes that ensure legitimacy — essentially, the more the merrier.
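The core of the "randomized" step can be illustrated with a short sketch that assigns randomization units to interventions by shuffling them and dealing them out in turn, so that the groups end up the same size. This is a generic illustration rather than the BIT's own procedure. The function name, the unit labels, the intervention names and the fixed seed are all assumptions made for the example.

```python
import random
from collections import Counter


def assign_interventions(units, interventions, seed=0):
    """Randomly assign each unit to one intervention, keeping groups balanced."""
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced
    shuffled = list(units)
    rng.shuffle(shuffled)
    # Deal the shuffled units out to the interventions in round-robin order.
    return {
        unit: interventions[i % len(interventions)]
        for i, unit in enumerate(shuffled)
    }


# Hypothetical trial: 12 clients, three versions of a reminder message.
clients = [f"client-{i}" for i in range(12)]
messages = ["message A", "message B", "message C"]
assignment = assign_interventions(clients, messages)
group_sizes = Counter(assignment.values())
print(group_sizes)
```

Because the order is shuffled before the units are dealt out, nobody chooses which client receives which message, which is what allows differences in outcomes to be attributed to the intervention rather than to how the groups were picked.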

As a matter of popular political culture, the BIT’s approach is known as “nudge theory,” a strand of behavioral economics based on the notion that the economic decisions that human beings make are just that — human — and that by tuning into what motivates and appeals to people we can much better understand why those economic decisions are made. In market economics, of course, nudge theory helps businesses tune into customer motivation. In public policy, nudge theory involves figuring out ways to motivate people to do what’s best for themselves, their families, their neighborhoods and society.

When the BIT started playing around with ways to improve tax compliance, for example, the group discovered a range of strategies to do that, from the very obvious approach — make compliance easy — to the more behaviorally complex. The idea was to key in on the sorts of messages to send to taxpayers that will resonate and improve voluntary compliance. The results can be impressive. “If you just tell taxpayers that the majority of folks in their area pay their taxes on time [versus sending out dunning letters],” says the BIT’s Service, “that adds 3 percent more people who pay, bringing in millions of pounds.” Another randomized controlled trial showed that in pestering citizens to pay various fines, personal text messages were more effective than letters.

There has been pushback on using randomized controlled trials to develop policy. Some see it as a nefarious attempt at mind control on the part of government. “Nudge” to some seems to mean “manipulate.” Service bridles at the criticism. “We’re sometimes referred to as ‘the Nudge Team,’ but we’re the ‘Behavioural Insights Team’ because we’re interested in human behavior, not mind control.”

The essence of the philosophy, Service adds, is “leading people to do the right thing.” For those interested in launching BIT-like efforts without engendering immediate ideological resistance, he suggests focusing first on “non-headline-grabbing” policy areas such as tax collection or organ donation that can be launched through administrative fiat.”

United States federal government use of crowdsourcing grows six-fold since 2011

at E Pluribus Unum: “Citizensourcing and open innovation can work in the public sector, just as crowdsourcing can in the private sector. Around the world, the use of prizes to spur innovation has been booming for years. The United States of America has been significantly scaling up its use of prizes and challenges to solve grand national challenges since January 2011, when President Obama signed an updated version of the America COMPETES Act into law.

According to the third congressionally mandated report released by the Obama administration today (PDF/Text), the number of prizes and challenges conducted under the America COMPETES Act has increased by 50% since 2012, 85% since 2012, and nearly six-fold overall since 2011. 25 different federal agencies offered prizes under COMPETES in fiscal year 2013, with 87 prize competitions in total. The size of the prize purses has also grown, with 11 challenges over $100,000 in 2013. Nearly half of the prizes conducted in FY 2013 were focused on software, including applications, data visualization tools, and predictive algorithms. Challenge.gov, the award-winning online platform for crowdsourcing national challenges, now has tens of thousands of users who have participated in more than 300 public-sector prize competitions. Beyond the growth in prize numbers and amounts, the Obama administration highlighted 4 trends in public-sector prize competitions:

- New models for public engagement and community building during competitions

- Growth in software and information technology challenges, with nearly 50% of the total prizes in this category

- More emphasis on sustainability and “creating a post-competition path to success”

- Increased focus on identifying novel approaches to solving problems

The growth of open innovation in and by the public sector was directly enabled by Congress and the White House, working together for the common good. Congress reauthorized COMPETES in 2010 with an amendment to Section 105 of the act that added a Section 24 on “Prize Competitions,” providing all agencies with the authority to conduct prizes and challenges that only NASA and DARPA had previously enjoyed. The White House Office of Science and Technology Policy (OSTP) has been guiding its implementation and providing guidance on the use of challenges and prizes to promote open government.

“This progress is due to important steps that the Obama Administration has taken to make prizes a standard tool in every agency’s toolbox,” wrote Cristin Dorgelo, assistant director for grand challenges in OSTP, in a WhiteHouse.gov blog post on engaging citizen solvers with prizes:

In his September 2009 Strategy for American Innovation, President Obama called on all Federal agencies to increase their use of prizes to address some of our Nation’s most pressing challenges. Those efforts have expanded since the signing of the America COMPETES Reauthorization Act of 2010, which provided all agencies with expanded authority to pursue ambitious prizes with robust incentives.

To support these ongoing efforts, OSTP and the General Services Administration have trained over 1,200 agency staff through workshops, online resources, and an active community of practice. And NASA’s Center of Excellence for Collaborative Innovation (COECI) provides a full suite of prize implementation services, allowing agencies to experiment with these new methods before standing up their own capabilities.

Sun Microsystems co-founder Bill Joy famously once said that “No matter who you are, most of the smartest people work for someone else.” This rings true, in and outside of government. The idea of governments using prizes like this to inspire technological innovation, however, is not reliant on Web services and social media, born from the fertile mind of a Silicon Valley entrepreneur. As the introduction to the third White House prize report notes:

“One of the most famous scientific achievements in nautical history was spurred by a grand challenge issued in the 18th Century. The issue of safe, long distance sea travel in the Age of Sail was of such great importance that the British government offered a cash award of £20,000 to anyone who could invent a way of precisely determining a ship’s longitude. The Longitude Prize, enacted by the British Parliament in 1714, would be worth some £30 million today, but even by that measure the value of the marine chronometer invented by British clockmaker John Harrison might be a deal.”

Centuries later, the Internet, World Wide Web, mobile devices and social media offer the best platforms in history for this kind of approach to solving grand challenges and catalyzing civic innovation, helping public officials and businesses find new ways to solve old problems. When a new idea, technology or methodology challenges and improves upon existing processes and systems, it can improve the lives of citizens or the function of the society that they live within….”

The advent of crowdfunding innovations for development

SciDevNet: “FundaGeek, TechMoola and RocketHub have more in common than just their curious names. These are all the monikers of crowdfunding websites that are dedicated to raising money for science and technology projects. As the coffers that were traditionally used to fund research and development have been squeezed in recent years, several such sites have sprouted up.

In 2013, general crowdfunding site Kickstarter saw a total of US$480 million pledged to its projects by three million backers. That’s up from US$320 million in 2012, US$99 million in 2011 and just US$28 million in 2010. Kickstarter expects the figures to climb further this year, and not just for popular projects such as films and books.

Science and technology projects, particularly those involving simple designs, are starting to make waves on these sites. And new sites, such as those bizarrely named ones, are now catering specifically for scientific projects, widening the choice of platforms on offer and raising crowdfunding’s profile among the global scientific community online.

All this means that crowdfunding is fast becoming one of the most significant innovations in funding the development of technology that can aid poor communities….

A good example of how crowdfunding can help the developing world is the GravityLight, a product launched on Indiegogo over a year ago that uses gravity to create light. Not only did UK design company Therefore massively exceed its initial funding target, ultimately raising US$400,000 instead of a planned US$55,000, it also amassed a global network of investors and distributors that has allowed the light to be trialled in 26 countries as of last December.

The light was developed in-house after private clients gave Therefore a brief to produce a cheap solar-powered lamp. Although this project faltered, the team independently set out to produce a lamp to replace the ubiquitous and dangerous kerosene lamps widely used in remote areas in Africa. After several months of development, Therefore had designed a product that is powered by a rope with a heavy weight on its end being slowly drawn through the light’s gears (see video)…

Crowdfunding is not always related to a specific product. Earlier this year, Indiegogo hosted a project hoping to build a clean energy store in a Ugandan village. The idea is to create an ongoing supply chain for technologies such as cleaner-burning stoves, water filters and solar lights that will improve or save lives, according to ENVenture, the project’s creators. [1] The US$2,000 target was comfortably exceeded…”

The Universe Is Programmable. We Need an API for Everything

Keith Axline in Wired: “Think about it like this: In the Book of Genesis, God is the ultimate programmer, creating all of existence in a monster six-day hackathon.

Or, if you don’t like Biblical metaphors, you can think about it in simpler terms. Robert Moses was a programmer, shaping and re-shaping the layout of New York City for more than 50 years. Drug developers are programmers, twiddling enzymes to cure what ails us. Even pickup artists and conmen are programmers, running social scripts on people to elicit certain emotional results.

The point is that, much like the computer on your desk or the iPhone in your hand, the entire Universe is programmable. Just as you can build apps for your smartphones and new services for the internet, so can you shape and re-shape almost anything in this world, from landscapes and buildings to medicines and surgeries to, well, ideas — as long as you know the code.

That may sound like little more than an exercise in semantics. But it’s actually a meaningful shift in thinking. If we look at the Universe as programmable, we can start treating it like software. In short, we can improve almost everything we do with the same simple techniques that have remade the creation of software in recent years, things like APIs, open source code, and the massively popular code-sharing service GitHub.

The great thing about the modern software world is that you don’t have to build everything from scratch. Apple provides APIs, or application programming interfaces, that can help you build apps on their devices. And though Tim Cook and company only give you part of what you need, you can find all sorts of other helpful tools elsewhere, thanks to the open source software community.

The same is true if you’re building, say, an online social network. There are countless open source software tools you can use as the basic building blocks — many of them open sourced by Facebook. If you’re creating almost any piece of software, you can find tools and documentation that will help you fashion at least a small part of it. Chances are, someone has been there before, and they’ve left some instructions for you.

Now we need to discover and document the APIs for the Universe. We need a standard way of organizing our knowledge and sharing it with the world at large, a problem for which programmers already have good solutions. We need to give everyone a way of handling tasks the way we build software. Such a system, if it can ever exist, is still years away — decades at the very least — and the average Joe is hardly ready for it. But this is changing. Nowadays, programming skills and the DIY ethos are slowly spreading throughout the population. Everyone is becoming a programmer. The next step is to realize that everything is a program.

What Is an API?

The API may sound like just another arcane computer acronym. But it’s really one of the most profound metaphors of our time, an idea hiding beneath the surface of each piece of tech we use every day, from iPhone apps to Facebook. To understand what APIs are and why they’re useful, let’s look at how programmers operate.

If I’m building a smartphone app, I’m gonna need, among so many other things, a way of validating a signup form on a webpage to make sure a user doesn’t, say, mistype their email address. That validation has nothing to do with the guts of my app, and it’s surprisingly complicated, so I don’t really want to build it from scratch. Apple doesn’t help me with that, so I start looking on the web for software frameworks, plugins, Software Development Kits (SDKs), anything that will help me build my signup tool.

Hopefully, I’ll find one. And if I do, chances are it will include some sort of documentation or “Readme file” explaining how this piece of code is supposed to be used so that I can tailor it to my app. This Readme file should contain installation instructions as well as the API for the code. Basically, an API lays out the code’s inputs and outputs. It shows me what I have to send the code and what it will spit back out. It shows how I bolt it onto my signup form. So the name is actually quite explanatory: Application Programming Interface. An API is essentially an instruction manual for a piece of software.
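
To make the inputs-and-outputs idea concrete, here is a minimal sketch in Python of what such a validator and its documented API might look like. The names `validate_email` and `ValidationResult` are hypothetical, invented for illustration, and the pattern is deliberately simplistic; it is not the API of any real library.

```python
import re
from dataclasses import dataclass

# Deliberately simple pattern (an assumption for this sketch): one "@",
# no whitespace, and at least one dot in the domain part.
# Real-world address validation is more involved than this.
_EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class ValidationResult:
    ok: bool       # True if the address passed the check
    message: str   # text the signup form could show to the user


def validate_email(address: str) -> ValidationResult:
    """Input: the text typed into the signup form.
    Output: whether it looks like an email address, plus a message."""
    if _EMAIL_PATTERN.match(address.strip()):
        return ValidationResult(True, "looks valid")
    return ValidationResult(False, "please check your email address")
```

The docstring is the API in miniature: it says what to send in and what comes back, which is all the signup form needs to know in order to bolt the validator on.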

Now, let’s combine this with the idea that everything is an application: molecules, galaxies, dogs, people, emotional states, abstract concepts like chaos. If you do something to any of these things, they’ll respond in some way. Like software, they have inputs and outputs. What we need to do is discover and document their APIs.

We aren’t dealing with software code here. Inputs and outputs can themselves be anything. But we can closely document these inputs and their outputs: take what we know about how we interface with something and record it in a standard way so that it can be used over and over again. We can create a Readme file for everything.
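
As a rough illustration of recording inputs and outputs "in a standard way," the sketch below stores them in one fixed shape and serializes them to JSON. The `InterfaceRecord` class and its field names are assumptions made up for this example, and the houseplant entry is placeholder data, not real knowledge about anything.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class InterfaceRecord:
    """One hypothetical 'Readme' entry: what you can do to a thing
    (inputs) and how it is known to respond (outputs)."""
    subject: str
    inputs: List[str]
    outputs: List[str]
    notes: str = ""

    def to_readme(self) -> str:
        # JSON is used here only because it is a widely readable format.
        return json.dumps(asdict(self), indent=2)


# Placeholder entry, purely illustrative.
record = InterfaceRecord(
    subject="houseplant",
    inputs=["water", "light"],
    outputs=["growth"],
    notes="illustrative placeholder, not real data",
)
print(record.to_readme())
```

Because every entry has the same shape, anyone could read, compare, or extend records without first learning how each author chose to write things down.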

We can start by doing this in small, relatively easy ways. How about APIs for our cities? New Zealand just open sourced aerial images of about 95 percent of its land. We could write APIs for what we know about building in those areas, from properties of the soil to seasonal weather patterns to zoning laws. All this knowledge exists but it hasn’t been organized and packaged for use by anyone who is interested. And we could go still further — much further.

For example, between the science community, the medical industry and the billions of human experiences, we could probably have a pretty extensive API mapped out of the human stomach — one that I’d love to access when I’m up at 3am with abdominal pains. Maybe my microbiome is out of whack and there’s something I have on-hand that I could ingest to make it better. Or what if we cracked the API for the signals between our eyes and our brain? We wouldn’t need to worry about looking like Glassholes to get access to always-on augmented reality. We could just get an implant. Yes, these APIs will be slightly different for everyone, but that brings me to the next thing we need.

A GitHub for Everything

We don’t just need a Readme for the Universe. We need a way of sharing this Readme and changing it as need be. In short, we need a system like GitHub, the popular online service that lets people share and collaborate on software code.

Let’s go back to the form validator I found earlier. Say I made some modifications to it that I think other programmers would find useful. If the validator is on GitHub, I can create a separate but related version — a fork — that people can find and contribute to, in the same way I first did with the original software.

GitHub not only enables this collaboration but also logs every change as a separate version. If someone were so inclined, they could go back and replay the building of the validator, from the very first save all the way up to my changes and those of whoever changes it after me. This creates a tree of knowledge, with giant groups of people creating and merging branches, working on their small section and then giving it back to the whole.
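
The forking and replaying described here can be sketched with a toy model in Python. This is not how Git or GitHub is implemented; `Repository`, `Version`, `save`, `fork` and `replay` are hypothetical names chosen only to show the idea of a logged history that can branch.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Version:
    author: str
    note: str      # short description of the change
    content: str   # the saved text at this point


@dataclass
class Repository:
    name: str
    history: List[Version] = field(default_factory=list)
    forked_from: Optional[str] = None

    def save(self, author: str, note: str, content: str) -> None:
        # Every change is logged as its own version.
        self.history.append(Version(author, note, content))

    def fork(self, new_name: str) -> "Repository":
        # The fork starts with a copy of the history, so later saves
        # to the fork do not alter the original.
        return Repository(new_name, list(self.history), forked_from=self.name)

    def replay(self) -> List[str]:
        # Walk the log from the very first save to the latest one.
        return [f"{v.author}: {v.note}" for v in self.history]


original = Repository("form-validator")
original.save("first author", "first save", "v1")

mine = original.fork("my-form-validator")
mine.save("me", "my modifications", "v2")

print(mine.replay())
```

The fork keeps the whole story of the original plus my own changes, while the original is left untouched until someone chooses to merge the work back in.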

We should be able to funnel all existing knowledge of how things work — not just software code — into a similar system. That way, if my brain-eye interface needs to be different, I (or my personal eye technician) can “fork” the API. In a way, this sort of thing is already starting to happen. People are using GitHub to share government laws, policy documents, Gregorian chants, and the list goes on. The ultimate goal should be to share everything.

Yes, this idea is similar to what you see on sites like Wikipedia, but the stuff that’s shared on Wikipedia doesn’t let you build much more than another piece of text. We don’t just need to know what things are. We need to know how they work in ways that let us operate on them.

The Open Source Epiphany

If you’ve never programmed, all this can sound a bit, well, abstract. But once you enter the coding world, getting a loose grasp on the fundamentals of programming, you instantly see the utility of open source software. “Oooohhh, I don’t have to build this all myself,” you say. “Thank God for the open source community.” Because so many smart people contribute to open source, it helps get the less knowledgeable up to speed quickly. Those acolytes then pay it forward with their own contributions once they’ve learned enough.

Today, more and more people are jumping on this train. More and more people are becoming programmers of some shape or form. It wasn’t so long ago that basic knowledge of HTML was considered specialized geek speak. But now, it’s a common requirement for almost any desk job. Gone are the days when kids made fun of their parents for not being able to set the clock on the VCR. Now they get mocked for mis-cropping their Facebook profile photos.

These changes are all part of the tech takeover of our lives that is trickling down to the masses. It’s like how the widespread use of cars brought a general mechanical understanding of engines to dads everywhere. And this general increase in aptitude is accelerating along with the technology itself.

Steps are being taken to make programming a skill that most kids get early in school along with general reading, writing, and math. In the not too distant future, people will need to program in some form for their daily lives. Imagine the world before the average person knew how to write a letter, or divide two numbers, compared to now. A similar leap is around the corner…”