Book by Kate Crawford: “What happens when artificial intelligence saturates political life and depletes the planet? How is AI shaping our understanding of ourselves and our societies? In this book Kate Crawford reveals how this planetary network is fueling a shift toward undemocratic governance and increased inequality. Drawing on more than a decade of research, award-winning science and technology scholar Kate Crawford reveals how AI is a technology of extraction: from the energy and minerals needed to build and sustain its infrastructure, to the exploited workers behind “automated” services, to the data AI collects from us.

Rather than taking a narrow focus on code and algorithms, Crawford offers us a political and a material perspective on what it takes to make artificial intelligence and where it goes wrong. While technical systems present a veneer of objectivity, they are always systems of power. This is an urgent account of what is at stake as technology companies use artificial intelligence to reshape the world…(More)”.

AI Ethics Needs Good Data

Paper by Angela Daly, S Kate Devitt, and Monique Mann: “In this chapter we argue that discourses on AI must transcend the language of ‘ethics’ and engage with power and political economy in order to constitute ‘Good Data’. In particular, we must move beyond the depoliticised language of ‘ethics’ currently deployed (Wagner 2018) in determining whether AI is ‘good’ given the limitations of ethics as a frame through which AI issues can be viewed. In order to circumvent these limits, we use instead the language and conceptualisation of ‘Good Data’, as a more expansive term to elucidate the values, rights and interests at stake when it comes to AI’s development and deployment, as well as that of other digital technologies.

Good Data considerations move beyond recurring themes of data protection/privacy and the FAT (fairness, transparency and accountability) movement to include explicit political economy critiques of power. Instead of yet more ethics principles (that tend to say the same or similar things anyway), we offer four ‘pillars’ on which Good Data AI can be built: community, rights, usability and politics. Overall we view AI’s ‘goodness’ as an explicitly political (economy) question of power and one which is always related to the degree to which AI is created and used to increase the wellbeing of society and especially to increase the power of the most marginalized and disenfranchised. We offer recommendations and remedies towards implementing ‘better’ approaches towards AI. Our strategies enable a different (but complementary) kind of evaluation of AI as part of the broader socio-technical systems in which AI is built and deployed….(More)”.

Towards Algorithm Auditing: A Survey on Managing Legal, Ethical and Technological Risks of AI, ML and Associated Algorithms

Paper by Adriano Koshiyama: “Business reliance on algorithms is becoming ubiquitous, and companies are increasingly concerned about their algorithms causing major financial or reputational damage. High-profile cases include VW’s Dieselgate scandal with fines worth $34.69B, Knight Capital’s bankruptcy (~$450M) caused by a glitch in its algorithmic trading system, and Amazon’s AI recruiting tool being scrapped after showing bias against women. In response, governments are legislating and imposing bans, regulators are fining companies, and the judiciary is discussing potentially making algorithms artificial “persons” in law.

Soon there will be ‘billions’ of algorithms making decisions with minimal human intervention, from autonomous vehicles and finance to medical treatment, employment, and legal decisions. Indeed, scaling to problems beyond the human is a major point of using such algorithms in the first place. As with Financial Audit, governments, business and society will require Algorithm Audit: formal assurance that algorithms are legal, ethical and safe. A new industry is envisaged: Auditing and Assurance of Algorithms (cf. Data privacy), with the remit to professionalize and industrialize AI, ML and associated algorithms.

The stakeholders range from those working on policy and regulation, to industry practitioners and developers. We also anticipate the nature and scope of the auditing levels and framework presented will inform those interested in systems of governance and compliance to regulation/standards. Our goal in this paper is to survey the key areas necessary to perform auditing and assurance, and instigate the debate in this novel area of research and practice….(More)”.

Practical Fairness

Book by Aileen Nielsen: “Fairness is becoming a paramount consideration for data scientists. Mounting evidence indicates that the widespread deployment of machine learning and AI in business and government is reproducing the same biases we’re trying to fight in the real world. But what does fairness mean when it comes to code? This practical book covers basic concerns related to data security and privacy to help data and AI professionals use code that’s fair and free of bias.

Many realistic best practices are emerging at all steps along the data pipeline today, from data selection and preprocessing to closed model audits. Author Aileen Nielsen guides you through technical, legal, and ethical aspects of making code fair and secure, while highlighting up-to-date academic research and ongoing legal developments related to fairness and algorithms.

- Identify potential bias and discrimination in data science models

- Use preventive measures to minimize bias when developing data modeling pipelines

- Understand what data pipeline components implicate security and privacy concerns

- Write data processing and modeling code that implements best practices for fairness

- Recognize the complex interrelationships between fairness, privacy, and data security created by the use of machine learning models

- Apply normative and legal concepts relevant to evaluating the fairness of machine learning models…(More)”.
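Turning fairness concerns into code usually starts with measuring how outcomes differ across groups. As a hedged illustration only (this is not an example from Nielsen's book), the sketch below computes per-group selection rates, the demographic parity difference, and the disparate impact ratio, and screens that ratio against the US "four-fifths" heuristic. It assumes binary (0/1) predictions and a single categorical sensitive attribute per record; all function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group label."""
    if len(predictions) != len(groups) or not predictions:
        raise ValueError("predictions and groups must be non-empty and equal length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        # Assumption: if no group receives a positive outcome, report parity.
        return 1.0
    return min(rates.values()) / highest


def passes_four_fifths(ratio, threshold=0.8):
    """Heuristic screen: ratios below 4/5 are flagged for closer review."""
    return ratio >= threshold


if __name__ == "__main__":
    # Toy data: group "a" is selected 3 times out of 5, group "b" 2 times out of 5.
    preds = [1, 1, 0, 1, 0, 1, 0, 0, 0, 1]
    groups = ["a"] * 5 + ["b"] * 5
    ratio = disparate_impact_ratio(preds, groups)
    print(selection_rates(preds, groups))
    print(round(demographic_parity_difference(preds, groups), 3))
    print(round(ratio, 3), passes_four_fifths(ratio))
```

A ratio below 0.8 is a prompt for investigation, not proof of discrimination. Which metric is appropriate depends on context, and demographic parity can conflict with other criteria such as equalized odds.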

A new intelligence paradigm: how the emerging use of technology can achieve sustainable development (if done responsibly)

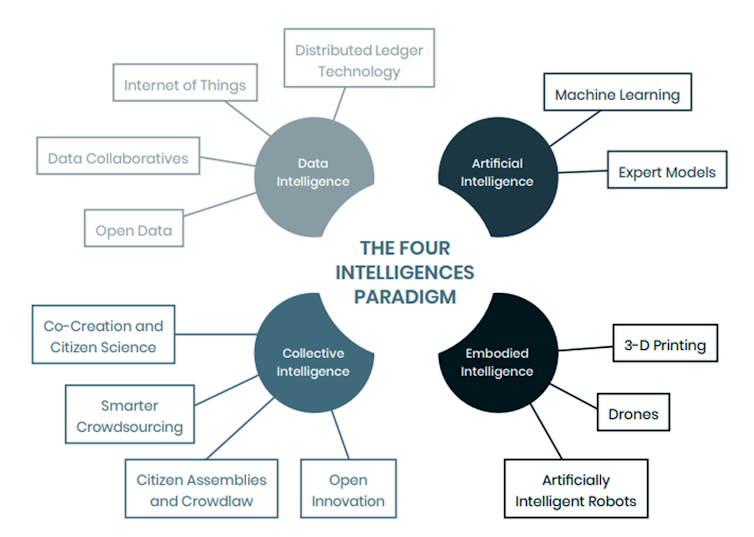

Peter Addo and Stefaan G. Verhulst in The Conversation: “….This month, the GovLab and the French Development Agency (AFD) released a report looking at precisely these possibilities. “Emerging Uses of Technology for Development: A New Intelligence Paradigm” examines how development practitioners are experimenting with emerging forms of technology to advance development goals. It considers when practitioners might turn to these tools and provides some recommendations to guide their application.

Broadly, the report concludes that experiments with new technologies in development have produced value and offer opportunities for progress. These technologies – which include data intelligence, artificial intelligence, collective intelligence, and embodied intelligence tools – are associated with different prospective benefits and risks. It is essential they be informed by design principles and practical considerations.

Four intelligences

The report derives its conclusions from an analysis of dozens of projects across Africa, including in Senegal, Tanzania, and Uganda. Linking practice and theory, this approach allows us to construct a conceptual framework that helps development practitioners allocate resources and make key decisions based on their specific circumstances. We call this framework the “four intelligences” paradigm; it offers a way to make sense of how new and emerging technologies intersect with the development field….(More)” (Full Report).

Facial Recognition in the Public Sector: The Policy Landscape

Brief by Rashida Richardson: “Facial-recognition technology is increasingly common throughout society. We can unlock our phones with our faces, smart doorbells let us know who is outside our home, and sentiment analysis allows potential employers to screen interviewees for desirable traits. In the public sector, facial recognition is now in widespread use—in schools, public housing, public transportation, and other areas. Some of the most worrying applications of the technology are in law enforcement, with police departments and other bodies in the United States, Europe, and elsewhere around the world using public and private databases of photos to identify criminal suspects and conduct real-time surveillance of public spaces.

Despite the widespread use of facial recognition and the concerns it presents for privacy and civil liberties, this technology is subject to only a patchwork of laws and regulations. Certain jurisdictions have imposed bans on its use while others have implemented more targeted interventions. In some cases, laws and regulations written to address other technologies may apply to facial recognition as well.

This brief first surveys how facial-recognition technology has been deployed in the public sector around the world. It then reviews the spectrum of proposed and pending laws and regulations that seek to mitigate or address human and civil rights concerns associated with government use of facial recognition, including:

- moratoriums and bans

- standards, limitations, and requirements regarding databases or data sources

- data regulations

- oversight and use requirements

- government commissions, consultations, and studies…(More)”

Beyond the promise: implementing ethical AI

Ray Eitel-Porter at AI and Ethics: “Artificial Intelligence (AI) applications can and do have unintended negative consequences for businesses if not implemented with care. Specifically, faulty or biased AI applications risk compliance and governance breaches and damage to the corporate brand. These issues commonly arise from a number of pitfalls associated with AI development, which include rushed development, a lack of technical understanding, and improper quality assurance, among other factors. To mitigate these risks, a growing number of organisations are working on ethical AI principles and frameworks. However, ethical AI principles alone are not sufficient for ensuring responsible AI use in enterprises. Businesses also require strong, mandated governance controls including tools for managing processes and creating associated audit trails to enforce their principles. Businesses that implement strong governance frameworks, overseen by an ethics board and strengthened with appropriate training, will reduce the risks associated with AI. When applied to AI modelling, the governance will also make it easier for businesses to bring their AI deployments to scale….(More)”.

This is how we lost control of our faces

Karen Hao at MIT Technology Review: “In 1964, mathematician and computer scientist Woodrow Bledsoe first attempted the task of matching suspects’ faces to mugshots. He measured out the distances between different facial features in printed photographs and fed them into a computer program. His rudimentary successes would set off decades of research into teaching machines to recognize human faces.

Now a new study shows just how much this enterprise has eroded our privacy. It hasn’t just fueled an increasingly powerful tool of surveillance. The latest generation of deep-learning-based facial recognition has completely disrupted our norms of consent.

Deborah Raji, a fellow at nonprofit Mozilla, and Genevieve Fried, who advises members of the US Congress on algorithmic accountability, examined over 130 facial-recognition data sets compiled over 43 years. They found that researchers, driven by the exploding data requirements of deep learning, gradually abandoned asking for people’s consent. This has led more and more of people’s personal photos to be incorporated into systems of surveillance without their knowledge.

It has also led to far messier data sets: they may unintentionally include photos of minors, use racist and sexist labels, or have inconsistent quality and lighting. The trend could help explain the growing number of cases in which facial-recognition systems have failed with troubling consequences, such as the false arrests of two Black men in the Detroit area last year.

People were extremely cautious about collecting, documenting, and verifying face data in the early days, says Raji. “Now we don’t care anymore. All of that has been abandoned,” she says. “You just can’t keep track of a million faces. After a certain point, you can’t even pretend that you have control.”…(More)”.

AI Ethics: Global Perspectives

“The Governance Lab (The GovLab), NYU Tandon School of Engineering, Global AI Ethics Consortium (GAIEC), Center for Responsible AI @ NYU (R/AI), and Technical University of Munich (TUM) Institute for Ethics in Artificial Intelligence (IEAI) jointly launched a free, online course, AI Ethics: Global Perspectives, on February 1, 2021. Designed for a global audience, it conveys the breadth and depth of the ongoing interdisciplinary conversation on AI ethics and seeks to bring together diverse perspectives from the field of ethical AI, to raise awareness and help institutions work towards more responsible use.

“The use of data and AI is steadily growing around the world – there should be simultaneous efforts to increase literacy, awareness, and education around the ethical implications of these technologies,” said Stefaan Verhulst, Co-Founder and Chief Research and Development Officer of The GovLab. “The course will allow experts to jointly develop a global understanding of AI.”

“AI is a global challenge, and so is AI ethics,” said Christoph Lütge, the director of IEAI. “The ethical challenges related to the various uses of AI require multidisciplinary and multi-stakeholder engagement, as well as collaboration across cultures, organizations, academic institutions, etc. This online course is GAIEC’s attempt to approach and apply AI ethics effectively in practice.”

The course modules comprise pre-recorded lectures on AI Applications, Data and AI, or Governance Frameworks, along with supplemental readings. New course lectures will be released the first week of every month.

“The goal of this course is to create a nuanced understanding of the role of technology in society so that we, the people, have tools to make AI work for the benefit of society,” said Julia Stoyanovich, a Tandon Assistant Professor of Computer Science and Engineering, Director of the Center for Responsible AI at NYU Tandon, and an Assistant Professor at the NYU Center for Data Science. “It is up to us (current and future data scientists, business leaders, policy makers, and members of the public) to make AI what we want it to be.”

The collaboration will release four new modules in February. These include lectures from:

- Idoia Salazar, President and Co-Founder of OdiseIA, who presents “Alexa vs Alice: Cultural Perspectives on the Impact of AI.” Salazar explores why it is important to take into account the cultural, geographical, and temporal aspects of AI, as well as their precise identification, in order to achieve the correct development and implementation of AI systems;

- Jerry John Kponyo, Associate Professor of Telecommunication Engineering at KNUST, who sheds light on the fundamentals of Artificial Intelligence in Transportation Systems (AITS) and safety, and looks at the technologies at play in their implementation;

- Danya Glabau, Director of Science and Technology Studies at the NYU Tandon School of Engineering, who asks and answers the question, “Who is artificial intelligence for?” and presents evidence that AI systems do not always help their intended users and constituencies;

- Mark Findlay, Director of the Centre for AI and Data Governance at SMU, who reviews the ethical challenges (discrimination, lack of transparency, neglect of individual rights, and more) that have arisen from COVID-19 technologies and the mass data accumulation they have produced.

To learn more and sign up to receive updates as new modules are added, visit the course website at aiethicscourse.org.”

From satisficing to artificing: The evolution of administrative decision-making in the age of the algorithm

Paper by Thea Snow at Data & Policy: “Algorithmic decision tools (ADTs) are being introduced into public sector organizations to support more accurate and consistent decision-making. Whether they succeed turns, in large part, on how administrators use these tools. This is one of the first empirical studies to explore how ADTs are being used by street-level bureaucrats (SLBs). The author develops an original conceptual framework and uses in-depth interviews to explore whether SLBs are ignoring ADTs (algorithm aversion); deferring to ADTs (automation bias); or using ADTs together with their own judgment (an approach the author calls “artificing”). Interviews reveal that artificing is the most common use-type, followed by aversion, while deference is rare. Five conditions appear to influence how practitioners use ADTs: (a) understanding of the tool; (b) perception of human judgment; (c) seeing value in the tool; (d) being offered opportunities to modify the tool; and (e) alignment of the tool with expectations….(More)”.