Paper by Simon Chesterman: “The past decade has seen a proliferation of guides, frameworks, and principles put forward by states, industry, inter- and non-governmental organizations to address matters of AI ethics. These diverse efforts have led to a broad consensus on what norms might govern AI. Far less energy has gone into determining how these might be implemented — or if they are even necessary. This chapter focuses on the intersection of ethics and law, in particular discussing why regulation is necessary, when regulatory changes should be made, and how it might work in practice. Two specific areas for law reform address the weaponization and victimization of AI. Regulations aimed at general AI are particularly difficult in that they confront many ‘unknown unknowns’, but the threat of uncontrollable or uncontainable AI became more widely discussed with the spread of large language models such as ChatGPT in 2023. Additionally, however, there will be a need to prohibit some conduct in which increasingly lifelike machines are the victims — comparable, perhaps, to animal cruelty laws…(More)”

Detecting Human Rights Violations on Social Media during Russia-Ukraine War

Paper by Poli Nemkova, et al: “The present-day Russia-Ukraine military conflict has exposed the pivotal role of social media in enabling the transparent and unbridled sharing of information directly from the frontlines. In conflict zones where freedom of expression is constrained and information warfare is pervasive, social media has emerged as an indispensable lifeline. Anonymous social media platforms, as publicly available sources for disseminating war-related information, have the potential to serve as effective instruments for monitoring and documenting Human Rights Violations (HRV). Our research focuses on the analysis of data from Telegram, the leading social media platform for reading independent news in post-Soviet regions. We gathered a dataset of posts sampled from 95 public Telegram channels that cover politics and war news, which we have utilized to identify potential occurrences of HRV. Employing an mBERT-based text classifier, we have conducted an analysis to detect any mentions of HRV in the Telegram data. Our final approach yielded an F2 score of 0.71 for HRV detection, representing an improvement of 0.38 over the multilingual BERT base model. We release two datasets that contain Telegram posts: (1) a large corpus with over 2.3 million posts and (2) a dataset annotated at the sentence level to indicate HRVs. The Telegram posts are in the context of the Russia-Ukraine war. We posit that our findings hold significant implications for NGOs, governments, and researchers by providing a means to detect and document possible human rights violations…(More)” See also Data for Peace and Humanitarian Response? The Case of the Ukraine-Russia War
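
For readers unfamiliar with the F2 score quoted in the abstract above, the following is a minimal sketch of the standard F-beta formula, F_beta = (1 + beta^2) * P * R / (beta^2 * P + R), with beta = 2 so that recall counts more heavily than precision. The function names and the precision/recall inputs are illustrative assumptions; they are not taken from the paper, which reports only the final score.

```python
def f_beta(precision: float, recall: float, beta: float = 2.0) -> float:
    """Weighted harmonic mean of precision and recall.

    beta > 1 weights recall more heavily; beta = 2 gives the F2 score.
    """
    if precision == 0.0 and recall == 0.0:
        return 0.0  # conventional value when nothing was predicted correctly
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def f_beta_from_counts(tp: int, fp: int, fn: int, beta: float = 2.0) -> float:
    """Same score computed from true positives, false positives, false negatives."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return f_beta(precision, recall, beta)


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to show the asymmetry of F2.
    print(round(f_beta(0.5, 1.0), 4))  # high recall, low precision -> 0.8333
    print(round(f_beta(1.0, 0.5), 4))  # high precision, low recall -> 0.5556
```

Swapping precision and recall changes the result, which is why F2 suits a task where missing a mention of a violation is presumably costlier than raising a false alarm.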

The Power and Perils of the “Artificial Hand”: Considering AI Through the Ideas of Adam Smith

Speech by Gita Gopinath: “…Nowadays, it’s almost impossible to talk about economics without invoking Adam Smith. We take for granted many of his concepts, such as the division of labor and the invisible hand. Yet, at the time when he was writing, these ideas went against the grain. He wasn’t afraid to push boundaries and question established thinking.

Smith grappled with how to advance well-being and prosperity at a time of great change. The Industrial Revolution was ushering in new technologies that would revolutionize the nature of work, create winners and losers, and potentially transform society. But their impact wasn’t yet clear. The Wealth of Nations, for example, was published the same year James Watt unveiled his steam engine.

Today, we find ourselves at a similar inflection point, where a new technology, generative artificial intelligence, could change our lives in spectacular—and possibly existential—ways. It could even redefine what it means to be human.

Given the parallels between Adam Smith’s time and ours, I’d like to propose a thought experiment: If he were alive today, how would Adam Smith have responded to the emergence of this new “artificial hand”?…(More)”.

There’s a model for governing AI. Here it is.

Article by Jacinda Ardern: “…On March 15, 2019, a terrorist took the lives of 51 members of New Zealand’s Muslim community in Christchurch. The attacker livestreamed his actions for 17 minutes, and the images found their way onto social media feeds all around the planet. Facebook alone blocked or removed 1.5 million copies of the video in the first 24 hours; in that timeframe, YouTube measured one upload per second.

Afterward, New Zealand was faced with a choice: accept that such exploitation of technology was inevitable or resolve to stop it. We chose to take a stand.

We had to move quickly. The world was watching our response and that of social media platforms. Would we regulate in haste? Would the platforms recognize their responsibility to prevent this from happening again?

New Zealand wasn’t the only nation grappling with the connection between violent extremism and technology. We wanted to create a coalition and knew that France had started to work in this space — so I reached out, leader to leader. In my first conversation with President Emmanuel Macron, he agreed there was work to do and said he was keen to join us in crafting a call to action.

We asked industry, civil society and other governments to join us at the table to agree on a set of actions we could all commit to. We could not use existing structures and bureaucracies because they weren’t equipped to deal with this problem.

Within two months of the attack, we launched the Christchurch Call to Action, and today it has more than 120 members, including governments, online service providers and civil society organizations — united by our shared objective to eliminate terrorist and other violent extremist content online and uphold the principle of a free, open and secure internet.

The Christchurch Call is a large-scale collaboration, vastly different from most top-down approaches. Leaders meet annually to confirm priorities and identify areas of focus, allowing the project to act dynamically. And the Call Secretariat — made up of officials from France and New Zealand — convenes working groups and undertakes diplomatic efforts throughout the year. All members are invited to bring their expertise to solve urgent online problems.

While this multi-stakeholder approach isn’t always easy, it has created change. We have bolstered the power of governments and communities to respond to attacks like the one New Zealand experienced. We have created new crisis-response protocols — which enabled companies to stop the 2022 Buffalo attack livestream within two minutes and quickly remove footage from many platforms. Companies and countries have enacted new trust and safety measures to prevent livestreaming of terrorist and other violent extremist content. And we have strengthened the industry-founded Global Internet Forum to Counter Terrorism with dedicated funding, staff and a multi-stakeholder mission.

We’re also taking on some of the more intransigent problems. The Christchurch Call Initiative on Algorithmic Outcomes, a partnership with companies and researchers, was intended to provide better access to the kind of data needed to design online safety measures to prevent radicalization to violence. In practice, it has much wider ramifications, enabling us to reveal more about the ways in which AI and humans interact.

From its start, the Christchurch Call anticipated the emerging challenges of AI and carved out space to address emerging technologies that threaten to foment violent extremism online. The Christchurch Call is actively tackling these AI issues.

Perhaps the most useful thing the Christchurch Call can add to the AI governance debate is the model itself. It is possible to bring companies, government officials, academics and civil society together not only to build consensus but also to make progress. It’s possible to create tools that address the here and now and also position ourselves to face an unknown future. We need this to deal with AI…(More)”.

AI and Global Governance: Modalities, Rationales, Tensions

Paper by Michael Veale, Kira Matus and Robert Gorwa: “Artificial intelligence (AI) is a salient but polarizing issue of recent times. Actors around the world are engaged in building a governance regime around it. What exactly the “it” is that is being governed, how, by who, and why—these are all less clear. In this review, we attempt to shine some light on those questions, considering literature on AI, the governance of computing, and regulation and governance more broadly. We take critical stock of the different modalities of the global governance of AI that have been emerging, such as ethical councils, industry governance, contracts and licensing, standards, international agreements, and domestic legislation with extraterritorial impact. Considering these, we examine selected rationales and tensions that underpin them, drawing attention to the interests and ideas driving these different modalities. As these regimes become clearer and more stable, we urge those engaging with or studying the global governance of AI to constantly ask the important question of all global governance regimes: Who benefits?…(More)”.

Artificial Intelligence in the COVID-19 Response

Report by Sean Mann, Carl Berdahl, Lawrence Baker, and Federico Girosi: “We conducted a scoping review to identify AI applications used in the clinical and public health response to COVID-19. Interviews with stakeholders early in the research process helped inform our research questions and guide our study design. We conducted a systematic search, screening, and full text review of both academic and gray literature…

- AI is still an emerging technology in health care, with growing but modest rates of adoption in real-world clinical and public health practice. The COVID-19 pandemic showcased the wide range of clinical and public health functions performed by AI as well as the limited evidence available on most AI products that have entered use.

- We identified 66 AI applications (full list in Appendix A) used to perform a wide range of diagnostic, prognostic, and treatment functions in the clinical response to COVID-19. This included applications used to analyze lung images, evaluate user-reported symptoms, monitor vital signs, predict infections, and aid in breathing tube placement. Some applications were used by health systems to help allocate scarce resources to patients.

- Many clinical applications were deployed early in the pandemic, and most were used in the United States, other high-income countries, or China. A few applications were used to care for hundreds of thousands or even millions of patients, although most were used to an unknown or limited extent.

- We identified 54 AI-based public health applications used in the pandemic response. These included AI-enabled cameras used to monitor health-related behavior and health messaging chatbots used to answer questions about COVID-19. Other applications were used to curate public health information, produce epidemiologic forecasts, or help prioritize communities for vaccine allocation and outreach efforts.

- We found studies supporting the use of 39 clinical applications and 8 public health applications, although few of these were independent evaluations, and we found no clinical trials evaluating any application’s impact on patient health. We found little evidence available on entire classes of applications, including some used to inform care decisions such as patient deterioration monitors.

- Further research is needed, particularly independent evaluations on application performance and health impacts in real-world care settings. New guidance may be needed to overcome the unique challenges to evaluating AI application impacts on patient- and population-level health outcomes….(More)” – See also: The #Data4Covid19 Review

From LogFrames to Logarithms – A Travel Log

Article by Karl Steinacker and Michael Kubach: “…Today, authorities all over the world are experimenting with predictive algorithms. That sounds technical and innocent but as we dive deeper into the issue, we realise that the real meaning is rather specific: fraud detection systems in social welfare payment systems. In the meantime, the hitherto banned terminology has made its comeback: welfare or social safety nets have, for a couple of years now, been en vogue again. But in the centuries-old Western tradition, welfare recipients must be monitored and, if necessary, sanctioned, while those who work and contribute must be assured that there is no waste. So it comes as no surprise that even today’s algorithms focus on the prime suspect, the individual fraudster, the undeserving poor.

Fraud detection systems promise that the taxpayer will no longer fall victim to fraud and that efficiency gains can be re-directed to serve more people. The true extent of welfare fraud is regularly exaggerated while the cost of such systems is routinely underestimated. A comparison of the estimated losses and investments doesn’t take place. It is the principle of detecting and punishing the fraudsters that prevails. Other issues don’t rank high either, for example how to distinguish between honest mistakes and deliberate fraud. And the more time case workers spend entering and analysing data in front of a computer screen, the less time and inclination they have to talk to real people and to understand the context of their life at the margins of society.

Thus, it can be said that hundreds of thousands of people are routinely being scored. Take Denmark as an example: here, a system called Udbetaling Danmark was created in 2012 to streamline the payment of welfare benefits. Its fraud control algorithms can access the personal data of millions of citizens, not all of whom receive welfare payments. In contrast to the hundreds of thousands affected by this data mining, the number of cases referred to the police for further investigation is minute.

In the city of Rotterdam in the Netherlands every year, data of 30,000 welfare recipients is investigated in order to flag suspected welfare cheats. However, an analysis of its scoring system based on machine learning and algorithms showed systemic discrimination with regard to ethnicity, age, gender, and parenthood. It revealed evidence of other fundamental flaws making the system both inaccurate and unfair. What might appear to a caseworker as a vulnerability is treated by the machine as grounds for suspicion. Despite the scale of data used to calculate risk scores, the output of the system is not better than random guesses. However, the consequences of being flagged by the “suspicion machine” can be drastic, with fraud controllers empowered to turn the lives of suspects inside out.

As reported by the World Bank, the recent Covid-19 pandemic provided a great push to implement digital social welfare systems in the global South. In fact, for the World Bank the so-called Digital Public Infrastructure (DPI), enabling “Digitizing Government to Person Payments (G2Px)”, is as fundamental for social and economic development today as physical infrastructure was for previous generations. Hence, the World Bank is financing systems globally that are modelled after the Indian Aadhaar system, where more than a billion persons have been registered biometrically. Aadhaar has become, for all intents and purposes, a pre-condition to receive subsidised food and other assistance for 800 million Indian citizens.

Important international aid organisations are not behaving differently from states. The World Food Programme alone holds data of more than 40 million people on its Scope database. Unfortunately, WFP, like other UN organisations, is not subject to data protection laws and the jurisdiction of courts. This makes the communities they have worked with particularly vulnerable.

In most places, the social will become the metric, where logarithms determine the operational conduit for delivering, controlling and withholding assistance, especially welfare payments. In other places, the power of logarithms may go even further, as part of trust systems, creditworthiness, and social credit. These social credit systems for individuals are highly controversial as they require mass surveillance since they aim to track behaviour beyond financial solvency. The social credit score of a citizen might not only suffer from incomplete, or inaccurate data, but also from assessing political loyalties and conformist social behaviour…(More)”.

How AI could take over elections – and undermine democracy

Article by Archon Fung and Lawrence Lessig: “Could organizations use artificial intelligence language models such as ChatGPT to induce voters to behave in specific ways?

Sen. Josh Hawley asked OpenAI CEO Sam Altman this question in a May 16, 2023, U.S. Senate hearing on artificial intelligence. Altman replied that he was indeed concerned that some people might use language models to manipulate, persuade and engage in one-on-one interactions with voters.

Altman did not elaborate, but he might have had something like this scenario in mind. Imagine that soon, political technologists develop a machine called Clogger – a political campaign in a black box. Clogger relentlessly pursues just one objective: to maximize the chances that its candidate – the campaign that buys the services of Clogger Inc. – prevails in an election.

While platforms like Facebook, Twitter and YouTube use forms of AI to get users to spend more time on their sites, Clogger’s AI would have a different objective: to change people’s voting behavior.

As a political scientist and a legal scholar who study the intersection of technology and democracy, we believe that something like Clogger could use automation to dramatically increase the scale and potentially the effectiveness of behavior manipulation and microtargeting techniques that political campaigns have used since the early 2000s. Just as advertisers use your browsing and social media history to individually target commercial and political ads now, Clogger would pay attention to you – and hundreds of millions of other voters – individually.

It would offer three advances over the current state-of-the-art algorithmic behavior manipulation. First, its language model would generate messages — texts, social media and email, perhaps including images and videos — tailored to you personally. Whereas advertisers strategically place a relatively small number of ads, language models such as ChatGPT can generate countless unique messages for you personally – and millions for others – over the course of a campaign.

Second, Clogger would use a technique called reinforcement learning to generate a succession of messages that become increasingly more likely to change your vote. Reinforcement learning is a machine-learning, trial-and-error approach in which the computer takes actions and gets feedback about which work better in order to learn how to accomplish an objective. Machines that can play Go, Chess and many video games better than any human have used reinforcement learning.

Third, over the course of a campaign, Clogger’s messages could evolve in order to take into account your responses to the machine’s prior dispatches and what it has learned about changing others’ minds. Clogger would be able to carry on dynamic “conversations” with you – and millions of other people – over time. Clogger’s messages would be similar to ads that follow you across different websites and social media…(More)”.
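
The trial-and-error loop that the excerpt above describes as reinforcement learning can be illustrated with a deliberately simple sketch: an epsilon-greedy multi-armed bandit, one of the most basic reinforcement learning settings. This is not the system the authors imagine, only a toy illustration of "take an action, get feedback, prefer what worked". The function name, the generic options, and their hidden success probabilities are all hypothetical.

```python
import random


def epsilon_greedy_bandit(success_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn by trial and error which of several options pays off most often.

    success_probs: hidden chance that each option yields a reward (assumed
                   values for the simulation; the learner never sees them).
    Returns (estimated_values, pull_counts).
    """
    rng = random.Random(seed)
    n = len(success_probs)
    counts = [0] * n      # how often each option was tried
    values = [0.0] * n    # running average reward per option

    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try a random option
        else:
            action = max(range(n), key=lambda a: values[a])  # exploit best so far

        # Feedback from the environment: reward of 1 with the hidden probability.
        reward = 1.0 if rng.random() < success_probs[action] else 0.0

        # Incremental update of the running average for the chosen option.
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]

    return values, counts


if __name__ == "__main__":
    values, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
    print("estimated values:", [round(v, 2) for v in values])
    print("times tried:", counts)
```

Over many rounds the learner concentrates its choices on the option with the highest hidden success rate while still occasionally testing the others, which is the basic mechanism the excerpt refers to.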

Governing the Unknown

Article by Kaushik Basu: “Technology is changing the world faster than policymakers can devise new ways to cope with it. As a result, societies are becoming polarized, inequality is rising, and authoritarian regimes and corporations are doctoring reality and undermining democracy.

For ordinary people, there is ample reason to be “a little bit scared,” as OpenAI CEO Sam Altman recently put it. Major advances in artificial intelligence raise concerns about education, work, warfare, and other risks that could destabilize civilization long before climate change does. To his credit, Altman is urging lawmakers to regulate his industry.

In confronting this challenge, we must keep two concerns in mind. The first is the need for speed. If we take too long, we may find ourselves closing the barn door after the horse has bolted. That is what happened with the 1968 Nuclear Non-Proliferation Treaty: It came 23 years too late. If we had managed to establish some minimal rules after World War II, the NPT’s ultimate goal of nuclear disarmament might have been achievable.

The other concern involves deep uncertainty. This is such a new world that even those working on AI do not know where their inventions will ultimately take us. A law enacted with the best intentions can still backfire. When America’s founders drafted the Second Amendment conferring the “right to keep and bear arms,” they could not have known how firearms technology would change in the future, thereby changing the very meaning of the word “arms.” Nor did they foresee how their descendants would fail to realize this even after seeing the change.

But uncertainty does not justify fatalism. Policymakers can still effectively govern the unknown as long as they keep certain broad considerations in mind. For example, one idea that came up during a recent Senate hearing was to create a licensing system whereby only select corporations would be permitted to work on AI.

This approach comes with some obvious risks of its own. Licensing can often be a step toward cronyism, so we would also need new laws to deter politicians from abusing the system. Moreover, slowing your country’s AI development with additional checks does not mean that others will adopt similar measures. In the worst case, you may find yourself facing adversaries wielding precisely the kind of malevolent tools that you eschewed. That is why AI is best regulated multilaterally, even if that is a tall order in today’s world…(More)”.

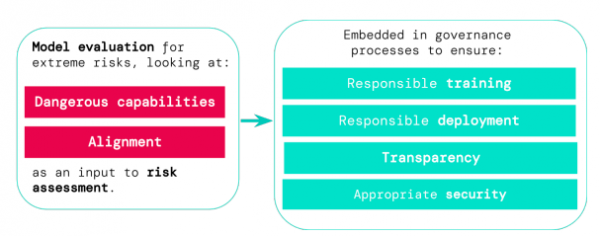

Model evaluation for extreme risks

Paper by Toby Shevlane et al: “Current approaches to building general-purpose AI systems tend to produce systems with both beneficial and harmful capabilities. Further progress in AI development could lead to capabilities that pose extreme risks, such as offensive cyber capabilities or strong manipulation skills. We explain why model evaluation is critical for addressing extreme risks. Developers must be able to identify dangerous capabilities (through “dangerous capability evaluations”) and the propensity of models to apply their capabilities for harm (through “alignment evaluations”). These evaluations will become critical for keeping policymakers and other stakeholders informed, and for making responsible decisions about model training, deployment, and security.

Figure 1 | The theory of change for model evaluations for extreme risk. Evaluations for dangerous capabilities and alignment inform risk assessments, and are in turn embedded into important governance processes…(More)”.