New Report by Professor Gwanhoo Lee for the IBM Center for The Business of Government: “Ideation is the process of generating new ideas or solutions using crowdsourcing technologies, and it is changing the way federal government agencies innovate and solve problems. Ideation tools use online brainstorming or social voting platforms to submit new ideas, search previously submitted ideas, post questions and challenges, discuss and expand on ideas, vote them up or down and flag them.

This report examines the current status, challenges, and best practices of federal internal ideation programs made available exclusively to employees. Initial experiences from a variety of agencies show that these ideation tools hold great promise in engaging employees and stakeholders in problem-solving.

While ideation programs offer promising benefits, making innovation an aspect of everyone’s job is very hard to achieve. Given that these ideation tools and programs are still relatively new, agencies have not yet figured out the best practices and often do not know what to expect during the implementation process. This report seeks to fill this gap.

Based on field research and a literature review, the report describes four federal internal ideation programs: IdeaHub (Department of Transportation), the Sounding Board (Department of State), IdeaFactory (Department of Homeland Security), and CDC IdeaLab (Centers for Disease Control and Prevention, Department of Health and Human Services).

Four important challenges are associated with the adoption and implementation of federal internal ideation programs. These are: managing the ideation process and technology; managing cultural change; managing privacy, security and transparency; and managing use of the ideation tool.

Federal government agencies have been moving in the right direction by embracing these tools and launching ideation programs to boost employee-driven innovation. However, many daunting challenges and issues remain to be addressed. To sustain its internal ideation program, a federal agency should consider the following recommendations:

Recommendation One: Treat the ideation program not as a management fad but as a vehicle to reinvent the agency.

Recommendation Two: Institutionalize the ideation program.

Recommendation Three: Make the ideation team a permanent organizational unit.

Recommendation Four: Document ideas that are implemented. Quantify their impact and demonstrate the return on investment. Share the return with the employees through meaningful rewards.

Recommendation Five: Assimilate and integrate the ideation program into the mission-critical administrative processes.

Recommendation Six: Develop an easy-to-use mobile app for the ideation system.

Recommendation Seven: Keep learning from other agencies and even from commercial organizations.”
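The report names voting ideas up or down and flagging them as core features of ideation tools. The following minimal Python sketch shows one way such a feed might be ordered: it ranks ideas by net votes and holds back heavily flagged ones. The `Idea` record, the net-score ranking and the `flag_threshold` of 3 are all hypothetical choices. This is not how IdeaHub, the Sounding Board, IdeaFactory or CDC IdeaLab actually work.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Idea:
    """Hypothetical record for one submitted idea (not any agency's real schema)."""
    title: str
    up: int = 0
    down: int = 0
    flags: int = 0


def rank_ideas(ideas: List[Idea], flag_threshold: int = 3) -> List[Idea]:
    """Return visible ideas ordered by net votes (up minus down), highest first.

    Ideas flagged at least `flag_threshold` times are withheld, e.g. for moderator review.
    """
    visible = [i for i in ideas if i.flags < flag_threshold]
    return sorted(visible, key=lambda i: i.up - i.down, reverse=True)


ideas = [
    Idea("Shared printer pool", up=12, down=2),
    Idea("Mobile timesheet app", up=30, down=5),
    Idea("Off-topic post", up=1, down=9, flags=4),
]
for idea in rank_ideas(ideas):
    print(idea.title, idea.up - idea.down)
```

A net score is the simplest choice. A real program might also weight recent votes more heavily or normalize by how long an idea has been open.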

Mozilla Location Service: crowdsourcing data to help devices find your location without GPS

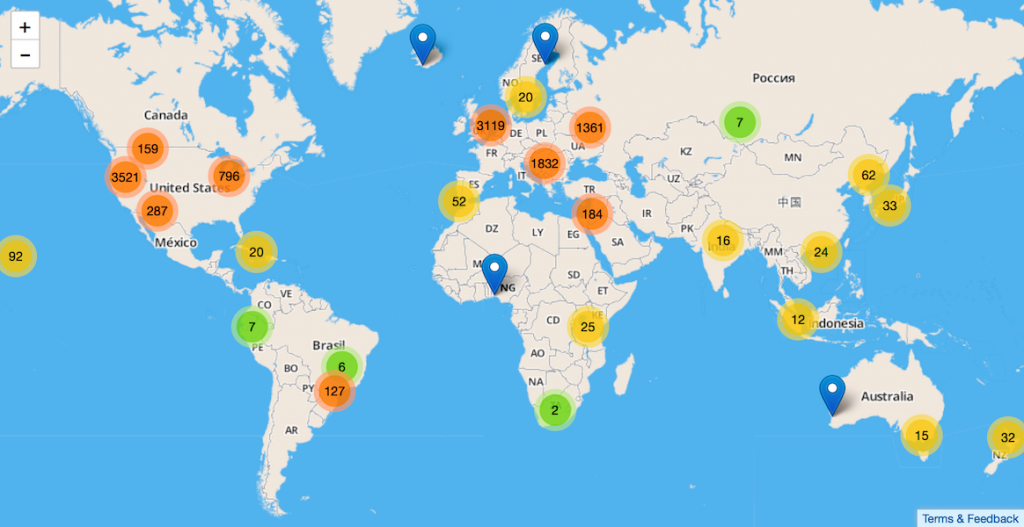

“The Mozilla Location Service is an experimental pilot project to provide geolocation lookups based on publicly observable cell tower and WiFi access point information. Currently in its early stages, it already provides basic service coverage of select locations thanks to our early adopters and contributors.

While many commercial services exist in this space, there’s currently no large public service to provide this crucial part of any mobile ecosystem. Mobile phones with a weak GPS signal and laptops without GPS hardware can use this service to quickly identify their approximate location. Even though the underlying data is based on publicly accessible signals, geolocation data is by its very nature personal and privacy sensitive. Mozilla is committed to improving the privacy aspects for all participants of this service offering.

If you want to help us build our service, you can install our dedicated Android app, MozStumbler, and enjoy competing against others on our leaderboard, or choose to contribute anonymously. The service is evolving rapidly, so expect to see a more full-featured experience soon. For an overview of the current experience, you can head over to the blog of Soledad Penadés, who wrote a far better introduction than we did.

We welcome any ideas or concerns about this project and would love to hear any feedback or experience you might have. Please contact us on our dedicated mailing list, or come talk to us in our IRC room #geo on Mozilla’s IRC server.

For more information please follow the links on our project page.”
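Suppose the service already knows roughly where certain WiFi access points are, learned from stumbler contributions. A device that reports which of those access points it can hear can then be placed near them. The Python below is a minimal sketch of that idea using a signal-weighted centroid. The `KNOWN_APS` table, the BSSIDs and coordinates, and the 1/|RSSI| weighting are all invented for illustration. None of them is the Mozilla Location Service's actual algorithm or API.

```python
from typing import Dict, List, Tuple

# Hypothetical table of access-point positions keyed by BSSID (MAC address).
# A real service would build this from crowdsourced stumbler observations.
KNOWN_APS: Dict[str, Tuple[float, float]] = {
    "aa:bb:cc:00:00:01": (51.5007, -0.1246),
    "aa:bb:cc:00:00:02": (51.5010, -0.1240),
    "aa:bb:cc:00:00:03": (51.5004, -0.1252),
}


def estimate_position(scan: List[Tuple[str, int]]) -> Tuple[float, float]:
    """Estimate (lat, lon) as a signal-weighted centroid of recognised access points.

    `scan` holds (bssid, rssi_dbm) pairs from a WiFi scan. A stronger (less negative)
    RSSI is assumed to mean the device is closer, so that AP gets a larger weight.
    Averaging raw lat/lon is only reasonable over the short range of WiFi signals.
    """
    total_w = lat_acc = lon_acc = 0.0
    for bssid, rssi in scan:
        pos = KNOWN_APS.get(bssid)
        if pos is None:
            continue  # AP never observed by contributors: no position to use
        w = 1.0 / max(1, -rssi)  # e.g. -40 dBm -> 1/40, -80 dBm -> 1/80
        lat_acc += w * pos[0]
        lon_acc += w * pos[1]
        total_w += w
    if total_w == 0.0:
        raise ValueError("no known access points in scan")
    return lat_acc / total_w, lon_acc / total_w


# Usage: the AP heard at -40 dBm pulls the estimate towards its own position.
print(estimate_position([("aa:bb:cc:00:00:01", -40), ("aa:bb:cc:00:00:02", -80)]))
```

Cell towers could be folded in the same way with a much coarser weight, since a tower's coverage area is far larger than a WiFi access point's.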

The GovLab Academy: A Community and Platform for Learning and Teaching Governance Innovations

Press Release: “Today the Governance Lab (The GovLab) launches The GovLab Academy at the Open Government Partnership Annual Meeting in London.

Available at www.thegovlabacademy.org, the Academy is a free online community for those wanting to teach and learn how to solve public problems and improve lives using innovations in governance. A partnership between The GovLab at New York University and MIT Media Lab’s Online Learning Initiative, the site launching today offers curated videos, podcasts, readings and activities designed to enable the purpose-driven learner to deepen his or her practical knowledge at his or her own pace.

The GovLab Academy is funded by a grant from the John S. and James L. Knight Foundation. “The GovLab Academy addresses a growing need among policy makers at all levels – city, federal and global – to leverage advances in technology to govern differently,” says Carol Coletta, Vice President of Community and National Initiatives at the Knight Foundation. “By connecting the latest technological innovations to a community of willing mentors, the Academy has the potential to catalyze more experimentation in a sector that badly needs it.”

Initial topics center on using data to improve policymaking and cover the role of big data, urban analytics, smart disclosure and open data in governance. A second track focuses on online engagement and includes practical strategies for using crowdsourcing to solicit ideas, organize distributed work and gather data. The site features both curated content drawn from a variety of sources and original interviews with innovators from government, civil society, the tech industry, the arts and academia. They talk about their work implementing innovations around the world to improve real people’s lives, including what worked and what didn’t.

Beth Noveck, Founder and Director of The GovLab, describes its mission: “The Academy is an experiment in peer production where every teacher is a learner and every learner a teacher. Consistent with The GovLab’s commitment to measuring what works, we want to measure our success by the people contributing as well as consuming content. We invite everyone with ideas, stories, insights and practical wisdom to contribute to what we hope will be a thriving and diverse community for social change”.”

Crowdsourcing the sounds of cities’ quiet spots

Springwise: “Finding a place in the city to collect your thoughts and enjoy some quietude is a rare thing. While startups such as Breather are set to open up private spaces for work and relaxation in several US cities, a new project called Stereopublic is hoping to map the ones already there, recruiting citizens to collect the sounds of those spaces.

Participants can download the free iOS app created by design studio Freerange Future, which enables them to become an ‘earwitness’. When they discover a tranquil spot in their city, they can use their GPS coordinates to record its exact location on the Stereopublic map, as well as record a 30-second sound clip and take a photo to give others a better idea of what it’s like. The team then works with sound experts to create quiet tours of each participating city. The project currently covers Adelaide, London, LA, New York City, Singapore and 26 other global cities.”

Implementing Open Innovation in the Public Sector: The Case of Challenge.gov

Article by Ines Mergel and Kevin C. Desouza in Public Administration Review: “As part of the Open Government Initiative, the Barack Obama administration has called for new forms of collaboration with stakeholders to increase the innovativeness of public service delivery. Federal managers are employing a new policy instrument called Challenge.gov to implement open innovation concepts invented in the private sector to crowdsource solutions from previously untapped problem solvers and to leverage collective intelligence to tackle complex social and technical public management problems. The authors highlight the work conducted by the Office of Citizen Services and Innovative Technologies at the General Services Administration, the administrator of the Challenge.gov platform. Specifically, this Administrative Profile features the work of Tammi Marcoullier, program manager for Challenge.gov, and Karen Trebon, deputy program manager, and their role as change agents who mediate collaborative practices between policy makers and public agencies as they navigate the political and legal environments of their local agencies. The profile provides insights into the implementation process of crowdsourcing solutions for public management problems, as well as lessons learned for designing open innovation processes in the public sector”.

Text messages are saving Swedes from cardiac arrest

Philip A. Stephenson in Quartz: “Sweden has found a faster way to treat people experiencing cardiac emergencies through a text message and a few thousand volunteers.

A program called SMSlivräddare (SMSLifesaver) recruits people who’ve been trained in cardiopulmonary resuscitation (CPR). When a Stockholm resident dials 112 for emergency services, a text message is sent to all volunteers within 500 meters of the person in need. A volunteer can then arrive at the location within the crucial first minutes to perform lifesaving CPR. The odds of surviving cardiac arrest drop 10% for every minute it takes first responders to arrive…

With ambulance resources stretched thin, the average ambulance response time is some eight minutes. This allows SMS-livräddare volunteers to reach victims before ambulances in 54% of cases.

Through a combination of techniques, including SMS-livräddare, Stockholm County has seen survival rates after cardiac arrest rise from 3% to nearly 11% over the last decade. Local officials have also enlisted fire and police departments to respond to cardiac emergencies, but the Lifesavers routinely arrive before them as well.

Currently 9,600 Stockholm residents are registered SMS-livräddare volunteers, and there are plans to keep increasing enrollment. An estimated 200,000 Swedes have completed the necessary CPR training and could potentially join the program….

Medical officials in other countries, including Scotland, are now considering similar community-based programs for cardiac arrest.”
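The dispatch rule described above sends a text to every volunteer within 500 meters of the person in need, which amounts to a distance filter. The following is a minimal Python sketch of that filter. It assumes volunteer positions are known as latitude/longitude and uses the haversine great-circle distance. The `Volunteer` record and the sample coordinates are hypothetical, and this is not SMSlivräddare's actual implementation.

```python
import math
from dataclasses import dataclass
from typing import List

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class Volunteer:
    name: str    # hypothetical identifier for the volunteer's phone
    lat: float
    lon: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def volunteers_to_alert(volunteers: List[Volunteer], lat: float, lon: float,
                        radius_m: float = 500.0) -> List[Volunteer]:
    """Return every volunteer within radius_m metres of the emergency location."""
    return [v for v in volunteers if haversine_m(v.lat, v.lon, lat, lon) <= radius_m]


# Usage: a caller in central Stockholm; A is about 220 m away, B about 1.1 km away.
volunteers = [Volunteer("A", 59.3313, 18.0686), Volunteer("B", 59.3393, 18.0686)]
print([v.name for v in volunteers_to_alert(volunteers, 59.3293, 18.0686)])
```

A production system with thousands of volunteers would likely use a spatial index rather than checking every volunteer, but the filtering rule stays the same.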

Why Crowdsourcing is the Next Cloud Computing

Alpheus Bingham, co-founder and a member of the board of directors at InnoCentive, in Wired: “But over the course of a decade, what we now call cloud-based or software-as-a-service (SaaS) applications have taken the world by storm and become mainstream. Today, cloud computing is an umbrella term that applies to a wide variety of successful technologies (and business models), from business apps like Salesforce.com, to infrastructure like Amazon Elastic Compute Cloud (Amazon EC2), to consumer apps like Netflix. It took years for all these things to become mainstream, and if the last decade saw the emergence (and eventual dominance) of the cloud over previous technologies and models, this decade will see the same thing with crowdsourcing.

Both an art and a science, crowdsourcing taps into the global experience and wisdom of individuals, teams, communities, and networks to accomplish tasks and work. It doesn’t matter who you are, where you live, or what you do or believe — in fact, the more diversity of thought and perspective, the better. Diversity is king and it’s common for people on the periphery of — or even completely outside of — a discipline or science to end up solving important problems.

The specific nature of the work imposes few constraints. Whether it is a small business needing a new logo, a large consumer goods company looking to ideate marketing programs, or a nonprofit research organization looking to find a biomarker for ALS, the value is clear.

To get to the heart of the matter on why crowdsourcing is this decade’s cloud computing, several immediate reasons come to mind:

Crowdsourcing Is Disruptive

Much as cloud computing has created a new guard that in many ways threatens the old guard, so too has crowdsourcing. …

Crowdsourcing Provides On-Demand Talent Capacity

Labor is expensive and good talent is scarce. Think about the cost of adding ten additional researchers to a 100-person R&D team. You’ve increased your research capacity by 10% (more or less), but at a significant cost – and, a significant FIXED cost at that. …

Crowdsourcing Enables Pay-for-Performance

You pay as you go with cloud computing; gone are the days of massive upfront capital expenditures followed by years of ongoing maintenance and upgrade costs. Crowdsourcing does even better: you pay for solutions, not for effort (which, predictably, sometimes results in failure). In fact, with crowdsourcing, the marketplace bears the cost of failure, not you….

Crowdsourcing “Consumerizes” Innovation

Crowdsourcing can provide a platform for bi-directional communication and collaboration with diverse individuals and groups, whether internal or external to your organization — employees, customers, partners and suppliers. Much as cloud computing has consumerized technology, crowdsourcing has the same potential to consumerize innovation, and more broadly, how we collaborate to bring new ideas, products and services to market.

Crowdsourcing Provides Expert Services and Skills That You Don’t Possess

One of the early value propositions of cloud-based business apps was that you didn’t need to engage IT to deploy them or Finance to help procure them, thereby allowing general managers and line-of-business heads to do their jobs more fluently and more profitably…”

Facilitating scientific discovery through crowdsourcing and distributed participation

Antony Williams in EMBnet.journal: “Science has evolved from the isolated individual tinkering in the lab, through the era of the “gentleman scientist” with his or her assistant(s), to group-based then expansive collaboration and now to an opportunity to collaborate with the world. With the advent of the internet, the opportunity for crowdsourced contribution and large-scale collaboration has exploded and, as a result, scientific discovery has been further enabled. The contributions of enormous open data sets, liberal licensing policies and innovative technologies for mining and linking these data have given rise to platforms that are beginning to deliver on the promise of semantic technologies and nanopublications. These platforms are facilitated by the unprecedented computational resources available today, especially the increasing capabilities of handheld devices. The speaker will provide an overview of his experiences in developing a crowdsourced platform for chemists allowing for data deposition, annotation and validation. The challenges of mapping chemical and pharmacological data, especially with regard to data quality, will be discussed. The promise of distributed participation in data analysis is already in place.”

Building a Smarter City

PSFK: “As cities around the world grow in size, one of the major challenges will be how to make city services and infrastructure more adaptive and responsive in order to keep existing systems running efficiently, while expanding to accommodate greater need. In our Future Of Cities report, PSFK Labs investigated the key trends and pressing issues that will play a role in shaping the evolution of urban environments over the next decade.

A major theme identified in the report is Sensible Cities, which is bringing intelligence to the city and its citizens through the free flow of information and data, helping improve both immediate and long term decision making. This theme consists of six key trends: Citizen Sensor Networks, Hyperlocal Reporting, Just-In-Time Alerts, Proximity Services, Data Transparency, and Intelligent Transport.

The Citizen Sensor Networks trend described in the Future Of Cities report highlights how sensor-laden personal electronics are enabling everyday people to passively collect environmental data and other information about their communities. When fed back into centralized, public databases for analysis, this accessible pool of knowledge enables any interested party to make more informed choices about their surroundings. These feedback systems require little infrastructure and transform people into sensor nodes with little effort on their part. An example of this type of network in action is Street Bump, a crowdsourcing project that helps residents improve their neighborhood streets by collecting data on real-time road conditions while they drive. Using the phone’s motion-detecting accelerometer, the Street Bump app senses when a bump is hit, while the phone’s GPS records and transmits the location.

The next trend, Hyperlocal Reporting, describes how crowdsourced information platforms are changing the top-down nature of how news is gathered and disseminated by placing reporting tools in the hands of citizens, allowing any individual to instantly broadcast what is important to them. Often built on mobile phone technology, these information monitoring systems not only provide real-time, location-specific data but also boost civic engagement by establishing direct channels of communication between individuals and their community. A good example of this is Retio, a mobile application that allows Mexican citizens to report on organized crime and corruption using social media. Each issue is plotted on a map, allowing users and authorities to get an overall idea of what has been reported or narrow results down to specific incidents.

…


Data Transparency is a trend that examines how city administrators, institutions, and companies are publicly sharing data generated within their systems to add new levels of openness and accountability. Availability of this information not only strengthens civic engagement, but also establishes a collaborative agenda at all levels of government that empowers citizens through greater access and agency. For example, OpenSpending is a mobile and web-based application that allows citizens in participating cities to examine where their taxes are being spent through interactive visualizations. Citizens can review their personal share of public works, examine local impacts of public spending, rate and vote on proposed plans for spending and monitor the progress of projects that are or are not underway…”
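Street Bump's approach, flagging a jolt from the accelerometer and tagging it with the GPS position, can be illustrated with a simple threshold detector. This Python sketch assumes samples that already pair a gravity-removed vertical acceleration reading with a GPS fix. The `Sample` type, the 4 m/s² threshold and the 1-second debounce window are hypothetical values, not Street Bump's actual detection logic.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sample:
    t: float        # seconds since the start of the trip
    accel_z: float  # vertical acceleration in m/s^2, gravity removed (assumed)
    lat: float      # GPS fix recorded with this sample
    lon: float


def detect_bumps(samples: List[Sample], threshold: float = 4.0,
                 min_gap_s: float = 1.0) -> List[Tuple[float, float, float]]:
    """Return (t, lat, lon) for each jolt whose |vertical acceleration| >= threshold.

    Spikes less than min_gap_s seconds after the last reported bump are ignored,
    so one pothole is not reported several times.
    """
    bumps: List[Tuple[float, float, float]] = []
    last_t = None
    for s in samples:
        if abs(s.accel_z) >= threshold and (last_t is None or s.t - last_t >= min_gap_s):
            bumps.append((s.t, s.lat, s.lon))
            last_t = s.t
    return bumps


trip = [
    Sample(0.0, 0.3, 42.3601, -71.0589),
    Sample(0.1, 5.2, 42.3602, -71.0589),   # pothole
    Sample(0.2, 4.8, 42.3602, -71.0590),   # same pothole, suppressed by the debounce
    Sample(2.0, 0.1, 42.3605, -71.0592),
    Sample(3.0, -6.0, 42.3608, -71.0595),  # second bump
]
print(detect_bumps(trip))
```

Each reported tuple is what would be uploaded to a central database. Aggregating reports from many drivers at the same spot helps separate real road defects from one-off jolts such as a driver braking hard.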

Crowdsourcing Mobile App Takes the Globe’s Economic Pulse

Tom Simonite in MIT Technology Review: “In early September, news outlets reported that the price of onions in India had suddenly spiked nearly 300 percent over prices a year before. Analysts warned that the jump in price for this food staple could signal an impending economic crisis, and the Reserve Bank of India quickly raised interest rates.

A startup company called Premise might’ve helped make the response to India’s onion crisis timelier. As part of a novel approach to tracking the global economy from the bottom up, the company has a daily feed of onion prices from stores around India. More than 700 people in cities around the globe use a mobile app to log the prices of key products in local stores each day.

Premise’s cofounder David Soloff says it’s a valuable way to take the pulse of economies around the world, especially since stores frequently update their prices in response to economic pressures such as wholesale costs and consumer confidence. “All this information is hiding in plain sight on store shelves,” he says, “but there’s no way of capturing and aggregating it in any meaningful way.”

That information could provide a quick way to track and even predict inflation measures such as the U.S. Consumer Price Index. Inflation figures influence the financial industry and are used to set governments’ monetary and fiscal policy, but they are typically updated only once a month. Soloff says Premise’s analyses have shown that for some economies, the data the company collects can reliably predict monthly inflation figures four to six weeks in advance. “You don’t look at the weather forecast once a month,” he says….

Premise’s data may have other uses outside the financial industry. As part of a United Nations program called Global Pulse, Cavallo and PriceStats, which was founded after financial professionals began relying on data from an ongoing academic price-indexing effort called the Billion Prices Project, devised bread price indexes for several Latin American countries. Such indexes typically predict street prices and help governments and NGOs spot emerging food crises. Premise’s data could be used in the same way. The information could also be used to monitor areas of the world, such as Africa, where tracking online prices is unreliable, he says.”
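The following minimal Python sketch shows how crowdsourced shelf prices could be turned into an inflation signal. It uses one standard elementary price index, the Jevons index, which is the geometric mean of price relatives. Treating this as Premise's or PriceStats' methodology would be an assumption. The item names and prices are hypothetical, with the onion price quadrupled to mirror a rise of roughly 300 percent.

```python
import math
from typing import Dict


def jevons_index(base: Dict[str, float], current: Dict[str, float]) -> float:
    """Unweighted geometric mean of price relatives (current / base), scaled so base = 100.

    Only items observed in both periods are used.
    """
    common = base.keys() & current.keys()
    if not common:
        raise ValueError("no items observed in both periods")
    log_sum = sum(math.log(current[k] / base[k]) for k in common)
    return 100.0 * math.exp(log_sum / len(common))


# Hypothetical average prices logged by contributors a year apart (same currency unit).
year_ago = {"onions": 20.0, "rice": 30.0, "bread": 25.0}
today = {"onions": 80.0, "rice": 30.0, "bread": 25.0}
print(f"{jevons_index(year_ago, today):.2f}")  # prints 158.74
```

With equal weights, a single staple quadrupling in price lifts this toy three-item index by about 59 percent. Official measures such as the U.S. Consumer Price Index also weight items by household expenditure.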