Report by Danielle Goldfarb: “From collecting millions of online prices to measure inflation, to assessing the economic impact of the COVID-19 pandemic on low-income workers, digital data sets can be used to benefit the public interest. Using these and other examples, this special report explores how digital data sets and advances in artificial intelligence (AI) can provide timely, transparent and detailed insights into global challenges. These experiments illustrate how governments and civil society analysts can reuse digital data to spot emerging problems, analyze specific group impacts, complement traditional metrics or verify data that may be manipulated. AI and data governance should extend beyond addressing harms. International institutions and governments need to actively steward digital data and AI tools to support a step change in our understanding of society’s biggest challenges…(More)”

Boosting: Empowering Citizens with Behavioral Science

Paper by Stefan M. Herzog and Ralph Hertwig: “Behavioral public policy came to the fore with the introduction of nudging, which aims to steer behavior while maintaining freedom of choice. Responding to critiques of nudging (e.g., that it does not promote agency and relies on benevolent choice architects), other behavioral policy approaches focus on empowering citizens. Here we review boosting, a behavioral policy approach that aims to foster people’s agency, self-control, and ability to make informed decisions. It is grounded in evidence from behavioral science showing that human decision making is not as notoriously flawed as the nudging approach assumes. We argue that addressing the challenges of our time—such as climate change, pandemics, and the threats to liberal democracies and human autonomy posed by digital technologies and choice architectures—calls for fostering capable and engaged citizens as a first line of response to complement slower, systemic approaches…(More)”.

The Simple Macroeconomics of AI

Paper by Daron Acemoglu: “This paper evaluates claims about large macroeconomic implications of new advances in AI. It starts from a task-based model of AI’s effects, working through automation and task complementarities. So long as AI’s microeconomic effects are driven by cost savings/productivity improvements at the task level, its macroeconomic consequences will be given by a version of Hulten’s theorem: GDP and aggregate productivity gains can be estimated by what fraction of tasks are impacted and average task-level cost savings. Using existing estimates on exposure to AI and productivity improvements at the task level, these macroeconomic effects appear nontrivial but modest—no more than a 0.66% increase in total factor productivity (TFP) over 10 years. The paper then argues that even these estimates could be exaggerated, because early evidence is from easy-to-learn tasks, whereas some of the future effects will come from hard-to-learn tasks, where there are many context-dependent factors affecting decision-making and no objective outcome measures from which to learn successful performance. Consequently, predicted TFP gains over the next 10 years are even more modest and are predicted to be less than 0.53%. I also explore AI’s wage and inequality effects. I show theoretically that even when AI improves the productivity of low-skill workers in certain tasks (without creating new tasks for them), this may increase rather than reduce inequality. Empirically, I find that AI advances are unlikely to increase inequality as much as previous automation technologies because their impact is more equally distributed across demographic groups, but there is also no evidence that AI will reduce labor income inequality. Instead, AI is predicted to widen the gap between capital and labor income. 
Finally, some of the new tasks created by AI may have negative social value (such as design of algorithms for online manipulation), and I discuss how to incorporate the macroeconomic effects of new tasks that may have negative social value…(More)”.
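A minimal sketch of the Hulten-style arithmetic the abstract describes, written in Python for illustration only (it is not code from the paper). To first order, the aggregate gain is the fraction of tasks affected, which in Hulten-style accounting is usually weighted by those tasks' share of GDP, multiplied by the average cost saving on those tasks. The function name `hulten_tfp_gain` and the inputs used below are hypothetical and are not the paper's estimates.

```python
def hulten_tfp_gain(share_of_tasks_impacted: float, avg_task_cost_saving: float) -> float:
    """First-order (Hulten-style) aggregate TFP gain.

    share_of_tasks_impacted: fraction of tasks affected by AI, assumed here
        to be weighted by the tasks' share of GDP.
    avg_task_cost_saving: average proportional cost saving on those tasks.
    Both inputs are fractions in [0, 1]; the return value is also a fraction.
    """
    if not 0.0 <= share_of_tasks_impacted <= 1.0:
        raise ValueError("share_of_tasks_impacted must be in [0, 1]")
    if not 0.0 <= avg_task_cost_saving <= 1.0:
        raise ValueError("avg_task_cost_saving must be in [0, 1]")
    # Aggregate gain = (share of affected tasks) x (average saving on them).
    return share_of_tasks_impacted * avg_task_cost_saving


if __name__ == "__main__":
    # Hypothetical inputs: 5% of tasks affected, 10% average cost saving.
    gain = hulten_tfp_gain(0.05, 0.10)
    print(f"Approximate TFP gain: {gain:.2%}")  # prints: Approximate TFP gain: 0.50%
```

Because the result is a simple product, a modest share of affected tasks combined with modest per-task savings yields a small aggregate number, which is the intuition behind the paper's sub-1% TFP figures.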

A Fourth Wave of Open Data? Exploring the Spectrum of Scenarios for Open Data and Generative AI

Report by Hannah Chafetz, Sampriti Saxena, and Stefaan G. Verhulst: “Since late 2022, generative AI services and large language models (LLMs) have transformed how many individuals access and process information. However, how generative AI and LLMs can be augmented with open data from official sources and how open data can be made more accessible with generative AI – potentially enabling a Fourth Wave of Open Data – remains an underexplored area.

For these reasons, The Open Data Policy Lab (a collaboration between The GovLab and Microsoft) decided to explore the possible intersections between open data from official sources and generative AI. Throughout the last year, the team has conducted a range of research initiatives about the potential of open data and generative AI, including a panel discussion, interviews, and Open Data Action Labs – a series of design sprints with a diverse group of industry experts.

These initiatives were used to inform our latest report, “A Fourth Wave of Open Data? Exploring the Spectrum of Scenarios for Open Data and Generative AI,” (May 2024) which provides a new framework and recommendations to support open data providers and other interested parties in making open data “ready” for generative AI…

The report outlines five scenarios in which open data from official sources (e.g. open government and open research data) and generative AI can intersect. Each of these scenarios includes case studies from the field and a specific set of requirements that open data providers can focus on to become ready for a scenario. These include…(More)” (arXiv).

The Wisdom of Partisan Crowds: Comparing Collective Intelligence in Humans and LLM-based Agents

Paper by Yun-Shiuan Chuang et al.: “Human groups are able to converge to more accurate beliefs through deliberation, even in the presence of polarization and partisan bias – a phenomenon known as the “wisdom of partisan crowds.” Large Language Model (LLM) agents are increasingly being used to simulate human collective behavior, yet few benchmarks exist for evaluating their dynamics against the behavior of human groups. In this paper, we examine the extent to which the wisdom of partisan crowds emerges in groups of LLM-based agents that are prompted to role-play as partisan personas (e.g., Democrat or Republican). We find that they not only display human-like partisan biases, but also converge to more accurate beliefs through deliberation, as humans do. We then identify several factors that interfere with convergence, including the use of chain-of-thought prompting and lack of details in personas. Conversely, fine-tuning on human data appears to enhance convergence. These findings show the potential and limitations of LLM-based agents as a model of human collective intelligence…(More)”

Copyright Policy Options for Generative Artificial Intelligence

Paper by Joshua S. Gans: “New generative artificial intelligence (AI) models, including large language models and image generators, have created new challenges for copyright policy as such models may be trained on data that includes copy-protected content. This paper examines this issue from an economics perspective and analyses how different copyright regimes for generative AI will impact the quality of content generated as well as the quality of AI training. A key factor is whether generative AI models are small (with content providers capable of negotiations with AI providers) or large (where negotiations are prohibitive). For small AI models, it is found that giving original content providers copyright protection leads to superior social welfare outcomes compared to having no copyright protection. For large AI models, this comparison is ambiguous and depends on the level of potential harm to original content providers and the importance of content for AI training quality. However, it is demonstrated that an ex-post ‘fair use’ type mechanism can lead to higher expected social welfare than traditional copyright regimes…(More)”.

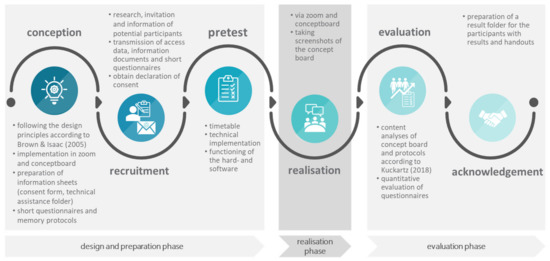

Hosting an Online World Café to Develop an Understanding of Digital Health Promoting Settings from a Citizen’s Perspective—Methodological Potentials and Challenges

Paper by Joanna Albrecht: “Brown and Isaacs’ World Café is a participatory research method to make connections to the ideas of others. During the SARS-CoV-2 pandemic and the corresponding contact restrictions, only digital hostings of World Cafés were possible. This article aims to present and reflect on the potentials and challenges of hosting online World Cafés and to derive recommendations for other researchers. Via Zoom and Conceptboard, three online World Cafés were conducted in August 2021. In the World Cafés, the main focus was on the increasing digitization in settings in the context of health promotion and prevention from the perspective of setting members of educational institutions, leisure clubs, and communities. Between 9 and 13 participants took part in the three World Cafés. Hosting comprises the phases of design and preparation, realisation, and evaluation. Generally, hosting an online World Café is a suitable method for participatory engagement, but particular challenges have to be overcome. Overall, café hosts must create an equal participation environment by ensuring the availability of digital devices and stable internet access. The event schedule must react flexibly to technical disruptions and varying participation numbers. Further, compensatory measures such as support in the form of technical training must be implemented before the event. Finally, due to the higher complexity of digitalisation, roles of participants and staff need to be distributed and coordinated…(More)”.

Behavioural science is unlikely to change the world without a heterogeneity revolution

Article by Christopher J. Bryan, Elizabeth Tipton & David S. Yeager: “In the past decade, behavioural science has gained influence in policymaking but suffered a crisis of confidence in the replicability of its findings. Here, we describe a nascent heterogeneity revolution that we believe these twin historical trends have triggered. This revolution will be defined by the recognition that most treatment effects are heterogeneous, so the variation in effect estimates across studies that defines the replication crisis is to be expected as long as heterogeneous effects are studied without a systematic approach to sampling and moderation. When studied systematically, heterogeneity can be leveraged to build more complete theories of causal mechanism that could inform nuanced and dependable guidance to policymakers. We recommend investment in shared research infrastructure to make it feasible to study behavioural interventions in heterogeneous and generalizable samples, and suggest low-cost steps researchers can take immediately to avoid being misled by heterogeneity and begin to learn from it instead….(More)”.
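To make the heterogeneity argument concrete, here is a deliberately simplified Python sketch, assuming a treatment whose effect differs between two hypothetical subgroups. The subgroup effects, the study names, and the sample compositions are all invented for illustration and do not come from the article. Two studies with identical subgroup-level effects but different sample mixes report quite different average effects, which is the kind of cross-study variation the authors argue should be expected when sampling and moderation are not handled systematically.

```python
from dataclasses import dataclass

# Hypothetical subgroup-specific treatment effects (outcome units).
# These numbers are invented for illustration, not taken from the article.
EFFECT_RESPONSIVE = 0.40
EFFECT_UNRESPONSIVE = 0.05


@dataclass(frozen=True)
class Study:
    name: str
    share_responsive: float  # fraction of the sample in the responsive subgroup


def average_effect(study: Study) -> float:
    """Sample-average treatment effect implied by a study's subgroup mix.

    Each subgroup's effect is identical across studies; only the
    composition of the sample changes.
    """
    p = study.share_responsive
    if not 0.0 <= p <= 1.0:
        raise ValueError("share_responsive must be in [0, 1]")
    return p * EFFECT_RESPONSIVE + (1.0 - p) * EFFECT_UNRESPONSIVE


if __name__ == "__main__":
    studies = [Study("original", 0.8), Study("replication", 0.2)]
    for s in studies:
        # prints 0.330 for "original" and 0.120 for "replication"
        print(f"{s.name}: average effect = {average_effect(s):.3f}")
```

Reporting effects within each subgroup (0.40 and 0.05 here) rather than only the sample average is one way a study could make such variation interpretable, in the spirit of the moderation analysis the authors recommend.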

The Business of City Hall

Paper by Kenneth R. Ahern: “Compared to the federal government, the average citizen in the U.S. has far greater interaction with city governments, including policing, health services, zoning laws, utilities, schooling, and transportation. At the regional level, it is city governments that provide the infrastructure and services that facilitate agglomeration economies in urban areas. However, there is relatively little empirical evidence on the operations of city governments as economic entities. To overcome deficiencies in traditional datasets, this paper amasses a novel, hand-collected dataset on city government finances to describe the functions, expenses, and revenues of the 39 largest cities in the United States from 2003 to 2018. First, city governments are large, with average revenues equivalent to the 78th percentile of U.S. publicly traded firms. Second, cities collect an increasingly large fraction of revenues through direct user fees, rather than taxes. By 2018, total charges for services equal tax revenue in the median city. Third, controlling for city fixed effects, population, and personal income, large city governments shrank by 15% between 2009 and 2018. Finally, the growth rate of city expenses is more sensitive to population growth, while the growth rate of city revenues is more sensitive to income. These sensitivities lead smaller, poorer cities’ expenses to grow faster than their revenues….(More)”.

Beyond the promise: implementing ethical AI

Ray Eitel-Porter at AI and Ethics: “Artificial Intelligence (AI) applications can and do have unintended negative consequences for businesses if not implemented with care. Specifically, faulty or biased AI applications risk compliance and governance breaches and damage to the corporate brand. These issues commonly arise from a number of pitfalls associated with AI development, which include rushed development, a lack of technical understanding, and improper quality assurance, among other factors. To mitigate these risks, a growing number of organisations are working on ethical AI principles and frameworks. However, ethical AI principles alone are not sufficient for ensuring responsible AI use in enterprises. Businesses also require strong, mandated governance controls including tools for managing processes and creating associated audit trails to enforce their principles. Businesses that implement strong governance frameworks, overseen by an ethics board and strengthened with appropriate training, will reduce the risks associated with AI. When applied to AI modelling, the governance will also make it easier for businesses to bring their AI deployments to scale….(More)”.